Lessons On How To Get Things Right On The First Try

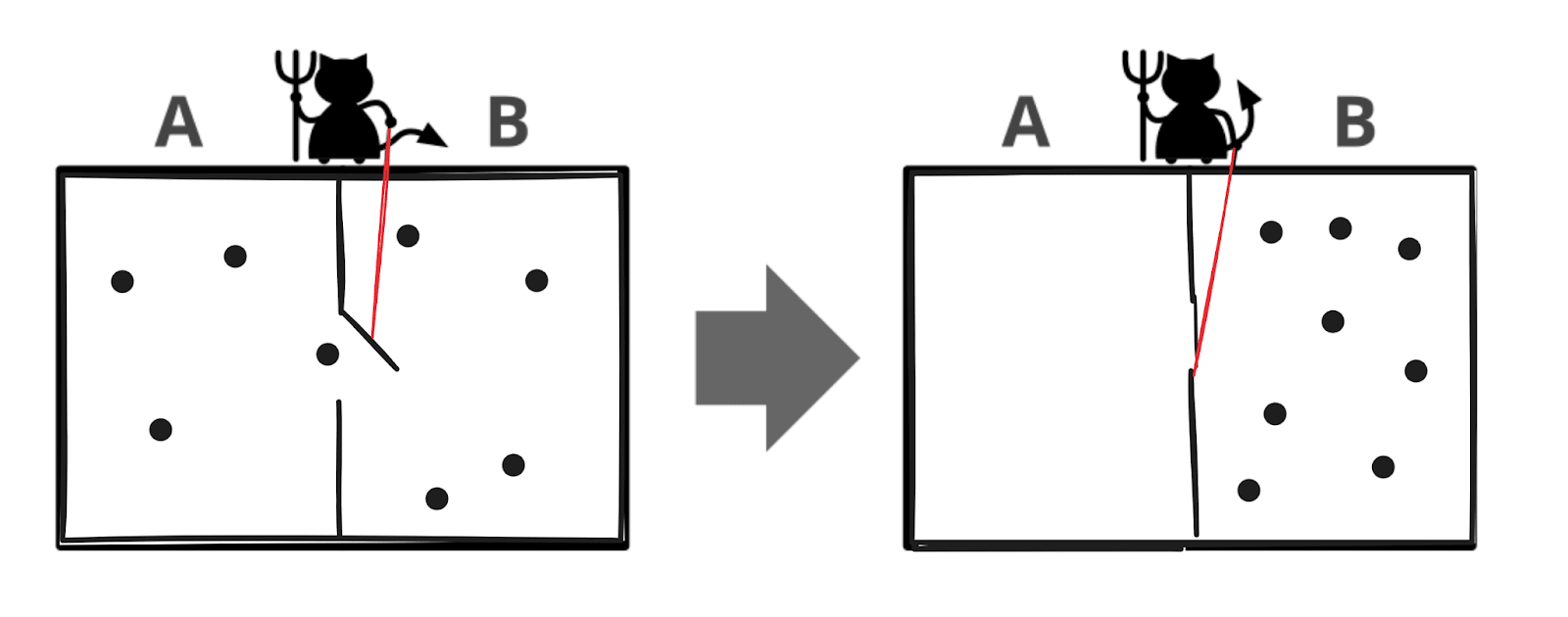

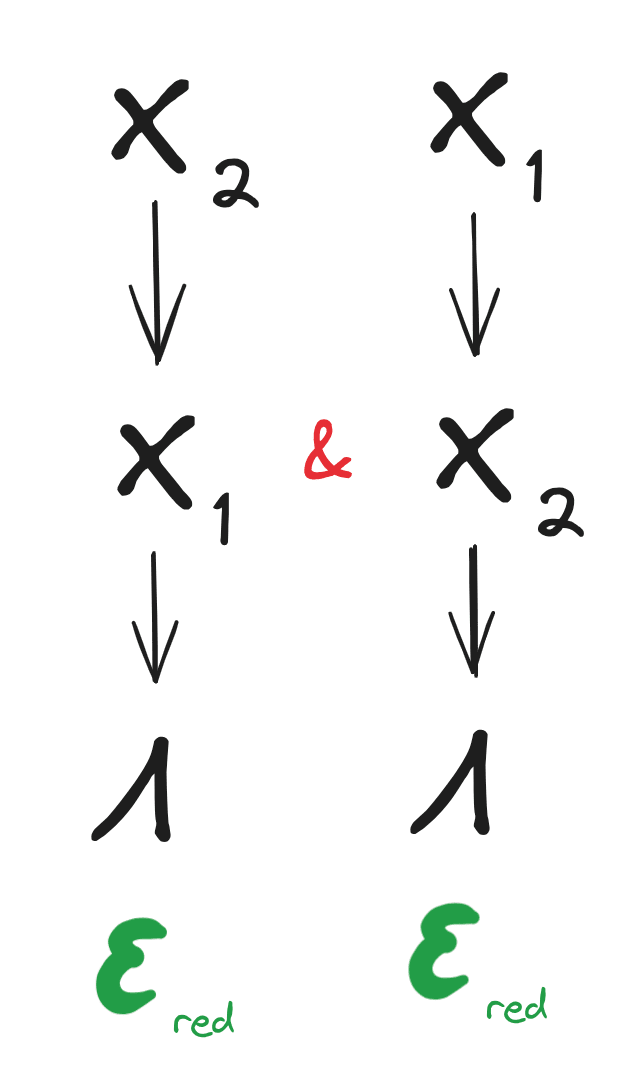

This post is based on several true stories from a workshop that John has run a few times over the past year.

John: Welcome to the Ball -> Cup workshop! Your task for today is simple: I’m going to roll this metal ball: … down this Hot Wheels ramp: … and off the edge. Your job is to tell me how far from the bottom of the ramp to place a cup on the floor, such that the ball lands in the cup. Oh, and you only get one try.

General notes:

* I won’t try to be tricky with this exercise.
* You are welcome to make whatever measurements you want of the ball, ramp, etc.
* You can even do partial runs, e.g. roll the ball down the ramp and stop it at the bottom, or throw the ball through the air.
* But you only get one full end-to-end run (from top of the ramp to the cup/floor), and anything too close to an end-to-end run (let’s say more than ~half the run) is discouraged. After all, in the AI situation for which the exercise is a metaphor, we don’t know exactly when something might foom; we want elbow room.

That’s it! Good luck, and let me know when you’re ready to give it a shot.

[At this point readers may wish to stop and consider the problem themselves.]

Alison: Let’s get that ball in that cup. It looks like this is probably supposed to be a basic physics kind of problem…but there’s got to be some kind of twist, or else why would he be having us do it? Maybe the ball is surprisingly light…or maybe the camera angle is misleading and we are supposed to think of something wacky like that??

The Unnoticed Observer: Muahahaha.

Alison: That seems…hard. I’ll just start with the basic physics thing, and if I run out of time before I can consider the wacky stuff, so be it. So I should probably split this problem into two parts. The part where the ball arcs through the air once off the table is pretty easy…

The Unnoticed Observer: True in this case, but how would you notice if it were false? What evidence have you seen?

Alison: …bu