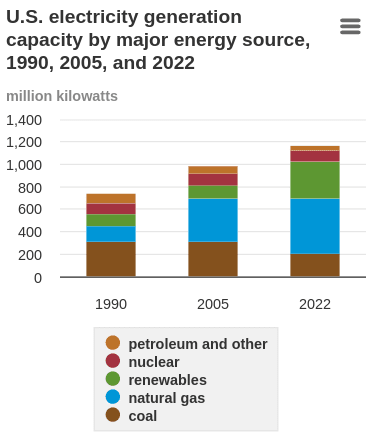

I doubt it (or at least, doubt that power plants will be a bottleneck as soon as this analysis says). Power generation/use varies widely over the course of a day and of a year (seasons), so the 500 GW number is an average, and generating capacity is overbuilt; this graph on the same EIA page shows generation capacity > 1000 GW and non-stagnant (not counting renewables, it declined slightly from 2005 to 2022 but is still > 800 GW):

This seems to indicate that a lot of additional demand[1] could be handled without building new generation, at least (and maybe not only) if it's willing to shut down at infrequent times of peak load. (Yes, operators will want to run as much as possible, but would accept some downtime if necessary to operate at all.)

This EIA discussion of cryptocurrency mining (estimated at 0.6% to 2.3% of US electricity consumption!) is highly relevant, and seems to align with the above. (E.g. it shows increased generation at existing power plants with attached crypto mining operations, mentions curtailment during peak demand, and doesn't mention new plant construction.)
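For scale, here is a rough conversion of the EIA crypto-mining share into gigawatts, set beside the post's own H100 estimate. This is a sketch under stated assumptions. It reuses the post's ~500 GW average US generation and ~2 kW all-in per H100. The 2.0-2.5 million H100 count is just the post's 2023 sales plus its 2024 forecast, not measured deployment.

```python
# Rough comparison: crypto mining load (EIA share estimate) vs. the post's H100 fleet.
# Assumptions (taken from the post, not measured): ~500 GW average US generation,
# ~2 kW all-in per H100, and 2.0-2.5 million H100s installed by end of 2024.
US_AVG_GENERATION_GW = 500.0
KW_PER_H100 = 2.0

def share_to_gw(share: float, total_gw: float = US_AVG_GENERATION_GW) -> float:
    """Convert a fraction of US electricity consumption into average gigawatts."""
    return share * total_gw

def fleet_gw(num_gpus: int, kw_per_gpu: float = KW_PER_H100) -> float:
    """Average draw of a GPU fleet in gigawatts (kW -> GW is a factor of 1e6)."""
    return num_gpus * kw_per_gpu / 1e6

crypto_gw = (share_to_gw(0.006), share_to_gw(0.023))
h100_gw = (fleet_gw(2_000_000), fleet_gw(2_500_000))

print(f"crypto mining: {crypto_gw[0]:.1f}-{crypto_gw[1]:.1f} GW")
print(f"H100 fleet:    {h100_gw[0]:.1f}-{h100_gw[1]:.1f} GW")
```

On these assumptions, the two loads are of the same order of magnitude, which is the comparison the comment is drawing.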

[1] Probably not as much as implied by the capacity numbers, since some of that capacity is peaking plants and/or just old. That means it is not only inefficient but sometimes limited by regulations in how many hours it can operate per year. But still.

[Epistemic status: purely anecdotal]

I know people who work in the design and construction of data centers and have heard that some popular data center cities aren't approving nearly as many data centers due to power grid concerns. Apparently, some of the newer data center projects are being designed to include net new power generation to support the data center.

For less anecdotal information, I found this useful: https://sprottetfs.com/insights/sprott-energy-transition-materials-monthly-ais-critical-impact-on-electricity-and-energy-demand/

This is why people in Silicon Valley are talking about power plants.

Another concern is powering an individual datacenter campus in order to train a GPT-6-level model (going by 30x a generation, that's a $10 billion/3M H100/6 GW training run). I previously thought the following passage from the Gemini 1.0 report clearly indicated that at least Google is ready to spread training compute across large distances:
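The 6 GW figure follows from the per-GPU power assumption used in the post: ~2 kW all-in per H100, covering cooling and non-GPU overhead. A quick check, where the 2 kW constant is that assumption rather than a measured value:

```python
# Back-of-envelope power draw for a single-campus training run.
# Assumption (from the post): ~2 kW all-in per H100, including cooling and overhead.
WATTS_PER_H100 = 2_000

def campus_power_gw(num_gpus: int, watts_per_gpu: float = WATTS_PER_H100) -> float:
    """Continuous draw of a training cluster, in gigawatts."""
    return num_gpus * watts_per_gpu / 1e9

# The comment's GPT-6-scale estimate: ~3 million H100s.
print(f"{campus_power_gw(3_000_000):.1f} GW all-in")
# Same cluster counting only the 700 W per GPU, with no facility overhead.
print(f"{campus_power_gw(3_000_000, 700):.1f} GW for the GPUs alone")
```

Even the GPU-only lower bound is in the multi-gigawatt range, so the single-campus concern holds under either assumption.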

Training Gemini Ultra used a large fleet of TPUv4 accelerators owned by Google across multiple datacenters. [...] we combine SuperPods in multiple datacenters using Google’s intra-cluster and inter-cluster network. Google’s network latencies and bandwidths are sufficient to support the commonly used synchronous training paradigm, exploiting model parallelism within superpods and data-parallelism across superpods.

But in principle, "inter-cluster network" could just refer to connecting different clusters within a single campus, which doesn't help with the constraint on local power. (This SemiAnalysis post offers some common sense on the topic.)

Without that, there are research papers on asynchronous distributed training, but they haven't been scaled far enough to be a known way forward yet. Since this kind of thing isn't certain to work, plans are probably being made for the eventuality that it doesn't, which means directing 6 GW of power to a single datacenter campus.

The pressure to decentralize at this scale will also incentivize a lot more research on how to do search/planning. Otherwise you wind up with a lot of "stranded" GPU/TPU capacity: chips that are fully usable but aren't needed for serving old models and can't participate in training new scaled-up models. But if you switch to a search-and-distill-centric approach, suddenly all of your capacity comes online.

I came to the comments section to say a similar thing. Right now, the easiest way for companies to push the frontier of capabilities is via throwing more hardware and electricity at the problem, as well as doing some efficiency improvements. If the cost or unavailability of electrical power or hardware were to become a bottleneck, then that would simply tilt the equation more in the direction of searching for more compute efficient methods.

I believe there's plenty of room to spend more on research there and get decent returns on investment, so I doubt a compute bottleneck would make much of a difference. I'm pretty sure we're already well into a compute-overhang regime relative to what more efficient model architectures would need. I think the same is true for data, given the potential to spend more research investment on finding more data-efficient algorithms.

What about solar power? If you build a data center in the desert, buy a few square km of adjacent land, and tile it with solar panels, presumably that can be done far more quickly and with far less regulation than building a power plant. At night, you can use off-peak grid electricity at cheaper rates.

Electricity is ~$0.1 per kWh for industry in Texas. At that price, running an H100 for a full year costs ~$1,800 (at the post's 2 kW per H100). I'm not sure what the annual depreciation is for an H100, but 20% seems reasonable. If an H100 costs $40,000, a year of depreciation is $8,000, so you would be losing $800 a year with just 10% idle time.
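The comparison can be written out explicitly. This minimal sketch assumes ~2 kW all-in per H100 (the post's figure, which is what reproduces the ~$1,800 number; 700 W alone would give about $613), $0.10/kWh, and a $40,000 card depreciating 20% per year:

```python
# Electricity cost vs. depreciation lost to idle time, per H100.
# Assumptions: ~2 kW all-in per H100 (the post's figure), $0.10/kWh,
# a $40,000 card depreciating 20% per year, and 10% idle time.
HOURS_PER_YEAR = 24 * 365  # 8,760

def annual_electricity_cost(kw: float, usd_per_kwh: float) -> float:
    """Cost of running a constant load for a full year."""
    return kw * HOURS_PER_YEAR * usd_per_kwh

def idle_depreciation_loss(price_usd: float, annual_depreciation: float,
                           idle_fraction: float) -> float:
    """Depreciation paid for hours in which the chip does no useful work."""
    return price_usd * annual_depreciation * idle_fraction

electricity = annual_electricity_cost(kw=2.0, usd_per_kwh=0.10)
idle_loss = idle_depreciation_loss(40_000, 0.20, 0.10)

print(f"electricity per year: ${electricity:,.0f}")
print(f"depreciation lost at 10% idle: ${idle_loss:,.0f}")
```

Under these assumptions, each 10% of idle time costs a bit under half of a year's electricity bill. That is why intermittent supply is expensive even when the power itself is cheap.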

So... maybe? But my guess is that natural gas power plants are just cheaper and more reliable: a few cloudy weeks out of a year would easily shift the equation in favor of natural gas. No idea how power cycling affects depreciation. The AI industry people aren't talking much about solar or wind, and they would be if they thought it was more cost effective.

I don't think there will actually be an electricity shortage due to AI training; I just think industry is lobbying state and federal lawmakers very, very hard to build these power plants ASAP. I think it's quite likely, though, that various regulations will be cut and those natural gas plants will go up faster than the 2-3 year figure I gave.

The AI industry people aren't talking much about solar or wind, and they would be if they thought it was more cost effective.

I don't see them talking about natural gas either, but rather about nuclear or even fusion. That suggests that whatever's driving their choice of what to talk about, it isn't short-term cost-effectiveness.

I was going to make a similar comment, but related to OPEX vs CAPEX for the H100:

If the H100 consumes 700 W (we assume 2 kW all-in) and costs $30,000 in CAPEX, then at $1,800 per year, running costs are about 6% of CAPEX annually.
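With the comment's numbers, the yearly electricity bill comes to a little under 6% of the purchase price, small in either case. A sketch, assuming ~2 kW all-in, $0.10/kWh, and $30,000 per H100:

```python
# Annual running cost (OPEX) as a share of the up-front hardware cost (CAPEX).
# Assumptions from the comment: ~2 kW all-in, $0.10/kWh, $30,000 per H100.
HOURS_PER_YEAR = 24 * 365

def opex_share_of_capex(kw: float, usd_per_kwh: float, capex_usd: float) -> float:
    """Yearly electricity cost divided by the card's purchase price."""
    annual_opex = kw * HOURS_PER_YEAR * usd_per_kwh
    return annual_opex / capex_usd

share = opex_share_of_capex(kw=2.0, usd_per_kwh=0.10, capex_usd=30_000)
print(f"{share:.1%} of CAPEX per year")
```

Because the ratio scales linearly with the electricity price, even paying several times the industrial rate would leave power a minor cost next to the hardware.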

For electricity, the data center just wants to buy from the grid; it's only if they are so big that they're forced to get new connections that they can't do that. Given that electricity is worth so much more than $0.1/kWh to them, they would just like to compete on the national market for the spot price.

To me that gives a big incentive to have many small datacenters (maybe not possible for training, but perhaps for inference?)

If they can't do that, then we need to assume they are so large that there is no or limited grid connection available. Then they would build the fastest-to-deploy electricity first: solar + battery, then natural gas to fill in the gaps, plus perhaps even mobile generators to cover the days with no sun until the gas can be built?

I can't see any credible scenario where nuclear makes a difference. You will have AGI first, or not that much growth in power demand.

This scenario also seems to depend on a slow takeoff happening in 0-2 years: 2+ million GPUs need to be valuable enough, but not TAI?

If you pair solar with compressed air energy storage, you can inexpensively (unlike with chemical batteries) get to around 75% utilization of your AI chips (with several days of storage), but I’m not sure if that's enough, so natural gas would be good for the other ~25% (wind power is also anticorrelated with solar both diurnally and seasonally, but you might not have good resources nearby).

Data centers running large numbers of AI chips will obviously run them as many hours as possible, as they are rapidly depreciating and expensive assets. Hence, each H100 will require an increase in peak powergrid capacity, meaning new power plants.

My comment here explains how the US could free up greater than 20% of current electricity generation for AI, and my comment here explains how the US could produce more than 20% extra electricity with current power plants. Yes, duty cycle is an issue, but backup generators (e.g. at hospitals) could come on during peak demand if the price is high enough to ensure that the chips could run continuously.

There have been persistent rumors that electricity generation was somehow bottlenecking new data centers. This claim was recently repeated by Donald Trump, who implied that San Francisco donors requested the construction of new power plants for powering new AI data centers in the US. While this may sound unlikely, my research suggests it's actually quite plausible.

US electricity production has been stagnant since 2007. Current electricity generation is ~500 million kW (500 GW). An H100 consumes 700 W at peak capacity. Sales of H100s were ~500,000 in 2023 and are expected to climb to 1.5-2 million in 2024. "Servers" account for only 40% of data center power consumption, and that includes non-GPU overhead. I'll assume a total of 2 kW per H100 for ease of calculation. This means that powering all H100s produced through the end of 2024 would require ~1% of US power generation.
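The ~1% figure can be checked directly from the numbers in the paragraph above: ~500 million kW of generation, 2 kW per H100, ~0.5 million H100s in 2023 and 1.5-2 million in 2024. A sketch using only those figures:

```python
# Share of US generation needed by all H100s shipped through 2024.
# Figures are the post's: ~500 million kW average generation, ~2 kW all-in
# per H100, ~0.5M H100s in 2023 and 1.5-2M expected in 2024.
US_GENERATION_KW = 500e6
KW_PER_H100 = 2.0

def share_of_generation(num_gpus: int) -> float:
    """Fraction of average US generation consumed by num_gpus running continuously."""
    return num_gpus * KW_PER_H100 / US_GENERATION_KW

low = share_of_generation(500_000 + 1_500_000)
high = share_of_generation(500_000 + 2_000_000)
print(f"{low:.1%} - {high:.1%} of US generation")
```

The range comes out at 0.8% to 1.0%, matching the rounded ~1%.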

H100 production is continuing to increase, and I don't think it's unreasonable for it (or successors) to reach 10 million per year by, say, 2027. Data centers running large numbers of AI chips will obviously run them as many hours as possible, as they are rapidly depreciating and expensive assets. Hence, each H100 will require an increase in peak powergrid capacity, meaning new power plants.

I'm assuming that most H100s sold will be installed in the US, a reasonable assumption given low electricity prices and the locations of the AI race competitors. If an average of 5 million H100s go online each year in the US between 2024 and 2026, that's 30 million kW (30 GW), or 6% of the current capacity! Given that the lead time for power plant construction may range into decades for nuclear, and is 2-3 years for a natural gas plant (the shortest for a consistent-output power plant), those power plants would need to start the build process now. In order for there to be no shortfall in electricity production by the end of 2026, ~30 million kW of capacity will need to begin the construction process in Jan 2024. That's close to the US record (+40 million kW/year), and 6x the capacity currently planned to come online in 2025. I'm neglecting other sources of electricity since they take so much longer to build, although I suspect the recent bill easing regulations on nuclear power may be related. Plants also require downtime, and I don't think the capacity below takes that into account.
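The 2024-2026 figure can be reproduced year by year. This sketch uses the post's assumptions: 2 kW all-in per H100, ~500 million kW of generation, and a record of ~40 million kW of new capacity per year. Like the post, it ignores plant downtime and supply-chain limits:

```python
# Cumulative new load if ~5 million H100s come online in the US each year, 2024-2026.
# Assumptions from the post: ~2 kW all-in per H100, ~500 million kW current generation,
# and a record US build rate of roughly 40 million kW of new capacity per year.
KW_PER_H100 = 2.0
US_GENERATION_KW = 500e6
RECORD_BUILD_KW_PER_YEAR = 40e6

def cumulative_load_kw(gpus_per_year: int, years: int) -> list[float]:
    """New load (kW) at the end of each year, assuming every GPU runs continuously."""
    return [gpus_per_year * KW_PER_H100 * (y + 1) for y in range(years)]

loads = cumulative_load_kw(5_000_000, years=3)
for year, kw in zip(range(2024, 2027), loads):
    print(f"end of {year}: {kw / 1e6:.0f} million kW ({kw / US_GENERATION_KW:.0%} of generation)")

print(f"total vs. record annual build: {loads[-1] / RECORD_BUILD_KW_PER_YEAR:.0%}")
```

The cumulative total reaches 30 million kW, about three quarters of a record year's worth of new capacity, which all has to start construction at once if the lead time is 2-3 years.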

This is why people in Silicon Valley are talking about power plants. It's a big problem, but fortunately also the type that can be solved by yelling at politicians. Note the above numbers assume the supply chain doesn't have shortages, which seems unlikely if you're 6x-ing power plant construction. Delaying the decommissioning of existing power plants and reactivating mothballed ones will likely help a lot, but I'm not an expert in the field, and don't feel qualified to do a deeper analysis.

Overall, I think the claim that power plants are a bottleneck to data center construction in the US is quite reasonable, and possibly an understatement.