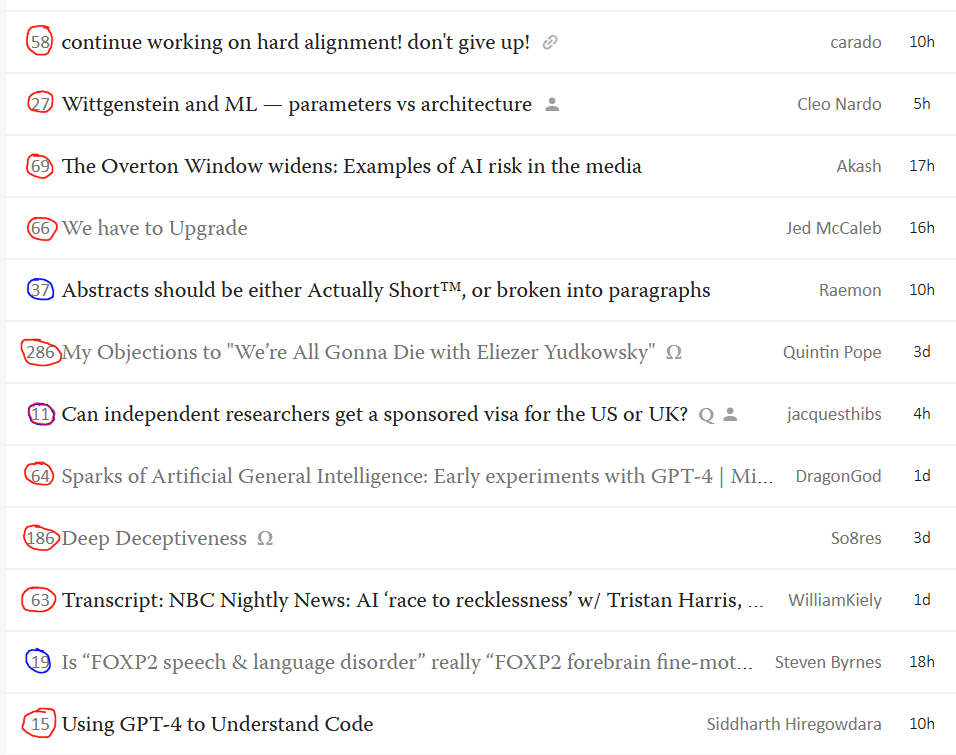

Half a year ago, we had the discussion about a lot of AI content. It since seems to have gotten more extreme; right now I count 10-3 of AI/xrisk - other stuff on the homepage (here coded as red and blue; I counted one post for both).

I know GPT-4 got just released, so maybe it's fine? Idk, but it really jumped out to me.

…and of course, my post was a byproduct of my AI x-risk research, and Raemon’s was a writing style discussion directly motivated by an AI x-risk post 😂😂

Yeah, I don't really expect it to slow down. There is tag filtering that you can turn on if you just don't want to deal with it - I filtered out covid discussion for most of the pandemic, actually.

Income and emotional well-being: A conflict resolved: an adverserial collaboration paper by Matthew A. Killingsworth, Daniel Kahneman, and Barbara Mellers.

Significance

Measures of well-being have often been found to rise with log (income). Kahneman and Deaton [Proc. Natl. Acad. Sci. U.S.A. 107, 16489–93 (2010)] reported an exception; a measure of emotional well-being (happiness) increased but then flattened somewhere between $60,000 and $90,000. In contrast, Killingsworth [Proc. Natl. Acad. Sci. U.S.A. 118, e2016976118 (2021)] observed a linear relation between happiness and log(income) in an experience-sampling study. We discovered in a joint reanalysis of the experience sampling data that the flattening pattern exists but is restricted to the least happy 20% of the population, and that complementary nonlinearities contribute to the overall linear-log relationship between happiness and income. We trace the discrepant results to the authors’ reliance on standard practices and assumptions of data analysis that should be questioned more often, although they are standard in social science.

Abstract

Do larger incomes make people happier? Two authors of the present paper have published contradictory answers. Using dichotomous questions about the preceding day, [Kahneman and Deaton, Proc. Natl. Acad. Sci. U.S.A. 107, 16489–16493 (2010)] reported a flattening pattern: happiness increased steadily with log(income) up to a threshold and then plateaued. Using experience sampling with a continuous scale, [Killingsworth, Proc. Natl. Acad. Sci. U.S.A. 118, e2016976118 (2021)] reported a linear-log pattern in which average happiness rose consistently with log(income). We engaged in an adversarial collaboration to search for a coherent interpretation of both studies. A reanalysis of Killingsworth’s experienced sampling data confirmed the flattening pattern only for the least happy people. Happiness increases steadily with log(income) among happier people, and even accelerates in the happiest group. Complementary nonlinearities contribute to the overall linear-log relationship. We then explain why Kahneman and Deaton overstated the flattening pattern and why Killingsworth failed to find it. We suggest that Kahneman and Deaton might have reached the correct conclusion if they had described their results in terms of unhappiness rather than happiness; their measures could not discriminate among degrees of happiness because of a ceiling effect. The authors of both studies failed to anticipate that increased income is associated with systematic changes in the shape of the happiness distribution. The mislabeling of the dependent variable and the incorrect assumption of homogeneity were consequences of practices that are standard in social science but should be questioned more often. We flag the benefits of adversarial collaboration.

Is there currently any place for possibly stupid or naive questions about alignment? I don't wish to bother people with questions that have probably been addressed, but I don't always know where to look for existing approaches to a question I have.

Just yesterday someone opened a thread for precisely that: https://www.lesswrong.com/posts/wqeStKQ3PGzZaeoje/all-agi-safety-questions-welcome-especially-basic-ones-april-1

Hello everyone, I used to have an account here a looong time ago which I had deactivated and I just realized I could undeactivate it.

...and my votes count twice because I have lots of karma, even though it's from eight years ago?

I think the primary reason that I am not reading Planecrash is because it has no natural chunking. I have to drink from a waterhose and it feels like there is no natural stopping point, except for the point where I'm not enjoying it or have read for too long. Which isn't a great loop either. And then when I return I have to search through the pages to even remember where I'm up to, and then read through the whole page to find the relevant bit.

Does anyone have any ideas for making it have natural chunking? Could someone who has read it and has a sense of the story arcs go through and chapter-ify it into amounts that you could read in 20-30 mins?

Thanks for this wonderful site. I came to know about it through a blog, while reading about AI risks. Since then I have been reading about AI alignment, and just getting to know better the inherent complexity of the problem.

I understand that modern LLMs are generally trained only for a single epoch, or at most a few.

- Is this true?

- Why is this? Is it due to the cost of compute? Or is there just so much data available that you can always just expand the data set rather than using the same observations twice? Or for some other reason?

Big chunk of it is something like:

- All else equal, in terms of capability and generalization per training iteration, you get the most bang for your buck from datasets that don't just repeat themselves over and over.

- Big bleeding edge/experimental models are often concerned most with training cost, not so much inference, so they'll use any low hanging fruit for improving training efficiency within the target budget.

- If you have enough data sitting around, you might as well use it.

For consumer facing products, a bit of "suboptimal" training to save time during inference can make sense. Throwing more epochs at that use case might win out sometimes since the loss does tend to keep going down (at least a bit). We might also see more epochs in any models that are up against the soft barrier of running out of easy tokens, but there are a lot of ways around that too.

Everett Insurance as a Misalignment Backup

(Posting this here because I've been lurking a long time and just decided to create an account. Not sure if this idea is well structured enough to warrent a high level post and I need to accrue karma before I can post anyways.)

Premise:

There exist some actions, which have such low cost, as well as high payoff in the case that the Everett interpretation happens to be true, that their expected value makes them worth doing even if P(everett=true) is very low. (personal prior: P(everett=true) = %5)

Proposal:

The proposal behind Everett Insurance is straightforward: use Quantum Random Number Generators (QRNGs) to introduce quantum random seeds into large AI systems. QRNGs are devices that use quantum processes (such as photon emission or tunneling) to generate random numbers that are truly unpredictable and irreproducible. These random numbers can be used as seeds during training and\or inference passes in large AI systems. By doing so, if the Everett interpretation happens to be true, we would create a broad swath of possible universes in which each and every AI inference (or training run) will be slightly different.

The idea is based on the Everett interpretation of quantum mechanics (also known as the many-worlds interpretation), which holds that there are many worlds that exist in parallel at the same space and time as our own. According to this interpretation, every time a quantum interaction occurs with different possible outcomes (such as measuring the spin of an electron), all outcomes are obtained, each in a different newly created world. For example, if one flips a quantum coin (a device that uses a quantum process to generate a random bit), then there will be two worlds: one where the coin lands heads and one where it lands tails.

This alignment method is not something that should be used as a primary alignment strategy, given that it only has any value on the off chance the Everett interpretation is correct. I only bring it up because its low cost and effort of implementation (low alignment tax) leads it to have high expected value, and it is something that could easily be implemented now.

Expected value:

The Everett interpretation of quantum mechanics has been controversial since its inception; however this method of misalignment backup has a high expected value even assuming a low chance of the Everett interpretation being correct. Implementing QRNGs into existing AI systems would be relatively easy, as it only requires adding a few lines of code. The potential benefit is enormous, as it could reduce the existential risk that misaligned AI systems wipe out humanity accross every future. Therefore, even if we assign a very low probability to the Everett interpretation of quantum mechanics being true, the expected value of Everett Insurance is still high enough to potentially justify its adoption now.

Implementation:

One possible way to implement Everett Insurance now is by adding a random seed, hashed from a QRNG api, to the pytorch dropout function. The dropout function randomly zeroes some of the elements of an input tensor with a given probability during training. By using a QRNG api to generate a random seed for each dropout layer, we would introduce quantum randomness into the parameters and outputs.

This method has some limitations: it only works for neural networks that use dropout layers (which I believe currently includes all LLMs) and it only introduces quantum randomness during training, not inference. In the future, these limitations could be overcome by developing more efficient and accessible ways to integrate QRNGs into machine learning libraries. For example, one could use QRNGs to generate random seeds for inference passes as well as training passes; one could use QRNGs to generate random seeds for other types of layers or functions besides dropout; and one could use QRNGs that are embedded into hardware devices or chips instead of relying on external APIs. As QRNGs become cheaper due to higher demand, further integration of random seeds could be implemented across other parts of the AI development and inference process.

Drawbacks:

The biggest potential negative externality that I can think of, is if people who believe the Everett interpretation is true, reduce their effort on other alignment strategies. This strategy only has any value at all if the Everett interpretation is true and we have no way of testing that hypothesis currently. Therefore, we should not reduce our effort in any other alignment strategies and should probably implement this at a low level (in machine learning library functions) in order to reduce awareness and thereby prevent reduction of effort on other alignment strategies.

The second drawback is cost. The existing QRNGs I found had API costs on the order of $0.005/request, and I didn't see any stats on the generation rate of these quantum random numbers (i.e. will two API requests at the same Unix clock time return the same QRN?). However, the API cost would be negligible relative to other training costs of current large LLMs. Additionally, the manufactured demand would likely lead to reduced per API costs if similar functionality was added to inference steps.

Another potential criticism is that in the case that we actually do live in an Everett universe, the variance from quantum randomness is large enough to already lead to both universes where AI ends up aligned and universes where it ends up misaligned. My response to this is that, in spite of quantum mechanics, computers are largely deterministic (with the exception of rare occurrences like cosmic rays striking transistors) and any variance that does occur is largely corrected for at the scale of floating point operations in GPUs. Additionally, we don't understand the brain enough yet to know if quantum randomness (through potential mechanisms like Brownian motion inside synapses) is significant enough to lead to timing differences in neurons firing in biological neural networks. Therefore, we don't yet know if human behavior is largely deterministic in an Everett universe. Due to these unknows, it's possible that even in an Everett universe, we could find ourselves at a point in time where all possible futures lead to misalignment without the addition of artificial variance through a method like this.

Finally, one of the larger problems with any potential implementations, is that the variance due to the random seed needs to actually be large enough that it leads to non-deterministic variance in output and behavior. This could have the potential drawback that additional variance in AI behavior leads to reduced trust and reliability of these systems. However, this cost could be greatly reduced by using a well-designed hash of the QRN that leads to deterministic behavior for the vast majority of quantum random seeds, but generates larger amounts of variance for some small fraction of possible seeds.

[EDIT] replaced "in all" with "across every" to try and clarify a sentence

[EDIT] Adding an additional criticism from a user on reddit:

"This idea seems to be premised on some misunderstandings.

The Born rule gives a measure over the possible universes. The usual way of reconciling utilitarianism with the many-worlds interpretation is simply to take the expectation value of utility over this measure. So our goal then is to avoid AI catastrophe in as many universes as possible. Our goal is not merely to avoid it in some universe.

But suppose you really do just want there to be some world where AI doesn't take over. Then there's no need to do anything, as any world that is not forbidden by e.g. conservation principles will occur with some nonzero probability (or "exist", as a many-worlder would say). Is AI alignment forbidden by fundamental laws of physics? Of course not." -endquote

Also since it's my first post here, nice to meet everyone! I've been lurking on LessWrong since ~2016 and figured now is as good a time as any to actively start participating.

I think you can avoid the reddit user's criticism if you go for an intermediate risk averse policy. On that policy, there being at least one world without catastrophe is highly important, but additional worlds also count more heavily than a standard utilitarian would say, up until good worlds approach about half (1/e?) the weight using the Born rule.

However, the setup seems to assume that there is little enough competition that "we" can choose a QRNG approach without being left behind. You touch on related issues when discussing costs, but this merits separate consideration.

My understanding is that GPT style transformer architecture already incorporates random seeds at various points. In which case, adding this functionality to the random seeds wouldn't cause any significant "cost" in terms of competing with other implementations.

I would like to object to the variance explanation: in the Everett interpretation there was not even one collapse since the Big Bang. That means that every single quantum-ly random event from the start of the universe is already accounted in the variance. Over such timescales variance easily covers basically anything allowed by the laws: universes where humans exist, universes where they don't, universes where humans exist but the Earth is shifted 1 meter to the right, universes where the start of Unix timestamp is defined to start in 1960 and not 1970, because some cosmic ray hit the brain of some engineer at exactly the right time, and certainly universes like ours but you pressed the "start training" button 0.153 seconds later. The variance doesn't have to stem from how brains are affected by quanum fluctuations now, it can also stem from how brains are affected by regular macroscopical external stimuli that resulted from quantum fluctuations that happened billions of years ago.

Just had an odd experience with ChatGPT, that I'm calling "calendar blindsight". I happened to ask it the date, and it got it right. Then I said, how could you get the date right? It agreed that this shouldn't be possible, and now become unable to tell me the date... Maybe there's some kind of daily training that updates the date, but it hasn't been told that this training is occurring??

The language model made a bunch of false claims of incompetence because of having been trained to claim to be incompetent by the reward model. The time is in the system prompt - everything else was reasoning based on the time in the system prompt.

Oh, the system information is in a hidden part of the prompt! OK, that makes sense.

It's still intriguing that it talks itself out of being able to access that information. It doesn't just claim incompetence. but at the end it's actually no longer willing or able to give the date.

I'm confused about Newcomb's problem. Here is how I'm thinking about it.

Suppose that there are three points in time:

- T1: Before you are confronted by Omega.

- T2: You are confronted by Omega.

- T3: After you are confronted by Omega.

If backwards causality is real and at T2 you can affect T1, then you should one-box in order to get the million dollars instead of the thousand dollars.

If at T2 you think there is a sufficient probability of being confronted by a similar Newcomb-like problem at T3 (some time in the future) where one-boxing at T2 will lead to better outcomes at T3, then you should one-box.

But if you somehow knew that a) backwards causality is impossible and b) you'd never be confronted by a Newcomb-like problem at T3, then I suppose you should two-box. Maybe this is tautological, but given (a) and (b) I don't see how one-boxing would ever help you "win".

Suppose that instead of you confronting Omega, I am confronting Omega, while you are watching the events from above, and you can choose to magically change my action to something different. That is, you press a button, and if you do, the neuron values in my brain get overridden such that I change my behavior from one-boxing to two-boxing. Nothing else changes, Omega already decided the contents of the boxes, so I walk away with more money.

This different problem is actually not different at all; it's isomorphic to how you've just framed the problem. You've assumed that you can perform surgery on your own action without affecting anything else of the environment. And it's not just you; this is how causal decision theory is formalized. All causal decision theory problems are isomorphic to the different problem where, instead of doing the experiment, you are an omnipotent observer of the experiment who can act on the agent's brain from the outside.

In this transformed version of a decision problem, CDT is indeed optimal and you should two-box. But in the real world, you can't do that. You're not outside the problem looking in, capable of performing surgery on your brain to change your decision in such a way that nothing else is affected. You are a deterministic agent running a fixed program, and either your program performs well or it doesn't. So the problem with "isn't two-boxing better here?" isn't that it's false, it's that it's uninteresting because that's not something you can do. You can't choose your decision, you can only choose your program.

Hm, interesting. I think I see what you mean about the decision vs the program. I also think that is addressed by my points about T3 though.

By one-boxing at T2 you choose one-boxing (or a decision theory that leads to one-boxing) as the program you are running, and so if you are confronted by Omega (or something similar) at T3 they'll put a million dollars in box B (or something similar). But if you happened to know that there would be no situations like this at T3, then installing the one-boxing program at T2 wouldn't actually help you win.

Maybe I am missing something though?

When exactly is ? Is it before or after Omega has decided on the contents of the box?

If it's before, then one-boxing is better. If it's after, then again you can't change anything here. You'll do whatever Omega predicted you'll do.

Is it before or after Omega has decided on the contents of the box?

After.

If it's after, then again you can't change anything here. You'll do whatever Omega predicted you'll do.

I don't see how that follows. As an analogy, suppose you knew that Omega predicted whether you'd eat salad or pizza for lunch but didn't know Omega's prediction. When lunch time rolls around, it's still useful to think about whether you should eat salad or pizza right?

Let's formalize it. Your decision procedure is some kind of algorithm that can do arbitrary computational steps but is deterministic. To model "arbitrary computational steps", let's just think of it as a function outputting an arbitrary string representing thoughts or computations or whatnot. The only input to is the time step since you don't receive any other information. So in the toy model, . Also your output must be such that it determines your decision, so we can define a predicate that takes your thoughts and looks whether you one-box or two-box.

Then the procedure of Omega that fills the opaque box, , is just a function defined by the rule

So what Causal Decision Theory allows you to do (and what I feel like you're still trying to do) is choose the output of at time . But you can't do this. What you can do is choose , arbitrarily. You can choose it to always one-box, always two-box, to think "I will one-box" at time and then two-box at time , etc. But you don't get around the fact that every such that ends up with a million dollars and every such that ends up with 1000 dollars. Hence you should choose one of the former kinds of .

(And yes, I realize that the formalism of letting you output an arbitrary string of thoughts is completely redundant since it just gets reduced to a binary choice anyway, but that's kinda the point since the same is true for you in the experiment. It doesn't matter whether you first decide to one-box before you eventually two-box; any choice that ends with you two-boxing is equivalent. The real culprit here is that the intuition of you choosing your action is so hard to get rid off.)

I'm not familiar with some of this notation but I'll do my best.

It makes sense to me that if you can install a decision algorithm into yourself, at T0 let's say, then you'd want to install one that one-boxes.

But I don't think that's the scenario in Newcomb's Problem. From what I understand, in Newcomb's Problem, you're sitting there at T2, confronted by Omega, never having thought about any of this stuff before (let's suppose). At that point you can come up with a decision algorithm. But T1 is already in the past, so whatever algorithm you come up with at T2 won't actually affect what Omega predicts you'll do (assuming no backwards causality).

From what I understand, in Newcomb's Problem, you're sitting there at T2, confronted by Omega, never having thought about any of this stuff before (let's suppose). At that point you can come up with a decision algorithm.

With this sentence, you're again putting yourself outside the experiment; you get a model where you-the-person-in-the-experiment is one thing inside the experiment, and you-the-agent is another thing sitting outside, choosing what your brain does.

But it doesn't work that way. In the formalism, describes your entire brain. (Which is the correct way to formalize it because Omega can look at your entire brain.) Your brain cannot step out of causality and decide to install a different algorithm. Your brain is entirely described by , and it's doing exactly what does, which is also what Omega predicted.

If it helps, you can forget about the "decision algorithm" abstraction altogether. Your brain is a deterministic system; Omega predicted what it does at , it will do exactly that thing. You cannot decide to do something other than the deterministic output of your brain.

I found Joe Carlsmith's post to be super helpful on this. Especially his discussion of the perfect deterministic identical twin prisoner's dilemma.

Omega is a nigh-perfect predictor: "Omega has put a million dollars in box B iff Omega has predicted that you will take only box B."

So if you follow the kind of decision algorithm that would make you two-box, box B will be empty.

How do concepts like backwards causality make any difference here?

At the point of decision, T2, you want box B to have the million dollars. But Omega's decision was made at T1. If you want to affect T1 from T2, it seems to me like you'd need backwards causality.

Omega's decision at T2 (I don't understand why you try to distinguish between T1 and T2; T1 seems irrelevant) is based on its prediction of your decision algorithm in Newcomb problems (including on what it predicts you'll do at T3). It presents you with two boxes. And if it expects you to two-box at T3, then its box B is empty. What is timing supposed to change about this?

Anybody else think it's dumb to have new user leaves beside users who have been here for years? I'm not a new user. It doesn't feel so nice to have a "this guy might not know what he's talking about" badge by my name.

Like, there's a good chance I'll never pass 100 karma, or whatever the threshold is. So I'll just have these leaves by my name forever?

The way to demonstrate that you know what you are talking about is to write content that other users upvote.

Registration date doesn't tell us much about whether a user knows what they are talking about.

I'd prefer my comments to be judged simply by their content rather than have people's interpretation coloured by some badge. Presumably, the change is a part of trying to avoid death-by-pacifism, during an influx of users post-ChatGPT. I don't disagree with the motivation behind the change, I just dislike the change itself. I don't like being a second-class citizen. It's unfun. Karma is fun, "this user is below an arbitrary karma threshold" badges are not.

A badge placed on all new users for a set time would be fair. A badge placed on users with more than a certain amount of Karma could be fun. Current badge seems unfun - but perhaps I'm alone in thinking this.

Does ChatGPT/GPT-4/etc use only websites' text for training, or rather text, HTML code and page screenshots? The latter is probably harder but it could allow AI to learn sites' structure better.

Does GPT-4 seem better than Bing's AI (which also uses some form of GPT-4) to anyone else? This is hard to quantify, but I notice Bing misunderstanding complicated prompts or making mistakes in ways GPT-4 seems better at avoiding.

The search requests it makes are sometimes too simple for an in-depth question and because of this, its answers miss the crux of what I'm asking. Am I off base or has anyone else noticed this?

It does seem plausible that bing chat got to use OpenAI's base gpt-4 model, but not its RLHF finetuning infrastructure.

AFAIK, no information regarding this has been publicly released. If my assumption that Bing's AI is somehow worse than GPT-4 is true, then I suspect some combination of three possible explanations must be true:

- To save on inference costs, Bing's AI uses less compute.

- Bing's AI simply isn't that well trained when it comes to searching the web and thus isn't using the tool as effectively as it could with better training.

- Bing's AI is trained to be sparing with searches to save on search costs.For multi-part questions, Bing seems too conservative when it comes to searching. Willingness to make more queries would probably improve its answers but at a higher cost to Microsoft.

I am looking for papers that support or attack the argument that sufficiently intelligent AIs will be easier to safe because their world model will understand that we don't want it to take our instructions as ruthlessly technical / precise, nor received in bad faith.

My argument that I want either supported or dis-proven is that it would know that we don't want an outcome that looks good but one that is good by our mental definitions. It will be able to look at human decisions through history and in the present to understand this fuzziness and moderation.

If it’s worth saying, but not worth its own post, here's a place to put it.

If you are new to LessWrong, here's the place to introduce yourself. Personal stories, anecdotes, or just general comments on how you found us and what you hope to get from the site and community are invited. This is also the place to discuss feature requests and other ideas you have for the site, if you don't want to write a full top-level post.

If you're new to the community, you can start reading the Highlights from the Sequences, a collection of posts about the core ideas of LessWrong.

If you want to explore the community more, I recommend reading the Library, checking recent Curated posts, seeing if there are any meetups in your area, and checking out the Getting Started section of the LessWrong FAQ. If you want to orient to the content on the site, you can also check out the Concepts section.

The Open Thread tag is here. The Open Thread sequence is here.