tl;dr: A leak describes an OpenAI breakthrough called Q* that aces grade-school math. It is hypothesized to be a combination of Q-learning and A*. An OpenAI spokesperson then refuted the report. DeepMind is working on something similar with Gemini: AlphaGo-style Monte Carlo Tree Search. Scaling these might be the crux of planning for increasingly abstract goals and agentic behavior. The academic community has been circling around these ideas for a while.

Reuters: OpenAI researchers warned board of AI breakthrough ahead of CEO ouster, sources say

"Ahead of OpenAI CEO Sam Altman’s four days in exile, several staff researchers sent the board of directors a letter warning of a powerful artificial intelligence discovery that they said could threaten humanity

Mira Murati told employees on Wednesday that a letter about the AI breakthrough called Q* (pronounced Q-Star), precipitated the board's actions.

Given vast computing resources, the new model was able to solve certain mathematical problems. Though only performing math on the level of grade-school students, acing such tests made researchers very optimistic about Q*’s future success."

"What could OpenAI’s breakthrough Q* be about?

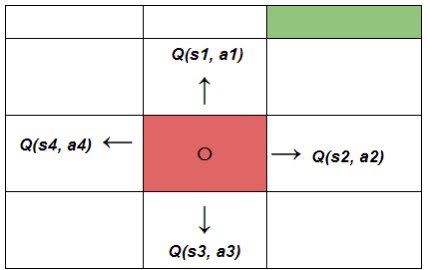

It sounds like it’s related to Q-learning. (For example, Q* denotes the optimal solution of the Bellman equation.) Alternatively, referring to a combination of the A* algorithm and Q learning.

One natural guess is that it is AlphaGo-style Monte Carlo Tree Search of the token trajectory. 🔎 It seems like a natural next step: Previously, papers like AlphaCode showed that even very naive brute force sampling in an LLM can get you huge improvements in competitive programming. The next logical step is to search the token tree in a more principled way. This particularly makes sense in settings like coding and math where there is an easy way to determine correctness. -> Indeed, Q* seems to be about solving Math problems 🧮"
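For readers unfamiliar with the notation in the quote above: in reinforcement learning, Q* conventionally denotes the optimal action-value function, the fixed point of the Bellman optimality equation Q*(s, a) = E[r + γ max_a' Q*(s', a')]. Here is a minimal sketch of tabular Q-learning converging to Q* on a toy problem. The 5-state chain, the reward, and all names (`step`, `q_learning`, `GAMMA`, `ALPHA`) are assumptions made for illustration; nothing here is known to reflect what OpenAI actually built.

```python
import random

N_STATES = 5          # states 0..4; state 4 is the terminal goal (toy assumption)
ACTIONS = (0, 1)      # 0 = step left, 1 = step right
GAMMA = 0.9           # discount factor
ALPHA = 0.5           # learning rate


def step(state, action):
    """Deterministic toy dynamics: reward 1 only on entering the goal state."""
    nxt = max(state - 1, 0) if action == 0 else state + 1
    done = nxt == N_STATES - 1
    reward = 1.0 if done else 0.0
    return nxt, reward, done


def q_learning(episodes=2000, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Q-learning is off-policy, so a uniform random behaviour policy suffices.
            action = rng.choice(ACTIONS)
            nxt, reward, done = step(state, action)
            # Bellman optimality backup: bootstrap from the best next action.
            target = reward if done else reward + GAMMA * max(q[nxt])
            q[state][action] += ALPHA * (target - q[state][action])
            state = nxt
    return q


q = q_learning()
# Greedy policy with respect to the learned Q-values, for the non-terminal states.
greedy = [max(ACTIONS, key=lambda a: q[s][a]) for s in range(N_STATES - 1)]
```

On this chain the greedy policy should be "always step right", and `q[0][1]` should approach 0.9^3 = 0.729, the discounted value of reaching the goal in four steps.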

"Anyone want to speculate on OpenAI’s secret Q* project?

- Something similar to tree-of-thought with intermediate evaluation (like A*)?

- Monte-Carlo Tree Search like forward roll-outs with LLM decoder and q-learning (like AlphaGo)?

- Maybe they meant Q-Bert, which combines LLMs and deep Q-learning

Before we get too excited, the academic community has been circling around these ideas for a while. There are a ton of papers in the last 6 months that could be said to combine some sort of tree-of-thought and graph search. Also some work on state-space RL and LLMs."
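To make the "intermediate evaluation (like A*)" idea concrete, here is a minimal sketch of A* over a tree of partial solutions. It is a toy stand-in and not an LLM: the "reasoning steps" are the hypothetical operations `+1` and `*2`, the goal is to turn a start number into a target number, and the evaluator is a simple lower bound on the remaining steps. In a tree-of-thought setup, the operations would be sampled continuations and the evaluator would be a learned value model; those mappings are assumptions for illustration.

```python
import heapq

# Hypothetical "reasoning steps": each maps a partial solution to a new one.
OPS = {"+1": lambda v: v + 1, "*2": lambda v: v * 2}


def heuristic(value, target):
    """Lower bound on remaining steps: each op at most doubles a value >= 1."""
    k = 0
    while value < target:
        value *= 2
        k += 1
    return k


def a_star(start, target):
    """Return a shortest list of op names turning start (>= 1) into target, or None."""
    # Frontier entries: (cost_so_far + heuristic, cost_so_far, value, path).
    frontier = [(heuristic(start, target), 0, start, [])]
    best_cost = {start: 0}
    while frontier:
        _, cost, value, path = heapq.heappop(frontier)
        if value == target:
            return path
        for name, op in OPS.items():
            nxt = op(value)
            if nxt > target:
                continue  # both ops only increase the value, so this branch is dead
            if cost + 1 < best_cost.get(nxt, float("inf")):
                best_cost[nxt] = cost + 1
                priority = cost + 1 + heuristic(nxt, target)
                heapq.heappush(frontier, (priority, cost + 1, nxt, path + [name]))
    return None


path = a_star(1, 10)
```

The design point is that the cheap evaluator decides which branch to expand next, so the search spends its budget on promising partial solutions instead of sampling blindly. Because the bound never overestimates the remaining steps, the first solution found is also a shortest one.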

OpenAI spokesperson Lindsey Held Bolton "refuted that notion in a statement shared with The Verge: “Mira told employees what the media reports were about but she did not comment on the accuracy of the information.”"

Google DeepMind's Gemini, currently the biggest rival to GPT-4 and delayed to the start of 2024, is also trying similar things: AlphaZero-based MCTS through chains of thought, according to Hassabis.

Demis Hassabis: "At a high level you can think of Gemini as combining some of the strengths of AlphaGo-type systems with the amazing language capabilities of the large models. We also have some new innovations that are going to be pretty interesting."

anton on Twitter, linking to a video of Shane Legg:

This aligns with DeepMind Chief AGI Scientist Shane Legg saying:

"To do really creative problem solving you need to start searching."

"With Q*, OpenAI have likely solved planning/agentic behavior for small models.

Scale this up to a very large model and you can start planning for increasingly abstract goals.

It is a fundamental breakthrough that is the crux of agentic behavior.

To solve problems effectively next token prediction is not enough.

You need an internal monologue of sorts where you traverse a tree of possibilities using less compute before using compute to actually venture down a branch.

planning in this case refers to generating the tree and predicting the quickest path to solution"

My thoughts:

If this is true and really is a breakthrough, it might have caused the whole chaos. For true superintelligence you need flexibility and systematicity. Combining the machinery of general and narrow intelligence (I like DeepMind's taxonomy of AGI, https://arxiv.org/pdf/2311.02462.pdf) might be the path to both general and narrow superintelligence.

Why I strong-downvoted

[update: now it’s a weak-downvote, see edit at bottom]

[update 2: I now regret writing this comment, see my reply-comment]

I endorse the general policy: "If a group of reasonable people think that X is an extremely important breakthrough that paves the path to imminent AGI, then it's really important to maximize the amount of time that this group can think about how to use X-type AGI safely, before dozens more groups around the world start trying to do X too."

And part of what that entails is being reluctant to contribute to a public effort to fill in the gaps from leaks about X.

I don't have super strong feelings that this post in particular is super negative value. I think its contents are sufficiently obvious and already being discussed in lots of places, and I also think the thing in question is not in fact an extremely important breakthrough that paves the path to imminent AGI anyway. But this post has no mention that this kind of thing might be problematic, and it's the kind of post that I'd like to discourage, because at some point in the future it might actually matter.

As a less-bad alternative, I propose that you should wait until somebody else prominently publishes the explanation that you think is right (which is bound to happen sooner or later, if it hasn't already), and then linkpost it.

See also: my post on Endgame Safety.

EDIT: Oh oops I wasn’t reading very carefully, I guess there are no ideas here that aren’t word-for-word copied from very-widely-viewed twitter threads. I changed my vote to a weak-downvote, because I still feel like this post belongs to a genre that is generally problematic, and by not mentioning that fact and arguing that this post is one of the exceptions, it is implicitly contributing to normalizing that genre.

Update: I kinda regret this comment. I think when I wrote it I didn’t realize quite how popular the “Let’s figure out what Q* is!!” game is right now. It’s everywhere, nonstop.

It still annoys me as much as ever that so many people in the world are playing the “Let’s figure out what Q* is!!” game. But as a policy, I don’t ordinarily complain about extremely widespread phenomena where my complaint has no hope of changing anything. Not a good use of my time. I don’t want to be King Canute yelling at the tides. I un-downvoted. Whatever.