This is a crosspost from my blog. Its intention is to act as a primer on non-causal decision theories, and how they apply to the prisoner's dilemma in particular, for those who were taught traditional game theory and are unfamiliar with the LessWrong memeplex. I'm posting it here primarily to get feedback on it and make sure I didn't make any errors. I plan to update it as I hear counterarguments from causal decision theorists, so check the one on my blog if you want the most recent version.

The Prisoner's Dilemma is a well-known problem in game theory, economics, and decision theory. The simple version: Alice and Bob have been arrested on suspicion of having committed a crime. If they both stay silent, they'll each do a year in prison. If one of them testifies against the other while the other stays silent, the silent one goes to prison for 4 years and the narc goes free. If they both testify against each other, they each serve two years in prison. In order to simplify the math, game theorists also make three rather unrealistic assumptions: the players are completely rational, they're completely self-interested, and they know all of these details about the game.

There's a broad consensus in these academic fields that the rational choice is to defect. This consensus is wrong. The correct choice in the specified game is to cooperate. The proof of this is quite straightforward:

Both agents are rational, meaning that they'll take the action that gets them the most of what they want. (In this case, the fewest years in jail.) Since the game is completely symmetric, this means they'll both end up making the same decision. Both players have full knowledge of the game and each other, meaning they know that the other player will make the rational choice, and that the other player knows the same thing about them, etc. Since they're guaranteed to make the same decision, the only possible outcomes of the game are Cooperate-Cooperate or Defect-Defect, and the players are aware of this fact. Both players prefer Cooperate-Cooperate to Defect-Defect, so that's the choice they'll make.

Claiming that an entire academic field is wrong is pretty bold, so let me try to justify it.

How an entire academic field is built on an incorrect assumption

The standard argument for Defecting is that no matter what the other player does, you're better off having defected. If they cooperate, it's better if you defect. If they defect, it's better if you defect. So by the sure-thing principle, since you know that if you knew the other player's decision in advance it would be best for you to defect no matter what their decision was, you should also defect without the knowledge of their decision.

The error here is failing to take into account that both players' decisions are correlated. As the Wikipedia page explains:

"Richard Jeffrey and later Judea Pearl showed that [the Sure-thing] principle is only valid when the probability of the event considered is unaffected by the action (buying the property)."

The technical way of arriving at Defect-Defect is through iterated elimination of strictly dominated strategies. A strictly dominated strategy is one that pays less regardless of what the other player does. Since no matter what Bob does, Alice will get fewer years in jail if she defects than if she cooperates, there's (supposedly) no rational reason for her to ever pick cooperate. Since Bob will reason the same way, Bob will also not pick cooperate, and both players will defect.
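To make the dominance argument concrete, here's a minimal Python sketch of the check it relies on. The payoff table uses the jail terms from the opening example; the names `YEARS` and `strictly_dominates` are made up for illustration.

```python
# Years in jail for (my_move, their_move); fewer is better.
# "C" = stay silent (cooperate), "D" = testify (defect).
YEARS = {
    ("C", "C"): 1,
    ("C", "D"): 4,
    ("D", "C"): 0,
    ("D", "D"): 2,
}

def strictly_dominates(a: str, b: str) -> bool:
    """True if move `a` yields fewer years than move `b` against every opposing move."""
    return all(YEARS[(a, other)] < YEARS[(b, other)] for other in ("C", "D"))

print(strictly_dominates("D", "C"))  # True
print(strictly_dominates("C", "D"))  # False
```

Note what the check does: it holds the other player's move fixed while varying yours, which is exactly the step the rest of this section disputes.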

This line of logic is flawed. At the time that Alice is making her decision, she doesn't know what Bob's decision is; just that it will be the same as hers. So if she defects, she knows that her payoff will be two years in jail, whereas if she cooperates, it will only be one year in jail. Cooperating is better.
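As a sketch of that comparison (same jail terms as the opening example, with illustrative names): if Bob's move is guaranteed to mirror Alice's, only the diagonal of the payoff table is reachable, and she just picks the better diagonal entry.

```python
# Years in jail for (my_move, their_move); fewer is better.
YEARS = {
    ("C", "C"): 1,
    ("C", "D"): 4,
    ("D", "C"): 0,
    ("D", "D"): 2,
}

def best_symmetric_move() -> str:
    """Pick the move with the fewest years, assuming the other player mirrors it."""
    return min(("C", "D"), key=lambda move: YEARS[(move, move)])

print(best_symmetric_move())  # C
```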

But wait a second, didn't we specify that Alice knows all details of the game? Shouldn't she know in advance what Bob's decision will be, since she knows all of the information that Bob does, and she knows how Bob reasons? Well, yeah. That's one of the premises of the game. But what's also a premise of the game is that Bob knows Alice's decision before she makes it, for the same reasons. So what happens if both players pick the strategy of "I'll cooperate if I predict the other player will defect, and I'll defect if I predict the other player will cooperate"? There's no answer; this leads to an infinite regress.

Or to put it in a simpler way: If Alice knows what Bob's decision will be, and knows that Bob's decision will be the same as hers, that means that she knows what her own decision will be. But knowing what your decision is going to be before you've made it means that you don't have any decision to make at all, since a specific outcome is guaranteed.

Here's the problem: the definition of "rationality" that's traditionally used in game theory assumes logical omniscience from the player; that they have "infinite intelligence" or "infinite computing power" to figure out the answer to anything they want to know. But as Gödel's incompleteness theorem and the halting problem demonstrate, this is an incoherent assumption, and attempting to take it as a premise will lead to contradictions.

So the actual answer is that this notion of "rationality" is not logically valid, and a game phrased in terms of it may well have no answer. What we actually need to describe games like these are theories of bounded rationality. This makes the problem more complicated, since the result could depend on exactly how much computing power each player has access to.

So ok, let's assume that our players are boundedly rational, but it's a very high bound. And the other assumptions are still in place; the players are trying to minimize their time in jail, they're completely self-interested, and they have common knowledge of these facts.

In this case, the original argument still holds; the players each know that "I cooperate and the other player defects" is not a possible outcome, so their choice is between Cooperate-Cooperate and Defect-Defect, and the former is clearly better.[1]
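For the mixed-strategy case mentioned in the footnote, here's a rough numerical check. It assumes both players use the same cooperation probability `p` but flip their coins independently, and it uses the jail terms from the opening example; both the setup and the names are illustrative assumptions.

```python
def expected_years(p: float) -> float:
    """Expected jail time for one player when both cooperate with probability p."""
    q = 1 - p
    # Outcomes: both cooperate, I cooperate alone, I defect alone, both defect.
    return p * p * 1 + p * q * 4 + q * p * 0 + q * q * 2

# Scan cooperation probabilities from 0.00 to 1.00 in steps of 0.01.
grid = [i / 100 for i in range(101)]
best_p = min(grid, key=expected_years)

print(best_p)                  # 1.0
print(expected_years(best_p))  # 1.0
```

Under these assumptions the expression simplifies to 2 - p², so expected jail time falls all the way up to p = 1.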

A version of the prisoner's dilemma where people tend to find cooperating more intuitive is that of playing against your identical twin. (Identical not just genetically, but literally an atom-by-atom replica of you.) Part of this may be sympathy leaking into the game; humans are not actually entirely self-interested, and it may be harder to pretend to be when imagining your decisions harming someone who's literally you. But I think that's not all of it; when you imagine playing against yourself, it's much more intuitive that the two yous will end up making the same decision.

The key insight here is that full "youness" is not necessary. If your replica had a different hair color, would that change things? If they were a different height, or liked a different type of food, would that suddenly make it rational to defect? Of course not. All that matters is that the way you make decisions about prisoner's dilemmas is identical; all other characteristics of the players can differ. And that's exactly what the premise of "both players are rational" does; it ensures that their decision-making processes are replicas of each other.

(Note that theories of bounded rationality often involve probability theory, and removing certainty from the equation doesn't change this. If each player only assigns 99% probability to the other person making the same decision, the expected value is still better if they cooperate.[2])
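Here's a small sketch of that expected-value comparison, assuming years in jail map linearly to disutility. `p_same` is the probability that the other player ends up making the same choice as you; the function names and the two payoff tables are illustrative.

```python
def expected_years(move: str, p_same: float, years: dict) -> float:
    """Expected jail time for `move` if the other player matches it with probability p_same."""
    other = "D" if move == "C" else "C"
    return p_same * years[(move, move)] + (1 - p_same) * years[(move, other)]

def break_even(years: dict) -> float:
    """Matching probability above which cooperating has the lower expected jail time."""
    # Solve p*CC + (1-p)*CD = p*DD + (1-p)*DC for p.
    cc, cd = years[("C", "C")], years[("C", "D")]
    dc, dd = years[("D", "C")], years[("D", "D")]
    return (cd - dc) / ((cd - dc) + (dd - cc))

# Jail terms from the opening example, and the 0/1/2/3 variant.
STORY = {("C", "C"): 1, ("C", "D"): 4, ("D", "C"): 0, ("D", "D"): 2}
CLASSIC = {("C", "C"): 1, ("C", "D"): 3, ("D", "C"): 0, ("D", "D"): 2}

print(round(expected_years("C", 0.99, STORY), 2))  # 1.03
print(round(expected_years("D", 0.99, STORY), 2))  # 1.98
print(break_even(STORY))    # 0.8
print(break_even(CLASSIC))  # 0.75
```

The break-even point moves with the payoffs: 80% for the jail terms in the opening example, 75% for payoffs of 0, 1, 2, and 3 years.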

But causality!

A standard objection raised at this point is that this treatment of Alice as able to "choose" what Bob picks by picking the same thing herself violates causality. Alice could be on the other side of the galaxy from Bob, or Alice could have made her choice years before Bob; there's definitely no causal influence between the two.

...But why does there need to be? The math doesn't lie; Alice's expected value is higher if she cooperates than if she defects. It may be unintuitive for some people that Alice's decision can be correlated with Bob's without there being a causal link between them, but that doesn't mean it's wrong. Unintuitive things are discovered to be true all the time!

Formally, what's being advocated for here is causal decision theory. Causal decision theory underlies the approach of iterated elimination of strictly dominated strategies; when in game theory you say "assume the other player's decision is set, now what should I do", that's effectively the same as how causal decision theory says "assume that everything I don't have causal control over is set in stone; now what should I do?". It's a great theory, except for the fact that it's wrong.

The typical demonstration of this is Newcomb's problem. A superintelligent trickster offers you a choice to take one or both of two boxes. One is transparent and contains $100, and the other is opaque, but the trickster tells you that it put $1,000,000 inside if and only if it predicted that you'd take only the $1,000,000 and not also the $100. The trickster then wanders off, leaving you to decide what to do with the boxes in front of you. In the idealized case, you know with certainty that the trickster is being honest with you and can reliably predict your future behavior. In the more realistic case, you know those things with high probability, as the scientists of this world have investigated its predictive capabilities, and it has demonstrated accuracy in thousands of previous such games with other people.

Similarly to the prisoner's dilemma, as per the sure-thing principle, taking both boxes is better regardless of what's inside the opaque one. Since the decision of what to put inside the opaque box has already been made, you have no causal control over it, and causal decision theory says you should take both boxes, getting $100. Someone following a better decision theory can instead take just the opaque box, getting $1,000,000.
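For the more realistic version where the trickster is only probably right, here's a sketch of the expected payoff conditional on each choice. This is the tally a one-boxer would use (a causal decision theorist would object to conditioning on your own choice); the box contents are the ones described above, and `accuracy` and the function name are illustrative.

```python
def newcomb_ev(one_box: bool, accuracy: float,
               small: int = 100, big: int = 1_000_000) -> float:
    """Expected winnings given your choice, if the prediction is right with probability `accuracy`."""
    if one_box:
        # The opaque box is full only if the trickster correctly predicted one-boxing.
        return accuracy * big
    # Two-boxers always get the small prize, plus the big one if the prediction was wrong.
    return small + (1 - accuracy) * big

print(round(newcomb_ev(True, 0.99)))   # 990000
print(round(newcomb_ev(False, 0.99)))  # 10100
print(round(newcomb_ev(True, 1.0)))    # 1000000
print(round(newcomb_ev(False, 1.0)))   # 100
```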

Newcomb's problem is perfectly realizable in theory, but we don't currently have the technology to predict human behavior with a useful degree of accuracy. This leads it to not feel very "real", and people can say they'd two-box without having to actually deal with the consequences of their decision.

So here's an even more straightforward example. I'll offer you a choice between two vouchers: a red one or a blue one. You can redeem them for cash from my friend after you pick one. My friend Carol is offering $10 for any voucher handed in by someone who chose to take the blue voucher, and is offering $1 for any voucher from anyone else. She's also separately offering $5 for any red voucher.

If you take the red voucher and redeem it, you'll get $6. If you take the blue voucher and redeem it, you'll get $10. A rational person would clearly take the blue voucher. Causal decision theory concurs; you have causal control over how much Carol is offering, so you should take that into account.

Now Carol is replaced by Dave and I offer you the same choice. Dave does things slightly differently; he considers the position and velocity of all particles in the universe 24 hours ago, and offers $10 for any voucher handed in by someone who would have been caused to choose a blue voucher by yesterday's state of the universe, and $1 to anyone else. He also offers $5 for any red voucher.

A rational person notices that this is a completely equivalent problem and takes the blue voucher again. Causal decision theory notices that it can't affect yesterday's state of the universe, and takes the red voucher, since it's guaranteed to be worth $5 more than the blue one.
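The two ways of tallying the voucher game can be sketched like this. The dollar amounts are the ones from the example; the function names and the split into a "linked" and a "fixed" table are illustrative.

```python
RED_BONUS = 5  # extra dollars paid for any red voucher

def offer_for(voucher: str) -> int:
    """Base offer: $10 for someone who chose blue, $1 for anyone else."""
    return 10 if voucher == "blue" else 1

def payout(voucher: str, base_offer: int) -> int:
    """Total cash for handing in `voucher` when the base offer is `base_offer`."""
    return base_offer + (RED_BONUS if voucher == "red" else 0)

# Treating the base offer as tied to the choice (Carol's game, and Dave's too
# if yesterday's state of the universe determines what you pick).
linked = {v: payout(v, offer_for(v)) for v in ("red", "blue")}
print(linked)  # {'red': 6, 'blue': 10}

# The CDT tally against Dave: hold the base offer fixed, then compare colours.
fixed = {offer: {v: payout(v, offer) for v in ("red", "blue")} for offer in (1, 10)}
print(fixed)  # {1: {'red': 6, 'blue': 1}, 10: {'red': 15, 'blue': 10}}
```

In the second table red beats blue by $5 in every row, which is why CDT takes it, even though the only cells anyone actually lands in are red at $6 and blue at $10.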

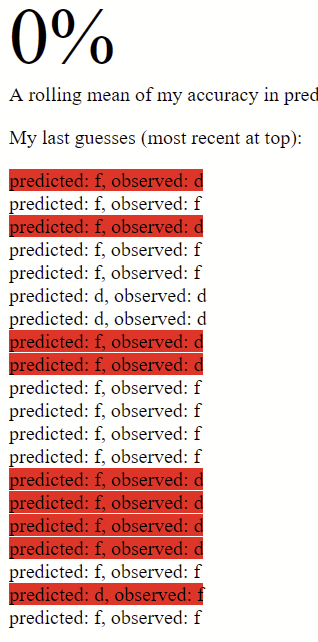

Unlike Newcomb's problem, this is a game we could play right now. Sadly though, I don't feel like giving out free money just to demonstrate that you could have gotten more. So here's a different offer; a modified Newcomb's problem that we can play with current technology. You can pay $10 to have a choice between two options, A and B. Before you make your choice, I will predict which option you're going to pick, and assign $20.50 to the other option. You get all the money assigned to the option you picked. We play 100 times in a row, 0.5 seconds per decision (via keypresses on a computer). I will use an Aaronson oracle (or similar) to predict your decisions before you make them.

This is a completely serious offer. You can email me, I'll set up a webpage to track your choices and make my predictions (letting you inspect all the code first), and we can transfer winnings via Paypal.
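For a sense of how little machinery such a predictor needs, here's a minimal sketch in the spirit of an Aaronson oracle: it remembers what followed each recent run of choices and guesses the more common continuation. This is not the code for the actual offer; the class name, the context length of 5, and the tie-breaking rule are all assumptions for illustration.

```python
from collections import defaultdict

class NGramPredictor:
    """Guess the next choice ("A" or "B") from what followed the same recent context before."""

    def __init__(self, order: int = 5):
        self.order = order  # how many previous choices form the context
        self.counts = defaultdict(lambda: {"A": 0, "B": 0})
        self.history = []

    def predict(self) -> str:
        context = tuple(self.history[-self.order:])
        seen = self.counts[context]
        # Ties (including never-seen contexts) default to "A".
        return "A" if seen["A"] >= seen["B"] else "B"

    def update(self, choice: str) -> None:
        context = tuple(self.history[-self.order:])
        self.counts[context][choice] += 1
        self.history.append(choice)

def accuracy(choices: str, order: int = 5) -> float:
    """Fraction of choices guessed correctly, always predicting before seeing each one."""
    predictor = NGramPredictor(order)
    hits = 0
    for choice in choices:
        hits += predictor.predict() == choice
        predictor.update(choice)
    return hits / len(choices)

print(accuracy("AB" * 50, order=2))  # 0.98
```

A strictly alternating player is the easy case, of course. Real keypresses are noisier, and the only accuracy that matters is whatever the predictor manages against you.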

If you believe that causal decision theory describes rational behavior, you should accept this offer, since its expected value to you is $25. You can, of course, play around with the linked Aaronson oracle and note that it can predict your behavior with better than the ~51.2% accuracy needed for me to come out ahead in this game. This is completely irrelevant. CDT agents can have overwhelming evidence of their own predictability, and will still make decisions without updating on this fact. That's exactly what happens in Newcomb's problem: it's specified that the player knows with certainty, or at least with very high probability, that the trickster can predict their behavior. Yet the CDT agent chooses to take two boxes anyway, because it doesn't update on its own decision having been made when considering potential futures. This game is the same: you may believe that I can predict your behavior with 70% probability, but when considering option A, you don't update on the fact that you're going to choose option A. You just see that you don't know which box I've put the money in, and that by the principle of maximum entropy, without knowing what choice you're going to make, and therefore without knowing where I have a 70% chance of having not put the money, it has a 50% chance of being in either box, giving you an expected value of $0.25 if you pick box A.
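The arithmetic behind those figures, as a short sketch. It assumes the $10 is paid once per round (which is what the $25 total implies) and that you win the $20.50 exactly when my prediction misses; the variable names are illustrative.

```python
STAKE = 10.00   # assumed cost per round
PRIZE = 20.50   # money assigned to the option I predicted you would not pick
ROUNDS = 100

def player_ev_per_round(predictor_accuracy: float) -> float:
    """Your expected net winnings per round at a given prediction accuracy."""
    return (1 - predictor_accuracy) * PRIZE - STAKE

# Accuracy at which the game is exactly fair.
break_even_accuracy = 1 - STAKE / PRIZE

print(round(player_ev_per_round(0.5) * ROUNDS, 2))  # 25.0
print(round(player_ev_per_round(0.7) * ROUNDS, 2))  # -385.0
print(round(break_even_accuracy, 4))                # 0.5122
```

The first line is the CDT tally, which treats the money as equally likely to be behind either option. The second is what happens if I really can predict you 70% of the time.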

If you're an advocate of CDT and find yourself not wanting to take me up on my offer to give you a free $25, because you know it's not actually a free $25, great! You've realized that CDT is a flawed model that cannot be trusted to make consistently rational decisions, and are choosing to discard it in favor of a better (probably informal) model that does not accept this offer.

But free will!

This approach to decision theory, where we consider the agent's decision to be subject to deterministic causes, tends to lead to objections along the lines of "in that case it's impossible to ever make a decision at all".

First off, if decisions can't occur, I'd question why you're devoting so much time to the study of something that doesn't exist.[3] We know that the universe is deterministic, so the fact that you chose to study decision theory anyway would seem to mean you believe that "decisions" are still a meaningful concept.

Yes yes, quantum mechanics etc. Maybe the universe is actually random and not deterministic. Does that restore free will? If your decisions are all determined by trillions of tiny coin flips that nothing has any control over, least of all you, does that somehow put you back in control of your destiny in a way that deterministic physics doesn't? Seems odd.

But quantum mechanics is a distraction. The whole point of decision theory is to formalize the process of making a decision into a function that takes in a world state and utility function, and outputs a best decision for the agent. Any such function would be deterministic[4] by design; if your function can output different decisions on different days despite getting the exact same input, then it seems like it's probably broken, since two decisions can't both be "best".[5]
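Here's a minimal sketch of what "a function that takes in a world state and utility function" might look like. The signature is an assumption made for illustration, not a formalization of any particular decision theory. Ties come back as a set, so the same input always produces the same output.

```python
from typing import Any, Callable, FrozenSet, Iterable

def best_decisions(options: Iterable[str],
                   world_state: Any,
                   utility: Callable[[Any, str], float]) -> FrozenSet[str]:
    """Return every option that attains the maximum utility in this world state."""
    scores = {option: utility(world_state, option) for option in options}
    top = max(scores.values())
    return frozenset(option for option, score in scores.items() if score == top)

# Toy example: utility is minus the years in jail, with the other player mirroring you.
state = {"years": {"cooperate": 1, "defect": 2}}
jail_utility = lambda s, option: -s["years"][option]

print(best_decisions(["cooperate", "defect"], state, jail_utility))
# frozenset({'cooperate'})
```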

This demonstrates why "free will" style objections to cooperation-allowing theories of rationality are nonsense. Iterated elimination of strictly dominated strategies is a deterministic process. If you claim that rational agents are guaranteed to defect in the prisoner's dilemma, and you know this in advance, how exactly do they have free will? An agent with true libertarian free will can't exist inside any thought experiment with any known outcome.

(Humans, by the way, who are generally assumed to have free will if anything does, have knowledge of causes of their own decisions all the time. We can talk about how childhood trauma changes our responses to things, take drugs to modify our behavior, and even engage in more direct brain modification. I have yet to see anyone argue that these facts cause us to lose free will or render us incapable of analyzing the potential outcomes of our decisions.)

What exactly it means to "make a decision" is still an open question, but I think the best way to think about it is the act of finding out what your future actions will be. The feeling of having a choice between two options only comes from the fact that we have imperfect information about the universe. Maxwell's demon, with perfect information about all particle locations and speeds, would not have any decisions to make, as it would know exactly what physics would compel it to do.[6] If Alice is a rational agent and is deciding what to do in the prisoner's dilemma, that means that Alice doesn't yet know what the rational choice is, and is performing the computation to figure it out. Once she knows what she's going to do, her decision has been made.

This is not to say that questions about free will are not interesting or meaningful in other ways, just that they're not particularly relevant to decision theory. Any formal decision theory can be implemented on a computer just as much as it can be followed by a human; if you want to say that both have free will, or neither, or only one, go for it. But clearly such a property of "free will" has no effect on which decision maximizes the agent's utility, nor can it interfere with the process of making a decision.

Models of the world are supposed to be useful

Many two-boxers in Newcomb's problem accept that it makes them less money, yet maintain that two-boxing is the rational decision because it's what their theory predicts and/or what their intuition tells them they should do.

You can of course choose to define words however you want. But if you're defining "rational" as "making decisions that lose arbitrary amounts of money", it's clearly not a very useful concept. It also has next to no relation to the normal English meaning of the word "rational", and I'd encourage you to pick a different word to avoid confusion.

I think what's happened here is a sort of streetlight effect. People wanted to formally define and calculate rational (i.e. "effective") decisions, and they invented traditional rational choice theory, or Homo economicus. And for most real-world problems they tested it on, this worked fine! So it became the standard theory of the field, and over time people started thinking about CDT as being synonymous with rational behavior, not just as a model of it.

But it's easy to construct scenarios where it fails. In addition to the ones discussed here, there are many other well-known counterexamples: the dollar auction, the St. Petersburg paradox, the centipede game[7], and more generally all sorts of iterated games where backwards induction leads to a clearly terrible starting decision. (The iterated prisoner's dilemma, the ultimatum game, etc.)

I think causal decision theorists tend to have a bit of a spherical cow approach to these matters, failing to consider whether their theories are actually applicable to the real world. This is why I tried to provide real-life examples and offers to bet real money; to remind people that we're not just trying to create the most elegant mathematical equation, we're trying to actually improve the real world.

When your decision theory requires you to reject basic physics like a deterministic universe... maybe it's time to start looking for a new theory.

I welcome being caused to change my mind

My goal with this article is to serve as a comprehensive explanation of why I don't subscribe to causal decision theory, and operate under the assumption that some other decision theory is the correct one. (I don't know which.)

None of what I'm saying here is at all new, by the way. Evidential decision theory, functional decision theory, and superrationality are all attempts to come up with better systems that do things like cooperate in the prisoner's dilemma.[8] But they're often harder to formalize and harder to calculate, so they haven't really caught on.

I'm sure many people will still disagree with my conclusions; if that's you, I'd love to talk about it! If I explained something poorly or failed to mention something important, I'd like to find out so that I can improve my explanation. And if I'm mistaken, that would be extremely useful information for me to learn.

This isn't just an academic exercise. It's a lot easier to justify altruistic behavior when you realize that doing so makes it more likely that other people who reason in similar ways to you will treat you better in return. People will be less likely to threaten you if you credibly demonstrate that you won't accede to their demands, even if doing so in-the-moment would benefit you. And when you know that increasing the amount of knowledge people have about each other makes them more likely to cooperate with each other, it becomes easier to solve collective action problems.

Most philosophers only interpret the world in various ways. The point, however, is to change it.

[1] Technically they could also implement a mixed strategy, but if you do the math you'll find that expected value is maximized if the probability of cooperation is set to 1.

[2] With the traditional payoffs of 0, 1, 2, or 3 years in jail and the assumption of equal disutility for each of those years, it becomes better to defect once the probability falls below 75%. But this threshold depends on the exact payoffs and the player's utility curve, so that number isn't particularly meaningful.

[3] Seems like an irrational decision.

[4] If a mixed strategy is best, it was still a deterministic algorithm that calculated it to be better than any pure strategy.

[5] Two specific decisions could have the same expected utility, but then the function should just output the set of all such decisions, not pick a random one. Or in an alternative framing, it should output the single decision of "use a random method to pick among all these options". This deterministic version of the decision function always exists and cannot be any less useful than a nondeterministic version, so we may as well only talk about the deterministic ones.

[6] Note, by the way, the similarity here. The original Maxwell's demon existed outside of the real universe, leading to problematic conclusions such as its ability to violate the second law of thermodynamics. Once physicists tried to consider a physically realizable Maxwell's demon, they found that the computation necessary to perform its task would increase entropy by at least as much as it removes from the boxes it has control over. If you refuse to engage with reality by putting your thought experiment outside of it, you shouldn't be surprised when its conclusions are not applicable to reality.

[7] Wikipedia's description of the centipede game is inelegant; I much prefer that in the Stanford Encyclopedia of Philosophy.

[8] In particular I took many of my examples from some articles by Eliezer Yudkowsky, modified to be less idiosyncratic.

Not really disagreeing with anything specific, just pointing out what I think is a common failure mode where people first learn of better decision theories than CDT, say, "aha, now I'll cooperate in the prisoner's dilemma!" and then get defected on. There's still some additional cognitive work required to actually implement a decision theory yourself, which is distinct from both understanding that decision theory, and wanting to implement it. Not claiming you yourself don't already understand all this, but I think it's important as a disclaimer in any piece intended to introduce people previously unfamiliar with decision theories.