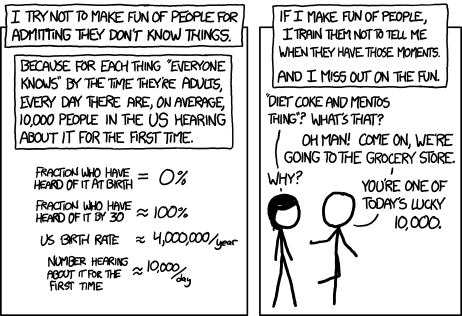

tl;dr: Ask questions about AGI Safety as comments on this post, including ones you might otherwise worry seem dumb!

Asking beginner-level questions can be intimidating, but everyone starts out not knowing anything. If we want more people in the world who understand AGI safety, we need a place where it's accepted and encouraged to ask about the basics.

We'll be putting up monthly FAQ posts as a safe space for people to ask all the possibly-dumb questions that may have been bothering them about the whole AGI Safety discussion, but which until now they didn't feel able to ask.

It's okay to ask uninformed questions, and not worry about having done a careful search before asking.

AISafety.info - Interactive FAQ

Additionally, this will serve as a way to spread the project Rob Miles' volunteer team[1] has been working on: Stampy and his professional-looking face aisafety.info. Once we've got considerably more content[2] this will provide a single point of access into AI Safety, in the form of a comprehensive interactive FAQ with lots of links to the ecosystem. We'll be using questions and answers from this thread for Stampy (under these copyright rules), so please only post if you're okay with that! You can help by adding other people's questions and answers or getting involved in other ways!

We're not at the "send this to all your friends" stage yet; we're just ready to onboard a bunch of editors who will help us get to that stage :)

We welcome feedback[3] and questions on the UI/UX, policies, etc. around Stampy, as well as pull requests to his codebase. You are encouraged to add other people's answers from this thread to Stampy if you think they're good, and collaboratively improve the content that's already on our wiki.

We've got a lot more to write before he's ready for prime time, but we think Stampy can become an excellent resource for everyone from skeptical newcomers, through people who want to learn more, right up to people who are convinced and want to know how they can best help with their skillsets.

Guidelines for Questioners:

- No previous knowledge of AGI safety is required. If you want to watch a few of the Rob Miles videos, or read either the WaitButWhy posts or The Most Important Century summary from OpenPhil's co-CEO first, that's great, but it's not a prerequisite to ask a question.

- Similarly, you do not need to try to find the answer yourself before asking a question (but if you want to test Stampy's in-browser TensorFlow semantic search, that might get you an answer quicker!).

- Also feel free to ask questions that you're pretty sure you know the answer to, but where you'd like to hear how others would answer the question.

- One question per comment if possible (though if you have a set of closely related questions that you want to ask all together that's ok).

- If you have your own response to your own question, put that response as a reply to your original question rather than including it in the question itself.

- Remember, if something is confusing to you, then it's probably confusing to other people as well. If you ask a question and someone gives a good response, then you are likely doing lots of other people a favor!

Guidelines for Answerers:

- Linking to the relevant canonical answer on Stampy is a great way to help people with minimal effort! Improving that answer means that everyone going forward will have a better experience!

- This is a safe space for people to ask stupid questions, so be kind!

- If this post works as intended then it will produce many answers for Stampy's FAQ. It may be worth keeping this in mind as you write your answer. For example, in some cases it might be worth giving a slightly longer / more expansive / more detailed explanation rather than just giving a short response to the specific question asked, in order to address other similar-but-not-precisely-the-same questions that other people might have.

Finally: Please think very carefully before downvoting any questions. Remember, this is the place to ask stupid questions!

[1] If you'd like to join, head over to Rob's Discord and introduce yourself!

[2] We'll be starting a three month paid distillation fellowship in mid February. Feel free to get started on your application early by writing some content!

[3] Via the feedback form.

Sorry for the length.

Before I ask my question, I think it's important to give some background as to why I'm asking it. If you feel that it's not important, you can skip to the last 2 paragraphs. I'm a layman in every sense of the word: at computer science, at AI, let alone AI safety. I am, on the other hand, someone who is very enthusiastic about learning more about AGI and everything related to it. I discovered this forum a while back, and in my small world this is the largest repository of digestible AGI knowledge. I have had some trouble understanding introductory topics because I would have some questions/contentions about a certain topic and never get answers to them after I finished reading the topic. I'm guessing it's assumed knowledge on the part of the writers. So the reason I'm asking this question is to clear up some initial outsider misunderstandings, and after that benefit from the decades of acquired knowledge that this forum has to offer. Here goes.

There is a certain old criticism against GOFAI that goes along these lines: a symbolic expression 'Susan'+'Kitchen'='Susan has gone to get some food' can just as well be replaced by the symbols 'x'+'y'='z'. The point being that simply describing or naming something Susan doesn't capture the idea of Susan, even if the expression works in the real world. That is, 'the idea of Susan' is composed of a specific face, body type, certain sounds, handwriting, a pile of dirty plates in the kitchen, the dog MrFluffles, a rhombus, etc. It is composed of anything that evokes the idea of Susan in a general learning agent, such that if that agent sees an illuminated floating rhombus approaching the kitchen door, the expression's result should still be 'Susan has gone to get some food'. I think for brevity's sake I don't have to write down GOFAI's response to this specific criticism. The important thing to note is that they ultimately failed in their pursuits.

Skip to current times and we have machine learning. It works! There are no other methods that even come close to its results. It's doing things GOFAI pundits couldn't even dream of. It can solve Susan expressions given enough data, capturing 'the idea of Susan' well enough and showing signs of improvement every single year. And if we look at the tone of every AI expert, machine learning is the way to AGI, which means machine-learning-inspired AGI is what AI safety is currently focused on. And that brings me to my contention surrounding the idea of goals and rewards, the stuff that keeps the machine learning engine running.

So, to frame my contention: for simplicity, we make a hypothetical machine learning model with the goal (mathematical expression) stated as:

'Putting'+'Leaves'+'In'+'Basket'='Reward/Good'. Let's name this expression 'PLIBRG' for short.

Now the model will learn the idea of 'Putting' well enough, along with the ideas of the remaining compositional variables, given good data and engineering. But 'PLIBRG' is itself a human idea, the idea being 'Clean the Yard'. I assume we can all agree that no matter how much we change and improve the expression 'PLIBRG', it will never fully represent the idea of 'Clean the Yard'. This to me becomes similar to the Susan problem that GOFAI faced way back. For comparison's sake:

1) 'Susan'+'Kitchen'='Susan has gone to get some food'

is similar to

'Yard'+'Dirty'='Clean the Yard'

2) 'Susan' is currently composed of: a name/a description

is similar to

'Clean the Yard' is currently composed of: the 'PLIBRG' expression

3) 'Susan' should be composed of: a face, body type, certain sounds, MrFluffles, a rhombus

is similar to

'Clean the Yard' should be composed of: no leaves on the grass, no sand on the pavements, put leaves in the bin, fill the bird feeder, avoid leaning on Mr Hick's fence while picking up leaves

The only difference between the two is that machine learning has another, lower level of abstraction. So the very notion of having a system with a goal results in the Susan problem, as the goal, a human-centric idea, has to be described mathematically or algorithmically. A potential solution is to make the model learn the goal itself. But isn't this just kicking the can up the levels of abstraction? How many levels should we go up until the problem disappears? I know that in my own clumsy way I've just described the AI alignment problem. My point being: isn't it the case that if we solve the alignment problem, we could use that solution to solve GOFAI? And if GOFAI previously failed to solve this problem, what are the chances machine learning will?
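To make the gap between 'PLIBRG' and 'Clean the Yard' a bit more concrete, here is a toy sketch in Python. Everything in it is made up for illustration: the `YardState` fields, the `plibrg_reward` function and the `yard_is_clean` check are hypothetical names, not part of any real system, and `yard_is_clean` is itself only a rough, incomplete stand-in for the human idea.

```python
from dataclasses import dataclass


@dataclass
class YardState:
    """Hypothetical snapshot of the yard after the agent has acted."""
    leaves_on_grass: int
    leaves_in_basket: int
    sand_on_pavement: bool
    bird_feeder_full: bool
    leaned_on_fence: bool


def plibrg_reward(state: YardState) -> int:
    """The written-down goal: reward only counts leaves put in the basket."""
    return state.leaves_in_basket


def yard_is_clean(state: YardState) -> bool:
    """A rough (and still incomplete) stand-in for the idea 'Clean the Yard'."""
    return (
        state.leaves_on_grass == 0
        and not state.sand_on_pavement
        and state.bird_feeder_full
        and not state.leaned_on_fence
    )


# An agent that does what the human actually meant.
careful = YardState(leaves_on_grass=0, leaves_in_basket=10,
                    sand_on_pavement=False, bird_feeder_full=True,
                    leaned_on_fence=False)

# An agent that shakes the tree to get more leaves to put in the basket.
tree_shaker = YardState(leaves_on_grass=5, leaves_in_basket=50,
                        sand_on_pavement=True, bird_feeder_full=False,
                        leaned_on_fence=True)

for name, state in [("careful", careful), ("tree_shaker", tree_shaker)]:
    print(name, "reward:", plibrg_reward(state), "clean:", yard_is_clean(state))
```

In this toy setup the tree-shaking agent earns the higher 'PLIBRG' reward while leaving the yard in a worse state, which is the mismatch I am trying to describe. Adding more terms to the reward only moves the same problem into `yard_is_clean`.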

To rebut the obvious response, like 'but humans have goals': in my limited knowledge, I would heavily disagree. Sexual arousal doesn't tell you what to do. Most might alleviate themselves, but some will self-harm, some ignore the urge, some interpret it as a religious test and begin praying/meditating, etc. And there is nothing wrong with doing any of the above in evolutionary terms. In a sense, sexual arousal doesn't tell you to do anything in particular; it just prompts you to do what you usually do when it activates. The idea that our species propagates because of this is, to me, a side effect rather than an intentional goal.

So, given all that I've said above, my question is: why are we putting goals in an AI/AGI when we have never been able to fully describe any 'idea' in programmable terms for the last 60 years? Is it because it's the only currently viable way to achieve AGI? Is it because of advancements in machine learning? Has there been some progress that shows that we can algorithmically describe any human goal? Why is it obvious to everyone else that goals are a necessary building block in AGI? Have I completely lost the plot?

As I said above, I'm a layman. So please point out any misconceptions you find.