What important questions would you want to see discussed and debated here about Anthropic? Suggest and vote below.

(This is the third such poll; see the first and second linked.)

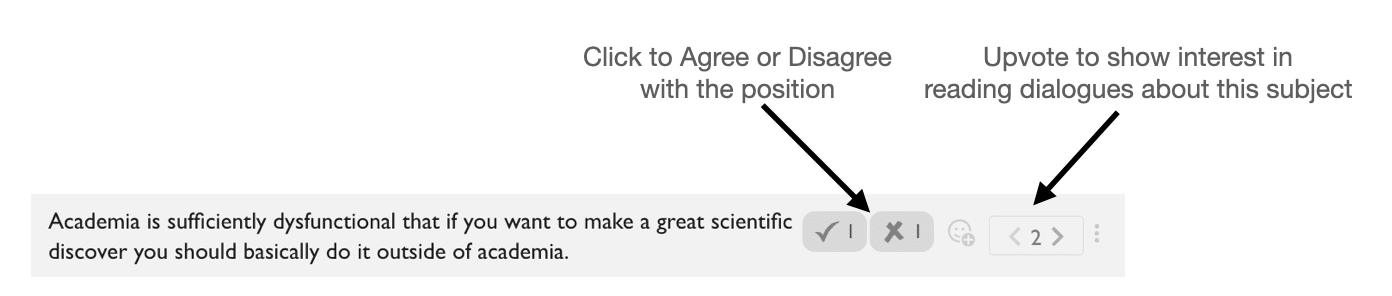

How to use the poll

- Reacts: Click on the agree/disagree reacts to help people see how much disagreement there is on the topic.

- Karma: Upvote positions that you'd like to read discussion about.

- New Poll Option: Add new positions for people to take sides on. Please add the agree/disagree reacts to new poll options you make.

The goal is to show people where a lot of interest and disagreement lies. This can be used to find discussion and dialogue topics in the future.

I would probably be up for dialoguing. I don't think deploying Claude 3 was that dangerous, though I think that's only because the reported benchmark results were misleading (if the gap were as large as advertised, it would be dangerous).

I think Anthropic overall has caused a lot of harm by being one of the primary drivers of an AI capabilities arms race, and by putting heavily distorting incentives on a large fraction of the AI Safety and AI governance ecosystem, but Claude 3 doesn't seem like much of a driver of either of these (on the margin).