What important questions would you want to see discussed and debated here about Anthropic? Suggest and vote below.

(This is the third such poll, see the first and second linked.)

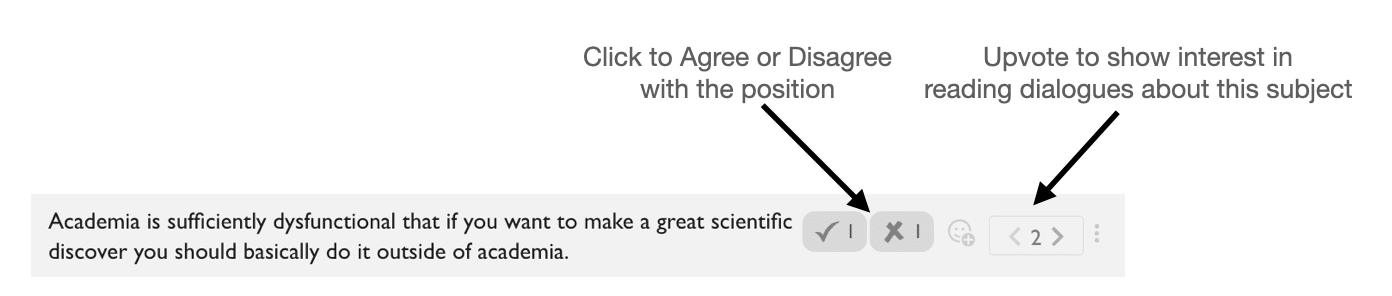

How to use the poll

- Reacts: Click on the agree/disagree reacts to help people see how much disagreement there is on the topic.

- Karma: Upvote positions that you'd like to read discussion about.

- New Poll Option: Add new positions for people to take sides on. Please add the agree/disagree reacts to new poll options you make.

The goal is to show people where a lot of interest and disagreement lies. This can be used to find discussion and dialogue topics in the future.

My Anthropic take, which is sort of replying to this thread between @aysja and @LawrenceC but felt enough of a new topic to just put here.

It seems overwhelmingly the case that Anthropic is trying to walk some kind of line between "seeming like a real profitable AI company that is worth investing in" and "at the very least paying lip service to, and maybe actually taking really seriously, x-risk."

(This all goes for OpenAI too. OpenAI seems much worse on these dimensions to me right now. Anthropic feels more like it has the potential to actually be a good/safe org, in a way that OpenAI feels beyond hope atm, so I'm picking on Anthropic.)

For me, the open, interesting questions are:

Like, it seems like Anthropic is trying to market itself to investors and consumers as "our products are powerful (and safe)", and trying to market itself to AI Safety folk as "we're being responsible as we develop along the frontier." These are naturally in tension.

I think it's plausible (although I am suspicious) that Anthropic's strategy is actually good. I.e., maybe you really do need to iterate on frontier AI to do meaningful safety work, and maybe you do need to stay on the frontier because the world is accelerating whether Anthropic wants it to or not. Maybe pausing now is bad. Maybe this all means you need a lot of money, which means you need investors and consumers to believe your product is good.

But, like, for the AI safety community to be epistemically healthy, we need to have some way of engaging with this question.

I would like to live in a world where it's straightforwardly good to always spell out true things loudly/clearly. I'm not sure I have the luxury of living in that world. I think I need to actually engage with the possibility that it's necessary for Anthropic to murkily say one thing to investors and another thing to AI safety peeps. But, I do not think Anthropic has earned my benefit of the doubt here.

But, the way I wish the conversation was playing out was less like "did Anthropic say a particular misleading thing?" and more like "how should EA/x-risk/safety folk comport themselves, such that they don't have to trust Anthropic? And how should Anthropic comport itself, such that it doesn't have to be running on trust, when it absorbs talent and money from the EA landscape?"

I think it’s pretty unlikely that Anthropic’s murky strategy is good.

In particular, I think that balancing building AGI with building AGI safely only goes well for humanity in a pretty narrow range of worlds. Like, if safety is relatively easy and can roughly keep pace with capabilities, then I think this sort of thing might make sense. But the more the expected world departs from this—the more that you might expect safety to be way behind capabilities, and the more you might expect that it’s hard to notice just how big that gap is and/or how much of...