What important questions would you want to see discussed and debated here about Anthropic? Suggest and vote below.

(This is the third such poll; see the first and second, linked.)

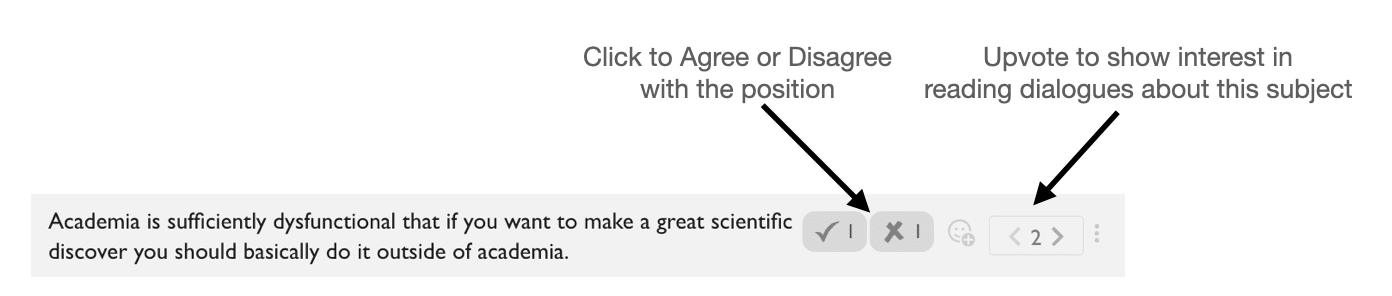

How to use the poll

- Reacts: Click on the agree/disagree reacts to help people see how much disagreement there is on the topic.

- Karma: Upvote positions that you'd like to read discussion about.

- New Poll Option: Add new positions for people to take sides on. Please add the agree/disagree reacts to new poll options you make.

The goal is to show people where a lot of interest and disagreement lies. This can be used to find discussion and dialogue topics in the future.

I think deploying Claude 3 was fine and most AI safety people are confused about the effects of deploying frontier-ish models. I haven't seen anyone else articulate my position recently so I'd probably be down to dialogue. Or maybe I should start by writing a post.

[Edit: this comment got lots of agree-votes and "Deploying Claude 3 increased AI risk" got lots of disagreement so maybe actually everyone agrees it was fine.]

I'm unsure how much we disagree, Zach and Oliver, so I'll try to quantify: I would guess that Claude 3 will move up the release date of OpenAI's next-gen models by at least a few months (my guess is 3 months), which has significant effects on timelines.

Tentatively, I'm thinking that this effect may be superlinear. My model is that each new release increases the speed of development (because of increased investment across the whole value chain, including compute, and because people realize that AI is not like other technologies, etc.), so a few months gained now translates into more than a few months on AGI timelines.