I'm looking for a list such that for each entry on the list we can say "Yep, probably that'll happen by 2040, even conditional on no super-powerful AGI / intelligence explosion / etc." Contrarian opinions are welcome but I'm especially interested in stuff that would be fairly uncontroversial to experts and/or follows from straightforward trend extrapolation. I'm trying to get a sense of what a "business as usual, you'd be a fool not to plan for this" future looks like. ("Plan for" does not mean "count on.")

Here is my tentative list. Please object in the comments if you think anything here probably won't happen by 2040; I'd love to discuss and improve my understanding.

- Energy is 10x cheaper. [EDIT: at least for training and running giant neural nets; I'm less confident about energy for e.g. powering houses, but I still think probably yes.] This is because the cost of solar energy has continued on its multi-decade trend, though it is starting to slow down a bit. Energy storage has advanced as well, smoothing out the bumps. [EDIT: Now I think fusion power will also be contributing, probably. Though it may not be competitive with solar, idk.]

- Compute (of the sort relevant to training neural nets) is 2 OOMs cheaper. Energy is the limiting factor.

- Models 5 OOMs more compute-costly than GPT-3 have been trained; these models are about human brain-sized and also have somewhat better architecture than GPT-3 but nothing radically better. They have much higher-quality data to train on. Overall they are about as much of an improvement over GPT-3 as GPT-3 was over GPT-1.

- There have been 20 years of "prompt programming" now, so loads of apps have been built using it and lots of kinks have been worked out. Any thoughts on what sorts of apps would be up and running by 2040 using the latest models?

- Models merely the size of GPT-3 are now cheap enough to run for free. And they are qualitatively better too, because (a) they were trained to completion rather than with early stopping, (b) they were trained on higher-quality data, (c) various other optimized architectures and whatnot were employed, (d) they were then fine-tuned on loads of data for whatever task is at hand, (e) decades of prompt programming and prompt-SGD have resulted in excellent prompts that fully utilize the model's knowledge, and (f) they even have custom chips specialized to run specific models.

- The biggest models--3 OOMs bigger than GPT-3--are still only a bit more expensive at inference time than GPT-3 was in 2021. Energy is the main cost. Vast solar panel farms power huge datacenters on which these models live, performing computations to serve requests from all around the world during the day when energy is cheapest.

- Some examples of products and services:

- Basically all the apps that people talk about maybe doing with GPT-3 in 2021 have been successfully implemented by now, and work as well as anyone in 2021 hoped. It just took two decades to accomplish (and bigger models!) instead of two years and GPT-3.

- There are now very popular chatbots that are in most ways more engaging and fun to talk to than the average human. There are many of these bots catering to different audiences, and they can be fine-tuned to particular customers. A billion people talk to them daily.

- There are specialized chatbots for various jobs, e.g. customer support.

- There are now excellent predictive tools that can read data about a person, especially text authored by that person, and then make predictions like "probability that they will buy product X" and "probability that they will vote Republican."

- Cars are all BEVs, with comparable range to 2020s gas cars but much lower operating costs due to energy being practically free and maintenance being very easy for BEVs.

- Cars are finally self-driving, with cheap LIDAR sensors and bigger brains trained on way more data, along with many layers of hard-coded tweaks to maximize safety. (Also various regulations that make it easier for them, e.g. by starting with restrictions on what sorts of areas they can operate in, and using big pre-trained models in server farms to make important judgment calls for individual cars and monitor the roads more generally via cameras to look out for anomalies.) (I'm not so sure about this one; part of me wonders if self-driving cars just won't happen on business-as-usual.)

- Starlink internet is fast, reliable, cheap, and covers the entire globe.

- 3D printing is much better and cheaper now. Most cities have at least one "Additive Factory" that can churn out high-quality metal or plastic products in a few hours and deliver them to your door, some assembly required. (They fill up downtime by working on bigger orders to ship to various factories that use 3D-printed components, which is most factories at this point, since there are some components that are best made that way.)

- Drone delivery? I feel confused about this; shouldn't it have happened already? What is the bottleneck? This article makes it seem like the bottleneck is FAA regulation. [EDIT: I talked to an Amazon drone delivery guy recently. He said 95% of the job is trying to figure out how to improve safety to meet regulatory requirements. He said they have trouble using neural nets for vision because they aren't interpretable, so you can't prove anything about their safety properties.]

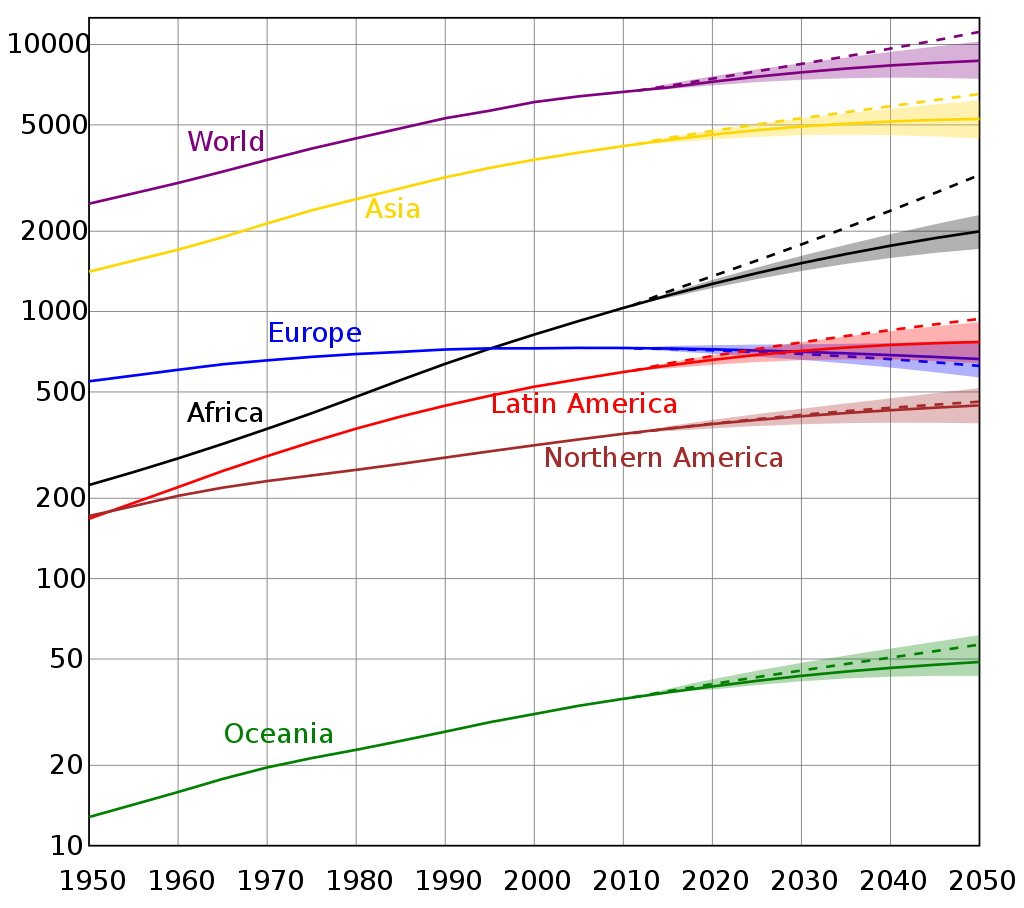

- World GDP is a bit less than twice what it is now. Poverty is lower but not eliminated.

- Boring company? Neuralink? I'm not sure what to think of them. I guess I'll ignore them for now, though I do feel like probably at least one of them will be a big deal...

- Starship or something similar is operational and working more or less according to specs promised in 2020. Maybe point-to-point transport on Earth didn't work out, maybe the cost per kilo to LEO never got quite as low as $15, but still it's gotta be pretty low--maybe $50? (For comparison, it's currently about $1000 and five years ago was $5000.) Thus, Elon probably gets his colony on Mars after all, and NASA gets their moon base, and there's probably a big space station too and maybe some asteroid mining operations?

- Video games now employ deep neural nets in a variety of ways. Language model chatbots give NPCs personality; RL-trained agents make bots challenging and complex; and perhaps most of all, vision models process the wireframe video game worlds into photorealistic graphics. Perhaps you need to buy specialized AI chips to enjoy these things, like people buy specialized graphics cards today.

- Virtual reality is now commonplace; most people have one or two headsets just like they have phones, laptops, etc. today. The headsets are low weight and high-definition compared to 2021's. Many people use them for work, and many more people use them for games and socializing.

- The military technology outlined here exists, though it hasn't been used in a major war because there hasn't been a major war, and as a result the actual composition of most major militaries still looks pretty traditional (tanks, aircraft carriers, etc.). It's been used in various proxy wars and civil wars though, and it's becoming increasingly apparent that the old tech is obsolete.

- Household robots. Today Spot Mini costs $74,500. In 2040 you'll be able to buy a robot that can load and unload a dishwasher, go up and down stairs, open and close doors, and do various other similar tasks, for less than $50,000. (Maybe as low as $7,500?) That's not to say that many people will buy such robots; they might still be expensive enough and finicky enough to be mostly toys for rich people.
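
Several items on the list are really claims about compound rates, so it may help to see what yearly change each one implies. Below is a rough sketch, not a forecast model. It assumes a 2021 baseline and therefore a 19-year horizon (`YEARS`), it treats "a bit less than twice" GDP as exactly 2x (so that rate is an upper bound), and it uses the optimistic $7,500 figure for the robot. The function and variable names are just illustrative.

```python
def implied_annual_rate(start: float, end: float, years: float) -> float:
    """Constant yearly rate of change that takes `start` to `end` over `years` years."""
    return (end / start) ** (1.0 / years) - 1.0


YEARS = 19  # assumption: 2021 baseline, 2040 target

# (start, end) pairs taken from the list above; ratios are normalized to 1.0.
TARGETS = {
    "energy": (1.0, 0.1),        # 10x cheaper
    "compute": (1.0, 0.01),      # 2 OOMs cheaper
    "gdp": (1.0, 2.0),           # "a bit less than twice", so 2x is an upper bound
    "launch": (1000.0, 50.0),    # $/kg to LEO: about $1000 now, maybe $50 in 2040
    "robot": (74500.0, 7500.0),  # Spot Mini today vs. the optimistic 2040 price
}


def summarize(years: float = YEARS) -> dict:
    """Implied yearly rate of change for every target, keyed by name."""
    return {
        name: implied_annual_rate(start, end, years)
        for name, (start, end) in TARGETS.items()
    }


# The launch-cost bullet also gives a recent data point: $5000 -> $1000 in five years.
recent_launch_rate = implied_annual_rate(5000.0, 1000.0, 5)

if __name__ == "__main__":
    for name, rate in summarize().items():
        print(f"{name:8s} {rate:+.1%} per year")
    print(f"launch (last five years) {recent_launch_rate:+.1%} per year")
```

Under these assumptions, the energy and robot targets each need prices to fall by roughly 11% per year, compute by a bit over 20% per year, and GDP to grow by no more than about 3.7% per year. The $50/kg launch figure needs about a 15% yearly decline, which is slower than the roughly 27% yearly decline implied by the $5000 to $1000 drop quoted in the same bullet.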

My list is focused on technology because that's what I happened to think about a bunch, but I'd be very interested to hear other predictions (e.g. geopolitical and cultural) as well.

AI-written books will be sold on Amazon, and people will buy them. Specialty services will write books on demand based on customer specifications. At least one group, and probably several, will make real money in erotica this way. The market for hand-written mass-market fiction, especially low-status stuff like genre fiction and thrillers, will contract radically.

By this point, academia may have begun shifting away from papers as a unit of information transmission, and away from manual editing. Some combination of NLP and standardized ontologies may replace them, a shift which is already underway.