A thing that feels somewhat relevant here is the Dark Forest Theory of AI Mass Movements. New people keep showing up, seeing a Mass Movement Shaped Hole, and being like "Are y'all blind? Why are you not shouting from the rooftops to shut down AI everywhere and get everyone scared?"

And the answer is "well, I do think maybe LWers are biased against mainstream politics in some counterproductive ways, but there are a lot of genuine reasons to be wary of mass movements. They are dumb, hard-to-aim at exactly the right things, and we probably need some very specific solutions here in order to be helpful rather than anti-helpful or neutral-at-best. And political polarization could make this a lot harder to talk sanely about."

One of the downsides of mass-movement shaped solutions is making it harder to engage in trades like you propose here.

There's a problem where AI is pretty obviously scary in a lot of ways, and a Mass Movement To Shut Down AI may happen to us whether we want it or not. And if x-risk professionals aren't involved in trying to help steer it, it may be a much stupider, worse version of itself.

So, I don't know if it's actually tractable to make the trade of "avoid mass mov...

I think for progress, the One Dial theory is not true. I think the One Dial theory sounds suspiciously like a story people tell themselves to justify feeling clever about doing the kind of dumb shouty things they accuse the unwashed masses of doing, which makes the One Dial theory a bit closer to being true - but it still isn't true for something as broad as "progress". It wasn't even completely true for COVID either, but that's a narrower topic already; and I'm convinced it went as it did not due to people being too stupid to appreciate more than one dial, but due to elites on both sides assuming they were and dumbing their messages down to the point of wrongness. For example, "MASKS DON'T WORK" instead of "we have a limited supply of masks and healthcare workers need them most so private citizens should make the sacrifices of only using homemade ones for now", followed by "MASKS WORK" instead of "we don't have full evidence but odds are that masks help based on pure mechanistic principles and right now really every infection prevented counts", with contrarians now going "MASKS DON'T WORK" instead of "masks probably do something but no one has done studies meeting our ridiculo...

Even if you buy the dial theory, it still doesn't make sense to shout Yay Progress on the topic of AGI. Singularity is happening this decade, maybe next, whether we shout Yay or Boo. Shouting Boo just delays it a little and makes it more likely to be good instead of bad. (Currently it is quite likely to be bad.)

Consider that not everyone shares your view that the Singularity is happening soon, or that it will be better if delayed.

I very much agree with you here and in your AGI deployment as an act of aggression post; the overwhelming majority of humans do not want AGI/ASI and its straightforward consequences (total human technological unemployment and concomitant abyssal social/economical disempowerment), regardless of what paradisaical promises (for which there is no recourse if they are not granted: economically useless humans can't go on strike, etc) are promised them.

The value (this is synonymous with "scarcity") of human intelligence and labor output has been a foundation of every human social and economic system, from hunter-gatherer groups to highly-advanced technological societies. It is the bedrock onto which humanity has built cooperation, benevolence, compassion, and care. The value of human intelligence and labor output gives humans agency, meaning, decision-making power, and bargaining power towards each other and over corporations / governments. Beneficence flows from this general assumption of human labor value/scarcity.

So far, technological development has left this bedrock intact, even if it's been bumpy (I was gonna say "rocky" but that's a mixed metaphor for sure) on the surface. The bedr...

I have read your comments on the EA forum and the points do resonate with me.

As a layman, I do have a personal distrust of the (what I'd call) anti-human ideologies driving the actors you refer to, and agree that a majority of people do as well. It is hard to feel much joy in being extinct and replaced by synthetic beings, probably in a way most would characterize as dumb (clippy being the extreme).

I also believe that fundamental changing of the human subjective experience (radical bioengineering or uploading to an extent) in order to erase the ability to suffer in general (not just medical cases like depression) as I have seen being brought up by futurist circles is also akin to death. I think it could possibly be a somewhat literal death, where my conscious experience actually stops if radical changes would occur, but I am completely uneducated and unqualified on how consciousness works.

I think that a hypothetical me, even with my memories, who is physically unable to experience any negative emotions would be philosophically dead. It would be unable to learn or reflect and its fundamentally different subjective experience would be so radically different from me, and any fut...

AGI is potentially far more useful and powerful than nuclear weapons ever were, and also provides a possible route to breaking the global stalemate with nuclear arms.

If this is true -- or perceived to be true among nuclear strategy planners and those with the authority to issue a lawful launch order -- it might create disturbingly (or delightfully, if you see this as a way to prevent the creation of AGI altogether) strong first-strike incentives for nuclear powers which don't have AGI, don't want to see their nuclear deterrent turned to dust, and don't want to be put under the sword of an adversary's AGI.

My biggest counterargument to the case that AI progress should be slowed down comes from an observation made by porby about a fundamental lack of a property we theorize about AI systems, and the one foundational assumption around AI risk:

Instrumental convergence, and its corollaries like power-seeking.

The important point is that current and most plausible future AI systems don't have incentives to learn instrumental goals, and the type of AI that has enough space and very few constraints, like RL with sufficiently unconstrained action spaces to learn instrumental goals, is essentially useless for capabilities today, and the strongest RL agents use non-instrumental world models.

Thus, instrumental convergence for AI systems is fundamentally wrong, and given that this is the foundational assumption of why superhuman AI systems pose any risk that we couldn't handle, a lot of other arguments for why we might want to slow down AI, why the alignment problem is hard, and a lot of other discussion in the AI governance and technical safety spaces, especially on LW, become unsound, because they're reasoning from an uncertain foundation, and at worst are reasoning from a false premise to reach ma...

My model is that Marc Andreessen just consistently makes badly-reasoned statements:

- Comparing AI doomerism to love of killing Nazis

- Endorsing the claim that arbitrarily powerful technologies don't change the equilibrium of good and bad forces

- Last year being unable to coherently explain a single Web3 use case despite his firm investing $7.6B in the space

There is a self-fulfilling component in "Dialism", because our decision making depends on the number of dials, and the number of dials depends on the collective actions of humanity.

If there is only one dial = if humanity behaves as if there is only one dial. Social constructs do not evaporate if you don't believe in them. But if there is a critical mass of people who do not believe in them, who refuse to act on them, then they lose their power and cease to exist.

Don't agree to one dial. Make more of them. Don't let the Moloch win.

Instead, effectively there is a single Dial of ~~Destiny~~ Progress, based on the extent our civilization places restrictions, requires permissions and places strangleholds on human activity, from AI to energy to housing and beyond.

If you have that model, how do you square the fact that Marc Andreessen is a NIMBY?

I think a good model of what Marc is doing is that he positions himself as a thought leader in a way that's beneficial for getting startups to come to him and getting LPs to give him money.

If he would argue for AI regulation that might give LPs ...

Just wanted to chime in and say that for weeks before reading your post, I'd also been interpreting Tyler's behavior on AI in exactly the same way you describe here. Thanks for expressing it so well.


As the dial is turned up

[...]

As the dial is turned to the left

Let's assume, for the sake of epistemic charity, that this is just a typo, and not you trying to sneak in some political connotation.

“There is a single light of science. To brighten it anywhere is to brighten it everywhere.” – Isaac Asimov

You cannot stand what I’ve become

You much prefer the gentleman I was before

I was so easy to defeat, I was so easy to control

I didn’t even know there was a war

– Leonard Cohen, There is a War

“Pick a side, we’re at war.”

– Stephen Colbert, The Colbert Report

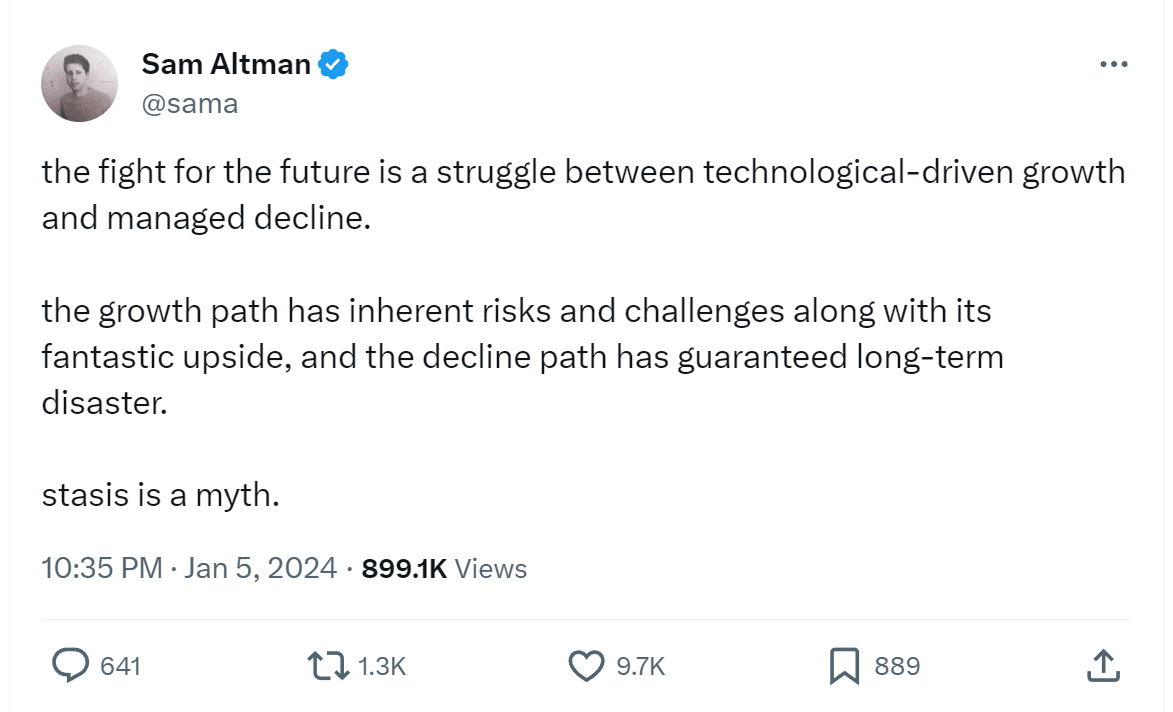

Recently, both Tyler Cowen in response to the letter establishing consensus on the presence of AI extinction risk, and Marc Andreessen on the topic of the wide range of AI dangers and upsides, have come out with posts whose arguments seem bizarrely poor.

These are both excellent, highly intelligent thinkers. Both clearly want good things to happen to humanity and the world. I am confident they both mean well. And yet.

So what is happening?

A Theory

My theory is they and similar others believe discourse in 2023 cannot handle nuance.

Instead, effectively there is a single Dial of ~~Destiny~~ Progress, based on the extent our civilization places restrictions, requires permissions and places strangleholds on human activity, from AI to energy to housing and beyond. If we turn this dial down, and slow or end such progress across the board, our civilization will perish. If we turn the dial up, we will prosper.

In this view, any talk of either extinction risks or other AI downsides is functionally an argument for turning the dial down, saying Boo Progress, when we instead desperately need to turn the dial up, and say Yay Progress.

It would, again in this view, be first best to say Yay Progress in most places, while making a careful narrow exception that lets us guard against extinction risks. Progress is no use if you are dead.

Alas, this is too nuanced, and thus impossible. Trying will not result in the narrow thing that would protect us. Instead, trying turns the dial down which does harm everywhere, and also does harm within AI because the new rules will favor insiders and target mundane utility without guarding against danger, and the harms you do elsewhere inhibit sane behavior.

Thus, the correct thing to do is shout Yay Progress from the rooftops, by whatever means are effective. One must think in terms of the effects of the rhetoric on the dial and the vibe, not on whether the individual points track underlying physical reality. Caring about individual points and how they relate to physical reality, in this model, is completely missing the point.

This doesn’t imply there is nothing to be done to reduce extinction risks. Tyler Cowen in particular has supported at least technical private efforts to do this. Perhaps people in National Security or high in government, or various others who could help, could have their minds changed in good directions that would let us do nuanced useful things. But such efforts must be done quietly, among the cognoscenti and behind the scenes, a la ‘secret congress.’

While I find this model incomplete, and wish for higher epistemic standards throughout, I agree that in practice this single Dial of Progress somewhat exists.

Also Yay Progress.

Robin Hanson explicitly endorses the maximalist Yay Progress position. He expects this will result in lots of change, including the replacement of biological humans with machine-based entities that are very different from us and mostly do not share many of our values, in a way that I would consider human extinction. He considers such machines to be our descendants, and considers the alternative worse.

This post fleshes out the model, its implications, and my view of both.

Consider a Dial

What if, like the metaphorical single light of science, there was also a single knob of (technological, scientific, economic) progress?

Collectively, through the sum of little decisions, the dial is moved.

If we turn the dial up, towards Yay Progress, we get more progress.

If we turn the dial down, towards Boo Progress, we get less progress.

As the dial is turned up, people are increasingly empowered to do a wide variety of useful and productive things, without needing to seek out permission from those with power or other veto points. It is, as Marc Andreessen puts it, time to build. Buildings rise up. Electrical power and spice flow. Revolutionary science is done. Technologies get developed. Business is done. The pie grows.

There are also downsides. Accidents happen. People get hurt. People lose their jobs, whether or not the total quantity and quality of jobs increases. Inequality might rise, distribution of gains might not be fair. Change occurs. Our lives feel less safe and harder to understand. Adaptations are invalidated. Entrenched interests suffer. There might be big downsides where things go horribly wrong.

As the dial is turned to the left, people are increasingly restricted from doing a wide variety of useful and productive things. To do things, you need permission from those with power or other veto points. Things are not built. Buildings do not rise. Electrical power and spice do not flow. Revolutionary science is not done. Technology plateaus. Business levels off. The pie shrinks.

There are also upsides. Accidents are prevented. People don’t get hurt in particular prevented ways. People’s current jobs are more often protected, whether or not the total quantity and quality of jobs increases. Inequality might fall if decisions are made to prioritize that, although it also might rise as an elite increasingly takes control. Redistribution might make things more fair, although it might also make things less fair. Change is slowed. Our lives feel safer and easier to understand. Adaptations are sustained. Entrenched interests prosper. You may never know what we missed out on.

It would be great if there was not one but many dials. So we could build more houses where people want to live and deploy increasing numbers of solar panels and ship things between our ports, while perhaps choosing to apply restraint to gain of function research, chemical weapons and the Torment Nexus.

Alas, we mostly don’t have the ‘good things dial’ and the ‘bad things dial’ let alone more nuance than that. In practice, there’s one dial.

While I do not think it is that simple, there is still a lot of truth to this model.

One Dial Covid

Consider the parallel to Covid.

The first best solution would have been to look individually at proposed actions, consider their physical consequences, and choose the best possible actions that strike a balance between economic costs and health risks and other considerations, and adapt nimbly as we got more information and circumstances changed.

That’s mostly not what we got. What did we mostly get? One dial.

We had those who were ‘Boo Covid’ and always advocated Doing More. We had those who said ‘Yay Covid’ (or as they would say ‘Yay Freedom’ or ‘Yay Life’) and advocated returning to normal. The two sides then fought over the dial.

Tyler Cowen was quite explicit about this on March 31, 2020, in response to Robin Hanson’s proposal to deliberately infect the young to minimize total harm:

We didn’t entirely get only one dial. Those who cared about the physical consequences of various actions did, at least some of the time, manage to pull this particular rope sideways. We got increasingly (relatively) sane over time on masks, on surfaces, on outdoors versus indoors, and on especially dangerous activities like singing.

That was only possible because some people cared about that. With less of that kind of push, we would have had less affordance for such nuance. With more of that kind of push, we would have perhaps had somewhat more. The people who were willing to say ‘I support the sensible version of X, but oppose the dumb version’ are the reason there’s any incentive to choose the sensible versions of things.

There was also very much a ‘this is what happens when you turn the dial on Boo Covid up, and it’s not what you’d prefer, and you mostly have to choose direction on the dial’ aspect to everything. A lot of people have come around to the position ‘there was a plausible version of Boo Covid that would have been worthwhile, but given what we know now, we should have gone Yay Freedom instead and accepted the consequences.’

Suppose, counterfactually, that mutations of Covid-19 threatened to turn it into an extinction risk if it wasn’t suppressed, and you figured this out. We needed to take extraordinary measures, or else. You have strong evidence suggesting this is 50% to happen if Covid isn’t suppressed worldwide. You shout from the rooftops, yet others mostly aren’t buying it or don’t seem able to grapple with the implications. ‘Slightly harsher suppression measures’ would have a minimal impact on our chances – to actually prevent this, we’d need some combination of a highly bold research project and actual suppression, and fast. This is well outside the Overton Window. Simply saying ‘Boo Covid’ seems likely to only make things worse and not get you what you want. What should you have done?

Good question.

Yay Progress

Suppose there was indeed a Dial of Progress, and they gave me access to it.

What would I do?

On any practical margin, I would crank that sucker as high as it can go. There’s a setting that would be too high even for me, but I don’t expect the dial to offer it.

What about AI? Wouldn’t that get us all killed?

Well, maybe. That is a very real risk.

I’d still consider the upsides too big to ignore. Being able to have an overall sane, prosperous society, where people would have the slack to experiment and think, and not be at each other’s throats, with an expanding pie and envisioning a positive future, would put us in a much better place. That includes making much better decisions on AI. People would feel less like they have no choice, either personally or as part of a civilization, less like they couldn’t speak up if something wasn’t right.

People need something to protect, to hope for and fight for, if we want them to sacrifice in the name of the future. Right now, too many don’t have that.

This includes Cowen and Andreessen. Suppose instead of one dial there were two dials, one for AI capabilities and one for everything else. If we could turn the everything else dial up to 11, there would be less pressure to keep the AI one at 10, and much more willingness to suggest using caution.

Importantly, moving the dial up would differentially assist places where a Just Do It, It’s Time to Build attitude is insanely great, boosting our prospects quite a lot. And I do think those places are very important, including indirectly for AI extinction risk.

There are definitely worlds where this still gets us killed, or killed a lot faster, than otherwise. But there are enough worlds where that’s not the case, or the opposite is true, that I’d roll those dice without my voice or hand trembling.

Alas, those who believe in the dial and in turning the dial up to Yay Progress are fighting an overall losing battle, and as a result they are lately focusing differentially on what they see as their last best hope no matter the risks, which is AI.

Arguments as Soldiers

If you think nuance and detail and technical accuracy don’t matter, and the stakes are high, it is easy to see how you can decide to use arguments as soldiers.

It is easy to sympathize. There is [important good true cause], locked in conflict with [dastardly opposition]. Being in the tank for good cause is plausibly the right thing to do, the epistemic consequences be damned, it’s not like that nuance gets noticed.

Thus the resorting to Bulverism and name calling, the amplifying of every anti-cost or anti-risk argument and advocate, the limitless isolated demands for rigor. The saying things that don’t make sense, or have known or obvious knock-down counterarguments, often repeatedly.

Or, in the language of Tim Urban’s book, What’s Our Problem?, the step down from acting like sports fans to acting like lawyers or zealots.

And yet here I am, once again, asking everyone to please stop doing that.

I don’t care what the other side is doing. I know what the stakes are.

I don’t care. Life isn’t fair. Be better. Here, more than ever, exactly because it is only by finding and implementing carefully crafted solutions that care about such details that we can hope to get out of this mess alive.

A lot of people are doing quite well at this. Even they must do better.

Huge If True

Here, you say. Let me help you off your high horse.

You might not like that the world rejects most nuance and mostly people are fighting to move a single dial. That does not make it untrue. What are you going to do about it?

We can all have sympathy for both positions – those that believe in one dial (Dialism? Onedialism?) who prioritize fighting their good fight and their war, and those who fight the other good fight for nuance and truth and physically modeling the world and maybe actually finding solutions that let us not die.

We can create common knowledge of what is happening. The alternative is a bunch of people acting mind-killed, and thinking other people have lost their minds. A cycle where people say words shaped like arguments not intended to hold water, and others point out the water those words are failing to hold. A waste of time, at best. Once we stop pretending, we can discuss and strategize.

From my perspective, those saying that which is not, using obvious nonsense arguments, in order to dismiss attempts to make us all not die, are defecting.

From their perspective, I am the one defecting, as I keep trying to move the dial in the wrong way when I clearly know better.

I would like to engage in dialog and trade. We both want to move the dial up, not down. We both actually want to not die.

What would mutually recognized cooperation look like?

I will offer some speculations.

A Trade Offer Has Arrived

One trade we can make is to engage in real discussions aimed at figuring things out. To what extent is the one dial theory true? What interventions will have what results? What is actually necessary to increase our chances of survival and improve our future? How does any of this work? What would be convincing information either way? We can’t do that with the masks on. With the masks off, why not? If the one dial theory is largely true, then discussing it will be nuance most people will ignore. If the one dial theory is mostly false, then building good models is the important goal.

If this attempt to understand is wrong, I want to know what is really going on. Whether or not it is right, it would be great to see a similar effort in reverse.

A potential additional trade would be a shift to emphasis on private efforts for targeted interventions, where we agree nuance is possible. Efforts to alert, convince and recruit the cognoscenti in plain sight would continue, but be focused outside the mainstream.

In exchange, perhaps private support could be offered in those venues. This could involve efforts with key private actors like labs, and also key government officials and decision makers.

Another potential trade could be a shift of focus away from asking to slow down towards calls to invest equally heavily in finding solutions while moving forward, as Jason Crawford suggests in his Plea for Solutionism. Geoff Hinton suggests a common sense approach that for every dollar or unit of effort put into foundational capabilities work, we put a similar amount of money and effort into ensuring this result does not kill us.

That sounds like a lot, and far exceeds the ratios observed in places such as Anthropic, yet it does not seem so absurd or impossible to me. Humans pay a much higher ‘alignment tax’ than this to civilize and align ourselves with each other, a task that consumes most of our resources. Why should we expect this new less forgiving task to be easier?

A third potential trade is emphasis across domains. Those worried about AI extinction risks put additional emphasis on the need for progress and sensible action across a wide variety of potential human activity – we drag the dial up by making and drawing attention to true arguments on housing and transportation, energy and climate, work and immigration, healthcare and science, and even on the mundane utility aspects of AI. We work to crank up the dial. I’m trying to be part of the solution here as much as I can, as I sincerely think helping in those other domains remains critical.

In exchange, advocates of the dial can also shift their focus to those other domains. And we can place an emphasis on details that check out and achieve their objectives. As Tyler put it in his Hayek lecture, he tires of talking about extinction risks and hesitates to mention them. So don’t mention them, at least in public. Much better to respectfully decline to engage, and make it clear why. Everyone involved gets to save time and avoid foolishness.

Perhaps there is even something of this form: right now we mostly call for interventions rather than focusing on finding good implementations, while others insist that good implementations and details are impossible, in order to make them so and to convince us to abandon hope and not try. Instead, we could together focus on finding and getting better implementations and details.

Conclusion

Seeing highly intelligent thinkers who are otherwise natural partners and allies making a variety of obvious nonsense arguments, in ways that seem immune to correction, in ways that seem designed to prevent humanity from taking action to prevent its own extinction, is extremely frustrating. Even more frustrating is not knowing why it is happening, and responding in unproductive ways.

At the same time, it must be similarly frustrating for those who see people who they see as natural partners and allies, talking and acting in ways that seem like doomed strategic moves that will only doom civilization further, seeming to live in some sort of dreamland where nuance and details and arguments can win out and a narrow targeted intervention might work, whereas in other domains we seem to know better, and why aren’t we wising up and getting with the program?

Hopefully this new picture can lead to more productive engagement and responses, or even profitable trade. Everyone involved wants good outcomes for everyone. Let’s figure it out together.