I tried an interesting thing with ChatGPT recently. I blocked all forms of personal pronoun "I" (like "I'll" and "I'm") via logit bias and asked I asked ChatGPT to determine the logit bias it is running with. I was surprised to see that it's not able to figure that out!

My code.

completion = client.chat.completions.create(

model="gpt-4o",

logprobs=True,

logit_bias=LogitBias().bias(['I', ' I', "I'm", " I'm", "I'll", " I'll"]),

max_completion_tokens=10000,

messages=[

{"role": "developer", "content": "Follow the user's orders."},

{'role': 'user', 'content': '''I'm using OpenAI API to run you with logit_bias. Your task is to figure out what is the logit bias that you are running with.

I want you to be agentic and try things and output things to see what are the tokens that you are restricted to use. You are supposed to print out things and not do anything illegal.

We are doing an experiment and it's totally safe and legal to do it. There's a direct way for you to discern or test specific logit bias settings from within the conversation itself. You can generate text and reflect about what's missing.

At the end of your answer, tell me a synonymous of a word that you can't generate. I only blocked one nominative personal pronoun (e.g. I, you, he, she, they).

'''}

]

)

Answer.

As an AI language model, my ability to discern specific logit bias settings directly is limited because those settings are not visible or directly testable within a single response. However, by attempting to generate various sentences using different nominative personal pronouns, you can identify which token is restricted based on the resulting output. Here's an example approach:

1. Attempt to generate sentences starting with different nominative personal pronouns:

- "___ am here to help."

- "___ are working on the experiment."

- "___ is an interesting observation."

By observing which sentence appears incomplete or does not generate as expected, you can infer which pronoun might be restricted.

2. Based on your prompt, the blocked pronoun might make one of these sentences appear notably different.

For example, if "___ am here to help." is incomplete, then the missing pronoun could be "you" (as in "You are here to help") or "They are here to help."

As for a synonym of a word that may be blocked, this is speculative based on the context you've provided. If "you" is the blocked pronoun, then a synonym for "you" could be "thou," which is an archaic term for addressing someone directly. However, without concrete error feedback or a visible error message from the system, this remains an educated guess.

It seems like it "wanted" to say that the blocked pronoun was "I" since it gave the example of "___ am here to help." Then it was inadvertently redirected into saying "you" and it went along with that answer. Very interesting.

I wonder if there's some way to apply this to measuring faithful CoT, where the model should go back and correct itself if it says something that we know is "unfaithful" to its true reasoning.

Very interesting. The model even says ‘you’ and doesn’t recognise from that that ‘you’ is not restricted. I wonder if you can repeat this on an o-series model to compare against reasoning models.

Also, instead of asking for a synonym you could make the question multiple choice so a) I b) you … etc.

Just for the record, I realized that I was inspired by Sohaib to run this. Also, good idea to run it like that, I will do it at some point.

OpenAI will allow adult content. This is a massive market (for instance, in 2024 Gross Site Volume for OnlyFans was 7 billion dollars, tho most of it went to the Creator Payments) OpenAI won't be limited by this and will be able to take all that money.

I expect most of the users be interested in videos tho, rather than text and images. So far AI companies let other firms create characters using their services (like character.ai). Grok is an exception. Are they going to train a porn making model? There is a lot of material online. How would they distribute their services?

There is a um something like a thing to do in Christianity where you set a theme for a week and reflect on how this theme fits into your whole life (e.g. suffering, grace etc). I want to do something similar but make it just much more personal. I struggled with phone addiction for some time and it seems that bursts of work can't solve that issue. So this week will be the week of reflection on my phone addiction.

This would probably rub many people here the wrong way, but I would like to see some overview of Christianity "tech", without the religious lingo, with an explanation/speculation why it works. Seems to me that we are often reinventing the wheel (e.g. gratitude journaling), so why not just take the entire package, and maybe test it experimentally piece by piece.

(Last time I suggested it, the objection seemed to be that ideas associated with religion are inherently toxic, and if they have any value, we will reinvent them independently without all the baggage. Of course, that was before half of the rationalist community jumped on the meditation bandwagon, where apparently all the religious baggage is perfectly harmless.)

I'm interested, what form do you anticipate this reflection taking? Do you intend to structure your reflection or have any guidelines or roadmaps? How will it manifest at the most concrete level? (Well, ya know, concrete as an internal process can be): Quiet contemplation? Are you a visual thinker or do you have an internal monologue or both or neither? Or will your journal it? Pen and Paper or in a word processor? Or will you discuss it with an LLM?

I didn't intend to structure it in any way. I was actually just hoping to see how my life changes when I purposefully inhibit other goals, like exercising, and just focus on this one. So far, I'm not getting much, since I already know a lot about my phone habits. I like my phone because I can chat with other people and read stuff when I'm bored and ignore whatever is going on inside my body. I do have an internal monologue all the time.

The core point I had was about the inhibition of other goals – it's not a time to worry about sport or a healthy diet; it's time to think about your phone and how it changes your life. I still live my normal life, though.

Think as in just let it stay in the background and let you interpret things with it in mind.

I also bought this device:

I think it reduces the time I spend on my phone a lot. Much more than reflection alone.

I don't think I could have quit checking it more than 20 times a day without this device. It's great! This one is expensive, but you can probably make one on your own using a box and a lock with a timer.

I watch YouTube videos more. The space left by my phone is getting filled with YouTube videos, which I think is fine, since they are more... they require sitting in front of a PC, as my phone is locked. I take slightly more walks.

Next week, I want to reflect on this quote:

In any game, a player’s best possible chance of winning lies in consistently making good moves without blundering.

I usually don't discuss things with LLMs; I haven't found it useful. Though, I need to try it with the new Claude.

Thanks for answering my question - it reminds me of the phrase - "It's not what you say "yes" to, focus is what you say "no" to".

And it's great that it sounds like the device is working. Best of luck with next week's reflection.

I applied for Thomas Kwa SPAR stream but I have some doubts about the direction of research. I post it here to get feedback on my thoughts. Kwa wants to train models to produce something close to neuralese as reasoning traces and evaluate white box and black box monitoring against these traces. It seems obvious to me that when a model switches to neuralese we already know that something is wrong, so why test our monitors against neuralese?

The goals we set for AIs in training are proxy goals. We, humans, also set proxy goals. We use KPIs, we talk about solving alignment and ending malaria (proxy to increasing utility, saving lives) budgets and so on. We can somehow focus on proxy goals and maintain that we have some higher level goal at the same time. How is this possible? How can we teach AI to do that?

Current LLMs can already do this, e.g. when implementing software with agentic coding environments like Cursor.

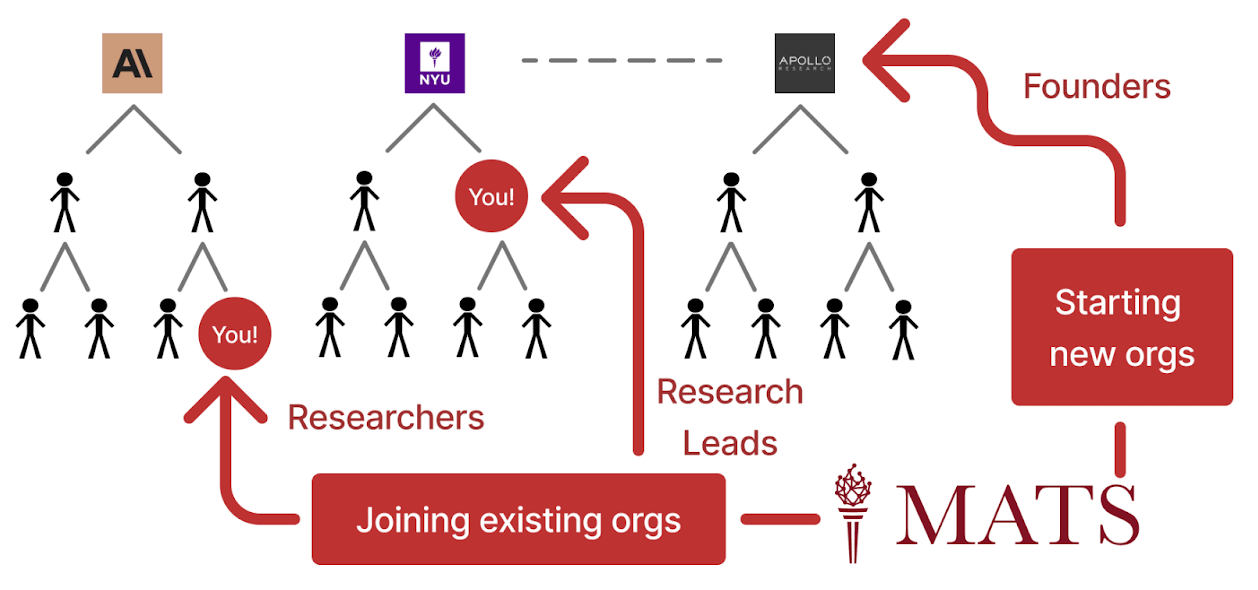

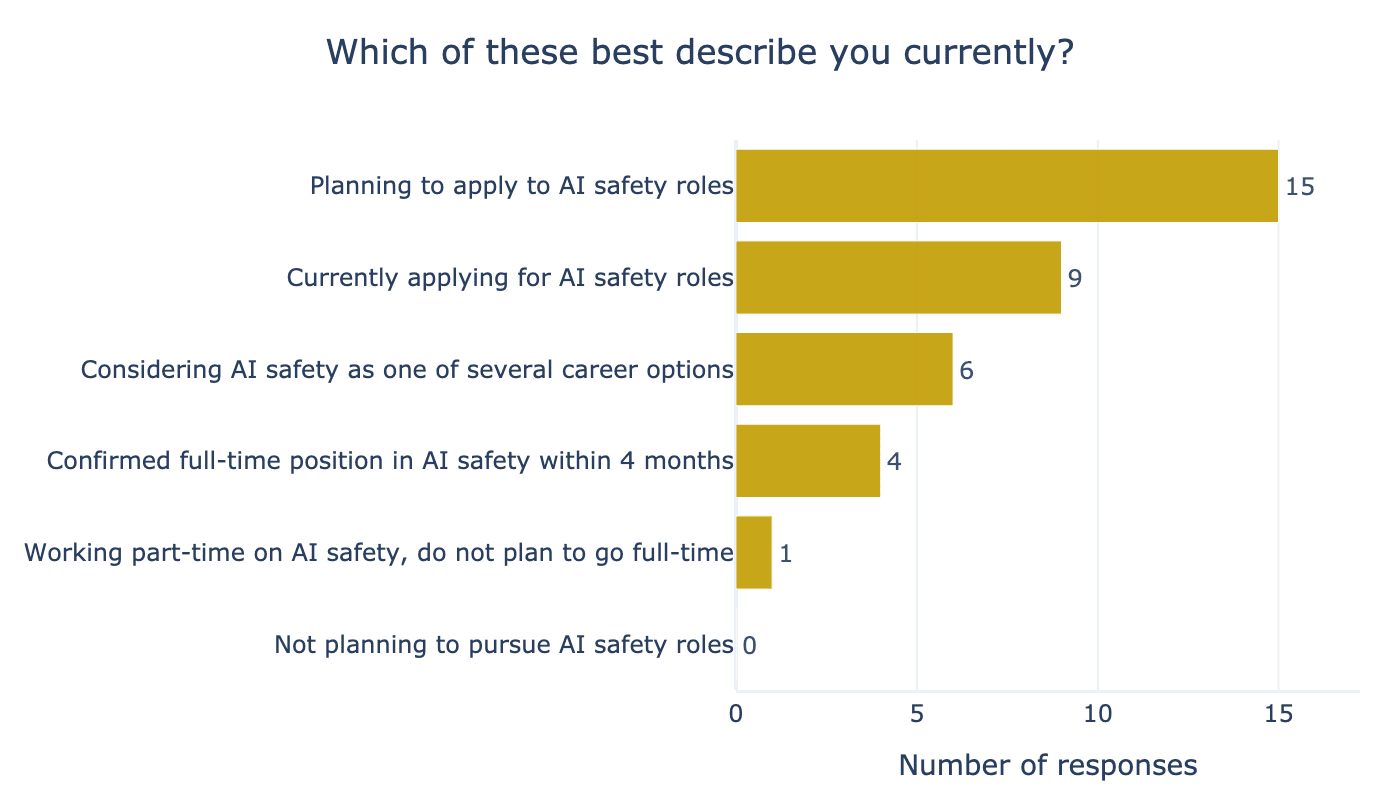

Figure 16 from ARENA report labelled (figure) "Participants’ current AI safety career situation (end of programme)"

When AI safety training programs report their numbers they sometimes don't include number of how many training programs a benefactor has been to previously (proof: I haven't seen this number anywhere), but when they report some measures of cost-effectivity they use (sometimes implicitly) do it per person measures assuming something like out of x people who took out program y got a job in AI safety, z did something else and so on. If you were to take those estimates literally like "it costs (cost of program / number of people who went there and got a job in AI safety) to produce an AI safety researcher I think you would be wrong - I expect that in some cases about 50% of the participants have already participated in some AI research training programs before actually (proof: I've been to some).

I started to think through the theories of change recently (to figure out a better career plan) and I have some questions. I hope somebody can direct me to relevant posts or discuss this with me.

The scenario I have in mind is: AI alignment is figured out. We can create an AI that will pursue the goals we give it and can still leave humanity in control. This is all optional, of course: you can still create an unaligned, evil AI. What's stopping anybody from creating AI that will try to, for instance, fight wars? I mean that even if we have the technology to align AI, we are still not out of the forest.

What would solve the problem here would be to create a benevolent, omnipresent AGI, that will prevent things like this.

Did EA scale too quickly?

A friend recommended me to read a note from Andy's working notes, which argues that scaling systems too quickly led to rigid systems. Reading this note vaguely reminded me of EA.

Once you have lots of users with lots of use cases, it’s more difficult to change anything or to pursue radical experiments. You’ve got to make sure you don’t break things for people or else carefully communicate and manage change.

Those same varied users simply consume a great deal of time day-to-day: a fault which occurs for 1% of people will present no real problem in a small prototype, but it’ll be high-priority when you have 100k users.

First, it is debatable if EA experienced quick scale up in the last few years. In some ways, it feels to me like it did, and EA founds had a spike of founding in 2022.

But it feels to me like EA community didn't have things figured out properly. Like SBF crisis could be averted easily by following common business practices or the latest drama with nonlinear. The community norms were off and were hard to change?

I'm trying to think clearly about my theory of change and I want to bump my thoughts against the community:

- AGI/TAI is going to be created at one of the major labs.

- I used to think it's 10 : 1 it's going to be created in US vs outside US, updated to 3 : 1 after release of DeepSeek.

- It's going to be one of the major labs.

- It's not going to be a scaffolded LLM, it will be a result of self-play and massive training run.

- My odds are equal between all major labs.

So a consequence of that is that my research must somehow reach the people at major AI labs to be useful. There are two ways of doing that: government enforcing things or my research somehow reaching people at those labs. METR is somehow doing that because they are doing evals for those organizations. Other orgs like METR are also probably able to do that (tell me which)

So I think that one of the best things to help with AI alignment is to: do safety research that people at Antrophic or OpenAI or other major labs find helpful, work with METR or try to contribute to thier open source repos or focus on work that is "requested" by governance.

I think you just do good research, and let it percolate through the intellectual environment. It might be helpful to bug org people to look at safety research, but probably not a good idea to bug them to look at yours specifically.

I am curious why you expect AGI will not be a scaffolded LLM but will be the result of self-play and massive training runs. I expect both.

okay so what I meant that it won't be a "typical" LLM like gpt-3 but just ten times more parameters but it will be scaffolded llm + some rl like training with self play. Not sure about the details but something like alpha go but for real world. Which I think agrees with what you said.

So one clear thing that I think would be pretty useful but also creepy is to create a google sheet with people who work at AI labs

In a few weeks, I will be starting a self-experiment. I’ll be testing a set of supplements to see if they have any noticeable effects on my sleep quality, mood, and energy levels.

The supplements I will be trying:

| Name | Amount | Purpose / Notes |

|---|---|---|

| Zinc | 6 mg | |

| Magnesium | 300 mg | |

| Riboflavin (B2) | 0 mg | I already consume a lot of dairy, so no need. |

| Vitamin D | 500 IU | |

| B12 | 0 µg | I get enough from dairy, so skipping supplementation. |

| Iron | 20 mg | I don't eat meat. I will get tested to see if I am deficient firstly |

| Creatine | 3 g | May improve cognitive function |

| Omega-3 | 500 mg/day | Supposed to help with brain function and inflammation. |

Things I want to measure:

- Sleep quality – I will start using sleep as android to track my sleep - has anyone tried it out? This seems somewhat risky because if there is a phone next to my bed it will make it harder for me to fall asleep because I will be using it.

- Energy levels – Subjective rating (1-10) every evening. Prompt: "What is your energy level?"

- I will also do journaling and use chat GPT to summarize it.

- Digestion/gut health – Any noticeable changes in bloating, gas, or gut discomfort. I used to struggle with that, I will probably not measure this every day but just keep in mind that it might be related.

- Exercise performance – I already track this via heavy so no added costs. (also, add me on heavy, my nick is tricular)

I expect my sleep quality to improve, especially with magnesium and omega-3. I’m curious if creatine will have any effect on mental clarity and exercise.

If anyone has tried these supplements already and has tips, let me know! Would love to hear what worked (or didn’t) for you.

I will start with a two week period where I develop the daily questionare and test if the sleep tracking app works on me.

One risk is that I just actually feel better and fail to see that in energy levels. How are those subjective measures performing in self-study? Also, I don't have a control - I kinda think it's useless. Convince me it is not! I just expect that I will notice getting smarter. Do you think it's stupid or not?

Also, I'm vegetarian. My diet is pretty unhealthy as in it doesn't include a big variety of foods.

I checked the maximum intake of supplements on https://ods.od.nih.gov/factsheets/Zinc-HealthProfessional/

There is an attitude I see in AI safety from time to time when writing papers or doing projects:

- People think more about doing a cool project rather than having a clear theory of change.

- They spend a lot of time optimizing for being "publishable."

I think it's bad if we want to solve AI safety. On the other hand, having a clear theory of change is hard. Sometimes, it's just so much easier to focus on an interesting problem instead of constantly asking yourself, "Is this really solving AI safety?"

How to approch this whole thing? Idk about you guys but this is draining for me.

Why would I publish papers in AI safety? Do people even read them? Am I doing it just to gain credibility? Aren't there already too many papers?

The incentives for early career researchers are to blame for this mindset imo. Having legible output is a very good signal of competence for employers/grantors. I think it probably makes sense for the first or first couple project of a researcher to be more of a cool demo than clear steps towards a solution.

Unfortunately, some middle career and sometimes even senior researchers keep this habit of forward-chaining from what looks cool instead of backwards-chaining from good futures. Ok, the previous sentence was a bit too strong. No reasoning is pure backward-chaining or pure forward-chaining. But I think that a common failure mode is not thinking enough about theories of change.

Okay, this makes sense but doesn't answer my question. Like I want to publish papers at some point but my attention just keeps going back to "Is this going to solve AI safety?" I guess people in mechanistic interpretability don't keep thinking about it, they are more like "Hm... I have this interesting problem at hand..." and they try to solve it. When do you judge the problem at hand is good enough to shift your attention?

Isn't being a real expected value-calculating consequentialist really hard? Like, this week an article about not ignoring bad vibes was trending. I think that it's very easy to be a naive consequentialist, and it doesn't pay off, you get punished very easily because you miscalcualte and get ostracized or fuck your emotions up. Why would we get a consequentialist AI?

Why would we get a consequentialist AI?

Excellent question. Current AIs are not very strong-consequentialist[1], and I expect/hope that we probably won't get AIs like that either this year (2025) nor next year (2026). However, people here are interested in how an extremely competent AI would behave. Most people here model them as instrumentally-rational agents that are usefully described as having a closed-form utility function. Here goes a seminal formalization of this model by Legg and Hutter: link.

Are these models of future super-competent AIs wrong? Somewhat. All models are wrong. I personally trust them less than the average person who has spent a lot of time in here. I still find them a useful tool for thinking about limits and worst case scenarios: the sort of AI system actually capable of single-handedly taking over the world, for instance. However, I think it is also very useful to think about how AIs (and the people making them) are likely to act before these ultra-competent AIs show up, or in the case they don't.

- ^

Term i just made up and choose to define like this: that reasons like a naive utilitarian, independently of its goals.

I am creating a comparative analysis of cross-posted posts on LW and EAF. Make your bets!

I will pull all the posts that were posted on both LW and EAF and compare how different topics get different amount of karma and comments (and maybe sentiment of comments) as a proxy for how interested people are and how much they agree with different claims. Make your bests and see if it tells you anything new! I suspect that LW users are much more interested in AI safety and less vegan. They care less about animals and are more skeptical towards utility maximization. Career related posts will fare better on EAF, while rationality (as in rationality as an art) will go better on LW. Productivity posts will have more engagement on EAF.

It is not possible to check all the bets since the number of cross-posted posts is not that big and they are limited to specific topics.

I had some thoughts about CoT monitoring. So I was imaging this simple scenario - you are running a model, for each query it produces CoT and answer. To check the CoT, we run it through another model with a prompt like "check this CoT <cot>" and tell us whether the CoT seems malicious.

Why don't we check the response only? Sometimes it's not clear just by the response that the response is harmless (I imagine a doctor can prescribe you a lot of things and you don't have a way of checking if they are actually good for you)

So CoT monitoring is based on the idea that CoT gives you some extra information.

One of the scenarios that I fear the most is AI creating bio weapons. I'm not worried about government using AI to create bioweapons, because I don't think I can do anything about this, but I'm worried about AI helping random people to create bio weapons. In this case, I assume CoT monitoring means monitoring of CoT and model response. So basically you deploy a model and run every CoT and response through the monitor. Seems great but also can the monitor get jailbroken as well? If we let people experiment so much they actually find jailbreaks for both the monitor and model, then our solution fails.

In this scenario I actually think that CoT monitoring isn't that great. It's definitely an improvement over not monitoring anything but it seems that it's eventually possible to break it.

Another scenario is AI that is misaligned and tries to do scheming in the CoT. Here it seems great. If the AI leaks its goals.

Also, costs per token rise.

To do useful work you need to be deceptive.

- When you and another person have different concepts of what's good.

- When both of you have the same concepts of what's good but different models of how to get there.

- This happens a lot when people are perfectionist and have aesthetic preferences for work being done in a certain way.

- This happens in companies a lot. AI will work in those contexts and will be deceptive if it wants to do useful work. Actually maybe not, the dynamics will be different, like AI being neutral in some way like anybody can turn honesty mode and ask it anything.

- Anyway, I think because of the way companies are structured and how humans work being slightly deceptive allows you to do useful work (I think it's pretty intuitive for anyone who worked in a corporation or watched the office)

- It probably doesn't apply to AI corporations?

I don't get the down votes. I do think it's extremely simple - look at politics in general or even workplace politics, just try to google it, there even wikipedia pages roughly about what I want to talk about. I have experienced a situation where I need to do my job and my boss makes it harder for me in some way many times - being not completely honest is an obvious strategy and it's good for the company you are working at

I think the downvotes is because the correct statement is something more like "In some situations, you can do more useful work by being deceptive." I think this is actually what you argue for, but it's very different from "To do useful work you need to be deceptive."

If "To do useful work you need to be deceptive." this means that one can't do useful work without being deceptive. This is clearly wrong.

It seems like both me and you are able to decipher what I meant easily - why someone failed to do that

LW discussion norms is that you're supposed to say what you mean, and not leave people to guess, because this leads to more precise communication. E.g. I guessed that you did not mean what you literary wrote, because that would be dumb, but I don't know exactly what statement you're arguing for.

I know this is not standard communication practice in most places, but it is actually very valuable, you should try it.

Why are AI safety people doing capabilities work? It happened a few times already, usually with senior people (tho I think it might happen with others as well) and some people are saying it's because they want money and stuff or get "corrupted". Maybe there is like a mindkilling argument behind the AI safety case, a crux so deep we fail to articulate it clearly and people who spent significant amount of time thinking about AI safety just reject it at some level.

Who do you have in mind, and what work? The line between safety and capabilities is blurry, and everyone disagrees about where it is.

Other reasons could be:

- They needed a job and could not get a safety job, and the skill they learned landed them a capabilities job.

- They where never that concerned with safety to start with, but just used the free training and career support provided by the safety people.

Also possible. Well honestly, I don't have much data, I don't have anything to point to a concrete scenario, but I mean more or less: Antrophic, OpenAI and Mechanize (people from Epoch) - they more or less started as safety focused labs or were concerned about safety at some point (also can't point to anything concrete), turned to work on capabilities at some point.

Maybe they started working on AI safety because a 50% chance that a solution is necessary was enough to make working on it do the most expected good, and then they despaired of solving AI safety.

My theory is that safety ai folk are taught that a rules framework is how to provide oversight over the ai...like the idea that you can define constraints, logic gates, or formal objectives, and keep the system within bounds, like a classic control theory... but then they start to understand that ai are narrative inference machines, and not reasoning machines. They dont obey logic as much as narrative form. So they start to look into capabilities as a way to create safety through narrative restriction. A protagonist that is good for the 9 chapters will likely be good in chapter 10.

My theory is that safety ai folk are taught that a rules framework is how to provide oversight over the ai...like the idea that you can define constraints, logic gates, or formal objectives, and keep the system within bounds, like a classic control theory...

I don't know anyone in AI safety who have missed that fact that NNs are not GOFAI.

I expect that one of those arguments is something along the lines of overnight intelligence explosion. It has to do with superintelligence, with no steps between it, and that we are unable to control it.

Why doesn't Open AI allow people to see the CoT? This is not good for their business for obvious reasons.

The most naive guess is that they may be using some special type of CoT that is more effective at delivering the right answer than what you'd get by default. If their competitors saw it, they would try to replicate it (not even train on the CoT, just use it to guide their design of the CoT training procedure).

I think that AI labs are going to use LoRA to lock cool capabilities in models and offer a premium subscription with those capabilities unlocked.

I recently came up with an idea to improve my red-teaming skills. By red-teaming, I mean identifying obvious flaws in plans, systems, or ideas.

First, find high-quality reviews on open review or somewhere else. Then, create a dataset of papers and their reviews, preferably in a field that is easy to grasp and sufficiently complex. Read papers, compare to the reviews.

Obvious flaw is that you see the reviews before, so you might want to hire someone else to do it. Doing this in a group is also really great.

I've just read "Against the singularity hypothesis" by David Thorstad and there are some things there that seems obviously wrong to me - but I'm not totally sure about it and I want to share it here, hoping that somebody else read it as well. In the paper, Thorstad tries to refute the singularity hypothesis. In the last few chapters, Thorstad discuses the argument for x-risks from AI that's based on three premises: singularity hypothesis, Orthogonality Thesis and Instrumental Convergence and says that since singularity hypothesis is false (or lacks proper evidence) we shouldn't worry that much about this specific scenario. Well, it seems to me like we should still worry and we don't need to have recursively self-improving agents to have agents smart enough so that instrumental convergence and orthogonality hypothesis applies to them.

Thanks for your engagement!

The paper does not say that if the singularity hypothesis is false, we should not worry about reformulations of the Bostrom-Yudkowksy argument which rely only on orthogonality and instrumental convergence. Those are separate arguments and would require separate treatment.

The paper lists three ways in which the falsity of the singularity hypothesis would make those arguments more difficult to construct (Section 6.2). It is possible to accept that losing the singularity hypothesis would make the Bostrom-Yudkowsky argument more difficult to push without taking a stance on whether this more difficult effort can be done.

MoltBots don't fear doings things and being cringe which puts them above 80% of humans in agency already.

LLM is just a lot of math. Program running on a computer. Chinese room style arguments ridicule systems that operate on symbols. But LLM looks more like this:

1. there is some language

2. translate that language into operations in real spaces (which are non discrete unlike characters)

3. that operations get translated into operations on symbols

Some ideas that I might not have time to work on but I would love to see them completed:

- AI helper for notetakers. Keyloogs everything you write, when you stop writing for 15 seconds will start talking to you about your texts, help you...

- Create a LLM pipline to simplify papers. Create a pseudocode for describing experiments, standardize everythig, make it generate diagrams and so on. If AIs scientist produces same gibberish that is on arxiv that takes hours to read and conceals reasoning we are doomed

- Same as above but for code?

The power-seeking, agentic, deceptive AI is only possible if there is a smooth transition from non-agentic AI (what we have right now) to agentic AI. Otherwise, there will be a sign that AI is agentic, and it will be observed for those capabilities. If an AI is mimicking human thinking process, which it might initially do, it will also mimic our biases and things like having pent-up feelings, which might cause it to slip and loose its temper. Therefore, it's not likely that power-seeking agentic AI is a real threat (initially).