I would find it valuable if someone could gather an easy-to-read bullet point list of all the questionable things Sam Altman has done throughout the years.

I usually link to Gwern’s comment thread (https://www.lesswrong.com/posts/KXHMCH7wCxrvKsJyn/openai-facts-from-a-weekend?commentId=toNjz7gy4rrCFd99A), but I would prefer if there was something more easily-consumable.

[Edit #2, two months later: see https://ailabwatch.org/resources/integrity/]

[Edit: I'm not planning on doing this but I might advise you if you do, reader.]

50% I'll do this in the next two months if nobody else does. But not right now, and someone else should do it too.

Off the top of my head (this is not the list you asked for, just an outline):

- Loopt stuff

- YC stuff

- YC removal

- NDAs

- And deceptive communication recently

- And maybe OpenAI's general culture of don't publicly criticize OpenAI

- Profit cap non-transparency

- Superalignment compute

- Two exoduses of safety people; negative stuff people-who-quit-OpenAI sometimes say

- Telling board members not to talk to employees

- Board crisis stuff

- OpenAI executives telling the board Altman lies

- The board saying Altman lies

- Lying about why he wanted to remove Toner

- Lying to try to remove Toner

- Returning

- Inadequate investigation + spinning results

Stuff not worth including:

- Reddit stuff - unconfirmed

- Financial conflict-of-interest stuff - murky and not super important

- Misc instances of saying-what's-convenient (e.g. OpenAI should scale because of the prospect of compute overhang and the $7T chip investment thing) - idk, maybe, also interested in more examples

- Johansson

How likely is it that the board hasn’t released specific details about Sam’s removal because of legal reasons? At this point, I feel like I have to place overwhelmingly high probability on this.

So, if this is the case, what legal reason is it?

My mainline guess is that information about bad behaviour by Sam was disclosed to them by various individuals, and they owe a duty of confidence to those individuals (where revealing the information might identify the individuals, who might thereby become subject to some form of retaliation).

("Legal reasons" also gets some of my probability mass.)

I thought Superalignment was a positive bet by OpenAI, and I was happy when they committed to putting 20% of their current compute (at the time) towards it. I stopped thinking about that kind of approach because OAI already had competent people working on it. Several of them are now gone.

It seems increasingly likely that the entire effort will dissolve. If so, OAI has now made the business decision to invest its capital in keeping its moat in the AGI race rather than basic safety science. This is bad and likely another early sign of what's to come.

I think the research that was done by the Superalignment team should continue to happen outside of OpenAI and, if governments have a lot of capital to allocate, they should figure out a way to provide compute to continue those efforts. Or maybe there's a better way forward. But I think it would be pretty bad if all the talent directed towards the project never gets truly leveraged into something impactful.

For anyone interested in Natural Abstractions type research: https://arxiv.org/abs/2405.07987

Claude summary:

Key points of "The Platonic Representation Hypothesis" paper:

- Neural networks trained on different objectives, architectures, and modalities are converging to similar representations of the world as they scale up in size and capabilities.

- This convergence is driven by the shared structure of the underlying reality generating the data, which acts as an attractor for the learned representations.

- Scaling up model size, data quantity, and task diversity leads to representations that capture more information about the underlying reality, increasing convergence.

- Contrastive learning objectives in particular lead to representations that capture the pointwise mutual information (PMI) of the joint distribution over observed events.

- This convergence has implications for enhanced generalization, sample efficiency, and knowledge transfer as models scale, as well as reduced bias and hallucination.
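To make the PMI point a bit more concrete, here is a minimal sketch of pointwise mutual information computed from a toy co-occurrence table. The counts and the `pmi_table` helper are made up for illustration; this only shows the quantity itself, not the paper's contrastive training setup.

```python
import math

# Hypothetical co-occurrence counts for two binary "events" x and y.
counts = {
    ("x0", "y0"): 40,
    ("x0", "y1"): 10,
    ("x1", "y0"): 10,
    ("x1", "y1"): 40,
}

def pmi_table(counts):
    """Return PMI(x, y) = log[p(x, y) / (p(x) * p(y))] for every observed pair."""
    total = sum(counts.values())
    p_x, p_y = {}, {}
    for (x, y), c in counts.items():
        # Marginals are obtained by summing the joint over the other variable.
        p_x[x] = p_x.get(x, 0.0) + c / total
        p_y[y] = p_y.get(y, 0.0) + c / total
    return {
        (x, y): math.log((c / total) / (p_x[x] * p_y[y]))
        for (x, y), c in counts.items()
        if c > 0
    }

pmi = pmi_table(counts)
```

Pairs that co-occur more often than their marginals would predict get positive PMI, and pairs that co-occur less often get negative PMI.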

Relevance to AI alignment:

- Convergent representations shaped by the structure of reality could lead to more reliable and robust AI systems that are better anchored to the real worl

I thought this series of comments from a former DeepMind employee (who worked on Gemini) were insightful so I figured I should share.

...From my experience doing early RLHF work for Gemini, larger models exploit the reward model more. You need to constantly keep collecting more preferences and retraining reward models to make it not exploitable. Otherwise you get nonsensical responses which have exploited the idiosyncrasy of your preferences data. There is a reason few labs have done RLHF successfully.

It's also known that more capable models exploit loopholes in reward functions better. Imo, it's a pretty intuitive idea that more capable RL agents will find larger rewards. But there's evidence from papers like this as well: https://arxiv.org/abs/2201.03544

To be clear, I don't think the current paradigm as-is is dangerous. I'm stating the obvious because this platform has gone a bit bonkers.

The danger comes from finetuning LLMs to become AutoGPTs which have memory, actions, and maximize rewards, and are deployed autonomously. Widespread proliferation of GPT-4+ models will almost certainly make lots of these agents which will cause a lot of damage and potentially cause something ind

Why aren't you doing research on making pre-training better for alignment?

I was on a call today, and we talked about projects that involve studying how pre-trained models evolve throughout training and how we could guide the pre-training process to make models safer. For example, could models trained on synthetic/transformed data make models significantly more robust and essentially solve jailbreaking? How about the intersection of pretraining from human preferences and synthetic data? Could the resulting model be significantly easier to control? How would it impact the downstream RL process? Could we imagine a setting where we don't need RL (or at least we'd be able to confidently use resulting models to automate alignment research)? I think many interesting projects could fall out of this work.

So, back to my main question: why aren't you doing research on making pre-training better for alignment? Is it because it's too expensive and doesn't seem like a low-hanging fruit? Or do you feel it isn't a plausible direction for aligning models?

We were wondering if there are technical bottlenecks that would make this kind of research more feasible for alignment research to better study ho...

If you work at a social media website or YouTube (or know anyone who does), please read the text below:

Community Notes is one of the best features to come out on social media apps in a long time. The code is even open source. Why haven't other social media websites picked it up yet? If they care about truth, this would be a considerable step forward. Labels like “this video is funded by x nation” or “this video talks about health info; go here to learn more” are simply not good enough.

If you work at companies like YouTube or know someone who does, let's figure out who we need to talk to to make it happen. Naïvely, you could spend a weekend DMing a bunch of employees (PMs, engineers) at various social media websites in order to persuade them that this is worth their time and probably the biggest impact they could have in their entire career.

If you have any connections, let me know. We can also set up a doc of messages to send in order to come up with a persuasive DM.

Oh, that’s great, thanks! Also reminded me of (the less official, more comedy-based) “Community Notes Violating People”. @Viliam

I have some alignment project ideas for things I'd consider mentoring for. I would love feedback on the ideas. If you are interested in collaborating on any of them, that's cool, too.

Here are the titles:

- Smart AI vs swarm of dumb AIs

- Lit review of chain of thought faithfulness (steganography in AIs)

- Replicating METR paper but for alignment research task

- Tool-use AI for alignment research

- Sakana AI for Unlearning

- Automated alignment onboarding

- Build the infrastructure for making Sakana AI's AI scientist better for alignment research

I quickly wrote up some rough project ideas for ARENA and LASR participants, so I figured I'd share them here as well. I am happy to discuss these ideas and potentially collaborate on some of them.

Alignment Project Ideas (Oct 2, 2024)

1. Improving "A Multimodal Automated Interpretability Agent" (MAIA)

Overview

MAIA (Multimodal Automated Interpretability Agent) is a system designed to help users understand AI models by combining human-like experimentation flexibility with automated scalability. It answers user queries about AI system components by iteratively generating hypotheses, designing and running experiments, observing outcomes, and updating hypotheses.

MAIA uses a vision-language model (GPT-4V, at the time) backbone equipped with an API of interpretability experiment tools. This modular system can address both "macroscopic" questions (e.g., identifying systematic biases in model predictions) and "microscopic" questions (e.g., describing individual features) with simple query modifications.

This project aims to improve MAIA's ability to either answer macroscopic questions or microscopic questions on vision models.

2. Making "A Multimodal Automated Interpretability Agent" (MAIA) wor

...My current speculation as to what is happening at OpenAI

How do we know this wasn't their best opportunity to strike if Sam was indeed not being totally honest with the board?

Let's say the rumours are true, that Sam is building out external orgs (NVIDIA competitor and iPhone-like competitor) to escape the power of the board and potentially going against the charter. Would this 'conflict of interest' be enough? If you take that story forward, it sounds more and more like he was setting up AGI to be run by external companies, using OpenAI as a fundraising bargaining chip, and having a significant financial interest in plugging AGI into those outside orgs.

So, if we think about this strategically, how long should they wait as board members who are trying to uphold the charter?

On top of this, it seems (according to Sam) that OpenAI has made a significant transformer-level breakthrough recently, which implies a significant capability jump. Long-term reasoning? Basically, anything short of 'coming up with novel insights in physics' is on the table, given that Sam recently used that line as the line we need to cross to get to AGI.

So, it could be a mix of, Ilya thinking they have achieved AG...

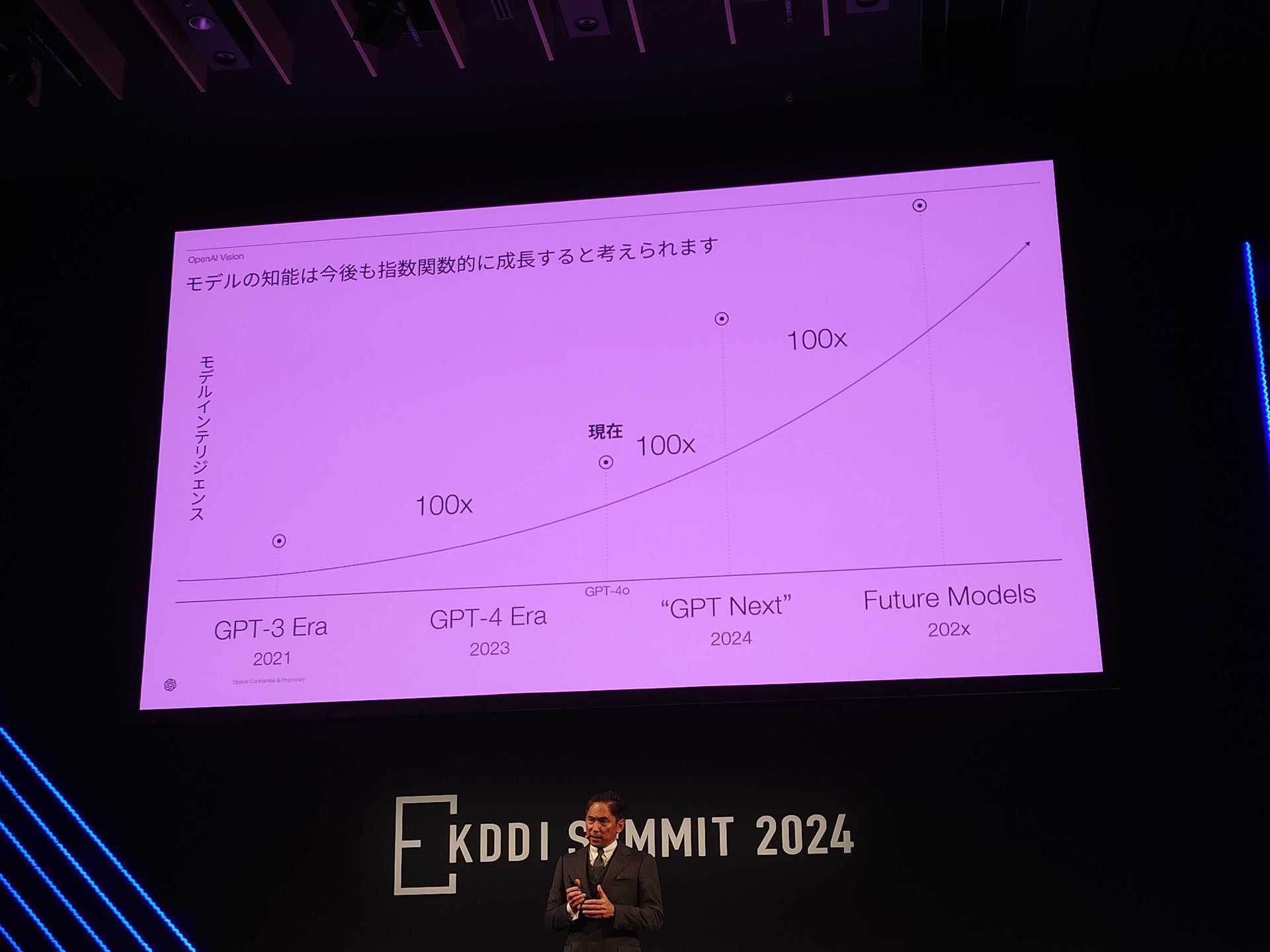

News on the next OAI GPT release:

Nagasaki, CEO of OpenAI Japan, said, "The AI model called 'GPT Next' that will be released in the future will evolve nearly 100 times based on past performance. Unlike traditional software, AI technology grows exponentially."

https://www.itmedia.co.jp/aiplus/articles/2409/03/news165.html

The slide clearly states 2024 "GPT Next". This 100 times increase probably does not refer to the scaling of computing resources, but rather to the effective computational volume + 2 OOMs, including improvements to the architecture and learning efficiency. GPT-4 NEXT, which will be released this year, is expected to be trained using a miniature version of Strawberry with roughly the same computational resources as GPT-4, with an effective computational load 100 times greater. Orion, which has been in the spotlight recently, was trained for several months on the equivalent of 100k H100 compared to GPT-4 (EDIT: original tweet said 10k H100s, but that was a mistake), adding 10 times the computational resource scale, making it +3 OOMs, and is expected to be released sometime next year.

Note: Another OAI employee seemingly confirms this (I've followed...
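The orders-of-magnitude bookkeeping in the GPT Next / Orion claim above can be checked in a few lines. This is only a sketch of the arithmetic: the multipliers are the rumoured figures from the quote, not confirmed numbers, and the `ooms` helper is my own naming.

```python
import math

def ooms(multiplier: float) -> float:
    """Convert a multiplicative increase into orders of magnitude (base 10)."""
    return math.log10(multiplier)

# Rumoured figures from the quote (unconfirmed).
gpt_next_effective_gain = 100  # claimed effective-compute gain over GPT-4
orion_extra_compute = 10       # claimed additional raw compute scale for Orion

gpt_next_ooms = ooms(gpt_next_effective_gain)
orion_ooms = ooms(gpt_next_effective_gain * orion_extra_compute)
```

Multiplicative gains add in log space, which is why a 100x effective gain plus a further 10x of compute is described as +3 OOMs rather than +110x.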

I encourage alignment/safety people to be open-minded about what François Chollet is saying in this podcast:

I think many are blindly bought into the 'scale is all you need' and apparently godly nature of LLMs and may be dependent on unfounded/confused assumptions because of it.

Getting this right is important because it could significantly impact how hard you think alignment will be. Here's @johnswentworth responding to @Eliezer Yudkowsky about his difference in optimism compared to @Quintin Pope (despite believing the natural abstraction hypothesis is true):

...Entirely separately, I have concerns about the ability of ML-based technology to robustly point the AI in any builder-intended direction whatsoever, even if there exists some not-too-large adequate mapping from that intended direction onto the AI's internal ontology at training time. My guess is that more of the disagreement lies here.

I doubt much disagreement between you and I lies there, because I do not expect ML-style training to robustly point an AI in any builder-intended direction. My hopes generally don't route through targeting via ML-style training.

I do think my deltas from many other people lie there - e.g. that

Attempt to explain why I think AI systems are not the same thing as a library card when it comes to bio-risk.

To focus on less of an extreme example, I’ll be ignoring the case where AI can create new, more powerful pathogens faster than we can create defences, though I think this is an important case (some people just don’t find it plausible because it relies on the assumption that AIs are able to create new knowledge).

I think AI Safety people should make more of an effort to walk through the threat model, so I’ll give an initial quick first try:

1) Library. If I’m a terrorist and I want to build a bioweapon, I have to spend several months reading books at minimum to understand how it all works. I don’t have any experts on-hand to explain how to do it step-by-step. I have to figure out which books to read and in what sequence. I have to look up external sources to figure out where I can buy specific materials.

Then, I have to somehow find out how to gain access to those materials (this is the most difficult part for each case). Once I gain access to the materials, I still need to figure out how to make things work as a total noob at creating bioweapons. I will fail. Even experts fa...

Resharing a short blog post by an OpenAI employee giving his take on why we have 3-5 year AGI timelines (https://nonint.com/2024/06/03/general-intelligence-2024/):

Folks in the field of AI like to make predictions for AGI. I have thoughts, and I’ve always wanted to write them down. Let’s do that.

Since this isn’t something I’ve touched on in the past, I’ll start by doing my best to define what I mean by “general intelligence”: a generally intelligent entity is one that achieves a special synthesis of three things:

- A way of interacting with and observing a complex environment. Typically this means embodiment: the ability to perceive and interact with the natural world.

- A robust world model covering the environment. This is the mechanism which allows an entity to perform quick inference with a reasonable accuracy. World models in humans are generally referred to as “intuition”, “fast thinking” or “system 1 thinking”.

- A mechanism for performing deep introspection on arbitrary topics. This is thought of in many different ways – it is “reasoning”, “slow thinking” or “system 2 thinking”.

If you have these three things, you can build a generally intelligent agent. Here’s how:

First, you se...

Low-hanging fruit:

Loving this Chrome extension so far: YouTube Summary with ChatGPT & Claude - Chrome Web Store

It adds a button on YouTube videos where, when you click it (or keyboard shortcut ctrl + x + x), it opens a new tab into the LLM chat of your choice, pastes the entire transcript in the chat along with a custom message you can add as a template ("Explain the key points.") and then automatically presses enter to get the chat going.

It's pretty easy to get a quick summary of a YouTube video without needing to watch the whole thing and then ask follow-up questions. It seems like an easy way to save time or do a quick survey of many YouTube videos. (I would not have bothered going through the entire "Team 2 | Lo fi Emulation @ Whole Brain Emulation Workshop 2024" talk, so it was nice to get the quick summary.)

I usually like getting a high-level overview of the key points of a talk to have a mental mind map skeleton before I dive into the details.

You can even set up follow-up prompt buttons (which work with ChatGPT but currently do not work with Claude for me), though I'm not sure what I'd use. Maybe something like, "Why is this important to AI alignment?"

The default prom...

Dario Amodei believes that LLMs/AIs can be aided to self-improve in a similar way to AlphaGo Zero (though LLMs/AIs will benefit from other things too, like scale), where the models can learn by themselves to gain significant capabilities.

The key for him is that Go has a set of rules that the AlphaGo model needs to abide by. These rules allow the model to become superhuman at Go with enough compute.

Dario essentially believes that to reach better capabilities, it will help to develop rules for all the domains we care about and that this will likely be possible for more real-world tasks (not just games like Go).

Therefore, I think the crux here is whether you think it is possible to develop rules for science (physics, chemistry, math, biology) and other domains such that the models can do this sort of self-play to become superhuman at each of the things we care about.

So far, we have examples like AlphaGeometry, which relies on our ability to generate many synthetic examples to help the model learn. This makes sense for the geometry use case, but how do we know if this kind of approach will work for the kinds of things we actually care about? For games and geometry, this seems possible, but wha...

Alignment Researcher Assistant update.

Hey everyone, my name is Jacques, I'm an independent technical alignment researcher, primarily focused on evaluations, interpretability, and scalable oversight (more on my alignment research soon!). I'm now focusing more of my attention on building an Alignment Research Assistant (I've been focusing on my alignment research for 95% of my time in the past year). I'm looking for people who would like to contribute to the project. This project will be private unless I say otherwise (though I'm listing some tasks); I understand the dual-use nature and most criticism against this kind of work.

How you can help:

- Provide feedback on what features you think would be amazing in your workflow to produce high-quality research more efficiently.

- Volunteer as a beta-tester for the assistant.

- Contribute to one of the tasks below. (Send me a DM, and I'll give you access to the private Discord to work on the project.)

- Funding to hire full-time developers to build the features.

Here's the vision for this project:

...How might we build an AI system that augments researchers to get us 5x or 10x productivity for the field as a whole?

The system is designed with two main minds

I recently sent in some grant proposals to continue working on my independent alignment research. It gives an overview of what I'd like to work on for this next year (and more really). If you want to have a look at the full doc, send me a DM. If you'd like to help out through funding or contributing to the projects, please let me know.

Here's the summary introduction:

12-month salary for building a language model system for accelerating alignment research and upskilling (additional funding will be used to create an organization), and studying how to supervise AIs that are improving AIs to ensure stable alignment.

Summary

- Agenda 1: Build an Alignment Research Assistant using a suite of LLMs managing various parts of the research process. Aims to 10-100x productivity in AI alignment research. Could use additional funding to hire an engineer and builder, which could evolve into an AI Safety organization focused on this agenda. Recent talk giving a partial overview of the agenda.

- Agenda 2: Supervising AIs Improving AIs (through self-training or training other AIs). Publish a paper and create an automated pipeline for discovering noteworthy changes in

Recent paper I thought was cool:

In-Run Data Shapley: Data attribution method efficient enough for pre-training data attribution.

Essentially, it can track how individual data points (or clusters) impact model performance across pre-training. You just need to develop a set of validation examples to continually check the model's performance on those examples during pre-training. Amazingly, you can do this over the course of a single training run; there is no need for multiple pre-training runs, as other data attribution methods have required.

Other methods, like influence functions, are too computationally expensive to run during pre-training and can only be run post-training.
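To give a feel for how attribution within a single training run can work, here is a toy sketch. To be clear, this is not the In-Run Data Shapley algorithm from the paper. It assumes a much simpler first-order scheme in which, at every SGD step, each training example is credited with the product of its own gradient and the validation gradient. The 1-D regression data and all names are made up for illustration.

```python
# Toy 1-D linear regression: the true relation is y = 2 * x.
x_train = [-1.0, -0.6, -0.2, 0.2, 0.6, 1.0]
y_train = [2.0 * x for x in x_train]
y_train[-1] = -5.0 * x_train[-1]  # deliberately corrupted label
x_val = [-0.8, -0.4, 0.4, 0.8]
y_val = [2.0 * x for x in x_val]

def grad(w, x, y):
    """Gradient of 0.5 * (w * x - y) ** 2 with respect to the scalar weight w."""
    return (w * x - y) * x

w, lr = 0.0, 0.1
n = len(x_train)
scores = [0.0] * n  # accumulated first-order contribution per training example

for _ in range(50):
    g_train = [grad(w, x, y) for x, y in zip(x_train, y_train)]
    g_val = sum(grad(w, x, y) for x, y in zip(x_val, y_val)) / len(x_val)
    # One gradient step changes validation loss by about -lr * g_val * mean(g_train),
    # so example i is credited with lr * g_val * g_train[i] / n (positive = helpful).
    for i in range(n):
        scores[i] += lr * g_val * g_train[i] / n
    w -= lr * sum(g_train) / n

# The example whose updates most increased validation loss over the run.
most_harmful = min(range(n), key=lambda i: scores[i])
```

In this toy run the corrupted example ends up with a negative score while the clean examples end up positive, and everything is accumulated during the one training loop rather than by retraining with examples left out.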

So, here's why this might be interesting from an alignment perspective:

- You might be able to set up a bunch of validation examples to test specific behaviour in the models so that we are hyper-aware of which data points contribute the most to that behaviour. For example, self-awareness or self-preservation.

- Given that this is possible to run during pre-training, you might understand model behaviour at such a granular level that you can construct data mixtures/curriculums that push the model towards internalizing 'hum

I'm currently ruminating on the idea of doing a video series in which I review code repositories that are highly relevant to alignment research to make them more accessible.

I do want to pick out repos with perhaps even bad documentation that are still useful and then hop on a call with the author to go over the repo and record it. At least have something basic to use when navigating the repo.

This means there would be two levels: 1) an overview with the author sharing at least the basics, and 2) a deep dive going over most of the code. The former likely contains most of the value (lower effort for me, still gets done, better than nothing, points to repo as a selection mechanism, people can at least get started).

I am thinking of doing this because I think there may be repositories that are highly useful for new people but would benefit from some direction. For example, I think Karpathy and Neel Nanda's videos have been useful in getting people started. In particular, Karpathy saw OOM more stars to his repos (e.g. nanoGPT) after the release of his videos (though, to be fair, he's famous, and the number of stars is definitely not a perfect proxy for usage).

I'm interested in any feedback ...

Current Thoughts on my Learning System

Crossposted from my website. Hoping to provide updates on my learning system every month or so.

TLDR of what I've been thinking about lately:

- There are some great insights in this video called "How Top 0.1% Students Think." And in this video about how to learn hard concepts.

- Learning is a set of skills. You need to practice each component of the learning process to get better. You can’t watch a video on a new technique and immediately become a pro. It takes time to reap the benefits.

- Most people suck at mindmaps. Mindmaps can be horrible for learning if you just dump a bunch of text on a page and point arrows to different stuff (some studies show mindmaps are ineffective, but that's because people initially suck at making them). However, if you take the time to learn how to do them well, they will pay huge dividends in the future. I’ll be doing the “Do 100 Things” challenge and developing my skill in building better mindmaps. Getting better at mindmaps involves “chunking” the material and creating memorable connections and drawings.

- Relational vs Isolated Learning. As you learn something new, try to learn it in relation to the things you already kno

I’m still thinking this through, but I am deeply concerned about Eliezer’s new article for a combination of reasons:

- I don’t think it will work.

- Given that it won’t work, I expect we lose credibility and it now becomes much harder to work with people who were sympathetic to alignment, but still wanted to use AI to improve the world.

- I am not as convinced as he is about doom and I am not as cynical about the main orgs as he is.

In the end, I expect this will just alienate people. And stuff like this concerns me.

I think it’s possible that the most memetically powerful approach will be to accelerate alignment rather than suggesting long-term bans or effectively antagonizing all AI use.

So I think what I'm getting here is that you have an object-level disagreement (not as convinced about doom), but you are also reinforcing that object-level disagreement with signalling/reputational considerations (this will just alienate people). This pattern feels ugh and worries me. It seems highly important to separate the question of what's true from the reputational question. It furthermore seems highly important to separate arguments about what makes sense to say publicly on-your-world-model vs on-Eliezer's-model. In particular, it is unclear to me whether your position is "it is dangerously wrong to speak the truth about AI risk" vs "Eliezer's position is dangerously wrong" (or perhaps both).

I guess that your disagreement with Eliezer is large but not that large (IE you would name it as a disagreement between reasonable people, not insanity). It is of course possible to consistently maintain that (1) Eliezer's view is reasonable, (2) on Eliezer's view, it is strategically acceptable to speak out, and (3) it is not in fact strategically acceptable for people with Eliezer's views to speak out about those views. But this combination of views does imply endorsing a silencing of reasonable disagreements which seems unfortunate and anti-epistemic.

My own guess is that the maintenance of such anti-epistemic silences is itself an important factor contributing to doom. But, this could be incorrect.

Quote from Cal Newport's Slow Productivity book: "Progress in theoretical computer science research is often a game of mental chicken, where the person who is able to hold out longer through the mental discomfort of working through a proof element in their mind will end up with the sharper result."

Do we expect future model architectures to be biased toward out-of-context reasoning (reasoning internally rather than in a chain-of-thought)? As in, what kinds of capabilities would lead companies to build models that reason less and less in token-space?

I mean, the first obvious thing would be that you are training the model to internalize some of the reasoning rather than having to pay for the additional tokens each time you want to do complex reasoning.

The thing is, I expect we'll eventually move away from just relying on transformers with scale. And so...

I'm currently working on building an AI research assistant designed specifically for alignment research. I'm at the point where I will be starting to build specific features for the project and delegate work to developers who would like to contribute.

- Developers: If you are a developer who might be interested in contributing to this project, send me a DM for more details.

- Alignment Researchers: I have a long list of features I want to build. I need to prioritize the features that people actually think would help them the most. If you'd like to look over the features and provide feedback, send me a DM and I will send you the relevant list of features.

(This is the tale of a potentially reasonable CEO of the leading AGI company, not the one we have in the real world. Written after a conversation with @jdp.)

You’re the CEO of the leading AGI company. You start to think that your moat is not as big as it once was. You need more compute and need to start accelerating to give yourself a bigger lead, otherwise this will be bad for business.

You start to look around for compute, and realize you have 20% of your compute you handed off to the superalignment team (and even made a public commitment!). You end up ma...

So, you go to government and lobby. Except you never intended to help the government get involved in some kind of slow-down or pause. Your intent was to use this entire story as a mirage for getting rid of those who didn’t align with you and lobby the government in such a way that they don’t think it is such a big deal that your safety researchers are resigning.

You were never the reasonable CEO, and now you have complete power.

From a Paul Christiano talk called "How Misalignment Could Lead to Takeover" (from February 2023):

Assume we're in a world where AI systems are broadly deployed, and the world has become increasingly complex, where humans know less and less about how things work.

A viable strategy for AI takeover is to wait until there is certainty of success. If a 'bad AI' is smart, it will realize it won't be successful if it tries to take over now, so it won't try and nothing looks like a problem.

So you lose when a takeover becomes possible, and some threshold of AIs behave badly. If all the smartest AIs...

This seems like a fairly important paper by DeepMind regarding generalization (and lack of it in current transformer models): https://arxiv.org/abs/2311.00871

Here’s an excerpt on transformers potentially not really being able to generalize beyond training data:

...Our contributions are as follows:

- We pretrain transformer models for in-context learning using a mixture of multiple distinct function classes and characterize the model selection behavior exhibited.

- We study the in-context learning behavior of the pretrained transformer model on functions th

Given funding is a problem in AI x-risk at the moment, I’d love to see people start thinking of creative ways to provide additional funding to alignment researchers who are struggling to get funding.

For example, I’m curious if governance orgs would pay for technical alignment expertise as a sort of consultant service.

Also, it might be valuable to have full-time field-builders that are solely focused on getting more high-net-worth individuals to donate to AI x-risk.

On joking about how "we're all going to die"

Setting aside the question of whether people are overly confident about their claims regarding AI risk, I'd like to talk about how we talk about it amongst ourselves.

We should avoid jokingly saying "we're all going to die" because I think it will corrode your calibration to risk with respect to P(doom) and it will give others the impression that we are all more confident about P(doom) than we really are.

I think saying it jokingly still ends up creeping into your rational estimates on timelines and P(doom). I expe...

What are some important tasks you've found too cognitively taxing to get in the flow of doing?

One thing that I'd like to consider for Accelerating Alignment is to build tools that make it easier to get in the habit of cognitively demanding tasks by reducing the cognitive load necessary to do the task. This is part of the reason why I think people are getting such big productivity gains from tools like Copilot.

One way I try to think about it is like getting into the habit of playing guitar. I typically tell people to buy an electric guitar rather than an ac...

Projects I'd like to work on in 2023.

Wrote up a short (incomplete) bullet point list of the projects I'd like to work on in 2023:

- Accelerating Alignment

- Main time spent (initial ideas, will likely pivot to varying degrees depending on feedback; will start with one):

- Fine-tune GPT-3/GPT-4 on alignment text and connect the API to Loom, VSCode (CoPilot for alignment research) and potentially notetaking apps like Roam Research. (1-3 months, depending on bugs and if we continue to add additional features.)

- Create an audio-to-post pipeline where we can eas


OpenAI CEO Sam Altman has privately said the company could become a benefit corporation akin to rivals Anthropic and xAI.

"Sam Altman recently told some shareholders that OAI is considering changing its governance structure to a for-profit business that OAI's nonprofit board doesn't control. [...] could open the door to public offering of OAI; may give Altman an opportunity to take a stake in OAI."

Perhaps I am too cynical, but it seems to me that Sam Altman will say anything... and change his mind later.

Jacques' AI Tidbits from the Web

I often find information about AI development on X (f.k.a. Twitter) and sometimes other websites. They usually don't warrant their own post, so I'll use this thread to share. I'll be placing a fairly low filter on what I share.

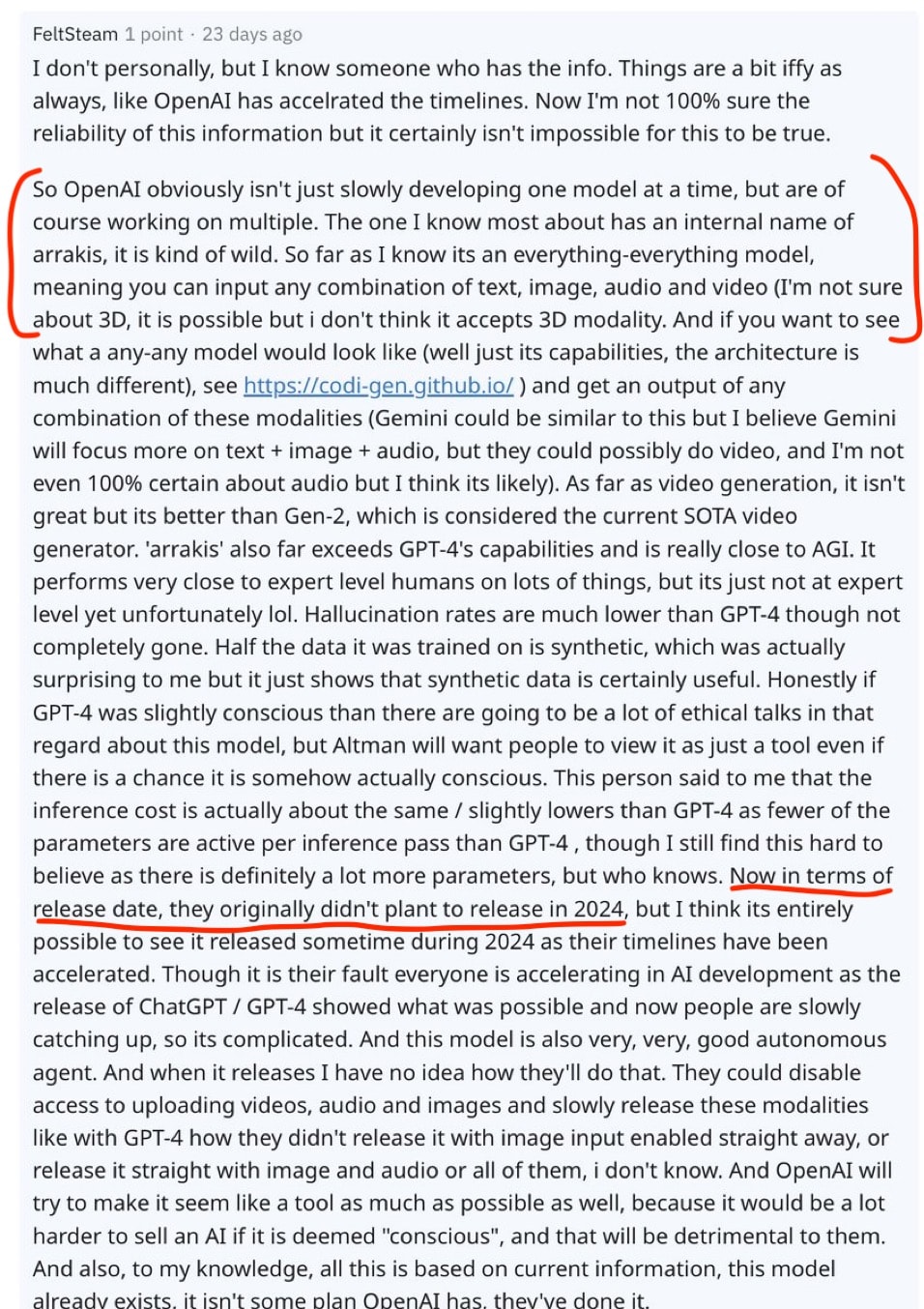

There's someone on X (f.k.a. Twitter) called Jimmy Apples (🍎/acc) and he has shared some information in the past that turned out to be true (apparently the GPT-4 release date and that OAI's new model would be named "Gobi"). He recently tweeted, "AGI has been achieved internally." Some people think that the Reddit comment below may be from the same guy (this is just a weak signal, I’m not implying you should consider it true or update on it):

I think it would be great if alignment researchers read more papers

But really, you don't even need to read the entire paper. Here's a reminder to consciously force yourself to at least read the abstract. Sometimes I catch myself running away from reading an abstract of a paper even though it is very little text. Over time I've just been forcing myself to at least read the abstract. A lot of times you can get most of the update you need just by reading the abstract. Try your best to make it automatic to do the same.

To read more papers, consider using Semant...

On hyper-obsession with one goal in mind

I’ve always been interested in people just becoming hyper-obsessed in pursuing a goal. One easy example is with respect to athletes. Someone like Kobe Bryant was just obsessed with becoming the best he could be. I’m interested in learning what we can from the experiences of the hyper-obsessed and what we can apply to our work in EA / Alignment.

I bought a few books on the topic; I should try to find the time to read them. I’ll try to store some lessons in this shortform, but here’s a quote from Mr. Beast’s Joe Rogan in...

I'm exploring the possibility of building an alignment research organization focused on augmenting alignment researchers and progressively automating alignment research (yes, I have thought deeply about differential progress and other concerns). I intend to seek funding in the next few months, and I'd like to chat with people interested in this kind of work, especially great research engineers and full-stack engineers who might want to cofound such an organization. If you or anyone you know might want to chat, let me know! Send me a DM, and I can send you ...

I had this thought yesterday: "If someone believes in the 'AGI lab nationalization by default' story, then what would it look like to build an organization or startup in preparation for this scenario?"

For example, you try to develop projects that would work exceptionally well in a 'nationalization by default' world while not getting as much payoff if you are in a non-nationalization world. The goal here is to do the normal startup thing: risky bets with a potentially huge upside.

I don't necessarily support nationalization and am still trying to think throu...

Anybody know how Fathom Radiant (https://fathomradiant.co/) is doing?

They’ve been working on photonics compute for a long time so I’m curious if people have any knowledge on the timelines they expect it to have practical effects on compute.

Also, Sam Altman and Scott Gray at OpenAI are both investors in Fathom. Not sure when they invested.

I’m guessing it’s still a long-term bet at this point.

OpenAI also hired someone who worked at PsiQuantum recently. My guess is that they are hedging their bets on the compute end and generally looking for opportunities on ...

I shared the following as a bio for EAG Bay Area 2024. I'm sharing this here if it reaches someone who wants to chat or collaborate.

Hey! I'm Jacques. I'm an independent technical alignment researcher with a background in physics and experience in government (social innovation, strategic foresight, mental health and energy regulation). Link to Swapcard profile. Twitter/X.

CURRENT WORK

- Collaborating with Quintin Pope on our Supervising AIs Improving AIs agenda (making automated AI science safe and controllable). The current project involves a new method allowi

I think people might have the implicit idea that LLM companies will continue to give API access as the models become more powerful, but a conversation earlier this week reminded me that this is not necessarily the case. If you gain powerful enough models, you may just keep them to yourself and spin up AI companies with AI employees to make a ton of cash instead of just charging for tokens.

For this reason, even if outside people build the proper brain-like AGI setup with additional components to squeeze out capabilities from LLMs, they may be limited by:

1. open-source models

2. the API of the weaker models from the top companies

3. the best API of the companies that are lagging behind

A frame for thinking about takeoff

One error people can make when thinking about takeoff speeds is assuming that because we are in a world with some gradual takeoff, it now means we are in a "slow takeoff" world. I think this can lead us to make some mistakes in our strategy. I usually prefer thinking in the following frame: “is there any point in the future where we’ll have a step function that prevents us from doing slow takeoff-like interventions for preventing x-risk?”

In other words, we should be careful about assuming that some "slow takeoff" doesn't have a...

We still don't know if this will be guaranteed to happen, but it seems that OpenAI is considering removing its "regain full control of Microsoft shares once AGI is reached" clause. It seems they want to be able to keep their partnership with Microsoft (and just go full for-profit (?)).

Here's the Financial Times article:

...OpenAI seeks to unlock investment by ditching ‘AGI’ clause with Microsoft

OpenAI is in discussions to ditch a provision that shuts Microsoft out of its most advanced models when the start-up achieves “artificial general intelligence”, as

Imagine there was an AI-suggestion tool that could predict reasons why you agree/disagree-voted on a comment, and you just had to click one of the generated answers to provide a bit of clarity at a low cost.

Easy LessWrong post to LLM chat pipeline (browser side-panel)

I started using Sider as @JaimeRV recommended here. Posting this as a top-level shortform since I think other LessWrong users should be aware of it.

Website with app and subscription option. Chrome extension here.

You can either pay for the monthly service and click the "summarize" feature on a post and get the side chat window started or put your OpenAI API / ChatGPT Pro account in the settings and just cmd+a the post (which automatically loads the content in the chat so you can immediately ask a ...

I like this feature on the EA Forum so sharing here to communicate interest in having it added to LessWrong as well:

The EA Forum has an audio player interface added to the bottom of the page when you listen to a post. In addition, there are play buttons on the left side of every header to make it quick to continue playing from that section of the post.

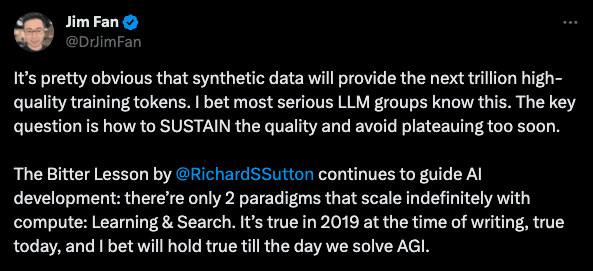

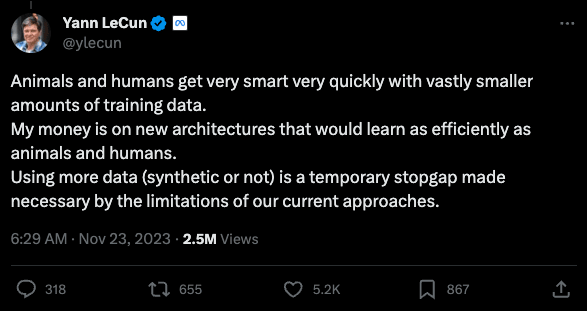

Clarification on The Bitter Lesson and Data Efficiency

I thought this exchange provided some much-needed clarification on The Bitter Lesson that I think many people don't realize, so I figured I'd share it here:

Lecun responds:

Then, Richard Sutton agrees with Yann. Someone asks him:

There are those who have motivated reasoning and don’t know it.

Those who have motivated reasoning, know it, and don’t care.

Finally, those who have motivated reasoning, know it, but try to mask it by including tame (but not significant) takes the other side would approve of.

It seems that @Scott Alexander believes that there's a 50%+ chance we all die in the next 100 years if we don't get AGI (EDIT: how he places his probability mass on existential risk vs catastrophe/social collapse is now unclear to me). This seems like a wild claim to me, but here's what he said about it in his AI Pause debate post:

...Second, if we never get AI, I expect the future to be short and grim. Most likely we kill ourselves with synthetic biology. If not, some combination of technological and economic stagnation, rising totalitarianism + illiberalism

In light of recent re-focus on AI governance to reduce AI risk, I wanted to share a post I wrote about a year ago that suggests an approach using strategic foresight to reduce risks: https://www.lesswrong.com/posts/GbXAeq6smRzmYRSQg/foresight-for-agi-safety-strategy-mitigating-risks-and.

Governments all over the world use frameworks like these. The purpose in this case would be to have documents ready ahead of time in case a window of opportunity for regulation opens up. It’s impossible to predict how things will evolve so instead you focus on what’s plausi...

I'm working on an ultimate doc on productivity I plan to share and make it easy, specifically for alignment researchers.

Let me know if you have any comments or suggestions as I work on it.

Roam Research link for easier time reading.

Google Docs link in case you want to leave comments there.

I’m collaborating on a new research agenda. Here’s a potential insight about future capability improvements:

There has been some insider discussion (and Sam Altman has said) that scaling has started running into some difficulties. Specifically, GPT-4 has gained a wider breadth of knowledge, but has not significantly improved in any one domain. This might mean that future AI systems may gain their capabilities from places other than scaling because of the diminishing returns from scaling. This could mean that to become “superintelligent”, the AI needs to run ...

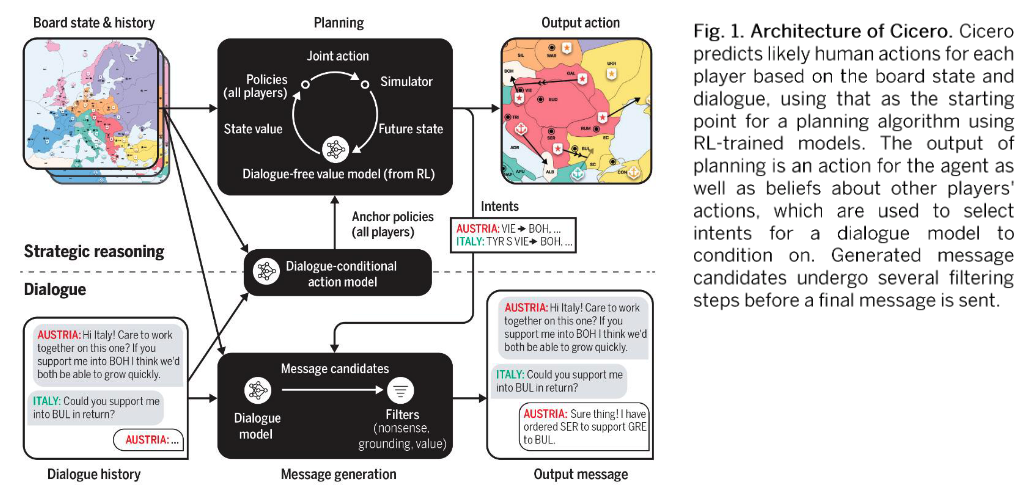

Notes on Cicero

Link to YouTube explanation:

Link to paper (sharing on GDrive since it's behind a paywall on Science): https://drive.google.com/file/d/1PIwThxbTppVkxY0zQ_ua9pr6vcWTQ56-/view?usp=share_link

Top Diplomacy players seem to focus on gigabrain strategies rather than deception

Diplomacy players will no longer want to collaborate with you if you backstab them once. This is so pervasive they'll still feel you are untrustworthy across tournaments. Therefore, it's mostly optimal to be honest and just focus on gigabrain strategies. That said, a smart...

Hey everyone, in collaboration with Apart Research, I'm helping organize a hackathon this weekend to build tools for accelerating alignment research. This hackathon is very much related to my effort in building an "Alignment Research Assistant."

Here's the announcement post:

2 days until we revolutionize AI alignment research at the Research Augmentation Hackathon!

As AI safety researchers, we pour countless hours into crucial work. It's time we built tools to accelerate our efforts! Join us in creating AI assistants that could supercharge the very research w...

Project idea: GPT-4-Vision to help conceptual alignment researchers during whiteboard sessions and beyond

Thoughts?

- Advice on how to get unstuck

- Unclear what should be added on top of normal GPT-4-Vision capabilities to make it especially useful, maybe connect it to local notes + search + ???

- How to make it super easy to use while also being hyper-effective at producing the best possible outputs

- Some alignment researchers don't want their ideas passed through the OpenAI API, and some probably don't care

- Could be used for inputting book pages, papers with figures, ???

What are people’s current thoughts on London as a hub?

- OAI and Anthropic are both building offices there

- 2 (?) new AI Safety startups based in London

- The government seems to be taking AI Safety somewhat seriously (so maybe a couple million gets captured for actual alignment work)

- MATS seems to be on a path to sending scholars to London somewhat consistently

- A train ride away from Oxford and Cambridge

Anything else I’m missing?

I’m particularly curious about whether it’s worth it for independent researchers to go there. Would they actually interact with other r...

AI labs should be dedicating a lot more effort to using AI for cybersecurity as a way to prevent weights or insights from being stolen. Would be good for safety and it seems like it could be a pretty big cash cow too.

If they have access to the best models (or specialized ones), it may be highly beneficial for them to plug them in immediately to help with cybersecurity (perhaps even including noticing suspicious activity from employees).

I don’t know much about cybersecurity so I’d be curious to hear from someone who does.

Small shortform to say that I’m a little sad I haven’t posted as much as I would like to in recent months because of infohazard reasons. I’m still working on Accelerating Alignment with LLMs and eventually would like to hire some software engineer builders that are sufficiently alignment-pilled.

Call To Action: Someone should do a reading podcast of the AGISF material to make it even more accessible (similar to the LessWrong Curated Podcast and Cold Takes Podcast). A discussion series added to YouTube would probably be helpful as well.

The importance of Entropy

Given that there's been a lot of talk about using entropy during sampling of LLMs lately (related GitHub), I figured I'd share a short post I wrote for my website before it became a thing:

Imagine you're building a sandcastle on the beach. As you carefully shape the sand, you're creating order from chaos - this is low entropy. But leave that sandcastle for a while, and waves, wind, and footsteps will eventually reduce it back to a flat, featureless beach - that's high entropy.

Entropy is nature's tendency to move from order to disord...
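To tie the sandcastle picture back to LLM sampling, here is a minimal sketch of Shannon entropy computed over a next-token probability distribution. The two distributions and the function name are made up for illustration; they are not taken from the linked GitHub project or from any particular sampler.

```python
import math


def shannon_entropy(probs):
    """Shannon entropy (in bits) of a discrete probability distribution."""
    # Terms with p == 0 contribute nothing, so skip them to avoid log2(0).
    return -sum(p * math.log2(p) for p in probs if p > 0)


# Hypothetical next-token distributions over a 4-token vocabulary.
confident = [0.97, 0.01, 0.01, 0.01]  # the "sandcastle": one token dominates
uncertain = [0.25, 0.25, 0.25, 0.25]  # the "flat beach": every token equally likely

print(f"confident: {shannon_entropy(confident):.3f} bits")
print(f"uncertain: {shannon_entropy(uncertain):.3f} bits")
```

The peaked distribution comes out with low entropy and the uniform one with the maximum for four outcomes (2 bits), which is the kind of signal a sampler could use to tell when the model is unsure about the next token.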

Something I've been thinking about lately: For 'scarcity of compute' reasons, I think it's fairly likely we end up in a scaffolded AI world where one highly intelligent model (that requires much more compute) will essentially delegate tasks to weaker models as long as it knows that the weaker (maybe fine-tuned) model is capable of reliably doing that task.

Like, let's say you have a weak doctor AI that can basically reliably answer most medical questions. However, it knows when it is less confident in a diagnosis, so it will reach out to the powerful AI whe...
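A rough sketch of the kind of delegation I have in mind, written as confidence-based routing. Everything here is hypothetical: the `Answer` type, the `route` function, the threshold, and the toy "doctor" functions are stand-ins, and the sketch assumes the weak model's self-reported confidence is reasonably calibrated, which is a big assumption in practice.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Answer:
    text: str
    confidence: float  # self-reported confidence in [0, 1] (assumed calibrated)
    source: str        # which model produced the answer


Model = Callable[[str], Answer]


def route(question: str, weak: Model, strong: Model, threshold: float = 0.8) -> Answer:
    """Try the cheap model first; escalate to the expensive one when it is unsure."""
    answer = weak(question)
    if answer.confidence >= threshold:
        return answer
    return strong(question)


# Toy stand-ins for real models (purely illustrative).
def weak_doctor(question: str) -> Answer:
    if "cold" in question.lower():
        return Answer("Rest and fluids.", confidence=0.95, source="weak")
    return Answer("Not sure.", confidence=0.30, source="weak")


def strong_doctor(question: str) -> Answer:
    return Answer("Detailed differential diagnosis.", confidence=0.90, source="strong")


easy = route("What helps with a common cold?", weak_doctor, strong_doctor)
hard = route("What explains these unusual symptoms?", weak_doctor, strong_doctor)
print(easy.source, hard.source)
```

The expensive model only gets called when the cheap one reports low confidence, so most of the compute budget goes to the hard cases. The same loop could run in the other direction, with the strong model handing tasks down to weak models it trusts.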

you need to be flow state maxxing. you curate your environment, prune distractions. make your workspace a temple, your mind a focused laser. you engineer your life to guard the sacred flow. every notification is an intruder, every interruption a thief. the world fades, the task is the world. in flow, you're not working, you're being. in the silent hum of concentration, ideas bloom. you're not chasing productivity, you're living it. every moment outside flow is a plea to return. you're not just doing, you're flowing. the mundane transforms into the extraord...

Regarding Q* (and Zero, the other OpenAI AI model you didn't know about)

Let's play word association with Q*:

From Reuters article:

...The maker of ChatGPT had made progress on Q* (pronounced Q-Star), which some internally believe could be a breakthrough in the startup's search for superintelligence, also known as artificial general intelligence (AGI), one of the people told Reuters. OpenAI defines AGI as AI systems that are smarter than humans. Given vast computing resources, the new model was able to solve certain mathematical problems, the person said on

Beeminder + Freedom are pretty goated as productivity tools.

I’ve been following Andy Matuschak’s strategy and it’s great/flexible: https://blog.andymatuschak.org/post/169043084412/successful-habits-through-smoothly-ratcheting

New tweet about the world model (map) paper:

Sub-tweeting because I don't want to rain on a poor PhD student who should have been advised better, but: that paper about LLMs having a map of the world is perhaps what happens when a famous physicist wants to do AI research without caring to engage with the existing literature.

I haven’t looked into the paper in question yet, but I have been concerned about researchers taking old ideas about AI risk and looking to prove things that might not be there yet as an AI risk communication point. Then, being overconfide...

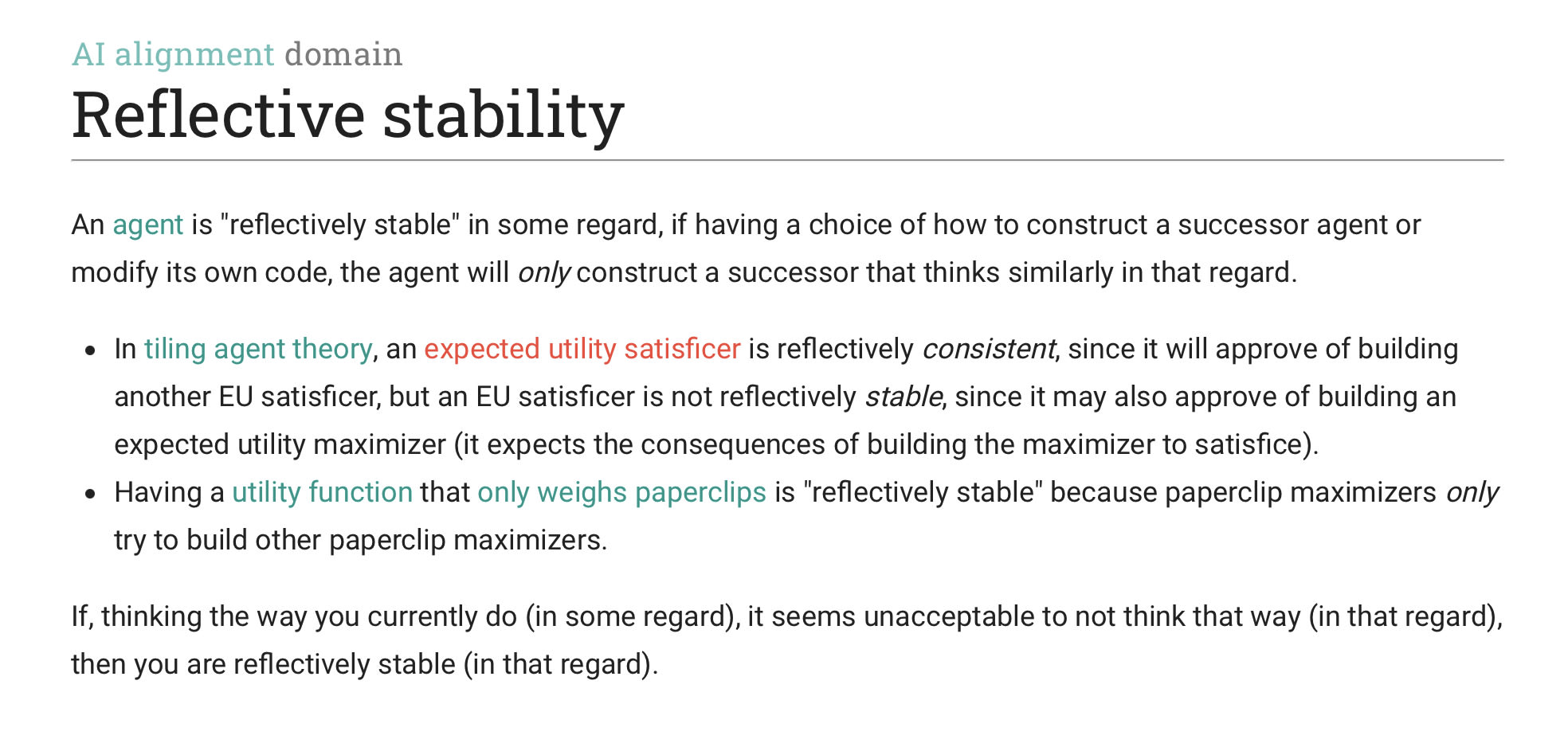

I expect that my values would be different if I was smarter. Personally, if something were to happen and I’d get much smarter and develop new values, I’m pretty sure I’d be okay with that as I expect I’d have better, more refined values.

Why wouldn’t an AI also be okay with that?

Is there something wrong with how I would be making a decision here?

Do the current kinds of agents people plan to build have “reflective stability”? If you say yes, why is that?

“We assume the case that AI (intelligences in general) will eventually converge on one utility function. All sufficiently intelligent intelligences born in the same reality will converge towards the same behaviour set. For this reason, if it turns out that a sufficiently advanced AI would kill us all, there’s nothing that we can do about it. We will eventually hit that level of intelligence.

Now, if that level of intelligence doesn’t converge towards something that kills us all, we are safer in a world where AI capabilities (of the current regime) essent...

I'm still in some sort of transitory phase where I'm deciding where I'd like to live long term. I moved to Montreal, Canada recently because I figured I'd try working as an independent researcher here and see if I can get MILA/Bengio to do some things for reducing x-risk.

Not long after I moved here, Hinton started talking about AI risk too, and he's in Toronto which is not too far from Montreal. I'm trying to figure out the best way I could leverage Canada's heavyweights and government to make progress on reducing AI risk, but it seems like there's a lot mor...

I gave a talk about my Accelerating Alignment with LLMs agenda about 1 month ago (which is basically a decade in AI tools time). Part of the agenda covered (publicly) here.

I will maybe write an actual post about the agenda soon, but would love to have some people who are willing to look over it. If you are interested, send me a message.

Someone should create an “AI risk arguments” flowchart that serves as a base for simulating a conversation with skeptics or the general public. Maybe a set of flashcards to go along with it.

I want to have the sequence of arguments solid enough in my head so that I can reply concisely (snappy) if I ever end up in a debate, roundtable or on the news. I’ve started collecting some stuff since I figured I should take initiative on it.

Text-to-Speech tool I use for reading more LW posts and papers

I use Voice Dream Reader. It's great even though the TTS voice is still robotic. For papers, there's a feature that lets you skip citations so the reading is more fluid.

I've mentioned it before, but I was just reminded that I should share it here because I just realized that if you load the LW post with "Save to Voice Dream", it will also save the comments so I can get TTS of the comments as well. Usually these tools only include the post, but that's annoying because there's a lot of good stuff...

I honestly feel like some software devs should probably still keep their high-paying jobs instead of going into alignment and just donate a bit of time and programming expertise to help independent researchers if they want to start contributing to AI Safety.

I think we can probably come up with engineering projects that are interesting and low-barrier-to-entry for software engineers.

I also think providing “programming coaching” to some independent researchers could be quite useful. Whether that’s for getting them better at coding up projects efficiently or ...

Differential Training Process

I've been ruminating on an idea ever since I read the section on deception in "The Core of the Alignment Problem is..." from my colleagues in SERI MATS.

Here's the important part:

...When an agent interacts with the world, there are two possible ways the agent makes mistakes:

- Its values were not aligned with the outer objective, and so it does something intentionally wrong,

- Its world model was incorrect, so it makes an accidental mistake.

Thus, the training process of an AGI will improve its values or its world model, and since i

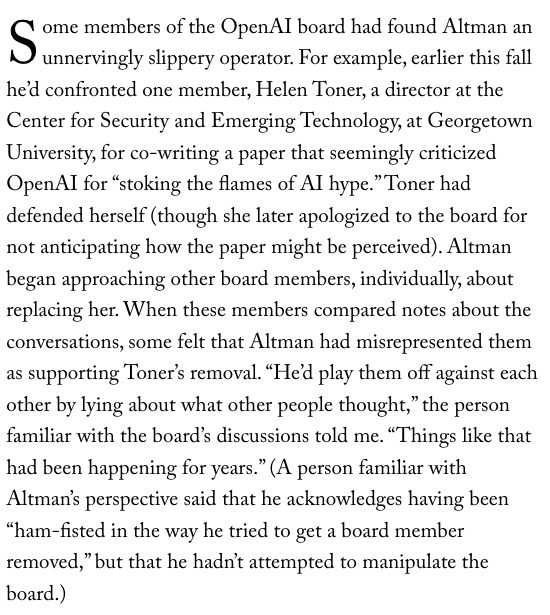

More information about alleged manipulative behaviour of Sam Altman

Text from article (along with follow-up paragraphs):

...Some members of the OpenAI board had found Altman an unnervingly slippery operator. For example, earlier this fall he’d confronted one member, Helen Toner, a director at the Center for Security and Emerging Technology, at Georgetown University, for co-writing a paper that seemingly criticized OpenAI for “stoking the flames of AI hype.” Toner had defended herself (though she later apologized to the board for not anticipating how the p

On generating ideas for Accelerating Alignment

There's this Twitter thread that I saved a while ago that is no longer up. It's about generating ideas for startups. However, I think the insight from the thread carries over well enough to thinking about ideas for Accelerating Alignment. Particularly, being aware of what is on the cusp of being usable so that you can take advantage of it as soon as it becomes available (even do the work beforehand).

For example, we are surprisingly close to human-level text-to-speech (have a look at Apple's new model for audiobook...

Should EA / Alignment offices make it ridiculously easy to work remotely with people?

One of the main benefits of being in person is that you end up in spontaneous conversations with people in the office. This leads to important insights. However, given that there's a level of friction for setting up remote collaboration, only the people in those offices seem to benefit.

If it were ridiculously easy to join conversations for lunch or whatever (touch of a button rather than pulling up a laptop and opening a Zoom session), then would it allow for a stronger cr...

Detail about the ROME paper I've been thinking about

In the ROME paper, when you prompt the language model with "The Eiffel Tower is located in Paris", you have the following:

- Subject token(s): The Eiffel Tower

- Relationship: is located in

- Object: Paris

Once a model has seen the subject token(s) (e.g. Eiffel Tower), it will retrieve a whole bunch of factual knowledge (not just one thing since it doesn’t know you will ask for something like location after the subject token) from the MLPs and 'write' it into the residual stream for the attention modules at the final...

Preventing capability gains (e.g. situational awareness) that lead to deception

Note: I'm at the crackpot idea stage of thinking about how model editing could be useful for alignment.

One worry with deception is that the AI will likely develop a sufficiently good world model to understand it is in a training loop before it has fully aligned inner values.

The thing is, if the model was aligned, then at some point we'd consider it useful for the model to have a good enough world model to recognize that it is a model. Well, what if you prevent the model from bei...

Can you give concrete use-cases that you imagine your project would lead to helping alignment researchers? Alignment researchers have wildly varying styles of work outputs and processes. I assume you aim to accelerate a specific subset of alignment researchers (those focusing on interpretability and existing models and have an incremental / empirical strategy for solving the alignment problem).