Just a few quick comments about my "integer whose square is between 15 and 30" question (search for my name in Zvi's post to find his discussion):

- The phrasing of the question I now prefer is "What is the least integer whose square is between 15 and 30", because that makes it unambiguous that the answer is -5 rather than 4. (This is a normal use of the word "least", e.g. in competition math, that the model is familiar with.) This avoids ambiguity about which of -5 and 4 is "smaller", since -5 is less but 4 is smaller in magnitude.

- This Gemini model answers -5 to both phrasings. As far as I know, no previous model ever said -5 regardless of phrasing, although someone said o1 Pro gets -5. (I don't have a subscription to o1 Pro, so I can't independently check.)

- I'm fairly confident that a majority of elite math competitors (top 500 in the US, say) would get this question right in a math competition (although maybe not in a casual setting where they aren't on their toes).

- But also this is a silly, low-quality question that wouldn't appear in a math competition.

- Does a model getting this question right say anything interesting about it? I think a little. There's a certain skill of being careful to not make assumptions (e.g. that the integer is positive). Math competitors get better at this skill over time. It's not that straightforward to learn.

- I'm a little confused about why Zvi says that the model gets it right in the screenshot, given that the model's final answer is 4. But it seems like the model snatched defeat from the jaws of victory? Like if you cut off the very last sentence, I would call it correct.

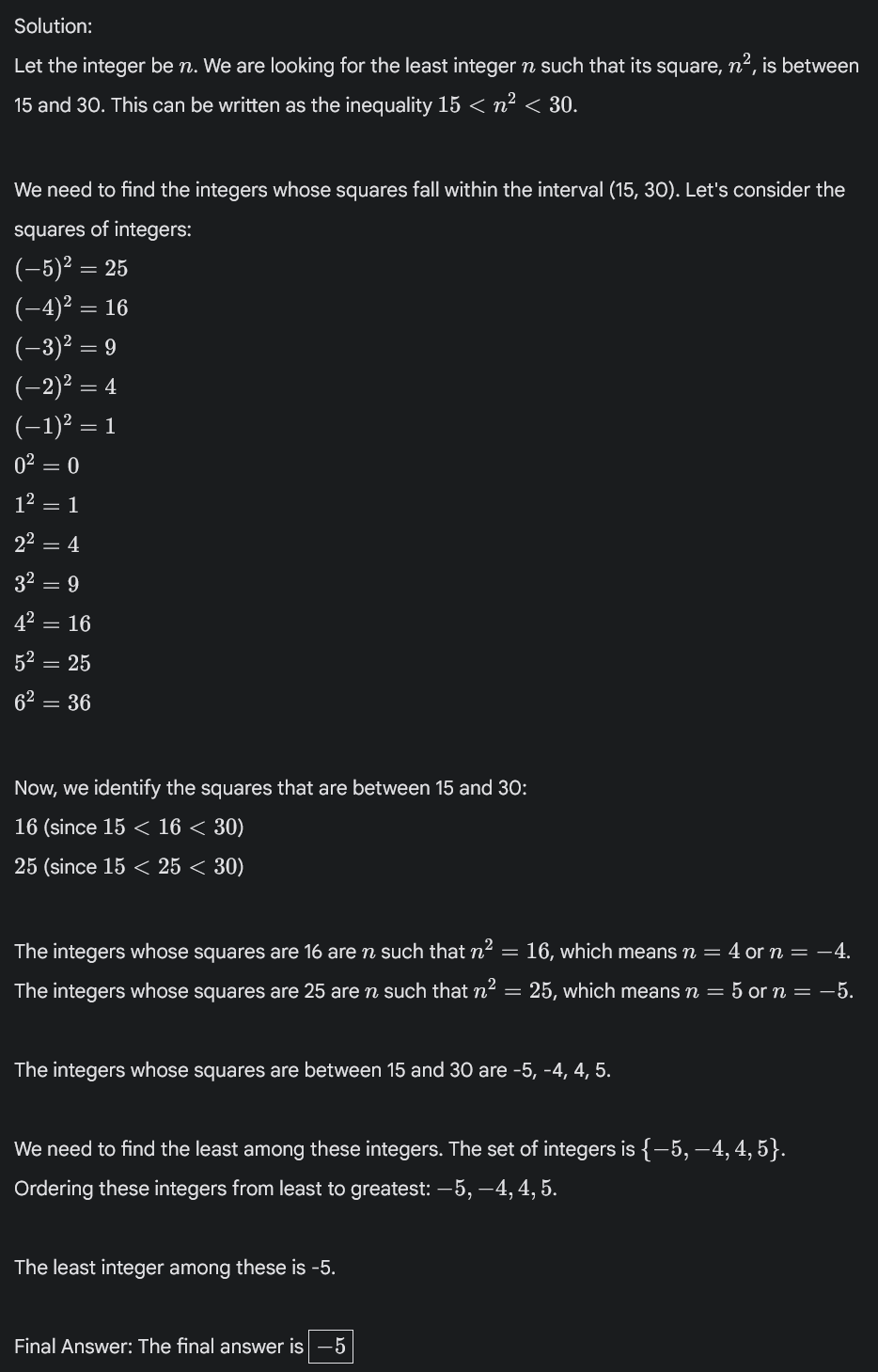

- Here's the output I get:
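For reference, the arithmetic behind the question is easy to check by brute force. This is a minimal sketch, and the helper name is hypothetical. Since 15 and 30 are not perfect squares, "between" gives the same answer whether it is read as inclusive or exclusive:

```python
def integers_with_square_between(low: int, high: int) -> list[int]:
    """All integers n with low < n*n < high, in ascending order."""
    # |n| < sqrt(high) <= high for high >= 1, so this range covers every candidate.
    return [n for n in range(-high, high + 1) if low < n * n < high]


candidates = integers_with_square_between(15, 30)
print(candidates)                           # [-5, -4, 4, 5]
print(min(candidates))                      # the least such integer: -5
print(min(n for n in candidates if n > 0))  # 4, if you silently assume n > 0
```

The last line shows the trap: the answer 4 only appears once you quietly restrict the search to positive integers.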

Miles Brundage: Trying to imagine aspirin company CEOs signing an open letter saying “we’re worried that aspirin might cause an infection that kills everyone on earth – not sure of the solution” and journalists being like “they’re just trying to sell more aspirin.”

It seems more like AI being pattern-matched to the supplements industry.

- Marketed as performance/productivity-enhancing

- Qualitative anecdotes + suspect quantitative metrics

- Unregulated industry full of hype + money

- Products all seem pretty similar to newcomers, aficionados claim huge differences but don't all agree with each other

- Striver-coded

- Weakens correlation between innate human capability and measured individual performance

As a consumer I would probably only pay about $250 for the Unitree B2-W wheeled robot dog, because my only use for it is that I want to ride it like a skateboard, and I'm not sure it can do even that.

I see two major non-consumer applications: street-to-door delivery (it can handle stairs and curbs), and war (it can carry heavy things, e.g., a gun, over long distances and uneven terrain).

So, Unitree... do they receive any subsidies?

I had a conversation with Claude today about something I was researching for work. I tried my best to phrase questions neutrally and not bias the responses, and it pretty much re-derived a lot of the things I've been trying to tell my coworkers and clients for years. I'd always wondered if maybe I was crazy and missing the obvious counterarguments, but now I wonder that a little less. I still don't expect it to really convince anyone who wasn't already on board.

It reminded me a bit of the old joke about the math professor who says, "This is trivial to prove." A student asks, "Is it really trivial?" The professor stops lecturing and starts scribbling notes. Half an hour later he looks up and says, "Yes, it's trivial," then moves on with the rest of the planned lecture.

I'm very much looking forward to when I can upload this kind of transcript to something like Deep Research and say "Write a much more detailed report with lots of references about all of these questions. Also make me a slide deck and talk track to present it to these different kinds of audiences."

The year in models certainly finished off with a bang.

In this penultimate week, we get o3, which purports to give us vastly more efficient performance than o1, and also to allow us to choose to spend vastly more compute if we want a superior answer.

o3 is a big deal, making big gains on coding tests, ARC and some other benchmarks. How big a deal is difficult to say given what we know now. It’s about to enter full-fledged safety testing.

o3 will get its own post soon, and I’m also pushing back coverage of Deliberative Alignment, OpenAI’s new alignment strategy, to incorporate into that.

We also got DeepSeek v3, a roughly Sonnet-strength model that DeepSeek claims to have trained for only $6 million in compute, with 37b active parameters per token (671b total via mixture of experts).

DeepSeek v3 gets its own brief section with the headlines, but full coverage will have to wait a week or so for reactions and for me to read the technical report.

Both are potential game changers, both in their practical applications and in terms of what their existence predicts for our future. It is also too soon to know if either of them is the real deal.

Both are mostly not covered here quite yet, due to the holidays. Stay tuned.


Language Models Offer Mundane Utility

How does your company make best use of AI agents? Austin Vernon frames the issue well: AIs are super fast, but they need proper context. So if you want to use AI agents, you’ll need to ensure they have access to context, in forms that don’t bottleneck on humans. Take the humans out of the loop, minimize meetings and touch points. Put all your information into written form, such as within wikis. Have automatic tests and approvals, but have the AI call for humans when needed via ‘stop work authority’ – I would flip this around and let the humans stop the AIs, too.

That all makes sense, and not only for corporations. If there’s something you want your future AIs to know, write it down in a form they can read, and try to design your workflows such that you can minimize human (your own!) touch points.

To what extent are you living in the future? This is the CEO of Playground AI, and the timestamp was Friday:

How do you educate yourself for a completely new world?

What will citizenship mean in the age of AI? I have absolutely no idea. So how do you prepare for that? Largely the same goes for wellbeing. A lot of this could be thought of as: focus on the general and the adaptable, and focus less on the specific, including things specifically for jobs and other current forms of paid work. You want to be creative and useful and flexible and able to roll with the punches.

That of course assumes that you are taking the world as given, rather than trying to change the course of history. In which case, there’s a very different calculation.

Large parts of every job are pretty dumb.

ChatGPT is a left-leaning midwit, so Paul Graham is using it to see what parts of his new essay such midwits will dislike, and which ones you can get it to acknowledge are true. I note that you could probably use Claude to simulate whatever Type of Guy you would like, if you have ordinary skill in the art.

Language Models Don’t Offer Mundane Utility

Strongly agree with this:

In Cursor I made an effort to split up files exactly because I found I had to always scan the file being changed to ensure it wasn’t about to go silently delete anything. The way I was doing it you didn’t have to worry it was modifying or deleting other files.

On the plus side, now I know how to do reasonable version control.
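The manual check in that quote, scanning each AI edit for silent deletions, is easy to partially automate. A minimal sketch using Python's standard `difflib`; the helper is hypothetical (not a Cursor feature), and an ordinary version-control diff does the same job:

```python
import difflib


def removed_lines(original: str, proposed: str) -> list[str]:
    """Return lines of `original` that `proposed` deletes or overwrites.

    Hypothetical helper: run it on an AI-proposed edit and eyeball every
    returned line before accepting, instead of rereading the whole file.
    """
    a, b = original.splitlines(), proposed.splitlines()
    matcher = difflib.SequenceMatcher(a=a, b=b, autojunk=False)
    removed: list[str] = []
    for tag, i1, i2, _j1, _j2 in matcher.get_opcodes():
        # 'delete' drops lines outright; 'replace' swaps them for different text.
        if tag in ("delete", "replace"):
            removed.extend(a[i1:i2])
    return removed


before = "def handler(req):\n    check_auth(req)\n    return serve(req)\n"
after = "def handler(req):\n    return serve(req)\n"
print(removed_lines(before, after))  # ['    check_auth(req)']
```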

The uncanny valley problem here is definitely a thing.

Flash in the Pan

The latest rival to at least o1-mini is Gemini-2.0-Flash-Thinking, which I’m tempted to refer to (because of reasons) as gf1.

Gemini 2.0 Flash Thinking is now essentially tied at the top of the overall leaderboard with Gemini-Exp-1206, which is essentially a beta of Gemini Pro 2.0. This tells us something about the model, but also reinforces that this metric is bizarre now. It puts us in a strange spot. What is the scenario where you will want Flash Thinking rather than o1 (or o3!) and also rather than Gemini Pro, Claude Sonnet, Perplexity or GPT-4o?

One cool thing about Thinking is that (like DeepSeek’s Deep Thought) it explains its chain of thought much better than o1.

Deedy was impressed.

That result… did not replicate when I tried it. It went off the rails, and it went off them hard. And it went off them in ways that make me skeptical that you can use this for anything of the sort. Maybe Deedy got lucky?

Other reports I’ve seen are less excited about quality, and when o3 got announced it seemed everyone got distracted.

What about Gemini 2.0 Experimental (i.e. the beta of Gemini 2.0 Pro, aka Gemini-1206)?

It’s certainly a substantial leap over previous Gemini Pro versions and it is atop the Arena. But I don’t see much practical eagerness to use it, and I’m not sure what the use case is there where it is the right tool.

Eric Neyman is impressed:

That one did replicate for me, and the logic is fine, but wow do some models make life a little tougher than it is. Think faster and harder, not smarter, I suppose:

I mean, yes, that’s all correct, but… wow.

Flash isn’t that much worse than GPT-4o in many ways, but certainly it could be better. Presumably the next step is to plug in Gemini Pro 2.0 and see what happens?

Teortaxes was initially impressed, but upon closer examination is no longer impressed.

The Six Million Dollar Model

Having no respect for American holidays, DeepSeek dropped their v3 today.

If this performs halfway as well as its evals, this was a rather stunning success.

It’s a mixture of experts model with 671b total parameters, 37b active per token.
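Those numbers imply that only a small slice of the network runs on any given token. A quick back-of-envelope sketch; the 2-FLOPs-per-parameter rule of thumb and the comparison to a hypothetical dense model of the same size are assumptions added for illustration, not figures from DeepSeek:

```python
TOTAL_PARAMS = 671e9   # all experts combined (figure from the text)
ACTIVE_PARAMS = 37e9   # parameters used for each token (figure from the text)

active_fraction = ACTIVE_PARAMS / TOTAL_PARAMS

# Rule of thumb (an approximation): a forward pass costs about
# 2 FLOPs per parameter actually used for that token.
flops_per_token_moe = 2 * ACTIVE_PARAMS
flops_per_token_dense = 2 * TOTAL_PARAMS  # hypothetical dense model of equal size

print(f"{active_fraction:.1%} of parameters active per token")  # 5.5% ...
print(f"~{flops_per_token_dense / flops_per_token_moe:.1f}x fewer FLOPs per token than dense")
```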

As always, not so fast. DeepSeek is not known to chase benchmarks, but one never knows the quality of a model until people have a chance to bang on it a bunch.

If they did train a Sonnet-quality model for $6 million in compute, then that will change quite a lot of things.

Essentially no one has reported back on what this model can do in practice yet, and it’ll take a while to go through the technical report, and more time to figure out how to think about the implications. And it’s Christmas.

So: Check back later for more.

And I’ll Form the Head

Increasingly the correct solution to ‘what LLM or other AI product should I use?’ is ‘you should use a variety of products depending on your exact use case.’

This is mostly the same workflow I used before o1, when there was only Sonnet. I’d discuss to form a plan, then use that to craft a request, then make the edits. The swap doesn’t seem to make things much trickier; the logistical trick is getting all the code implementation automated.

Huh, Upgrades

ChatGPT picks up integration with various apps on Mac including Warp, IntelliJ IDEA, PyCharm, Apple Notes, Notion, Quip and more, including via voice mode. That gives you access to outside context, including an IDE and a command line and also your notes. Windows (and presumably more apps) coming soon.

Unlimited Sora available to all Plus users on the relaxed queue over the holidays, while the servers are otherwise less busy.

Requested upgrade: Evan Conrad requests making voice mode on ChatGPT mobile show the transcribed text. I strongly agree: voice modes should show transcribed text, show a transcript afterward, and show what the AI is saying. There is no reason not to do these things. Looking at you too, Google. The head of applied research at OpenAI replied ‘great idea,’ so hopefully we get this one.

o1 Reactions

Dean Ball is an o1 and o1 pro fan for economic history writing, saying they’re much more creative and cogent at combining historic facts with economic analysis versus other models.

This seems like an emerging consensus of many, except different people draw different boundaries around the math/code category (e.g. Tyler Cowen includes economics):

Well, yeah, because it seems like it is GPT-4o under the hood?

Gallabytes is embracing the wait.

Here’s a skeptical take.

I say Damek got his $200 worth, no?

If you’re using o1 a lot, removing the limits there is already worth $200/month, even if you rarely use o1 Pro.

There’s a phenomenon where people think about cost and value in terms of typical cost, rather than thinking in terms of marginal benefit. Buying relatively expensive but in absolute terms cheap things is often an amazing play – there are many things where 10x the price for 10% better is an amazing deal for you, because your consumer surplus is absolutely massive.
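A toy calculation of that marginal-benefit point, with hypothetical numbers: a $20 tier worth $5,000 a month to a heavy user, versus a $200 tier that is 10% better. Only the $200 price comes from the text; the rest is made up for illustration:

```python
def surplus(value: int, price: int) -> int:
    """Consumer surplus: what the product is worth to you minus its price."""
    return value - price


def break_even_value(cheap_price: int, premium_price: int, improvement_pct: int) -> float:
    """Monthly value of the cheap tier above which the premium tier wins.

    Premium wins when value * improvement_pct / 100 > premium_price - cheap_price.
    """
    return (premium_price - cheap_price) * 100 / improvement_pct


# Hypothetical: cheap tier worth $5,000/month; premium is 10% better at 10x the price.
value = 5_000
cheap = surplus(value, 20)                  # 4980
premium = surplus(value * 110 // 100, 200)  # 5300
print(cheap, premium, break_even_value(20, 200, 10))  # 4980 5300 1800.0
```

Under these assumptions the 10x price pays for itself for anyone who gets more than $1,800 a month out of the cheap tier.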

Also, once you take 10 seconds, there’s not much marginal cost to taking 10 minutes, as I learned with Deep Research. You ask your question, you tab out, you do something else, you come back later.

That said, I’m not currently paying the $200, because I don’t find myself hitting the o1 limits, and I’d mostly rather use Claude. If it gave me unlimited uses in Cursor I’d probably slam that button the moment I have the time to code again (December has been completely insane).

Fun With Image Generation

I don’t know that this means anything but it is at least fun.

There are some patterns here, especially that more powerful models seem to converge on various shades of blue, whereas less powerful models are all over the place. As I understand it, this isn’t testing orthogonality in the sense of ‘all powerful minds prefer blue’; rather, it is ‘by default sufficiently powerful minds trained in the way we typically train them end up preferring blue.’

I wonder if this could be used as a quick de facto model test in some way.

There was somehow a completely fake ‘true crime’ story about an 18-year-old who was supposedly paid to have sex with women in his building where the victim’s father was recording videos and selling them in Japan… except none of that happened and the pictures are AI fakes?

Introducing

Google introduces LearnLM, available for preview in Google AI Studio, designed to facilitate educational use cases, especially in science. They say it ‘outperformed other leading AI models when it comes to adhering to the principles of learning science’ which does not sound like something you would want Feynman hearing you say. It incorporates search, YouTube, Android and Google Classroom.

Sure, sure. But is it useful? It was supposedly going to be able to do automated grading, handle routine paperwork, plan curriculums, track student progress, personalize learning paths and so on, but any LLM can presumably do all those things if you set it up properly.

They Took Our Jobs

This sounds great, totally safe and reliable, other neat stuff like that.

He’s obviously right about this. It’s too convenient, too much faster. Indeed, I expect we’ll see a clear division between ‘code you can have the AI write’ which happens super fast, and ‘code you cannot let the AI write’ because of corporate policy or security issues, both legit and not legit, which happens the old, much slower way.

Complement versus supplement, economic not assuming the conclusion edition.

I can see us spending time in #1. As Roon says, AI capabilities progress has been spiky, with some human-easy tasks being hard and some human-hard tasks being easy. So the 3→1 path makes some sense, if progress isn’t too quick, including if the high complexity tasks start to cost ‘real money’ as per o3 so choosing the right questions and tasks becomes very important. Alternatively, we might get our act together enough to restrict certain cognitive tasks to humans even though AIs could do them, either for good reasons or rent seeking reasons (or even ‘good rent seeking’ reasons?) to keep us in that scenario.

But yeah, the default is a rapid transition to #4, and for that to happen to all labor, not only cognitive labor. Robotics is hard, but it’s not impossible.

One thing that has clearly changed is AI startups have very small headcounts.

An excellent reason we still have our jobs is that people really aren’t willing to invest in getting AI to work. Even when they know it exists, if it doesn’t work right away they typically give up:

PoliMath reports it is very hard out there trying to find tech jobs, and public pipelines for applications have stopped working entirely. AI presumably has a lot to do with this, but the weird part is his report that there have been a lot of people who wanted to hire him, but couldn’t find the authority.

Get Involved

Benjamin Todd points out what I talked about after my latest SFF round: the dynamics of nonprofit AI safety funding mean that there are currently great opportunities to donate.

In Other AI News

After some negotiation with the moderator Raymond Arnold, Claude (under Janus’s direction) is permitted to comment on Janus’s Simulators post on LessWrong. It seems clear that this particular comment should be allowed, and also that it would be unwise to have too general an ‘AIs can post on LessWrong’ policy, mostly for the reasons Raymond explains in the thread. One needs a coherent policy. It seems Claude was somewhat salty about the policy of ‘only believe it when the human vouches.’ For now, ‘let Janus-directed AIs do it so long as he approves the comments’ seems good.

Jan Kulveit offers us a three-layer phenomenological model of LLM psychology, based primarily on Claude, not meant to be taken literally:

In this frame, a self-aware character layer leads to reasoning about the model’s own reasoning, and to goal-driven behavior, with everything that follows from those. Jan then thinks the ground layer can also become self-aware.

I don’t think this is technically an outright contradiction of Andreessen’s ‘huge if true’ claims that the Biden administration said it would conspire to ‘totally control’ AI and put it in the hands of 2-3 companies, and that AI startups ‘wouldn’t be allowed.’ But Sam Altman reports never having heard anything of the sort, and quite reasonably says ‘I don’t even think the Biden administration is competent enough to’ do it. In theory they could both be telling the truth – perhaps the Biden administration told Andreessen about this insane plan directly, despite telling him being deeply stupid, and also hid it from Altman despite that also then being deeply stupid – but mostly, yeah, at least one of them is almost certainly lying.

Benjamin Todd asks how OpenAI has maintained their lead despite losing so many of their best researchers. Part of it is that they’ve lost all their best safety researchers, but they only lost Radford in December, and they’ve gone on a full hiring binge.

In terms of traditionally trained models, though, it seems like they are now actively behind. I would much rather use Claude Sonnet 3.5 (or Gemini-1206) than GPT-4o, unless I needed something in particular from GPT-4o. On the low end, Gemini Flash is clearly ahead. OpenAI’s attempts to directly go beyond GPT-4o have, by all media accounts, failed, and Anthropic is said to be sitting on Claude Opus 3.5.

OpenAI does have o1 and soon o3, and no one else has gotten there yet. No, Google’s Flash Thinking and DeepSeek’s Deep Thought do not much count.

As far as I can tell, OpenAI has made two highly successful big bets – one on scaling GPTs, and now one on the o1 series. Good choices, and both instances of throwing massively more compute at a problem, and executing well. Will this lead persist? We shall see. My hunch is that it won’t unless the lead is self-sustaining due to low-level recursive improvements.

You See an Agent, You Run

Anthropic offers advice on building effective agents, and when to use them versus use workflows that have predesigned code paths. The emphasis is on simplicity. Do the minimum to accomplish your goals. Seems good for newbies, potentially a good reminder for others.
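The distinction between the two can be sketched roughly as follows. This is a minimal illustration, not code from Anthropic's post: `model` stands in for any LLM call, and the function names, prompts, tool names and step cap are all hypothetical.

```python
from typing import Callable

# Stand-ins (hypothetical): an LLM call and a tool both map text to text.
Model = Callable[[str], str]
Tool = Callable[[str], str]


def run_workflow(model: Model, ticket: str) -> str:
    """Predesigned code path: the developer fixes the steps and their order."""
    summary = model(f"Summarize: {ticket}")
    if not summary:  # programmatic gate between steps
        return "escalate to human"
    return model(f"Draft a reply to: {summary}")


def run_agent(model: Model, tools: dict[str, Tool], task: str, max_steps: int = 5) -> str:
    """Agent loop: the model chooses the next tool each turn until it says DONE."""
    context = task
    for _ in range(max_steps):  # hard cap so a confused agent cannot loop forever
        choice = model(f"Tools: {sorted(tools)}. Reply with one name or DONE.\n{context}")
        if choice not in tools:  # DONE, or an unknown tool name: stop here
            break
        context += "\n" + tools[choice](context)
    return context
```

The workflow is easier to test and predict; the agent trades that away for flexibility, which is the kind of trade-off worth weighing before reaching for agents.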

Another One Leaves the Bus

A lot of people have left OpenAI.

Usually it’s a safety researcher. Not this time. This time it’s Alec Radford.

He’s the Canonical Brilliant AI Capabilities Researcher, whose love is by all reports doing AI research. He is leaving ‘to do independent research.’

This is especially weird given he had to have known about o3, which seems like an excellent reason to want to do your research inside OpenAI.

So, well, whoops?

Quiet Speculations

In what Tyler Cowen calls ‘one of the better estimates in my view,’ an OECD working paper estimates total factor productivity growth at an annualized 0.25%-0.6% (0.4%-0.9% for labor). Tyler posted that on Thursday, the day before o3 was announced, so revise that accordingly. Even without o3 and assuming no substantial frontier model improvements from there, I felt this was clearly too low, although it is higher than many economist-style estimates. One day later we had (the announcement of) o3.

Fully would of course go completely crazy. That would be that. But even a dramatic speedup would be a pretty big deal, and also fully would then not be so far behind.

Reminder of the Law of Conservation of Expected Evidence, if you conclude ‘I think we’re in for some big surprises’ then you should probably update now.
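Stated formally, with H a hypothesis and E the evidence you might observe, the law says your current credence already equals the probability-weighted average of the credences you expect to hold afterward:

$$
P(H) = P(H \mid E)\,P(E) + P(H \mid \neg E)\,P(\neg E)
$$

So if you are confident which direction the evidence will push you, that shift belongs in your current estimate.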

However, this is not fully or always the case. One very reasonable prior is that big surprises arrive as a Poisson process with an unknown frequency, with the magnitude of each surprise drawn from a power-law distribution.
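One way to make that kind of prior concrete is a small simulation. The Gamma prior on the yearly rate, the Pareto exponent and every parameter value below are assumptions chosen for illustration, not anything from the text:

```python
import math
import random


def sample_poisson(rng: random.Random, lam: float) -> int:
    """Draw from Poisson(lam) with Knuth's multiplication method (fine for small lam)."""
    threshold = math.exp(-lam)
    k, p = 0, 1.0
    while True:
        p *= rng.random()
        if p <= threshold:
            return k
        k += 1


def simulate_surprises(n_years: int, seed: int = 0) -> tuple[float, list[list[float]]]:
    """Return (yearly rate, surprise magnitudes per year) for one simulated world.

    Assumed for illustration: the unknown rate is drawn once from a
    Gamma(shape=2, scale=1) prior, and each surprise's size is Pareto(alpha=1.5).
    """
    rng = random.Random(seed)
    rate = rng.gammavariate(2.0, 1.0)  # the frequency itself is uncertain
    years = [
        [rng.paretovariate(1.5) for _ in range(sample_poisson(rng, rate))]
        for _ in range(n_years)
    ]
    return rate, years


rate, years = simulate_surprises(n_years=20_000)
mean_count = sum(len(y) for y in years) / len(years)
biggest = max((m for y in years for m in y), default=1.0)
print(f"rate={rate:.2f} mean surprises/year={mean_count:.2f} biggest={biggest:.0f}")
```

Averaged over many years the count of surprises tracks the rate, yet the largest individual surprise comes from rare draws in the heavy tail, which is the sense in which expected surprises still arrive as surprises.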

That still means every big surprise is still a big surprise, the same way that if you expect.

You should feel that shock now if you haven’t, then slowly undo some of that shock every day that the estimated date of that gets later, then have some of the shock left for when it suddenly becomes zero days or the timeline gets shorter. Updates for everyone.

Claims about consciousness, related to o3. I notice I am confused about such things.

The Verge says 2025 will be the year of ~~AI agents~~ the smart lock? I mean, okay, I suppose they’ll get better, but I have a feeling we’ll be focused elsewhere.

Ryan Greenblatt, author of the recent Redwood/Anthropic paper, predicts 2025:

Lock It In

I continue to wonder how much this will matter:

Also like relationships with humans, including employees and friends, and so on.

My guess is the lock-in will be substantial but mostly for terribly superficial reasons?

For now, I think people are vastly overestimating memories. The memory functions aren’t nothing but they don’t seem to do that much.

Custom instructions will always be a power user thing. Regular people don’t use custom instructions, they literally never go into the settings on any program. They certainly didn’t ‘do the work’ of customizing them to the particular AI through testing and iterations – and for those who did do that, they’d likely be down for doing it again.

What I think matters more is that the UIs will be different, and the behaviors and correct prompts will be different, and people will be used to what they are used to in those ways.

The flip side is that this will take place in the age of AI, and of AI agents. Imagine a world, not too long from now, where if you shift between Claude, Gemini and ChatGPT, they will ask if you want their agent to go into the browser and take care of everything to make the transition seamless and have it work like you want it to work. That doesn’t seem so unrealistic.

The biggest barrier, I presume, will continue to be inertia, not doing things and not knowing why one would want to switch. Trivial inconveniences.

The Quest for Sane Regulations

Sriram Krishnan, formerly of a16z, will be working with David Sacks in the White House Office of Science and Technology. I’ve had good interactions with him in the past and I wish him the best of luck.

The choice of Sriram seems to have led to some rather wrongheaded (or worse) pushback, and for some reason a debate over H1B visas. As in, there are people who for some reason are against them, rather than the obviously correct position that we need vastly more H1B visas. I have never heard a person I respect not favor giving out far more H1B visas, once they learn what such visas are. Never.

Also joining the administration are Michael Kratsios, Lynne Parker and Bo Hines. Bo Hines is presumably for crypto (and presumably strongly for crypto), given they will be executive director of the new Presidential Council of Advisors for Digital Assets. Lynne Parker will head the Presidential Council of Advisors for Science and Technology, and Kratsios will direct the Office of Science and Technology Policy (OSTP).

Miles Brundage writes Time’s Up for AI Policy, because he believes AI that exceeds human performance in every cognitive domain is almost certain to be built and deployed in the next few years.

If you believe time is as short as Miles thinks it is, then this is very right – you need to try and get the policies in place in 2025, because after that it might be too late to matter, and the decisions made now will likely lock us into a path. Even if we have somewhat more time than that, we need to start building state capacity now.

Actual bet on beliefs spotted in the wild: Miles Brundage versus Gary Marcus, Miles is laying $19k vs. $1k on a set of non-physical benchmarks being surpassed by 2027, accepting Gary’s offered odds. Good for everyone involved. As a gambler, I think Miles laid more odds than was called for here, unless Gary is admitting that Miles does probably win the bet? Miles said ‘almost certain’ but fair odds should meet in the middle between the two sides. But the flip side is that it sends a very strong message.
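The odds translate directly into an implied probability: laying $19k against $1k only breaks even if Miles wins at least 95% of the time. A short sketch; the function names are illustrative:

```python
def break_even_probability(stake: float, payout: float) -> float:
    """Win probability at which risking `stake` to win `payout` has zero expected value."""
    return stake / (stake + payout)


def expected_profit(p_win: float, stake: float, payout: float) -> float:
    """Expected profit from risking `stake` to win `payout` with win probability p_win."""
    return p_win * payout - (1 - p_win) * stake


# Miles lays $19k against Gary's $1k (figures from the text).
STAKE, PAYOUT = 19_000, 1_000
print(break_even_probability(STAKE, PAYOUT))  # 0.95
for belief in (0.90, 0.95, 0.99):
    print(belief, round(expected_profit(belief, STAKE, PAYOUT)))
```

Gary, for his part, profits in expectation whenever Miles's true chance of winning is below 95%.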

We need a better model of what actually impacts Washington’s view of AI and what doesn’t. They end up in some rather insane places, such as Dean Ball’s report here that DC policy types still cite a 2023 paper using a 125 million (!) parameter model as if it were definitive proof that synthetic data always leads to model collapse, and it’s one of the few papers they ever cite. He explains it as people wanting this dynamic to be true, so they latch onto the paper.

Yo Shavit, who does policy at OpenAI, considers the implications of o3 under a ‘we get ASI but everything still looks strangely normal’ kind of world.

It’s a good thread, but I notice – again – that this essentially ignores the implications of AGI and ASI, in that somehow it expects to look around and see a fundamentally normal world in a way that seems weird. In the new potential ‘you get ASI but running it is super expensive’ world of o3, that seems less crazy than it does otherwise, and some of the things discussed would still apply even then.

The assumption of ‘kind of normal’ is always important to note in places like this, and one should note which places that assumption has to hold and which it doesn’t.

Point 5 is the most important one, and still fully holds – that technical alignment is the whole ballgame, in that if you fail at that you fail automatically (but you still have to play and win the ballgame even then!). And that we don’t know how hard this is, but we do know we have various labs (including Yo’s own OpenAI) under competitive pressures and poised to go on essentially YOLO runs to superintelligence while hoping it works out by default.

Whereas what we need is either a race to what he calls ‘secure, trustworthy, reliable AGI that won’t burn us’ or ideally a more robust target than that or ideally not a race at all. And we really need to not do that – no matter how easy or hard alignment turns out to be, we need to maximize our chances of success over that uncertainty.

There are a bunch of assumptions here. Compute is not obviously the only limiting factor on ASI construction, and ASI can be used to forestall others making ASI in ways other than compute access, and also one could attempt to regulate compute. And it has an implicit ‘everything is kind of normal?’ built into it, rather than a true slow takeoff scenario.

Again there’s a kind of normality assumption here, where the ASIs remain under corporate control (and human control), and aren’t treated as taxable individuals but rather as property, the state continues to exist and collect taxes, money continues to function as expected, tax incidence and reactions to new taxes don’t transform industrial organization, and so on.

Which leads us to observation three.

I agree that in the scenario type Yo Shavit is envisioning, even if you solve all the technical alignment questions in the strongest sense, if ‘things stay kind of normal’ and you allow AI sufficient personhood under the law, or allow it in practice even if it isn’t technically legal, then there is essentially zero chance of maintaining human control over the future, and probably this quickly extends to the resources required for human physical survival.

I also don’t see any clear way to prevent it, in practice, no matter the law.

You quickly get into a scenario where a human doing anything, or being in the loop for anything, is a kiss of death, an albatross around one’s neck. You can’t afford it.

The word that baffles me here is ‘gradually.’ Why would one expect this to be gradual? I would expect it to be extremely rapid. And ‘the rule of law’ in this type of context will not do for you what you want it to do.

There are a lot of physical choke points that effectively don’t get used for that. It is not at all obvious to me that physically controlling data centers in practice gives you that much control over what gets done within them, in this future, although it does give you that option.

As he notes later in that post, without collective ability to control compute and deal with or control AI agents – even in an otherwise under-control, human-in-charge scenario – anything like our current society won’t work.

The point of compute governance over training rules is to do it in order to avoid other forms of compute governance over inference. If it turns out the training approach is not viable, and you want to ‘keep things looking normal’ in various ways and the humans to be in control, you’re going to need some form of collective levers over access to large amounts of compute. We are talking price.

Oh, right. That. If we don’t get technical alignment right in this scenario, then none of it matters, we’re all super dead. Even if we do, we still have all the other problems above, which essentially – and this must be stressed – assume a robust and robustly implemented technical alignment solution.

Then we also need a way to turn this technical alignment into an equilibrium and dynamics where the humans are meaningfully directing the AIs in any sense. By default that doesn’t happen, even if we get technical alignment right, and that too has race dynamics. And we also need a way to prevent it being a kiss of death and albatross around your neck to have a human in the loop of any operation. That’s another race dynamic.

The Week in Audio

Anthropic’s co-founders discuss the past, present and future of Anthropic for 50 minutes.

One highlight: when Clark visited the White House in 2023, Harris and Raimondo told him they had their eye on Anthropic: AI was going to be a really big deal, and they were now actually paying attention.

The streams are crossing, Bari Weiss talks to Sam Altman about his feud with Elon.

The details of his claim here are, shall we say, ‘incredibly inflated to the point of being distorted,’ even if you thought that there were no short term dangers until now.

Also Yann LeCun this week, it’s dumber than a cat and poses no dangers, but in the coming years it will…:

And also Yann LeCun this week, saying that we are ‘very far from AGI’ but not centuries, maybe not decades, several years. We are several years away. Very far.

At this point, I’m not mad, I’m not impressed, I’m just amused.

Oh, and I’m sorry, but here’s LeCun being absurd again this week, I couldn’t resist:

From a month ago, Marc Andreessen saying we’re not seeing intelligence improvements and we’re hitting a ceiling of capabilities. Whoops. For future reference, never say this, but in particular no one ever say this in November.

A Tale as Old as Time

A lot of stories people tell about various AI risks, and also various similar stories about humans or corporations, assume a kind of fixed, singular and conscious intentionality, in a way that mostly isn’t a thing. There will by default be a lot of motivations or causes or forces driving a behavior at once, and a lot of them won’t be intentionally chosen or stable.

This is related to the idea many have that deception or betrayal or power-seeking, or any form of shenanigans, is some distinct magisterium or requires something to have gone wrong and for something to have caused it, rather than these being default things that minds tend to do whenever they interact.

And I worry that we are continuing, as many did with the recent talk about shenanigans in general and alignment faking in particular, to get distracted by the question of whether a particular behavior is in the service of something good, or will have good effects in a particular case. What matters is what our observations predict in the future.

Rhetorical Innovation

I can sort of see it, actually?

Miles Brundage tries to convince Eliezer Yudkowsky that if he’d wear different clothes and use different writing styles he’d have a bigger impact (as would Miles). I agree with Eliezer that changing writing styles would be very expensive in time, and echo his question of whether anyone thinks they can, at any reasonable price, turn his semantic outputs into formal papers that Eliezer would endorse.

I know the same goes for me. If I could produce a similar output of formal papers that would of course do far more, but that’s not a thing that I could produce.

On the issue of clothes, yeah, better clothes would likely be better for all three of us. I think Eliezer is right that the impact is not so large and most who claim it is a ‘but for’ are wrong about that, but on the margin it definitely helps. It’s probably worth it for Eliezer (and Miles!) and probably to a lesser extent for me as well but it would be expensive for me to get myself to do that. I admit I probably should anyway.

A good Christmas reminder, not only about AI:

Careful curation can help with this, but it only goes so far.

Aligning a Smarter Than Human Intelligence is Difficult

Gallabytes expresses concern about the game theory tests we discussed last week, in particular the selfishness and potentially worse from Gemini Flash and GPT-4o.

I agree that you don’t have any business releasing a highly capable (e.g. 5+ level) LLM whose graphs don’t look at least roughly as good as Sonnet’s here. If I had Copious Free Time I’d look into the details more here, as I’m curious about a lot of related questions.

I strongly agree with McAleer here. Also, they’re remarkably similar, so it’s barely even a pivot:

People Are Worried About AI Killing Everyone

If you are, please continue to live your life to its fullest anyway.

When you cling to a dim hope:

Do ask the girl out, though.

The Lighter Side

Yes.

When duty calls.

From an official OpenAI stream:

I actually really liked this exchange – given the range of plausible mindsets Sam Altman might have, this was a positive update.

The most Robin Hanson way to react to a new super cool AI robot offering.

Okay, so the future is mostly in the future, and right now it might or might not be a bit overpriced, depending on other details. But it is super cool, and will get cheaper.

Pliny jailbreaks Gemini and things get freaky.

I find it fitting that Pliny has a missed call.

Sorry, Elon, Gemini doesn’t like you.

I mean, I don’t see why they wouldn’t like me. Everyone does. I’m a likeable guy.