(I think this article represents substantive important original thinking, and should be studied by those interested in solving problems about deeply relating to alien minds (e.g. "solving alignment", in most of the relevant meanings of that). I didn't have time to read more than 1/3rd of it, but already learned from and was stimulated by what I did read.)

I kinda disagree with this post in general, I’m gonna try to pin it down but sorry if I mischaracterize anything.

So, there’s an infinite (or might-as-well-be-infinite) amount of object-level things (e.g. math concepts) to learn—OK sure. Then there’s an infinite amount of effective thinking strategies—e.g. if I see thus-and-such kind of object-level pattern, I should consider thus-and-such cognitive strategy—I’m OK with that too. And we can even build a hierarchy of those things—if I’m about to apply thus-and-such Level 1 cognitive strategy in thus-and-such object-level context, then I should first apply thus-and-such Level 2 cognitive strategy, etc. And all of those hierarchical levels can have arbitrarily much complexity and content. OK, sure.

But there’s something else, which is a very finite legible learning algorithm that can automatically find all those things—the object-level stuff and the thinking strategies at all levels. The genome builds such an algorithm into the human brain. And it seems to work! I don’t think there’s any math that is forever beyond humans, or if it is, it would be for humdrum reasons like “not enough neurons to hold that much complexity in your head at once”.

And then I’m guessing your response would be something like: there isn’t just one optimal “legible learning algorithm” as distinct from the stuff that it’s supposed to be learning. And if so, sure … but I think of that as kinda not very important. Here’s something related that I wrote here:

Here's an example: If you've seen a pattern "A then B then C" recur 10 times in a row, you will start unconsciously expecting AB to be followed by C. But "should" you expect AB to be followed by C after seeing ABC only 2 times? Or what if you've seen the pattern ABC recur 72 times in a row, but then saw AB(not C) twice? What "should" a learning algorithm expect in those cases?

You can imagine a continuous family of learning algorithms, that operate on the same underlying principles, but have different "settings" for deciding the answer to these types of questions.

And I emphasize that this is one of many examples. "How long should the algorithm hold onto memories (other things equal)?" "How similar do two situations need to be before you reason about one by analogizing to the other?" "How much learning model capacity is allocated to each incoming signal line from the retina?" Etc. etc.

In all these cases, there is no "right" answer to the hyperparameter settings. It depends on the domain—how regular vs random are the environmental patterns you're learning? How stable are they over time? How serious are the consequences of false positives vs false negatives in different situations?

There may be an "optimal" set of hyperparameters from the perspective of "highest inclusive genetic fitness in such-and-such specific biological niche". But there is a very wide range of hyperparameters which "work", in the sense that the algorithm does in fact learn things. Different hyperparameter settings would navigate the tradeoffs discussed above—one setting is better at remembering details, another is better at generalizing, another avoids overconfidence in novel situations, another minimizes energy consumption, etc. etc.
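To make the "continuous family of learning algorithms" idea concrete, here is a minimal sketch. It assumes a simple pseudo-count rule as the shared underlying principle; the function name `expect_c`, the setting names, and the pseudo-count values are all made up for illustration and are not a claim about how brains set these knobs.

```python
from fractions import Fraction

def expect_c(times_c, times_not_c, pseudo_c=1, pseudo_not_c=1):
    """Probability assigned to 'AB is followed by C' under a pseudo-count rule.

    The pseudo-counts are the hyperparameters: they act like imaginary
    observations the learner starts out with.
    """
    return Fraction(times_c + pseudo_c,
                    times_c + times_not_c + pseudo_c + pseudo_not_c)

# Hypothetical settings: (pseudo_c, pseudo_not_c)
SETTINGS = {"eager": (1, 1), "cautious": (5, 5), "skeptical": (1, 10)}

# The three situations from the text: (times C followed AB, times it did not)
CASES = {
    "ABC seen 2 times": (2, 0),
    "ABC seen 10 times": (10, 0),
    "ABC 72 times, then AB(not C) twice": (72, 2),
}

def table():
    """Expectation of C for every (case, setting) pair."""
    return {
        case: {name: expect_c(*counts, *prior) for name, prior in SETTINGS.items()}
        for case, counts in CASES.items()
    }

if __name__ == "__main__":
    for case, row in table().items():
        print(case, {name: float(p) for name, p in row.items()})
```

With these made-up settings, after only 2 sightings the three learners expect C with probability 3/4, 7/12, and 3/13 respectively. All three "work" in the sense that they learn from the data; none of the answers is the "right" one without reference to a domain.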

Anyway, I think there’s a space of legible learning algorithms (including hyperparameters) that would basically “work” in the sense of creating superintelligence, and I think there’s a legible explanation of why they work. But within this range, I acknowledge that it’s true that some of them will be able to learn different object-level areas of math a bit faster or slower, in a complicated way, for example. I just don’t think I care. I think this is very related to the idea in Bayesian rationality that priors don’t really matter once you make enough observations. I think superintelligence is something that will be able to do autonomous learning and figuring-things-out in a way that existing AIs can’t. Granted, there is no simple theory that predicts the exact speed at which it will figure out any given object-level thing, and no simple theory that says which hyperparameters are truly optimal, but we don’t need such a theory, who cares, it can still figure things out with superhuman speed and competence across the board.

By the same token, nobody ever found the truly optimal hyperparameters for AlphaZero, if those even exist, but AlphaZero was still radically superhuman. If truly-optimal-AlphaZero would have only needed to self-play for 20 million games instead of 40 million to get to the same level, who cares, that would have only saved 12 hours of training or something.

Thank you for the comment!

First, I'd like to clear up a few things:

- I do think that making an "approximate synthetic 2025 human newborn/fetus (mind)" that can be run on a server having 100x usual human thinking speed is almost certainly a finite problem, and one might get there by figuring out what structures are there in a fetus/newborn precisely enough, and it plausibly makes sense to focus particularly on structures which are more relevant to learning. If one were to pull this off, one might then further be able to have these synthetic fetuses grow up quickly into fairly normal humans and have them do stuff which ends the present period of (imo) acute x-risk. (And the development of thought continues after that, I think; I'll say more that relates to this later.) While I do say in my post that making mind uploads is a finite problem, it might have been good to state also (or more precisely) that this type of thing is finite.

- I certainly think that one can make a finite system such that one can reasonably think that it will start a process that does very much (like, eats the Sun, etc.). Indeed, I think it's likely that by default humanity would unfortunately start a process that gets the Sun eaten this century. I think it is plausible there will be some people who will be reasonable in predicting pretty strongly that that particular process will get the Sun eaten. I think various claims about humans understanding some stuff about that process are less clear, though there is surely some hypothetical entity that could pretty deeply understand the development of that process up to the point where it eats the Sun.

- Some things in my notes were written mostly with an [agent foundations]y interlocutor in mind, and I'm realizing now that some of these things could also be read as if I had some different interlocutor in mind, and that some points probably seem more incongruous if read this way.

I'll now proceed to potential disagreements.

But there’s something else, which is a very finite legible learning algorithm that can automatically find all those things—the object-level stuff and the thinking strategies at all levels. The genome builds such an algorithm into the human brain. And it seems to work! I don’t think there’s any math that is forever beyond humans, or if it is, it would be for humdrum reasons like “not enough neurons to hold that much complexity in your head at once”.

Some ways I disagree or think this is/involves a bad framing:

- If we focus on math and try to ask some concrete question, instead of asking stuff like "can the system eventually prove anything?", I think it is much more appropriate to ask stuff like "how quickly can the system prove stuff?". Like, brute-force searching all strings for being a proof of a particular statement can eventually prove any provable statement, but we obviously wouldn't want to say that this brute-force searcher is "generally intelligent". Very relatedly, I think that "is there any math which is technically beyond a human?" is not a good question to be asking here.

- The blind idiot god that pretty much cannot even invent wheels (ie evolution) obviously did not put anything approaching the Ultimate Formula for getting far in math (or for doing anything complicated, really) inside humans (even after conditioning on specification complexity and computational resources or whatever), and especially not in an "unfolded form"[1], right? Any rich endeavor is done by present humans in a profoundly stupid way, right?[2] Humanity sorta manages to do math, but this seems like a very weak reason to think that [humans have]/[humanity has] anything remotely approaching an "ultimate learning algorithm" for doing math?[3]

- The structures in a newborn [that make it so that in the right context the newborn grows into a person who (say) pushes the frontier of human understanding forward] and [which participate in them pushing the frontier of human understanding forward] are probably already really complicated, right? Like, there's already a great variety of "ideas" involved in the "learning-relevant structures" of a fetus?

- I think that the framing that there is a given fixed "learning algorithm" in a newborn, such that if one knew it, one would be most of the way there to understanding human learning, is unfortunate. (Well, this comes with the caveat that it depends on what one wants from this "understanding of human learning" — e.g., it is probably fine to think this if one only wants to use this understanding to make a synthetic newborn.) In brief, I'd say "gaining thinking-components is a rich thing, much like gaining technologies more generally; our ability to gain thinking-components is developing, just like our ability to gain technologies", and then I'd point one to Note 3 and Note 4 for more on this.

- I want to say more in response to this view/framing that some sort of "human learning algorithm" is already there in a newborn, even in the context of just the learning that a single individual human is doing. Like, a human is also importantly gaining components/methods/ideas for learning, right? For example, language is centrally involved in human learning, and language isn't there in a fetus (though there are things in a newborn which create a capacity for gaining language, yes). I feel like you might want to say "who cares — there is a preserved learning algorithm in the brain of a fetus/newborn anyway". And while I agree that there are very important things in the brain which are centrally involved in learning and which are fairly unchanged during development, I don't understand what [the special significance of these over various things gained later] is which makes it reasonable to say that a human has a given fixed "learning algorithm". An analogy: Someone could try to explain structure-gaining by telling me "take a random init of a universe with such and such laws (and look along a random branch of the wavefunction[4]) — in there, you will probably eventually see a lot of structures being created" — let's assume that this is set up such that one in fact probably gets atoms and galaxies and solar systems and life and primitive entities doing math and reflecting (imo etc.). But this is obviously a highly unsatisfying "explanation" of structure-gaining! I wanted to know why/how protons and atoms and molecules form and why/how galaxies and stars and black holes form, etc.. I wanted to know about evolution, and about how primitive entities inventing/discovering mathematical concepts could work, and imo many other things! Really, this didn't do very much beyond just telling me "just consider all possible universes — somewhere in there, structures occur"! 
Like, yes, I've been given a context in which structure-gaining happens, but this does very little to help me make sense of structure-gaining. I'd guess that knowing the "primordial human learning algorithm" which is there in a fetus is significantly more like knowing the laws of physics than your comment makes it out to be. If it's not like that, I would like to understand why it's not like that — I'd like to understand why a fetus's learning-structures really deserve to be considered the "human learning algorithm", as opposed to being seen as just providing a context in which wild structure-gaining can occur and playing some important role in this wild structure-gaining (for now).

- To conclude: It currently seems unlikely to me that knowing a newborn's "primordial learning algorithm" would get me close to understanding human learning; in particular, it seems unlikely that it would get me close to understanding how humanity gains scientific/mathematical/philosophical understanding. Also, it seems really unlikely that knowing this "primordial learning algorithm" would get me close to understanding learning/technology-making/mathematical-understanding-gaining in general.[5]

like, such that it is already there in a fetus/newborn and doesn't have to be gained/built ↩︎

I think present humans have much more for doing math than what is "directly given" by evolution to present fetuses, but still. ↩︎

One attempt to counter this: "but humans could reprogram into basically anything, including whatever better system for doing math there is!". But conditional on this working out, the appeal of the claim that fetuses already have a load-bearing fixed "learning algorithm" is also defeated, so this counterargument wouldn't actually work in the present context even if this claim were true. ↩︎

let's assume this makes sense ↩︎

That said, I could see an argument for a good chunk of the learning that most current humans are doing being pretty close to gaining thinking-structures which other people already have, from other people that already have them, and there is definitely something finite in this vicinity — like, some kind of pure copying should be finite (though the things humans are doing in this vicinity are of course more complicated than pure copying, there are complications with making sense of "pure copying" in this context, and also humans suck immensely (compared to what's possible) even at "pure copying"). ↩︎

But there’s something else, which is a very finite legible learning algorithm that can automatically find all those things

Is there? I see a lot of talk about brain algorithms here, but I have never seen one stated...made "legible".

—the object-level stuff and the thinking strategies at all levels. The genome builds such an algorithm into the human brain

Does it? Rationalists like to applaud such claims, but I have never seen the proof.

And it seems to work!

Does it? Even if we could answer every question we have ever posed, we could still have fundamental limitations. If you did have a fundamental cognitive deficit that prevents you from understanding some specific X, how would you know? You need to be able to conceive X before conceiving that you don't understand X. It would be like the visual blind spot...which you cannot see!

And then I’m guessing your response would be something like: there isn’t just one optimal “legible learning algorithm” as distinct from the stuff that it’s supposed to be learning. And if so, sure

So why bring it up?

there isn’t just one optimal “legible learning algorithm”

Optimality -- doing things efficiently -- isn't the issue; the issue is not being able to do certain things at all.

I think this is very related to the idea in Bayesian rationality that priors don’t really matter once you make enough observations.

The idea is wrong. Hypotheses matter, because if you haven't formulated the right hypothesis, no amount of data will confirm it. Only worrying about weighting of priors is playing in easy mode, because it assumes the hypothesis space is covered. Fundamental cognitive limitations could manifest as the inability to form certain hypotheses. How many hypotheses can a chimp form? You could show a chimp all the evidence in the world, and it's not going to hypothesize general relativity.
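A toy illustration of the point about uncovered hypothesis spaces, as a sketch under stated assumptions: the learner below does ordinary Bayesian updating over a finite set of coin biases, and the data come from a bias (0.75) that is deliberately missing from that set. The names `posterior`, `HYPOTHESES`, and `DATA` are hypothetical, chosen only for this example.

```python
def posterior(hypotheses, prior, data):
    """Bayes' rule over a finite set of coin-bias hypotheses.

    hypotheses: candidate values of P(heads), each strictly between 0 and 1
    prior:      one prior weight per hypothesis
    data:       sequence of 1 (heads) / 0 (tails)
    """
    weights = list(prior)
    for x in data:
        weights = [w * (h if x else 1 - h) for w, h in zip(weights, hypotheses)]
        total = sum(weights)
        weights = [w / total for w in weights]  # renormalize to avoid underflow
    return weights

# The learner can only entertain these two biases (an assumption of the toy).
HYPOTHESES = [0.2, 0.5]

# Deterministic stand-in for a coin with bias 0.75: three heads, one tail, repeated.
DATA = [1, 1, 1, 0] * 250

if __name__ == "__main__":
    for prior in ([0.9, 0.1], [0.1, 0.9]):
        print(prior, "->", posterior(HYPOTHESES, prior, DATA))
```

Both priors end up with essentially all the weight on 0.5, so in that sense the priors wash out. But the posterior converges on the least-bad available hypothesis, and no amount of further data will ever put weight on 0.75, because it was never in the space.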

Rationalists always want to reply that Solomonoff inductors avoid the problem on the basis that SIs consider "every" "hypothesis"... but they don't, several times over. It's not just that they are uncomputable, it's also that it's not known that every hypothesis can be expressed as a programme. The ability to range over a complete space does not equate to the ability to range over Everything.

Here’s an example: If you’ve seen a pattern “A then B then C” recur 10 times in a row, you will start unconsciously expecting AB to be followed by C. But “should” you expect AB to be followed by C after seeing ABC only 2 times? Or what if you’ve seen the pattern ABC recur 72 times in a row, but then saw AB(not C) twice? What “should” a learning algorithm expect in those cases? You can imagine a continuous family of learning algorithms, that operate on the same underlying principles.

A set of underlying principles is a limitation. SIs are limited to computability and the prediction of a sequence of observations. You're writing as if something like prediction of the next observation is the only problem of interest, but we don't know that Everything fits that pattern. The fact that Bayes and Solomonoff work that way is of no help, as shown above.

But within this range, I acknowledge that it’s true that some of them will be able to learn different object-level areas of math a bit faster or slower, in a complicated way, for example.

But you haven't shown that efficiency differences are the only problem. The nonexistence of fundamental no-go areas certainly doesn't follow from the existence of efficiency differences.

, it can still figure things out with superhuman speed and competence across the board

The definition of superintelligence means that "across the board" is the range of things humans do, so if there is something humans can't do at all, an ASI is not definitionally required to be able to do it.

By the same token, nobody ever found the truly optimal hyperparameters for AlphaZero, if those even exist, but AlphaZero was still radically superhuman

The existence of superhuman performance in some areas doesn't prove adequate performance in all areas, so it is basically irrelevant to the original question, the existence of fundamental limitations in humans.

OP discusses maths from a realist perspective. If you approach it as a human construction, the problem about maths is considerably weakened...but the wider problem remains, because we don't know that maths is Everything.

this is conflating the reason for why one knows/believes P versus the reason for why P,

Of course, that only makes sense assuming realism.

You are understating your own case, because there is a difference between mere infinity and All Kinds of Everything. An infinite collection of one kind of thing can be relatively tractable.

An idea-image that was bubbling in my mind while I was reading Note 1.

One naive definition of an infinite endeavor would be something like "a combinatorially exploded space of possible constructions/[phenomena to investigate]" where the explosion can stem from some finite set of axioms and inference rules or whatever.

I don't think this is endeavor-infinity in the sense you're talking about here. There's probably a reason you called (were tempted to call?) it an "infinite endeavor", not an "infinite domain". A domain is "just out there". An endeavor is both "out there" and in the agent doing/[participating in] the endeavor. That agent (thinker?) has a taste and applying that taste to that infinite but [possibly in a certain sense finitely specifiable/constrainable] thing is what makes it an endeavor and grants it its infinite character.

Taste creates little pockets of interestingness in combinatorially exploded stuff and those pockets have smaller pockets still; or perhaps a better metaphor would be an infinite landscape, certain parts of which the thinker's taste lights up with salience and once you "conquer" one salient location, you realize salience of other locations because you learned something in that location. Or perhaps you updated your taste, to the extent that these two are distinguishable. Or perhaps the landscape itself updated because you realized that you "just can" not assume Euclid's fifth postulate or tertium non datur or ex contradictione quodlibet and consequently discover a new way to do math: non-Euclidean geometries or new kinds of logic.

If I were to summarize my ad-hoc-y image of endeavor-infinity at the moment, it would be something like:

An infinite endeavor emerges from a thinker imposing/applying some (very likely proleptic) taste/criterion to a domain and then exploring (and continuing to construct?) that domain according to that taste/criterion's guidance; where all three of {thinker, criterion, domain} (have the potential to) grow in the process.

(which contains a lot of ad-hoc-y load-bearing concepts to be elucidated for sure)

I only read the first note and loved it. Will surely read the rest.

Is the infinitude of "how should one think?" the "main reason" why philosophy is infinite? Is it the main reason for most particular infinite philosophical problems being infinite? I would guess that it is not — that there are also other important reasons; in particular, if a philosophical problem is infinite, I would expect there to at least also be some reason for its infinitude which is "more internal to it". In fact, I suspect that clarifying the [reasons why]/[ways in which] endeavors can end up infinite is itself an infinite endeavor :).

Attribution is probably very (plausibly infinitely) tricky here, similar to how it's tricky to state why certain facts about mathematical structures are true while others are false. Sure, one can point to a proof of the theorem and say "That's why P is true." but this is conflating the reason for why one knows/believes P versus the reason for why P, especially when there are multiple, very different ways to prove the same proposition.[1] Or at least that's how it feels to me.

I don't know how often it is the case. It would be fun to see something like a distribution of proof diversity scores for some quasi-representative set of mathematical propositions where by "proof diversity" I mean something like information diameter (Li & Vitányi, Def 8.5.1).

I want to preclude scenarios which look like doing a bunch of philosophy

A (perhaps related) pathology: a mind that, whenever it sees the symbol "0", it must prove a novel theorem in ZFC in order to complete the perception of the symbol. For such a mind, many (but not all!) repetitive and boring tasks will induce an infinite endeavor (because the mind contains such an endeavor).

I think it is a mistake to think of these as finite problems[12] — they are infinite.

It is often possible to build multiple instances of a "big/small" dichotomy, such that the theory has similar results. (e.g. [small="polynomial time"] vs [small="decidable"]).

When I imagine using your concept to think about AI, I want to say that cloning a strawberry/proving the Riemann hypothesis is a finite task that nevertheless likely implies an endeavor of a different character than [insert something you could do manually in a few months].

I wonder if the [small=finite] version of your concept is the one we should be using.

I'd maybe rather say that an infinite endeavor is one for which after any (finite) amount of progress, the amount of progress that could still be made is greater than the amount of progress that has been made, or maybe more precisely that at any point, the quantity of “genuine novelty/challenge” which remains to be met is greater than the quantity met already

[Edit: this line of reasoning is addressed later on; this comment is an artefact of not having first read the whole post, specifically see "With this picture in mind, one can see that whether an endeavor is infinite depends on one's measure "]

Imagine it makes sense to quantify the "genuine novelty" of puzzles using rational numbers, and imagine an infinite sequence of puzzles with "genuine novelty" of the i-th puzzle given {0..i-1} being equal to 1. Hence the sum of their "genuine novelty" diverges. Now, create a game which assigns 1/2^{i+1} points to solving the i-th puzzle. My guess as to the intended behaviour of your concept in this "tricky" example:

- the endeavour of maximizing the score on this game is infinite.

- the endeavour of being pretty sure to get a decent score at this game is not infinite.

- the game itself is neither necessarily finite nor infinite.

- for this game to support the weight of math/physics/cooking mushroom pies/etc..., it is not sufficient for me to become "very good at the game".

- The winner of this game is not determined by the finitude of the endeavors of the players [this is trivial, but note less trivially that for any finite number N, the prefix sequence maps to a finite endeavor, and the postfix sequence maps to an infinite endeavor with value in the game (if we let the endeavors "complete") of 1-1/2^{N}, 1/2^{N} respectively].
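The prefix/postfix values in the last bullet can be checked with exact arithmetic. This is a small sketch that assumes puzzles are indexed from 0 (as the "{0..i-1}" notation suggests); the helper names `points` and `prefix_score` are mine.

```python
from fractions import Fraction

def points(i):
    """Points for solving the i-th puzzle (0-indexed): 1/2^(i+1)."""
    return Fraction(1, 2 ** (i + 1))

def prefix_score(n):
    """Total points from solving puzzles 0..n-1 (a finite endeavor)."""
    return sum((points(i) for i in range(n)), Fraction(0))

if __name__ == "__main__":
    for n in (1, 3, 10):
        prefix = prefix_score(n)
        # Closed form for the finite prefix: 1 - 1/2^n.
        assert prefix == 1 - Fraction(1, 2 ** n)
        # The whole series sums to 1, so the infinite tail is worth 1/2^n.
        print(n, prefix, 1 - prefix)
```

So the first 3 puzzles are already worth 7/8 of the maximum score, while the infinite remainder, which carries all but 3 units of the "genuine novelty", is worth only 1/8.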

A different way to make the "game" a test of the concept boundary would be: you get 1 point if you give the solution to at least one of the puzzles in the infinite sequence, else you get 0 points. This game lets you trivially construct infinite endeavors, despite yielding (for every infinite endeavor X) no benefit over a finite prefix (of X). Understanding all the different ways you could have won the game is an infinite endeavor.

There's a lot of good thought in here and I don't think I was able to understand all of it.

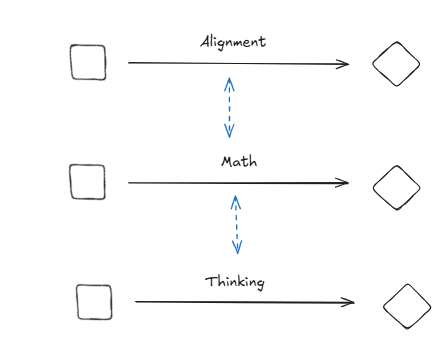

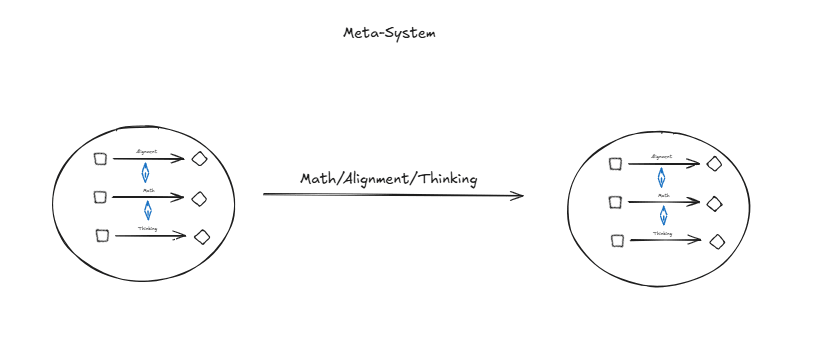

I will focus in on a specific idea that I would love to understand some of your thoughts on, looking at meta categories. You say something like: the problem in itself will remain even if you go up a meta level. My question is how certain you are of this being true. So from a category theory lens your current base-claim in the beginning looks something like:

And so this is more than this, it is also about a more general meta-level thing where even if you were to try to improve this thing in itself you would get something like a mapping that involves itself? (This is a question; yes or no?)

And even more generally we could then try to take the next level of this being optimised so the meta meta level:

We can go above this and say that this will happen an arbitrary number of times. I have a hypothesis, which is that if you go up to the n-category you will start to see generalized structural properties of all of these systems that you can then use in order to say something about what individual alignment looks like. It isn't necessarily that thinking in itself won't be adapted to the specific problem at hand; it is rather that thinking might have structural similarities at different meta levels and that this can be used for alignment? Like there are design patterns to be found between the levels of thinking and abstraction rather than through more of them? It might just fall apart for the same reason but it seems to me like it could be an interesting area of exploration.

I don't know if this makes sense but I did enjoy this post so thank you for writing it!

Thank you for your comment!

What you're saying seems more galaxy-brained than what I was saying in my notes, and I'm probably not understanding it well. Maybe I'll try to just briefly (re)state some of my claims that seem most relevant to what you're saying here (with not much justification for my claims provided in my present comment, but there's some in the post), and then if it looks to you like I'm missing your point, feel very free to tell me that and I can then put some additional effort into understanding you.

- So, first, math is this richly infinite thing that will never be mostly done.

- If one is a certain kind of guy doing alignment, one might hope that one could understand how e.g. mathematical thinking works (or could work), and then make like an explicit math AI one can understand (one would probably really want this for science or for doing stuff in general[1], but a fortiori one would need to be able to do this for math).[2]

- But oops, this is very cursed, because thinking is an infinitely rich thing, like math!

- I think a core idea here is that thinking is a technological thing. Like, one aim of notes 1–6 (and especially 3 and 4) is to "reprogram" the reader into thinking this way about thinking. That is, the point is to reprogram the reader away from sth like "Oh, how does thinking, the definite thing, work? Yea, this is an interesting puzzle that we haven't quite cracked yet. You probably have to, like, combine logical deduction with some probability stuff or something, and then like also the right decision theory (which still requires some work but we're getting there), and then maybe a few other components that we're missing, but bro we will totally get there with a few ideas about how to add search heuristics, or once we've figured out a few more details about how abstraction works, or something."

- Like, a core intuition is to think of thinking like one would think of, like, the totality of humanity's activities, or about human technology. There's a great deal going on! It's a developing sort of thing! It's the sort of thing where you need/want to have genuinely new inventions! There is a rich variety of useful thinking-structures, just like there is a rich variety of useful technological devices/components, just like there is a rich variety of mathematical things!

- Given this, thinking starts to look a lot like math — in particular, the endeavor to understand thinking will probably always be mostly unfinished. It's the sort of thing that calls for an infinite library of textbooks to be written.

- In alignment, we're faced with an infinitely rich domain — of ways to think, or technologies/components/ideas for thinking, or something. This infinitely rich domain again calls for textbooks to keep being written as one proceeds.

- Also, the thing/thinker/thought writing these textbooks will itself need to be rich and developing as well, just like the math AI will need to be rich and developing.

- Generally, you can go meta more times, but on each step, you'll just be asking "how do I think about this infinitely rich domain?", answering which will again be an infinite endeavor.

- You could also try to make sense of climbing to higher infinite ordinal levels, I guess?

(* Also, there's something further to be said about how [[doing math] and [thinking about how one should do math]] are not that separate.)

I'm at like inside-view p=0.93 that the above presents the right vibe to have about thinking (like, maybe genuinely about its potential development forever, but if it's like technically only the right vibe wrt the next years of thinking (at a 2024 rate) or something, then I'm still going to count that as thinking having this infinitary vibe for our purposes).[3]

However, the question about whether one can in principle make a math AI that is in some sense explicit/understandable anyway (that in fact proves impressive theorems with a non-galactic amount of compute) is less clear. Making progress on this question might require us to clarify what we want to mean by "explicit/understandable". We could get criteria on this notion from thinking through what we want from it in the context of making an explicit/understandable AI that makes mind uploads (and "does nothing else"). I say some more stuff about this question in 4.4.

if one is an imo complete lunatic :), one is hopeful about getting this so that one can make an AI sovereign with "the right utility function" that "makes there be a good future spacetime block"; if one is an imo less complete lunatic :), one is hopeful about getting this so that one can make mind uploads and have the mind uploads take over the world or something ↩︎

to clarify: I actually tend to like researchers with this property much more than I like basically any other "researchers doing AI alignment" (even though researchers with this property are imo engaged in a contemporary form of alchemy), and I can feel the pull of this kind of direction pretty strongly myself (also, even if the direction is confused, it still seems like an excellent thing to work on to understand stuff better). I'm criticizing researchers with this property not because I consider them particularly confused/wrong compared to others, but in part because I instead consider them sufficiently reasonable/right to be worth engaging with (and because I wanted to think through these questions for myself)! ↩︎

I'm saying this because you ask me about my certainty in something vaguely like this — but I'm aware I might be answering the wrong question here. Feel free to try to clarify the question if so. ↩︎

With this picture in mind, one can see that whether an endeavor is infinite depends on one's measure — and e.g. if all you're interested in in mathematics is finding a proof of some particular single theorem, then maybe "math" seems finite to you.

Informally and intuitively, securing the future (in a conflict) naturally induces a measure that makes math seem finite, but enjoying the future (after you have somewhat secured it) naturally induces a measure that makes math seem infinite.

- If E is an infinite endeavor, then "how should one do E?" is also infinite.[18] For example: math is infinite, so "how should one do math?" is infinite; ethics is infinite, so "how should one do ethics?" is infinite.[19]

- If E is an infinite endeavor and there is a "faithful reduction" of E to another endeavor F, then F is also infinite. (In particular, if an infinite endeavor E is "faithfully" a subset of another endeavor F, then F is also infinite.)[20] For example, math being infinite implies that stuff in general is infinite; "how should one do math?" being infinite implies that "how should one think?" is infinite.

- If an endeavor constitutes a decently big part of an infinite endeavor, then it is infinite.[21][22] For example, to the extent that language is and will remain highly load-bearing in thinking, [figuring out how thinking should work] being infinite implies that [figuring out how language should work] is also infinite.

Important and useful, but also keep in mind:

a'. If "how should one do E?" is a finite endeavor then E is finite.

b'. If F is finite, then any E that reduces to F must also be finite.

c'. If an endeavor contains not too much more than a finite endeavor, then it is finite.

perhaps "infinitude clusters"? perhaps "infinitudes don't exist alone?" perhaps "infinitudes are not isolated?" perhaps "non-solitary infinitudes?"

Acknowledgments. I have benefited from and made use of the following people's unpublished and/or published ideas on these topics: especially Sam Eisenstat; second-most-importantly Tsvi Benson-Tilsen; also: Clem von Stengel, Jake Mendel, Kirke Joamets, Jessica Taylor, Dmitry Vaintrob, Simon Skade, Rio Popper, Lucius Bushnaq, Mariven, Hoagy Cunningham, Hugo Eberhard, Peli Grietzer, Rudolf Laine, Samuel Buteau, Jeremy Gillen, Kaur Aare Saar, Nate Soares, Eliezer Yudkowsky, Hasok Chang, Ian Hacking, Ludwig Wittgenstein, Martin Heidegger and Hubert Dreyfus, Georg Wilhelm Friedrich Hegel and Gregory B. Sadler, various other canonical philosophers, and surely various others I'm currently forgetting.[9]

1 thinking can only be infinitesimally understood[10]

2 infinitude spreads

3 math, thinking, and technology are equi-infinite

4 general intelligence is not that definite

5 confusion isn't going away

6 thinking (ever better) will continue

7 alignment is infinite

8 making an AI which is broadly smarter than humanity would be most significant

In particular, I might improve/rewrite/expand and republish some of the present notes in the future. ↩︎

though I expect a bunch of them to eventually come to be of type nonsense ↩︎

You know how when people in the room are saying X and you think sorta-X-but-sorta-not-X, then you might find yourself arguing for not-X in this room (but if you were trying to be helpful in a room of not-X-ers, you'd find yourself arguing for X in that room), and it's easy to end up exaggerating your view somewhat in the direction of not-X in this situation? These notes have an early archeological layer in which I was doing more of that, but I decided later that this was annoying/bad, so this early layer has now largely been covered up in the present palimpsest. The title (hypo)theses are a main exception — to keep them crisp, I've kept many of them hyperbolic (but stated my actual position in the body of the note). ↩︎

especially given that to a significant extent, the claims stand together or fall together ↩︎

if in a few years I don’t think I was wrong/confused about major things here, I should seriously consider considering myself to have died :) ↩︎

Still, it could happen that I don't respond to a response; in particular, it could happen that [I won't find your attempt to reason me out of some position compelling, but I also don't provide counterarguments], and it could happen that I learn something from your comment but fail to thank you. So, you know, sorry/thanks ahead of time :). ↩︎

I use scare quotes throughout these notes to indicate terms/concepts which I consider particularly bad/confused/unreliable/suspect/uncomfortable/unfortunate/in-need-of-a-rework; I do not mean to further indicate sneering. (I also use double quotation marks in the more common way though — i.e., just to denote phrases.) ↩︎

or multiple artifacts distinct and separate from us which outgrow us ↩︎

I might go through the notes at some point later and add more specific acknowledgments — there are currently a bunch of things which are either fairly directly from someone or in response to someone or developed together with someone. Many things in these notes are really responses to past me(s), though I don't take them to exactly have taken a contrary (so wrong :)) position most of the time, but more [to have lacked a clear view on] or [to have had only bad ways to think about] matters. ↩︎

in isolation, this note would have been titled "the notion of an infinite endeavor", but that wouldn't have accorded with the schema of each note being a (hypo)thesis ↩︎

we could alternatively say: to which there are finite satisfactory answers ↩︎

some examples of finite problems: finding a proof or disproof of a typical conjecture in math, coming up with and implementing a data structure where certain operations have some particular attainable complexities, building a particular kind of house, coming up with special relativity (or, more generally, coming up with anything which people have already come up with); identifying the fundamental laws of physics is probably finite (but I also have some reasonable probability on it being infinite or not making sense or maybe splitting into a multitude of finite and infinite problems, e.g. involving some weirdness around finding yourself inside physics or something or around being able to choose one's effective laws — idk) ↩︎

some other infinite problems: physics, writing novels, hip-hop, cooking mushroom pies, completing the system of german idealism, being funny ↩︎

If you don't feel like this about math now, I'd maybe ask you to also consider math in 1900, or, if there's some other field of inquiry you're quite familiar with, to consider that field. (To be clear: this isn't to say that after you do this, I think you should definitely be agreeing with me here — I'm open to us still having a disagreement after, and I'm open to being wrong here, or to needing to clarify the measure :).) I'll note preemptively that many sciences are sort of weird here; let me provide some brief thoughts on whether physics is infinite as an example. One central project in current physics is to figure out what the fundamental laws of physics are; this project could well be finite. However, I think physics is probably infinite anyway. A major reason is that physics is engaged in inventing/manufacturing new things/phenomena/situations/arenas and in studying and making use of these (and other) more "invented/created" things; some examples: electric circuits, (nuclear (fusion)) power plants, (rocket) engines, colliders, lenses, lasers, various materials, (quantum) computers, simulations. (Quite generally, the things we're interested in are going to be "less naturally occurring (in the physical universe)" over time.) Also, even beyond these activities, [a physicist's ways of thinking]/[the growing body of ideas/methods/understanding of physics] would probably continue to be significant all over the place (for example, in math). ↩︎

I would probably also not want to consider this a remotely satisfactory algorithm, but this is a much smaller objection. ↩︎

as opposed to being asked in some sense such that a specification of the fundamental laws of physics would be an adequate answer ↩︎

https://youtu.be/_W18Vai8M2w?t=163 ↩︎

The weaker claim that I could make here is that this is only true typically — i.e., that if P is infinite, then "how should one do P?" is usually also infinite. I think the weaker claim would be fine to support the rest of the discussion, but I've decided to go with the stronger claim for now as it still seems plausible. To adapt an ancient biology olympiad adage: it might be that all universally quantified statements are false, but the ones that are nearly true are worth their weight in gold. Anyway, I admit that the claim being true ends up depending on how one measures progress on a problem (as discussed briefly also in the last item), which I haven't properly specified. For these notes, I would like to keep getting away with saying we're measuring progress in some intuitive way which is like the way mathematicians measure progress when saying math is infinite. ↩︎

I think the metaethical problem of providing sth like axioms for ethics is also infinite, despite the fact that it could be reasonable to consider the analogous problem for math finite given that we can develop (almost) all our math inside [first-order logic]+ZFC. One relevant difference between this metamathematical problem and this metaethical problem is that any system which supports sufficiently rich structure can be a fine solution to the metamathematical problem because we can then probably make ≈all our mathematical objects sit inside that system, whereas it is far from being the case that anything goes to this extent for the metaethical problem — it being fine to use the hypothetical ethical system to guide action feels like a much stricter requirement on it, and in fact probably entangles the choice with ethics sufficiently to make this problem infinite, also. Additionally, it being imo plausibly infinite to make some decision on what the character of value is also pushes this metaethical problem toward infinitude. What's more, such a formal system would not only need to "contain" our values, but also our understanding (I will talk more about this in a later note), but our understanding is probably not something on which any fixing decision should ever be made because we should remain open to thinking in new ways, so any hypothetical such system would be going out of date after it is created (at least in its "understanding-component", but I also doubt a principled cleavage can be made (I'll discuss various reasons in upcoming notes)). Also, our understanding and values at any time t are also probably much too big for us to see properly at that time t, as well as not in a format to fit in any canonically-shaped such system. That said, there is a "small problem of providing an ethical system" which just asks for any kind of "system" which is sorta fine to give control to according to one's values (relative to some default), which is solvable because e.g. 
humanity-tomorrow will be such a system for humanity. ↩︎

By E reducing to F, I roughly mean the usual thing from computer science: that there is a cheap/easy way to get a solution to E from a solution to F; this implies that if you could solve F, you could also solve E. If we conceive of each endeavor as consisting of collecting/solving some kinds of pieces, then we could also require a piece-wise map here: that for each piece of E, one can cheaply specify a piece of F such that having solved/collected that piece of F would make it cheap for one to solve/collect that piece of E as well. I'm adding the further condition that the reduction be "faithful" to rule out cases where a full solution to F would give you a solution to E, but one can nevertheless get a positive fraction of the way in F without doing anything remotely as challenging as E — for example, take F to be the disjoint union of playing a game of tic-tac-toe and math, with equal total importance assigned to each, and take E to be math. I'm not sure what I should mean by a reduction being "faithful" (a central criterion on its meaning is just to make the statement about transferring infinitude true), but here are a few alternatives: (1) If we can think of E and F in terms of collecting pieces with importance-measures in ℝ_{≥0}, then it would be sufficient to require that the reduction map doesn't distort the measures outrageously. (2) We could say that any [≥1/3]-solution of F would cheaply yield a [≥c]-solution (with c>0) of E, or that any way to remotely-well-solve F would cheaply give rise to a way to remotely-well-solve E, but this is plausibly too strong a requirement. (3) We could say that if there were a [≥1/3]-solution of F, there would be another solution of F which isn't "infinitely many times more challenging (to find)" which would cheaply yield a [≥c]-solution (with c>0) of E. In other words, we'd say that there is a reasonably economical way to go about [≥1/3]-solving F which would involve [≥c]-solving E as well.
Actually, for most infinitude-transfers one might want to handle with this rule, I think it might also be fine to do without a "precise" statement, just appealing to how F is intuitively at least as challenging as E. ↩︎

Any non-infinitesimal fraction should definitely count as a "decently big part"; the reason I didn't just say "positive fraction" to begin with is that I'd maybe like this principle to also helpfully explain why some sufficiently big infinitesimal fractions of infinite problems are infinite. ↩︎

a footnote analogous to the first footnote about the first item on this sublist ↩︎

Is the infinitude of "how should one think?" the "main reason" why philosophy is infinite? Is it the main reason for most particular infinite philosophical problems being infinite? I would guess that it is not — that there are also other important reasons; in particular, if a philosophical problem is infinite, I would expect there to at least also be some reason for its infinitude which is "more internal to it". In fact, I suspect that clarifying the [reasons why]/[ways in which] endeavors can end up infinite is itself an infinite endeavor :). ↩︎

In addition to stuff done by people canonically considered philosophers (who have also made a great deal of progress), I’d certainly include as central examples of philosophical progress many of the initial intellectual steps which led to the creation of the following fields: probability and statistics, thermodynamics and statistical mechanics, formal mathematical logic, computability and computer science, game theory, cybernetics, information theory, computational complexity theory, AI, machine learning, and probably many others; often, this involved setting up an arena in which one could find clear/mathematical counterparts to some family of previously vague questions. (I’d also consider many later intellectual steps in these fields to be philosophical progress.) ↩︎

Doing some amount of the inside thing is hard to avoid because we can't access ourselves except from the inside, and even if we had some other access channel, we'd struggle to operate well on an aspect of ourselves except by playing around with it on the inside a bunch — think also about eg how to help someone out of a confusion (about a theorem, say), it can help to try to think in that confused way yourself, and eg how playing a game (or at least seeing it played) is centrally good for improving it. Some amount of the outside thing is also usually unavoidable — we are reflective by default and that's good — think eg about how it is actually good/necessary to also look at a game from the outside to improve it, not to just keep playing it (though a human will of course be doing a great deal of looking at the game even when just playing it), and about why it's good that we are able to talk about propositions and not solely about more thingy things (or, well, about why it's good we can turn propositions into ordinary thingy things). ↩︎

Really, I’m somewhat unhappy with the language I have here — “how should thinking work?” sounds too much like we’re only taking the external position. I would like to have something which makes it clearer that what we have here is like a game at once played and improved. ↩︎

The object/meta distinction is sort of weird to maintain here; its weirdness has to do with us being reflective creatures, always thinking together about a domain and about us [thinking about]/[doing stuff in] the domain. When you are engaged in any challenging activity, you're not solely "making very object-level moves", but also thinking about the object-level moves you're making; now, of course, the thinking about object-level moves you're making can also itself naturally be seen as consisting of moves in that challenging activity, blurring the line between object-level-moves and meta-moves. And I'm just saying this is true in particular for challenging thinking-activities; in such activities, the object-moves are already more like thought-moves, and the meta-moves are/constitute/involve thinking about your thinking (though you needn't be very explicitly aware that this is what you're doing, and you often won't be). A mathematician doesn't just "print statements" without looking at the "printing process", but is essentially always simultaneously seeking to improve the "printing process". And this isn't at all unique to mathematics — when a scientist or a philosopher asks a question, they also ask how to go about answering that question, seek to make the question make more sense, etc.. (However, it is probably moderately more common in math than in science for things which first show up in thinking to become objects of study; this adds to the weirdness of drawing a line between object and meta in math. 
Here's one stack of examples: one can get from the activities of [counting, ordering, measuring (lengths, areas, volumes, times, masses), comparing in quantity/size, adding, taking away, distributing (which one does initially with particular things)] to numbers (as abstract things which can be manipulated) and arithmetic on numbers (like, one can now add 2 and 3, as opposed to only being able to put together a collection of 2 objects of some kind with a collection of 3 objects of another kind), which is a main contributor to e.g. opening up an arena of mathematical activities in elementary number theory, algebra, and geometry; one can get from one doing operations/calculations to/with numbers (like squaring the side length of a square to find its area) to the notion of a function (mapping numbers to numbers), which contributes to it becoming sensible to e.g. add functions, rescale functions, find fourier decompositions of periodic functions, find derivatives, integrate, find extrema, consider and try to solve functional equations; one can again turn aspects of these activities with functions into yet more objects of study, e.g. now getting vector spaces of functions, fourier series/transforms (now as things you might ask questions about, not just do), derivatives and integrals of functions, functionals, extremal points of functions, and differential equations, and looking at one's activities with functions can e.g. let/make one state and prove the fundamental theorem of calculus and the extreme value theorem; etc.. (I think these examples aren't entirely historically accurate — for example, geometric thinking and infinitesimals are neglected in the above story about how calculus was developed — but I think the actual stories would illustrate this point roughly equally well. Also, the actual process is of course not that discrete: there aren't really clear steps of getting objects from activities and starting to perform new activities with these new objects.) 
(To avoid a potential misunderstanding: even though many things studied in mathematics are first invented/discovered/encountered "in" our (thinking-)activities, I do not mean to say that the mathematical study of these things is then well-seen as the study of some aspects of (thinking-)activities — it's probably better-seen as a study of some concrete abstract things (even though it is often still very useful to continue to understand these abstract things in part by being able to perform and understand something like the activities in which they first showed up).) Philosophy also does a bunch of seeing things in thought and proceeding to talk about them; here's an example stack: "you should do X"-activities -> the notion of an obligation -> developing systems of obligations, discussing when someone has an obligation -> the notion of an ethical system/theory -> comparing ethical theories, discussing how to handle uncertainty over ethical theories -> the notion of a moral parliament. Of course, science also involves a bunch of taking some stuff discovered in previous activities as subject matter — consider how there is a branch of physics concerned with lenses and mirrors and a branch concerned with electric circuits (with batteries, resistors, capacitors, inductors, etc.) 
or how it's common for a science to be concerned with its methodology (e.g., econometrics isn't just about running regressions on new datasets or whatever, but also centrally about developing better econometric methods) — but I guess that given that this step tends to take one from some very concrete stuff in the world to some somewhat more abstract stuff, there's a tendency for these kinds of steps to exit science and land in math or philosophy (depending on whether the questions/objects are clearly specified or not) (for example, if an econometrician asks a clear methodological question that can be adequately studied without needing to make reference to some real-world context, then that question might be most appropriately studied by a [mathematical statistic]ian).) ↩︎

I should maybe say more here, especially if I actually additionally want to communicate some direct intuition that the two things are equi-infinite. ↩︎

this should maybe be split into more classes (on each side of the analogy between technological things and mathematical things) ↩︎

one can make a further decision about whether to look "inside" the rationals as well here ↩︎

incidentally, if you squint, Gödel's completeness theorem says that anything which can be talked about coherently exists; it'd be cool to go from this to saying that in math, given any "coherent external structure", there is an "internal structure"/thing which [gives rise to]/has that external structure; unfortunately, in general, there might only be such a thing in the same sense that there is a "proof" of the Gödel sentence G — (assuming PA is consistent) there is indeed an object x in some model of PA such that the sentence we thought meant that x is a proof of G evaluates to True, but unfortunately for our story (and fortunately for the coherence of mathematics), this x does not really correspond to a proof of G. ↩︎

One could try to form finer complexity classes (maybe like how there are different infinite cardinalities in set theory), i.e., make it possible to consider one infinite problem more infinite than another. I'd guess that the problems considered here would still remain equi-infinite even if one attempts some reasonable such stratification. ↩︎

I think there are also many other important kinds of thinking-technologies — we're just picking something to focus on here. ↩︎

something like this was used for AlphaGeometry (Fig. 3) ↩︎

For example, you might come up with the notion of a graph minor when trying to characterize planar graphs. The notion of a graph minor can "support" a characterization of planar graphs. ↩︎

I find it somewhat askew to speak of structures "in the brain" here — would we say that first-order logic was a structure "in the brain" before it was made explicit (as opposed to a structure to some extent and messily present in our (mathematical) thought/[reasoning-practices])? But okay, we can probably indeed also take an interest in stuff that's "more in the brain". ↩︎

I don't actually have a reference here, but there are surely papers on plants responding to some signals in a sorta-kinda-bayesian way in some settings? ↩︎

I'd also say the same if asked to guess at a higher structure "behind/in" doing more broadly. ↩︎

Language and words are largely (used) for thinking, not just for transferring information. ↩︎

words and mathematical concepts and theorems are also sorta tools, so I should really say e.g. "we also make more external tools" in this sentence ↩︎

well, all the useful ones, anyway ↩︎

including by hopefully opening up a constellation of questions to further inquiry ↩︎

you know, like [−1,1]^3 ↩︎

If that's too easy for you, I'm sure you can find a tougher question about the cube which is appropriate for you. Maybe this will be fun: take a uniformly random 2-dimensional slice through the center of an n-dimensional hypercube; what kind of 2-dimensional shape do you see, and what's the expected number of faces? (I'm not asking for exact answers, but for a description of what's roughly going on asymptotically in n.) ↩︎

this is particularly true in the context of trying to solve alignment for good; it is plausibly somewhat less severe in the context of trying to end the present period of (imo) acute risk with some AI involvement; I will return to these themes in Note 7 ↩︎

let's pretend quantum mechanics isn't a thing ↩︎

I think there's a unity to being good at things, and I admit that the cluster of views on intelligence in these notes — namely, thinking this highly infinite thing, putting to use structures from a very diverse space — has some trouble/discomfort admitting/predicting/[making sense of] this. While I think there's some interesting/concerning tension here, I'm not going to address it further in these notes. ↩︎

by "fooming", I mean: becoming better at thinking, understanding more, learning, becoming more capable/skillful ↩︎

ignoring the heat death of this universe or some other such thing that might end up holding up and ignoring terrorism (e.g. by negative utilitarians :)) etc., the history of thought will probably always only be getting started ↩︎

in this example, the truth values are the "content" ↩︎

For example, you might just be going through all finite strings in order, checking for each string whether it is a valid proof of some sentence from the axioms, and if it is, assigning truth value 1 to that sentence and truth value 0 to its negation. ↩︎
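A minimal sketch of the brute-force procedure described in the footnote above, in Python. Everything concrete here is a hypothetical stand-in chosen to keep the search tiny: the four-symbol alphabet, the two axioms, and the single inference rule (modus ponens) are assumptions for illustration, not anything from these notes. A "proof" is taken to be a `;`-separated list of lines, each of which must be an axiom or follow from two earlier lines.

```python
from itertools import count, islice, product

ALPHABET = "pq>;"        # hypothetical toy alphabet: two atoms, implication, line separator
AXIOMS = {"p", "p>q"}    # hypothetical toy axioms


def all_strings():
    """Yield every nonempty finite string over ALPHABET, shortest first."""
    for n in count(1):
        for chars in product(ALPHABET, repeat=n):
            yield "".join(chars)


def proved_sentence(candidate):
    """Return the sentence that `candidate` proves, or None if it is not a valid proof."""
    lines = candidate.split(";")
    for i, line in enumerate(lines):
        earlier = lines[:i]
        # Modus ponens: `line` follows if some earlier x and "x>line" both appear earlier.
        by_modus_ponens = any(f"{x}>{line}" in earlier for x in earlier)
        if line not in AXIOMS and not by_modus_ponens:
            return None
    return lines[-1]  # a valid proof proves its last line


def assign_truth_values(max_candidates):
    """Check the first `max_candidates` strings and record truth values for what they prove."""
    truth = {}
    for candidate in islice(all_strings(), max_candidates):
        sentence = proved_sentence(candidate)
        if sentence is not None:
            truth[sentence] = 1        # the proved sentence
            truth["~" + sentence] = 0  # its negation
    return truth
```

With these toy axioms, `assign_truth_values(4**8)` checks every string of length at most 7 (and some of length 8) and ends up marking `p`, `p>q` and `q` as true. The loop is fully explicit, but the number of candidate strings grows exponentially with their length.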

Like, if there were any difference between the areas here, it'd surely involve math being more doable with a crisp system than science/tech/philosophy? ↩︎

it might help to take some mathematical subject you're skilled in and think about how you operate differently in it now that you have reprogrammed yourself into thinking in terms of its notions, comparing that to how you were thinking when solving problems in the textbook back when you were first learning it (it might help to pick something you didn't learn too long ago, though, so you still have some sense of what it was like not to be comfortable with it; alternatively/additionally, you could try to compare to some other subject you're only learning now) — like, if you're comfortable with linear algebra, think about how you can now just think in terms of vectors and linear maps and kernels and singular value decompositions and whatever, but how when you were first learning these things, you might have been translating problems into more basic terms that you were familiar with, or making explicit calls to facts in the textbook without having a sense of what's going on yourself ↩︎

and again, your own thinking is not just “operating on” your concepts, but also made (in part) of your concepts ↩︎

One could try to see this picture in terms of the (constitutive) ideas involved in thinking being “compute multipliers”, with anything that gets very far in not too much compute needing to find many compute multipliers for itself. ↩︎

especially young humans ↩︎

though you might even just be able to train yourself into being able to do that, actually ↩︎

That is, what 3-dimensional body is this intersection? ↩︎

maybe after I've already said "thinking is an organic/living, technological, developing, open-ended, on-all-levels-self-reinventing kind of thing", as I have above ↩︎

I'm aware that this would be circular if conceived of as a definition of thinking. ↩︎

I feel like this might sound spooky, but I really think it isn't spooky — I'm just trying to describe the most ordinary process of reworking a concept. One reason it might sound spooky is that I'm describing it overly from the outside. From the inside, it could eg look like noting that knowledge has some properties, and then trying to make sense of what it could be more precisely given that it has those properties. ↩︎

note also that one can be improving one's thinking in these ways without explicitly asking these questions ↩︎

what I mean by this more precisely is just what I've said above ↩︎

Here's some context which can hopefully help make some sense of why one might be interested in whether confusion is going away (as well as in various other questions discussed in the present notes). You might have a picture of various imo infinite endeavors in which pursuing such an endeavor looks like moving on a trajectory converging to some point in some space; I think this is a poor picture. For example, this could show up when talking of being in reflective equilibrium or reflectively stable, when imagining coherent extrapolated volition as some sort of finished product (as opposed to there being a process of "extrapolation" genuinely continuing forever), when talking of a basin of attraction in alignment, when thinking of science or math as converging toward some state where everything has been understood, when imagining reaching some self-aware state where you've mostly understood your own thinking (in its unfolding), or, in the case of this note, when imagining deconfusion/philosophy/thinking as approaching some sort of ultimate deconfused state. If we want to think of a mind being on a trajectory in some space, I'd instead suggest thinking of it as being on a trajectory of flight, running off to infinity in some weird jagged fashion in a space where new dimensions keep getting revealed (no, not even converging in projective space or whatever). Or (I think) better still, we could maybe imagine a "(tentacled?)
blob of understanding" expanding into a space of infinitely high dimension (things should probably be discrete — you should probably imagine a lattice instead of continuous space), where a point being further in the interior of the blob in more directions corresponds to a thing being [more firmly]/[less confusedly] understood (perhaps because of having been more firmly put in its proper context) — given reasonable assumptions, it will always remain the case that most points in the blob are close to the boundary of the blob in many directions (a related fact: a unit ball in high dimension has most of its volume near its surface) so "the blob" will always remain mostly confused, even though any particular point will eventually be more and more securely in the interior of the blob so any particular thing will eventually be less confusedly grasped. To be clear: the present footnote is mostly not intended as an argument in support of this view — I'm mostly just stating the question ↩︎
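The "related fact" about the unit ball mentioned in the footnote above can be checked with a one-line formula. This is only a sketch of that fact for a continuous ball, not of the lattice picture; the function name `shell_fraction` and the choice of shell thickness 0.1 are assumptions made for illustration.

```python
def shell_fraction(n, eps):
    """Fraction of the unit n-ball's volume lying within distance eps of its surface."""
    # The volume of a ball of radius r in n dimensions scales as r**n,
    # so the inner ball of radius (1 - eps) holds (1 - eps)**n of the total volume.
    return 1.0 - (1.0 - eps) ** n


if __name__ == "__main__":
    for n in (1, 3, 10, 100, 1000):
        print(n, shell_fraction(n, 0.1))
```

For a fixed shell thickness, the fraction tends to 1 as the dimension n grows, which is the sense in which most of the volume sits near the boundary.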

Also, I haven't really decided if I want to be saying something about the importance of confusion relative to other stuff or if I want to be saying something about whether confusion will continue to play a very important role instead. ↩︎

That said, the project could totally succeed in other ways — for example, trying to address some issue with a naive construction of such a language, one could discover/[make explicit]/invent a novel thinking-structure. ↩︎

Incidentally: I’m clearly confusedly failing to distinguish between different kinds of confusion in this note :). ↩︎

For example, it is probably good for many purposes to employ an "ecological arsenal" of concepts — in particular, to have concepts be ready to "evolve", "take on new responsibilities/meanings", "carry weight in our (intellectual) pursuits in new ways", "enter into new relations with each other". Maybe this looks weird to you if you are used to only thinking of words/concepts as things which are supposed to somehow just refer; I suggest that you also see how words/concepts are like [technological components]/[code snippets]/tools which can [make up]/[self-assemble]/[be assembled] into (larger) apparatuses/programs/thoughts/activities — given this, having a corps of dynamic concepts might start to look sensible. ↩︎

That said, assigning probabilities to pretty clear statements is very much a sensible/substantive/useful/real thing — e.g., in the context of prediction markets. ↩︎

Though note that one could also look at arbitrage as an example of this, and there's a case to be made for opening up a new arbitrage route increasing some sort of order/coherence despite putting some structures in a new relation. ↩︎

This is related to it being good to "train" the thinking-system in part "end-to-end". ↩︎

I don't know if I should be fixing a target and then either asking each to do its work or looking for examples of evolution having done that and asking humans to do it (in these cases, evolution might come up with a thing that also does 100 other things), or painting the target around some stuff evolution has made and asking humans to make something similar. ↩︎

I mean like, up to the heat death of the universe (if that ends up holding up) or maybe some other limit like that. What I really mean is that there isn't a time of thinking/fooming followed by a time of doing/implementing/enjoying. ↩︎

I wonder if it'd be possible for the relative role of philosophy/[conceptual refactoring]/thought in thinking better to be reduced (compared to e.g. the role of computational resources) in the future (either indefinitely, or for some meaningful period of time). For example, maybe we could imagine a venture-capitalist-culture-spawned entity brazenly shipping a product that wipes them out, followed by that thing brazenly shipping an even mightier product that wipes it out, and so on many times in succession, always moving faster still and in a philosophically unserious fashion and breaking still more things? That said, we could also imagine reasons for the relative role of serious thought to go up in the world — e.g., maybe that'd be good/rational and that's something the weltgeist would realize more when more intelligent, or maybe ideas becoming even easier to distribute is going to continue increasing the relative value of ideas, or maybe better mechanisms capturing the value provided by philosophical ideas are going to come into use, or maybe a singleton could emerge and have an easier time with coordination issues preventing the world from being thought-guided. Anyway, even if there were a tendency in the direction of the relative role of philosophy being reduced in the world, there's probably no tendency for philosophy to be on its way out of the world. (I mean "philosophy" here in some sense that is not that specific to humans — I think philosophy-the-human-thing might indeed be lost soon, sadly (because humans will probably get wiped out by AI, sadly).) ↩︎

or potentially some future more principled measures of this flavor ↩︎

Like, maybe I'd say that the end of thinking/fooming is still further away than $10^{10}$ years of thinking "at the 2024 rate". ↩︎

There are also various variants of the big alignment endeavor and various variants of these small problems and various other small problems and various finite toy problems with various relations to the big problem. If you have some other choices of interest here, what I say could, mutatis mutandis, still apply to your variants. ↩︎

of course, creating humanity-next-year might be not-fine on some absolute scale because it'll maybe commit suicide with AI or with something else, but it's not like it's more suicidal than humanity-now, so it's "fine" in a relative sense ↩︎

I do not consider this an exhaustive list of systems smarter than humanity which are fine and possible to create. For instance, it could be feasible at some point to create a system which is better than humans at many things and worse than humans at some things, perhaps being slightly smarter than humans when we perform some aggregation across all domains/skills, and which is highly non-human, but which wants to be friends with humans and to become smarter together instead of becoming smarter largely alone, with some nontrivial number of years of fooming together (perhaps after/involving a legitimate reconciliation/merge of that system with humans). But I think such a scenario is very weird/unlikely on our default path. More generally, I think the list I provide plausibly comes much closer to exhausting the space of “solutions” to the small alignment problem than most other current pictures of alignment would suggest. In particular, I think that creating some sort of fine-to-create super-human benefactor/guardian that "sits outside human affairs but supports them"/"looks at the human world from the outside" is quite plausibly going to remain a bad idea forever. (One could try to construct such a world with some humans fooming faster than others and acting as guardians, but I doubt this would actually end up looking much like them acting as guardians (though there could be some promising setup I haven't considered here). I think it would very likely be much better than a universe with a random foom anyway, given that some kind of humanity might be doing very much and getting very far in such a universe — I just doubt it would be (a remotely unconditioned form of) this slower-fooming humanity that the faster-fooming humans were supposed to act as guardians for.) I elaborate on these themes in later notes. ↩︎

in particular, humanity ↩︎

One of my more absurd(ly expressed) hopes with these notes is to (help) push alignment out of its present "(imo) alchemical stage", marked by people attempting to create some sort of ultimate "aligned artifact" — one which would plausibly solve all problems and make life good eternally (and also marked by people attempting to transmute arbitrary cognitive systems into "aligned" ones, though that deserves additional separate discussion). (To clarify: this analogy between the present alignment field and alchemy is not meant as a further argument — it just allows for a sillier restatement of a claim already made.) ↩︎

To say a bit more: I'm unsure about how long a stretch one can reasonably think this about. It obviously depends on the types of capability-gainings involved — for example, there are probably meaningful differences in "safety" between (a) doing algebraic geometry, (b) inventing/discovering probability and beginning to use it in various contexts, (c) adding "neurons" via brain-computer interface and doing some kind of RL-training to set weights so these neurons help you do math (I don't actually have a specific design for this, and it might well be confused/impossible, but try to imagine something like this), and (d) making an AI hoping it will do advanced math for you, even once we "normalize" the items in this comparison so that each "grants the same amount of total capability". These differences can to some extent be gauged ex ante, and at "capability gain parity", there will probably be meaningful selection toward "safer" methods. Also relevant to the question about this "good stretch" (even making sense): the extent to which one's values mostly make sense only "in one's own time". I'm currently confused about these and other relevant background matters, and so lack a clear view of how long a good stretch (starting from various future vantage points) one could reasonably hope for. I think I'd give probability at least $10^{-6}$ to humanity having a worthwhile future of at least $10^{10}$ "subjective years". ↩︎

But this is plausibly still specification gaming. ↩︎

One could also ask more generally if there is a textbook for making an alien AI which does something delimited-but-transformative (like making mind uploads) and then self-destructs (and doesn't significantly affect the world except via its making of mind uploads, and with the mind upload tech it provides not being malign). ↩︎

though most good futures surely involve us changing radically, and in particular involve a great variety of previously fairly alien AI components being used by/in humanity ↩︎

One can also ask versions where many alien AIs are involved (in parallel or in succession), with some alien AIs being smarter than humanity indefinitely. I'd respond to these versions similarly. ↩︎

That said, I'll admit that even if you agree that alignment isn't the sort of thing that can be solved, you could still think there are good paths forward on which AIs do alignment research for us, indefinitely handling the infinitely rich variety of challenges about how to proceed. I think that this isn't going to work out — that such futures overwhelmingly have AIs doing AI things and basically nothing meaningfully human going on — but I admit that the considerations in these notes up until the present point are insufficient for justifying this. I'll say more about this in later notes. ↩︎

In fact, I don't know of any research making any meaningful amount of progress on the technical challenge of making any AI which is smarter than us and good to make. But also, I think it's plausible we should be doing something much more like becoming smarter ourselves forever instead. These statements call for (further) elaboration and justification, which I aim to provide in upcoming notes in this sequence. ↩︎

I don't mean anything that mysterious here — I largely just mean what I've already said in previous notes, though this theme and the broader theme that history won't end could be developed much further (it will be developed a bit further in later notes). ↩︎

Instead of "primitive compositional language", I originally wanted to say "language" here, but since I don't think language is that much of a definite single thing — I think it is a composite of many "ideas", and very open to new "ideas" getting involved — I went with "primitive compositional language", trying to give a slightly less composite thing. But that is surely still not that unitary. ↩︎

or maybe multiple such systems, in parallel or in succession ↩︎

There are also some things made by/of other life (such as a beaver dam and a forest ecosystem) and some things made by physics (such as stars, planets, oceans, volcanoes, clouds). There's often (almost always?) some selection/optimization process going on even to make these structures “of mere physics”. ↩︎