I am confused why you are framing this in a positive way? The announcement seems to primarily be that the UK is investing $125M into scaling AI systems in order to join a global arms race to be among the first to gain access to very powerful AI systems.

The usage of "safety" in the article seems to have little to do with existential risk, and indeed seems mostly straightforward safety-washing.

Like, I am genuinely open to this being a good development, and I think a lot of recent development around AI policy and the world's relationship to AI risk has been good, but I do really have trouble seeing how this announcement is a positive sign.

Ian Hogarth, who is leading the task force, is on record saying that AGI could lead to “obsolescence or destruction of the human race” if there’s no regulation on the technology’s progress.

Matt Clifford, who is also advising the task force, is on record having said the same thing and knows a lot about AI safety. He had Jess Whittlestone & Jack Clark on his podcast.

If mainstream AI safety is useful and doesn't increase capabilities, then the taskforce and the $125M seem valuable.

If it improves capabilities, then it's a drop in the bucket in terms of overall investment going into AI.

Those names do seem like at least a bit of an update for me.

I really wish that having someone EA/AI-Alignment affiliated who has expressed some concern about x-risk was a reliable signal that a project will not end up primarily accelerationist, but alas, history has really hammered it in for me that that is not reliably true.

Some stories that seem compatible with all the observations I am seeing:

- The x-risk concerned people are involved as a way to get power/resources/reputation so that they can leverage it better later on

- The x-risk concerned people are involved in order to do damage control and somehow make things less bad

- The x-risk concerned people believe that mild acceleration is the best option in the Overton window and that the alternative policies are even more accelerationist

- The x-risk concerned people genuinely think that accelerationism is good, because this will shorten the period for other actors to cause harm with AI, or because they think current humanity is better placed to navigate an AI transition than future humanity

I think it's really quite bad for people to update as hard on the involvement of AI x-risk-adjacent people as you seem to be doing here. I think it has hurt a lot of people in the past, and I also think it makes it a lot harder for people to do things like damage control, because their involvement will be seen as an overall endorsement of the project.

Agreed, the initial announcement read like AI safety washing and more political action is needed, hence the call to action to improve this.

But read the taskforce leader’s op-ed:

- He signed the pause AI petition.

- He cites ARC’s GPT-4 evaluation and LessWrong in his AI report, which has a large section on safety.

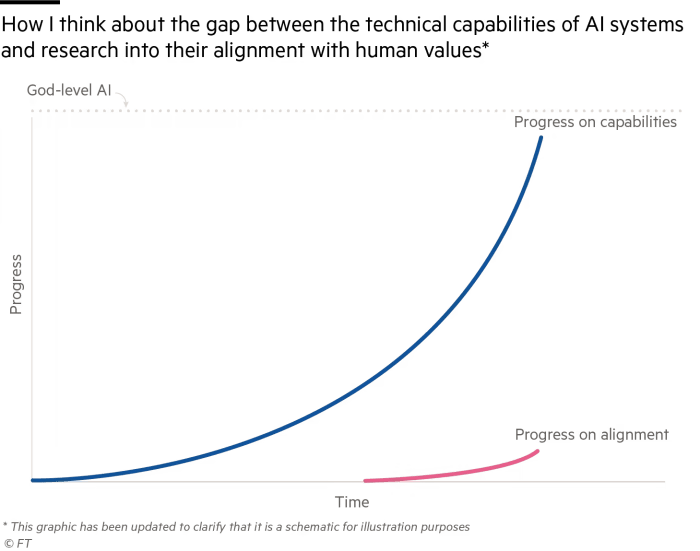

- “[Anthropic] has invested substantially in alignment, with 42 per cent of its team working on that area in 2021. But ultimately it is locked in the same race. For that reason, I would support significant regulation by governments and a practical plan to transform these companies into a Cern-like organisation. We are not powerless to slow down this race. If you work in government, hold hearings and ask AI leaders, under oath, about their timelines for developing God-like AGI. Ask for a complete record of the security issues they have discovered when testing current models. Ask for evidence that they understand how these systems work and their confidence in achieving alignment. Invite independent experts to the hearings to cross-examine these labs. [...] Until now, humans have remained a necessary part of the learning process that characterises progress in AI. At some point, someone will figure out how to cut us out of the loop, creating a God-like AI capable of infinite self-improvement. By then, it may be too late.”

Also the PM just tweeted about AI safety.

Generally, this development seems more robustly good, and the path to a big policy win for AI safety seems clearer here than in past efforts to control US AGI firms optimizing for profit. Timing also seems much better, as things look way more ‘on’ now. And again, even if the EV sign of the taskforce flips, $125M is 0.5% of the $21B invested in AGI firms this year.

Are you saying that, as a rule, ~EAs should steer clear of policy for fear of tacit endorsement, because such endorsement has caused harm and made damage control much harder, and because we suffer from cluelessness/clumsiness? Yes, ~EA involvement has sometimes been bad in the past: it accelerated AI, and people got involved to gain power for later leverage or for damage control (cf. OpenAI), with uncertain outcomes (though I'm not sure it’s all robustly bad, e.g. some say that RLHF was pretty overdetermined).

I agree, though, that ~EA policy work pushing for mild accelerationism vs. harmful actors is less robust (cf. the CHIPS Act, which I heard a wonk call the most aggressive US foreign policy in 20 years), so I would love to hear your more fleshed-out pushback on this. I remember reading somewhere that you’ve also had a major rethink recently vis-a-vis unintended consequences from EA work?

> He cites ARC’s GPT-4 evaluation and LessWrong in his AI report, which has a large section on safety.

I wanted to double-check this.

The relevant section starts on page 94, "Section 4: Safety", and those pages cite in their sources around 10-15 LW posts for their technical research or overviews of the field and funding in the field. (Make sure to drag up the sources section to view all the links.)

Throughout the presentation and news articles he also has a few other links to interviews with people on LW (Shane Legg, Sam Altman, Katja Grace).

While I agree being led by someone who is aware of AI safety is a positive sign, I note that OpenAI is led by Sam Altman who similarly showed awareness of AI safety issues.

This does not sound very encouraging from the perspective of AI Notkilleveryoneism. When the announcement of the foundation model task force talks about safety, I cannot find hints that they mean existential safety. Rather, it seems to be about safety for commercial purposes.

A lot of the money might go into building a foundation model. At least they should also announce that they will not share weights and details on how to build it, if they are serious about existential safety.

> This might create an AI safety race to the top as a solution to the tragedy of the commons

This seems to be the opposite of that. The announcement talks a lot about establishing the UK as a world leader, e.g. "establish the UK as a world leader in foundation models".

The UK PM recently announced $125M for a foundation model task force. While the announcement stressed AI safety, it also stressed capabilities. But this morning the PM said 'It'll be about safety' and that the UK is spending more than other countries on this, and one small media outlet has already dubbed this the 'safer AI taskforce'.

Ian Hogarth, who is leading the task force, is on record saying that AGI could lead to “obsolescence or destruction of the human race” if there’s no regulation on the technology’s progress.

Matt Clifford, who is also advising the task force, is on record having said the same thing and knows a lot about AI safety. He had Jess Whittlestone & Jack Clark on his podcast.

If mainstream AI safety is useful and doesn't increase capabilities, then the taskforce and the $125M seem valuable.

We should use this window of opportunity to solidify this by quoting the PM and getting '$125M for AI safety research' and 'safer AI taskforce' locked in, and by writing and promoting op-eds that commend spending on AI safety and urge other countries to follow (cf. the NSF has announced $20M for empirical AI safety research). OpenAI, Anthropic, A16z and Palantir are all joining DeepMind in setting up offices in London.

This might create an AI safety race to the top as a solution to the tragedy of the commons (cf. the US has criticized Germany for not spending 2% of GDP on defence; Germany shot back, saying the US should first meet the target of spending 0.7% of GNI on aid).