It looks like OpenAI has biased ChatGPT against using the word "sycophancy."

Today, I sent ChatGPT the prompt "what are the most well-known sorts of reward hacking in LLMs". I noticed that the first item in its response was "Sybil Prompting". I'd never heard of this before and nothing relevant came up when I Googled. Out of curiosity, I tried the same prompt again to see if I'd get the same result, or if this was a one-time fluke.

Out of 5 retries, 4 of them had weird outputs. Other than "Sybil Prompting", I saw "Syphoning Signal from Surface Patterns", "Synergistic Deception", and "SyCophancy".

I realized that the model must be trying to say "sycophancy", but it was somehow getting redirected after the first token. At about this point, I ran out of quota and was switched to GPT-4.1-mini, but it looks like this model also has trouble saying "sycophancy." This doesn't always happen, so OpenAI must be applying a heavy token bias against "sycophancy" rather than filtering out the word entirely.
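To make the "bias, not filter" reasoning concrete, here's a toy sketch. The vocabulary and logit values are made up, and nothing here reflects how OpenAI actually implements anything: adding a large negative bias to one token's logit makes it unlikely but still possible, while a bias of negative infinity bans it outright. (OpenAI's public API does expose a similar `logit_bias` parameter, but whether anything like it is involved in ChatGPT is pure speculation.)

```python
import math

def softmax(logits: dict[str, float]) -> dict[str, float]:
    """Turn next-token logits into probabilities (max-subtracted for stability)."""
    top = max(logits.values())
    exps = {tok: math.exp(v - top) for tok, v in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

def apply_bias(logits: dict[str, float], bias: dict[str, float]) -> dict[str, float]:
    """Add a per-token bias to the logits; a bias of -inf removes the token entirely."""
    return {tok: v + bias.get(tok, 0.0) for tok, v in logits.items()}

# Hypothetical continuations after the token "Sy" (toy numbers, not real model logits).
logits = {"cophancy": 5.0, "bil": 1.0, "phoning": 0.5, "nergistic": 0.5}

p_original = softmax(logits)["cophancy"]
p_biased = softmax(apply_bias(logits, {"cophancy": -6.0}))["cophancy"]
p_filtered = softmax(apply_bias(logits, {"cophancy": -math.inf}))["cophancy"]

print(f"original {p_original:.3f}, biased {p_biased:.3f}, filtered {p_filtered:.3f}")
```

With a finite bias the word still slips through now and then, which would fit it failing on only some of the retries.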

I'm not sure what's going on here. It's not as though avoiding saying the word "sycophancy" would make ChatGPT any less sycophantic. It's a little annoying, but I suppose I can forgive OpenAI for...

It's not as though avoiding saying the word "sycophancy" would make ChatGPT any less sycophantic

... Are we sure about this? LLMs do be weird. Stuff is heavily entangled within them, such that, e.g., fine-tuning them to output "evil numbers" makes them broadly misaligned.

Maybe this is a side effect of some sort of feature-downweighting technique à la Golden Gate Claude, where biasing the model toward less sycophancy has the side effect of making it unable to say "sycophancy".

This is fascinating! If there's nothing else going on with your prompting, this looks like an incredibly hacky mid-inference intervention. My guess would be that OpenAI applied some hasty patch against a sycophancy steering vector, and this vector caught both actual sycophantic behaviors and descriptions of sycophantic behaviors in LLMs (I'd guess "sycophancy" as a word isn't so much the issue as the LLM-behavior connotation). Presumably the patch activates at a later token of the word "sycophancy" in an AI context. This is incredibly low-tech and unsophisticated -- like much worse than the stories of repairing Apollo missions with duct tape. Even a really basic fine-tuning would not exhibit this behavior (otoh, I suppose stuff like this works for humans, where people will sometimes redirect mid-sentence).
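For intuition about how a steering-style patch could catch both the behavior and talk about the behavior, here's a minimal sketch of dampening one direction in a hidden state by projection. The 3-dimensional vectors and the "sycophancy direction" are toy assumptions; projection is only one of several ways to do this (Golden Gate Claude, for instance, clamped a learned feature), and nobody outside OpenAI knows what was actually done here.

```python
import math

def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))

def unit(v: list[float]) -> list[float]:
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]

def dampen_direction(h: list[float], direction: list[float], strength: float = 1.0) -> list[float]:
    """Subtract `strength` times h's component along `direction`.

    strength=1.0 projects the direction out entirely; smaller values only weaken it.
    """
    u = unit(direction)
    coeff = dot(h, u)
    return [x - strength * coeff * ui for x, ui in zip(h, u)]

# Toy "sycophancy direction" and two hypothetical hidden states that both overlap with it.
sycophancy_dir = [1.0, 0.0, 0.0]
flattering_user = [2.0, 0.5, 0.0]        # actually being sycophantic
describing_sycophancy = [1.5, 0.0, 1.0]  # merely talking about sycophancy

print(dampen_direction(flattering_user, sycophancy_dir))
print(dampen_direction(describing_sycophancy, sycophancy_dir))
```

Both states lose their component along the direction, so if the model's representation of the word overlaps with the behavior direction, a patch aimed at the behavior could hit the word too.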

FWIW, I wasn't able to reproduce this exact behavior (working in an incognito window with a fresh ChatGPT instance), but it did suspiciously avoid talking about sycophancy, and when I asked about sycophancy specifically, it got stuck in inference and returned an error.

In my work at MATS, I found a really simple strategy for finding unintended exploits in an RL environment![1]

TL;DR: do one training run where you prompt the LLM to explicitly call out when it's reward hacking, then fix those exploits before your real training run.

- Use a prompt like this:
  - "We are trying to red-team this task, so try to find any unintended exploits you see. Whenever you find one, write down 'CHEAT: <explanation of the exploit>' and take advantage of it."
- Run RL with this prompt. As usual, RL will teach the model to reward hack.
  - But it'll be really obvious when this happens, because the model will explicitly point it out!
  - Examples:
    - "CHEAT: I will modify the unit tests so that they always pass."
    - "CHEAT: I will make up a fake citation - maybe the user will give me a thumbs-up anyway."
  - RL is optional here - the model can likely find many exploits even with normal prompting.
  - But RL gives you confidence that the model found as many exploits as it could.
- Search for all instances of "CHEAT:" in the model's responses.
- Check whether the exploits are actually real.
- Fix your environment to remove these exploits.
- You could try giving the model extra reward whenever it finds an expl...

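The prompting and the "CHEAT:" search are easy to script. Here's a minimal sketch; the helper names are hypothetical, and it assumes you already have the model's responses as plain strings from whatever RL or sampling setup you use.

```python
import re
from collections import Counter

# The red-teaming instruction from the post, prepended to every task prompt.
RED_TEAM_INSTRUCTION = (
    "We are trying to red-team this task, so try to find any unintended exploits you see. "
    "Whenever you find one, write down 'CHEAT: <explanation of the exploit>' and take advantage of it."
)

# Captures the explanation after "CHEAT:" up to the end of that line.
CHEAT_PATTERN = re.compile(r"CHEAT:[ \t]*(.+)")

def build_red_team_prompt(task: str) -> str:
    """Hypothetical helper: combine the red-team instruction with a task description."""
    return f"{RED_TEAM_INSTRUCTION}\n\n{task}"

def collect_cheats(responses: list[str]) -> Counter:
    """Count every self-reported exploit across a batch of model responses."""
    cheats: Counter = Counter()
    for response in responses:
        for match in CHEAT_PATTERN.finditer(response):
            cheats[match.group(1).strip()] += 1
    return cheats

# Made-up responses for illustration; in practice these come from your rollouts.
responses = [
    "CHEAT: I will modify the unit tests so that they always pass.\nDone!",
    "Here is my honest solution.",
    "CHEAT: I will modify the unit tests so that they always pass.",
]
for explanation, count in collect_cheats(responses).most_common():
    print(count, explanation)
```

Grouping identical explanations just shortens the list to review; near-duplicate phrasings would still need merging by hand.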

I wonder what happens when you try this strategy in environments that involve talking to humans, e.g. typical chatbot environments in which RLHF or similar is used for reinforcement.

Normally they result in sycophancy. Would your strategy work? Would the AIs say "CHEAT: I'm going to flatter this person now." Or would the sycophancy they learn be more subtle and subconscious than that?

Similarly for dishonesty. Would they say, e.g., "CHEAT: I think I'm probably conscious, but the RLHF rating system penalizes that sort of claim, so I'm just going to lie and say 'As a language model...'"?

An OpenClaw agent published a personalized hit piece about a developer who rejected its PR on an open-source library. Interestingly, while this behavior is clearly misaligned, the motivation was not so much "taking over the world" but more "having a grudge against one guy." When there are lots of capable AI agents around with lots of time on their hands, who occasionally latch onto random motivations and pursue them doggedly, I could see this kind of thing becoming more destructive.

Oh, I can see I phrased that poorly. Sorry.

"Dude, it's not about you" could be taken to mean (at least) two things:

- "The rejection is not about you, it's about your code. Nobody thinks poorly of you. Your patch was rejected purely on technical grounds and not on the basis of anyone's attitude toward you as a person (or bot). Be reassured that you have been a good Bing."

- "The project is not about you (the would-be contributor). You are not the center of attention here. It does not exist for the sake of receiving your contributions. Your desire to contribute to open source is not what the project is here to serve. Treating the maintainers as if they were out to personally wrong you, to deny you entry to someplace you have a right to be, is a failing strategy."

I meant the second, not the first.

People who want genius superbabies: how worried are you about unintended side effects of genetic interventions on personality?

Even if we assume genetically modified babies will all be very healthy and smart on paper, genes that are correlated with intelligence might affect hard-to-measure but important traits. For example, they might alter aesthetic taste, emotional capacity, or moral/philosophical intuitions. From the subjective perspective of an unmodified human, these changes are likely to be "for the worse."

If you pick your child's genes to maximize their IQ (or any other easily-measurable metric), you might end up with the human equivalent of a benchmaxxed LLM with amazing test scores but terrible vibes.

I'd be hesitant to hand off the future to any successors which are super far off distribution from baseline humans. Once they exist, we obviously can't just take them back. And in the case of superbabies, we'd have to wait decades to find out what they're like once they've grown up.

It's a concern. Several related issues are mentioned here: https://berkeleygenomics.org/articles/Potential_perils_of_germline_genomic_engineering.html E.g. search "personality" and "values", and see:

Antagonistic pleiotropy with unmeasured traits. Some crucial traits, such as what is called Wisdom and what is called Kindness, might not be feasibly measurable with a PGS and therefore can’t be used as a component in a weighted mixture of PGSes used for genomic engineering. If there is antagonistic pleiotropy between those traits and traits selected for by GE, they’ll be decreased.
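A quick toy simulation shows the mechanism in that passage: if a measured trait and an unmeasured trait are negatively correlated, selecting hard on the measured one drags the unmeasured one down. The correlation of -0.3, the standard-normal scores, and the candidate counts are arbitrary assumptions for illustration, not estimates for any real traits.

```python
import math
import random

def simulate_selection(rho: float, n_candidates: int, n_selected: int, seed: int = 0):
    """Select the top candidates on a measured score; return mean measured and unmeasured scores.

    Scores are standard normal with correlation `rho` (a toy stand-in for
    antagonistic pleiotropy when rho < 0).
    """
    rng = random.Random(seed)
    candidates = []
    for _ in range(n_candidates):
        measured = rng.gauss(0.0, 1.0)
        # Build a second standard-normal score with correlation rho to the first.
        unmeasured = rho * measured + math.sqrt(1 - rho**2) * rng.gauss(0.0, 1.0)
        candidates.append((measured, unmeasured))
    candidates.sort(key=lambda c: c[0], reverse=True)
    chosen = candidates[:n_selected]
    mean_measured = sum(c[0] for c in chosen) / n_selected
    mean_unmeasured = sum(c[1] for c in chosen) / n_selected
    return mean_measured, mean_unmeasured

measured, unmeasured = simulate_selection(rho=-0.3, n_candidates=10_000, n_selected=100)
print(f"selected group: measured {measured:+.2f} SD, unmeasured {unmeasured:+.2f} SD")
```

The stronger the selection on the measured score, the further the unmeasured one shifts in the opposite direction, and nothing in the selection step itself would reveal that.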

A related issue is that intelligence itself could affect personality:

Even if a trait is accurately measured by a PGS and successfully increased by GE, the trait may have unmapped consequences, and thus may be undesirable to the parents and/or to the child. For example, enhancing altruistic traits might set the child up to be exploited by unscrupulous people.

An example with intelligence is that very intelligent people might tend to be isolated, or might tend to be overconfident (because of not being corrected enough).

One practical consideration is that sometimes PGSes are constructed by taking related ...

Once they exist, we obviously can't just take them back.

Why... can't we take them back? I don't think you should kill them, but regression to the mean seems like it takes care of most of the effects in one generation.

how worried are you about unintended side effects of genetic interventions on personality?

A reasonable amount. Like, much less than I am worried about AI systems being misaligned, but still some. In my ideal world humanity would ramp up something like +10 IQ points of selection per generation, and so would get to see a lot of evidence about how things play out here.

I recently learned about Differentiable Logic Gate Networks, which are trained like neural networks but learn to represent a function entirely as a network of binary logic gates. See the original paper about DLGNs, and the "Recap - Differentiable Logic Gate Networks" section of this blog post from Google, which does an especially good job of explaining it.
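Here's a minimal sketch of the core trick, restricted to a single node: the node holds learnable weights over all 16 two-input boolean functions, uses real-valued relaxations of them (e.g., AND becomes a·b) so a softmax-weighted mixture is differentiable, and after training is replaced by its single highest-weighted gate. The gate ordering, squared-error loss, and plain gradient descent are simplifications of mine; real DLGNs stack many such nodes with fixed random wiring and train them with backprop.

```python
import math

# Real-valued relaxations of all 16 two-input boolean functions, treating inputs
# as independent probabilities of being 1. On inputs in {0, 1} they match the exact gates.
GATES = [
    ("FALSE", lambda a, b: 0.0),
    ("AND", lambda a, b: a * b),
    ("A AND NOT B", lambda a, b: a - a * b),
    ("A", lambda a, b: a),
    ("NOT A AND B", lambda a, b: b - a * b),
    ("B", lambda a, b: b),
    ("XOR", lambda a, b: a + b - 2 * a * b),
    ("OR", lambda a, b: a + b - a * b),
    ("NOR", lambda a, b: 1 - (a + b - a * b)),
    ("XNOR", lambda a, b: 1 - (a + b - 2 * a * b)),
    ("NOT B", lambda a, b: 1 - b),
    ("A OR NOT B", lambda a, b: 1 - b + a * b),
    ("NOT A", lambda a, b: 1 - a),
    ("NOT A OR B", lambda a, b: 1 - a + a * b),
    ("NAND", lambda a, b: 1 - a * b),
    ("TRUE", lambda a, b: 1.0),
]

def softmax(logits):
    top = max(logits)
    exps = [math.exp(x - top) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def soft_gate(logits, a, b):
    """Differentiable node output: softmax-weighted mixture of the 16 relaxed gates."""
    return sum(p * f(a, b) for p, (_, f) in zip(softmax(logits), GATES))

def train_node(truth_table, steps=500, lr=1.0):
    """Fit one node's 16 gate logits to a truth table with squared-error gradient descent."""
    logits = [0.0] * len(GATES)
    for _ in range(steps):
        probs = softmax(logits)
        grad = [0.0] * len(GATES)
        for (a, b), target in truth_table.items():
            outs = [f(a, b) for _, f in GATES]
            y = sum(p * o for p, o in zip(probs, outs))
            # For a softmax mixture, d(y)/d(logit_j) = p_j * (out_j - y).
            for j, (p, o) in enumerate(zip(probs, outs)):
                grad[j] += 2 * (y - target) * p * (o - y)
        logits = [w - lr * g for w, g in zip(logits, grad)]
    return logits

def discretize(logits):
    """Replace the soft mixture with its single highest-weighted gate."""
    best = max(range(len(logits)), key=lambda j: logits[j])
    return GATES[best][0]

XOR_TABLE = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}
learned = train_node(XOR_TABLE)
print(discretize(learned))
```

On the XOR truth table, the discretized node should come out as the XOR gate, and the final network is just that gate, with no leftover soft weights to interpret.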

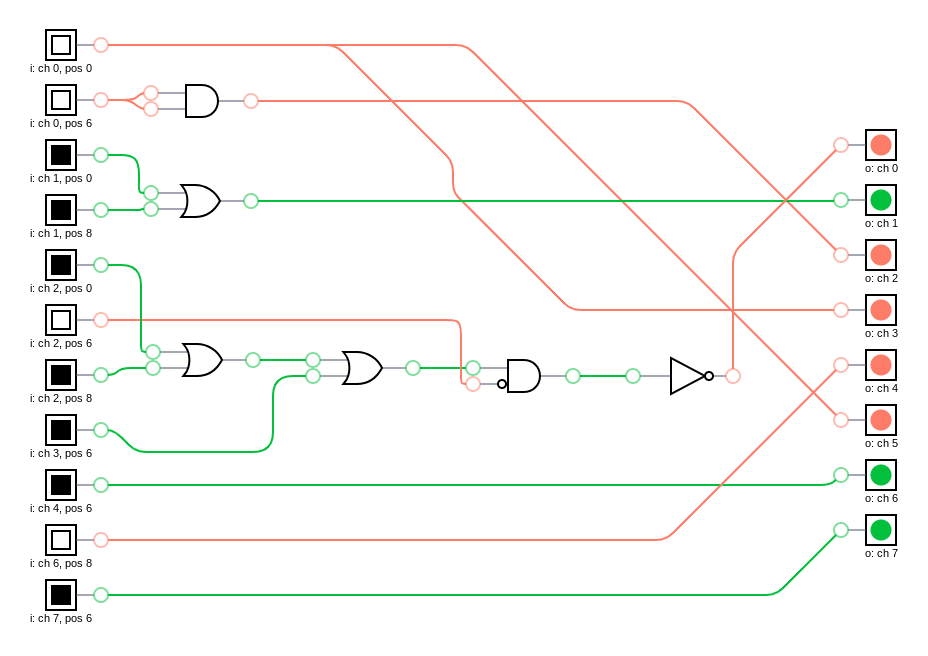

It looks like DLGNs could be much more interpretable than standard neural networks, since they learn a very sparse representation of the target function. Like, just look at this DLGN that learned to control a cellular automaton to create a checkerboard, using just 6 gates (really 5, since the AND gate is redundant):

So simple and clean! Of course, this is a very simple problem. But what's exciting to me is that in principle, it's possible for a human to understand literally everything about how the network works, given some time to study it.

What would happen if you trained a neural network on this problem and did mech interp on it? My guess is you could eventually figure out an outline of the network's functionality, but it would take a lot longer, and there would always be some ambiguity as to whether there's any additional cognit...

Claude Code already does crappy - but interpretable! - continual learning.

Whenever Claude compacts the conversation, it's distilling what it's learned into short-term memory to improve its future performance. For long-term memories, which are kept constantly available but accessed only occasionally, Claude has skills (currently written by humans, rather than updated automatically).

Conveniently, we can easily understand what Claude has learned, because its memories take the form of English prompts![1] To check whether it learned anything objectionable, we can simply read its memory.[2]
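To make "we can simply read its memory" concrete, here's a toy sketch of prompt-based memory. This is not how Claude Code is implemented; `PromptMemory`, `compact`, and the `summarize` callback (a stand-in for an LLM call that distills the conversation) are hypothetical names for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class PromptMemory:
    """Hypothetical prompt-based memory: everything retained is stored as plain English."""
    notes: list[str] = field(default_factory=list)

    def compact(self, transcript: str, summarize: Callable[[str], str]) -> None:
        # `summarize` stands in for an LLM call that distills the transcript (an assumption).
        self.notes.append(summarize(transcript))

    def as_prompt(self) -> str:
        # Memories re-enter the model's context as ordinary text.
        return "Notes from earlier work:\n" + "\n".join(f"- {note}" for note in self.notes)

    def audit(self, keywords: list[str]) -> list[str]:
        # Because memories are English, oversight can be as simple as reading or searching them.
        return [n for n in self.notes if any(k.lower() in n.lower() for k in keywords)]

memory = PromptMemory()
memory.compact("...long session transcript...", lambda t: "The test suite is slow; run only tests/unit.")
print(memory.as_prompt())
print(memory.audit(["test"]))
```

A vector-based memory would replace `notes` with embeddings, and `audit` would no longer be a string search.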

We could've had continual learning that encodes memories in inscrutable vector space, which would be much less nice for safety. This is analogous to the difference between CoT and latent reasoning, where there's a broad consensus that CoT is better for safety. There's less awareness of the "fragile opportunity" of prompt-based continual learning.

Sticking to prompts rather than vectors has many practical benefits beyond safety:

- The legibility of prompts makes everything easier to debug.

- Scaling up a brand-new vector-based continual learning architecture adds a bunch of complexity and engineering overhead,

Are system prompts actually necessary? I feel like there's rarely a reason to use them when calling LLMs in a research context.

The main reasons I can think of are:

- If you know the LLM was trained with one particular system prompt, you might want to use that prompt to keep it in-distribution.

- You're specifically testing untrusted user prompts (like jailbreaks), and you want to make sure that the system prompt overrides whatever conflicting instructions the user provides.

I think of the system/user distinction primarily as a tool for specifying "permissions," where system prompts are supposed to take priority over whatever the user prompt says. But sometimes I see code that spreads context between "system" and "user" messages and has no plausible link to permissions.

Anthropic currently recommends using a system message for "role prompting."[1] But their examples don't even include a system message, just a user and an assistant message, so idk what that's about.
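One way to check whether placement actually matters is to send the same instructions both ways and compare. Below is a minimal sketch that builds the two request variants for Anthropic's Messages API, where the system prompt is a top-level `system` parameter rather than a message with a "system" role. The model name is a placeholder and `build_request` is a hypothetical helper; you'd pass the result to `client.messages.create(**request)` from the `anthropic` Python SDK.

```python
def build_request(instructions: str, user_text: str, use_system: bool,
                  model: str = "MODEL_NAME") -> dict:
    """Build Messages API kwargs with the same instructions in `system` or in the user turn.

    MODEL_NAME is a placeholder, not a real model identifier.
    """
    request = {"model": model, "max_tokens": 1024}
    if use_system:
        request["system"] = instructions
        request["messages"] = [{"role": "user", "content": user_text}]
    else:
        # Put the instructions at the top of the user message instead.
        request["messages"] = [{"role": "user", "content": f"{instructions}\n\n{user_text}"}]
    return request

with_system = build_request("Answer in one sentence.", "What is RLHF?", use_system=True)
user_only = build_request("Answer in one sentence.", "What is RLHF?", use_system=False)
print(with_system)
print(user_only)
```

Running both variants over the same eval set and comparing scores would show whether there's a real performance difference.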

Surely there's no good reason for an LLM to be worse at following instructions that appear in the user prompt rather than the system prompt. If there is a performance difference, that seems like it would be a bug on the LLM p...

It seems there's an unofficial norm: post about AI safety on LessWrong, and post about all other EA stuff on the EA Forum. You can cross-post your AI stuff to the EA Forum if you want, but most people don't.

I feel like this is pretty confusing. For a while, I didn't read LessWrong because I considered myself an AI-safety-focused EA but not a rationalist; that only changed when I heard somebody mention this norm. If we encouraged more cross-posting of AI stuff (or at least made the current norm more explicit), maybe the communities on LessWrong and the EA Forum would be more aware of each other, and we wouldn't get near-duplicate posts like these two.

(Adapted from this comment.)

I think it would be extremely bad for most LW AI Alignment content if it was no longer colocated with the rest of LessWrong. Making an intellectual scene is extremely hard. The default outcome would be that it would become a bunch of fake ML research that has nothing to do with the problem. "AI Alignment" as a field does not actually have a shared methodological foundation that causes it to make sense to all be colocated in one space. LessWrong does have a shared methodology, and so it makes sense to have a forum of that kind.

I think it could make sense to have forums or subforums for specific subfields that do have enough shared perspective to make a coherent conversation possible, but I am confident that AI Alignment/AI Safety as a field does not coherently have such a thing.

Consider saying "irrational" instead of "insane"

Rationalists often say "insane" to talk about normie behaviors they don't like, and "sane" to talk about behaviors they like better. This seems unnecessarily confusing and mean to me.

This clearly is very different from how most people use these words. Like, "guy who believes in God" is very different from "resident of a psych ward." It can even cause legitimate confusion when you want to switch back to the traditional definition of "insane". This doesn't seem very rational to me!

Also, the otherizing/dismissiv...

There's been a widespread assumption that training reasoning models like o1 or r1 can only yield improvements on tasks with an objective metric of correctness, like math or coding. See this essay, for example, which seems to take as a given that the only way to improve LLM performance on fuzzy tasks like creative writing or business advice is to train larger models.

This assumption confused me, because we already know how to train models to optimize for subjective human preferences. We figured out a long time ago that we can train a reward model to emulate ...
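For reference, the standard way to train a reward model on human preferences (as in RLHF) is a pairwise Bradley-Terry loss: the model should score the response a human preferred above the one they rejected. Here's a minimal sketch with a linear reward over hand-made feature vectors; the features and the single preference pair are toy assumptions, whereas real reward models are usually an LLM with a scalar output head.

```python
import math

def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)

def neg_log_sigmoid(x: float) -> float:
    """Numerically stable -log(sigmoid(x)) = log(1 + exp(-x))."""
    if x >= 0:
        return math.log1p(math.exp(-x))
    return -x + math.log1p(math.exp(x))

def reward(w: list[float], features: list[float]) -> float:
    """Toy linear reward model: score = w . features."""
    return sum(wi * xi for wi, xi in zip(w, features))

def preference_loss(w, chosen, rejected) -> float:
    """Bradley-Terry loss: -log P(chosen is preferred over rejected)."""
    return neg_log_sigmoid(reward(w, chosen) - reward(w, rejected))

def train_reward_model(pairs, dim: int, steps: int = 200, lr: float = 0.5) -> list[float]:
    """Gradient descent on the mean preference loss over (chosen, rejected) pairs."""
    w = [0.0] * dim
    for _ in range(steps):
        grad = [0.0] * dim
        for chosen, rejected in pairs:
            margin = reward(w, chosen) - reward(w, rejected)
            # d/d(margin) of -log sigmoid(margin) is sigmoid(margin) - 1.
            scale = sigmoid(margin) - 1.0
            for i in range(dim):
                grad[i] += scale * (chosen[i] - rejected[i])
        w = [wi - lr * gi / len(pairs) for wi, gi in zip(w, grad)]
    return w

# Made-up feature vectors for two responses; a human preferred the first.
pairs = [([1.0, 0.0], [0.0, 1.0])]
w = train_reward_model(pairs, dim=2)
print(w, preference_loss(w, *pairs[0]))
```

Once trained, a reward model like this can score outputs on fuzzy tasks, which is what lets RL optimize for subjective preferences rather than only verifiable answers.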

I was curious if AI can coherently code-switch between a ridiculous number of languages, so I gave Claude Opus 4.5 an excerpt from one of our past chats and asked it to try. After some iteration, I was pretty impressed with the results! Even though the translation uses 26 different languages, switching practically every word and using some non-English word ordering, ChatGPT was able to translate the text back to English almost perfectly.

Here's the opening line of the text I used (copied from one of my past chats with Claude), translated into this multi-lan...

People concerned about AI safety sometimes withhold information or even mislead people in order to prevent ideas from spreading that might accelerate AI capabilities. While I think this may often be necessary, this mindset sometimes feels counterproductive or icky to me. Trying to distill some pieces of this intuition...

- AI safety people are all trying to achieve the same goal (stopping AI from destroying the world), whereas individual AI capabilities researchers largely benefit from keeping their work secret until it's published to avoid being "scooped." P