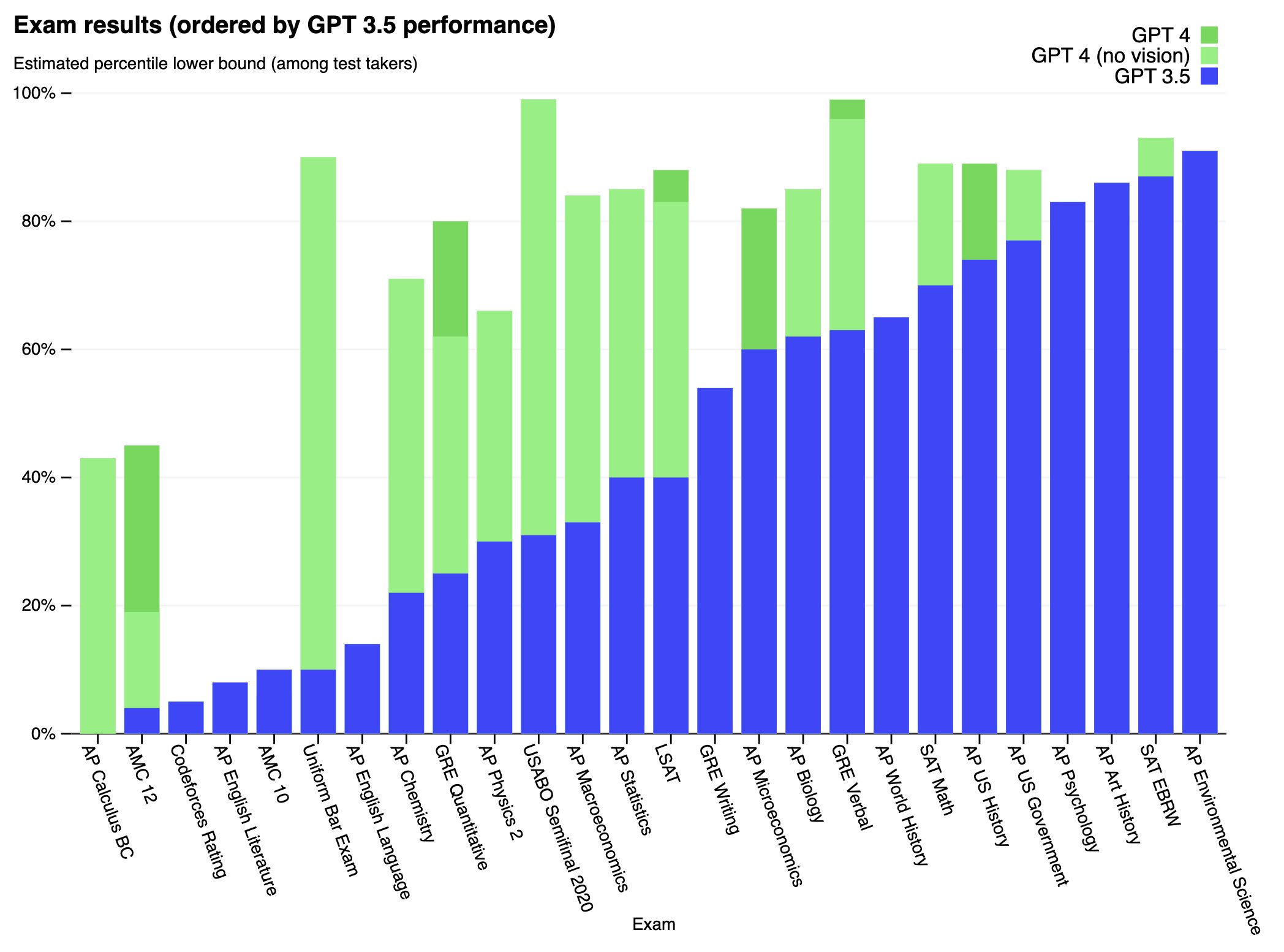

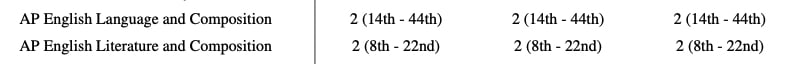

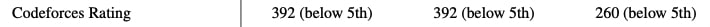

We’ve created GPT-4, the latest milestone in OpenAI’s effort in scaling up deep learning. GPT-4 is a large multimodal model (accepting image and text inputs, emitting text outputs) that, while worse than humans in many real-world scenarios, exhibits human-level performance on various professional and academic benchmarks.

Full paper available here: https://cdn.openai.com/papers/gpt-4.pdf

Sorry for the late reply, but yeah, it was mostly vibes based on what I'd seen before. I've been looking over the benchmarks in the Technical Report again though, and I'm starting to feel like 500B+10T isn't too far off. Although the language benchmarks are fairly similar, the improvement in mathematical capabilities over the previous SOTA is much larger than I first realised, and it seems to match a model of that size, judging by how the conventionally trained PaLM and its derivatives perform.