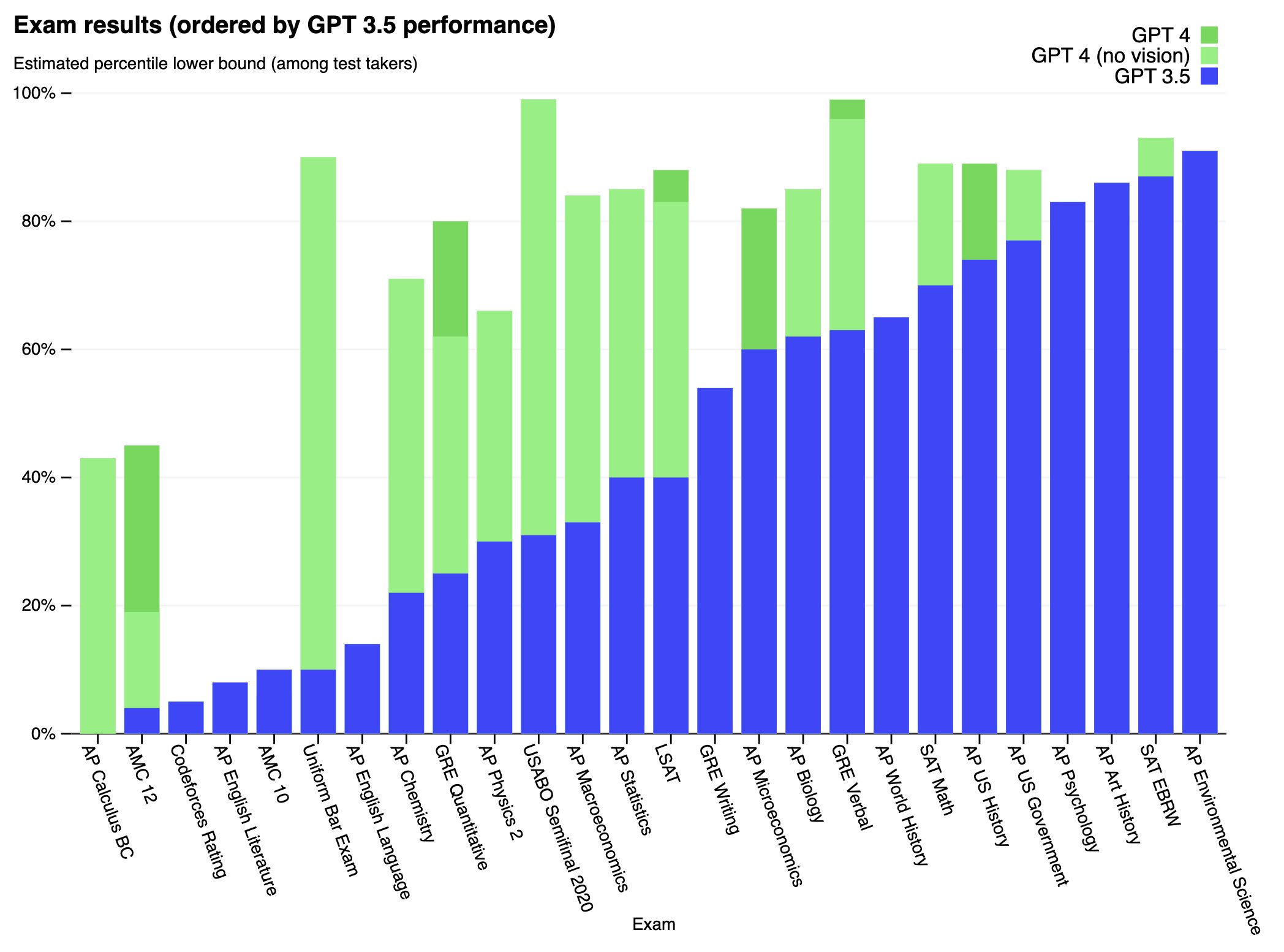

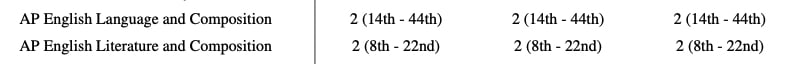

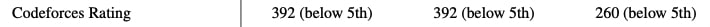

We’ve created GPT-4, the latest milestone in OpenAI’s effort in scaling up deep learning. GPT-4 is a large multimodal model (accepting image and text inputs, emitting text outputs) that, while worse than humans in many real-world scenarios, exhibits human-level performance on various professional and academic benchmarks.

Full paper available here: https://cdn.openai.com/papers/gpt-4.pdf

What??? This is so weird and concerning.

It seems you and Paul are correct. I still think this suggests that there is something deeply wrong with RLHF, but less in the “intentionally deceives humans” sense, and more in the “this process consistently writes false data to memory” sense.