How is it that someone like Graham could hold so strongly and express so eloquently the opinion that the most important lesson to unlearn is the desire to pass tests, and then create an institution like Y-Combinator?

An alternative hypothesis is that Graham has a bunch of tests/metrics that he uses to evaluate people on, and those tests/metrics work much better when people do not try to optimize for doing well on them (cf. Goodhart's law). He may not be consciously thinking this, but his incentives certainly point in the direction of writing an essay like the one he wrote.

From my perspective, both you and Graham are failing to address or even mention the root cause of the problem, namely that value differences and asymmetric information imply that a utopia where people don't give tests and people don't try to pass or hack tests can't exist; tests are part of a second-best solution, and therefore so is the desire to pass/hack tests.

Your explanation is instead:

There’s a way to succeed (i.e. become a larger share of what exists) through production, and a way to succeed through purely adversarial (i.e. zero-sum) competition. These are incompatible strategies, so that productive people will do poorly in zero-sum systems, and vice versa. The productive strategy really is good, and in production-oriented contexts, a zero-sum attitude really is a disadvantage.

I don't know how to make sense of this, because it's really far from anything I've learned from economics and game theory. For example, what are "zero-sum systems" and "production-oriented contexts"? What is a "zero-sum attitude", and what causes people to have a "zero-sum attitude" even in a "production-oriented context"? Why is a "zero-sum attitude" a disadvantage in "production-oriented contexts"?

He then sets up an institution optimizing for “success” directly, rather than specifically for production-based strategies. But in the environment in which he’s operating, adversarial strategies can scale faster.

Given that "zero-sum attitude" is a disadvantage in "production-oriented contexts", this seems to be saying that Graham failed to set up a "production-oriented context" and instead accidentally set up a "zero-sum system". Is that right? How is one supposed to go about setting up a "production-oriented context" then? Is it possible to do that without giving tests or using metrics that could be gamed by others?

Y Combinator could very easily (if not for the apparent emotional difficulties mentioned in Black Swan farming) have instead been organized to screen for founders making a credible effort to create a great product, instead of screening for generalized responsiveness to tests.

I believe Ben is distinguishing between tests-that-are-useful-because-of-what-the-test-administrators-can-give-you, vs honest assessments of the value of something.

For example, as a VC you could genuinely not care about what clothes people wear, or you could loudly announce that you don't care while actually assessing applicants based on their visual resemblance to the ideal founder in your head. Ability to guess your clothing password is associated with ability to guess passwords in general, which is associated with success in general, so even an arbitrary clothing test is somewhat predictive of success. What it's not predictive of is the value a product will create or the marginal difference this funding will make, or success in a world that's based on these things instead of password guessing.

An alternative hypothesis is that Graham has a bunch of tests/metrics that he uses to evaluate people on, and those tests/metrics work much better when people do not try to optimize for doing well on them

Isn't it a bit odd that PG's secret filters have the exact same output as those of staid, old, non-disruptive industrialists?

I.e., strongly optimizing for passing the tests of <Whitebread Funding Group> doesn't seem to hurt you on YC's metrics.

I'm not sure whether that's true. One way to optimize for the tests of <Whitebread Funding Group> might be to go to a convention where you can get a chance to pitch yourself to them in person. There are also other ways you can spend a lot of time getting someone to endorse you to <Whitebread Funding Group>.

Time spent that way is time not spent getting clear about vision, building product, or talking to users, and that will count against you when applying to a VC.

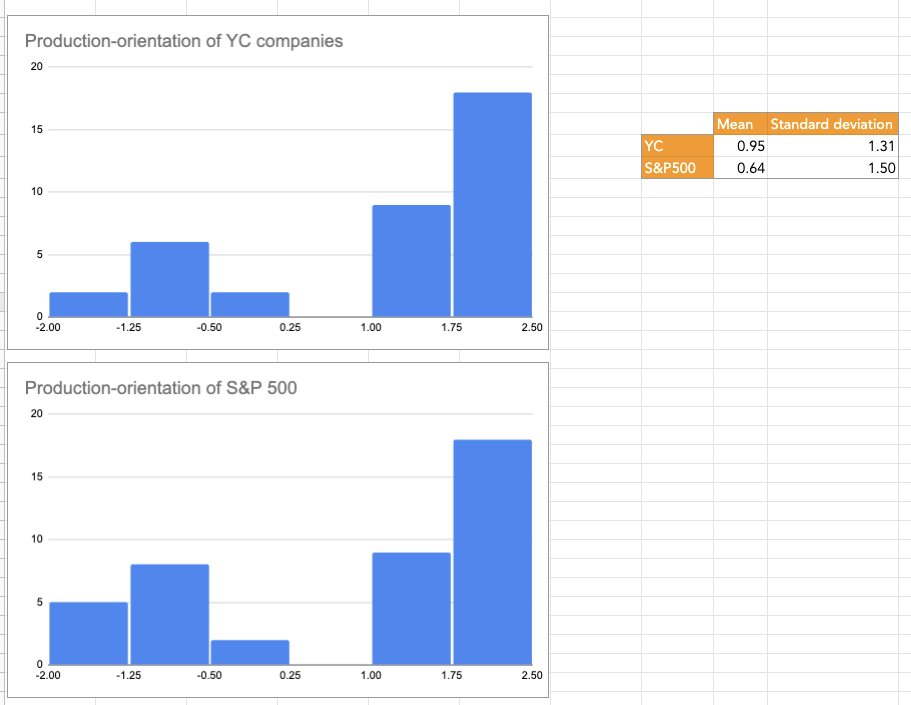

I wrote up a longer, conceptual review. But I also did a brief data collection, which I'll post here as others might like to build on or go through a similar exercise.

In 2019 YC released a list of their top 100 portfolio companies ranked by valuation and exit size, where applicable.

So I went through the top 50 companies on this list, and gave each company a ranking ranging from -2 for "Very approval-extracting" to 2 for "Very production-oriented".

To decide on that number, I asked myself questions like "Would growth of this company seem cancerous?" and "Would I reflectively endorse using this product?"

Companies that scored highly include Doordash, Dropbox and Gusto (all 2's), and companies that score low include Scale.com (which builds tooling to speed up AI research) and Twitch (-2 and -1).

For comparison, I also did the same exercise with the top 50 S&P 500 companies by market cap, with high-scoring ones including Microsoft and Visa, and low-scoring ones including Coca-Cola and Salesforce.

This scale is Very Made-up and Maybe Useless. But, if nothing else, it seemed like a useful way to get grounded in some data before thinking further about the post.

Overall, the distributions ended up very similar, though YC did come out with a higher mean, mostly driven by fewer negative tail companies.

Spreadsheet here.

I did the first 20 from each column of your spreadsheet, and got a different result. I hid your answers before writing mine. My rubric was different; instead of focusing on social value, I focused on what type of business relations a company has. You can see my answers here. These are all very noisy, and I'm not entirely confident I didn't have rating-drift between when I did the YC ones and when I did the S&P ones, but I got a slightly higher score for S&P companies.

In my rubric, things that mean low scores:

- You have a Compliance department

- A significant portion of your business is oriented towards appeasing gatekeepers (as opposed to there being no relevant gatekeepers, or fighting them not on their normal terms)

- The price is not disclosed until you talk to a salesperson

- You need your business partners but they don't need you

Things that mean high scores:

- You are creating a new market

- Dealing with gatekeepers is not a major concern

- Your customers take your price or leave it

- You do not operate a call center

- Your business partners need you but you don't need them

We had maximally-different scores (2 vs -2) for Gusto, Microsoft, Facebook, Visa, Mastercard and PayPal. The correlation between our scores was 0.6 for the YC companies, -0.16 for the S&P 500 companies.
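For anyone who wants to repeat the comparison between two raters, here is a minimal sketch of the correlation calculation. I'm assuming a plain Pearson correlation (the comment above doesn't say which kind was used), and the two score lists are made-up placeholders, not the actual spreadsheet values.

```python
from math import sqrt
from statistics import mean


def pearson(xs, ys):
    """Pearson correlation between two equal-length lists of scores."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of the same length, at least 2 items")
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one rater never varies")
    return cov / sqrt(var_x * var_y)


# Hypothetical scores on the -2..2 scale, one entry per company, same order.
rater_a = [2, 2, 1, 0, -1, -2]
rater_b = [2, -2, 1, 1, 0, -1]

print(f"correlation between raters: {pearson(rater_a, rater_b):.2f}")
```

With only 20 companies per column and a five-point scale, a handful of maximally-different scores can move this number a lot, so it is probably best read as a rough indicator.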

Nice, this is interesting!

You need your business partners but they don't need you

I don't understand what this means and what it's measuring.

This was a great idea, but I think the spreadsheet fails on two fronts. First, it's measuring the end product rather than the founders and how they operate and attempt to scale, which I believe is the primary thing Benquo is talking about here. Second, if I ranked these companies, I don't think there would be much correlation with these rankings.

In the examples from the comment, and judging purely on nature of product since I don't know the founders or early histories much, I'd have had Twitch as positive while I had Doordash as negative, I'd agree with Dropbox and Gusto, and Scale is a weird case where we think the product is bad if it is real but that's orthogonal to the main point here.

Looking at the S&P 500 I see the same thing. Amazon at 0 seems insane to me (I'd be +lots), and McDonald's at -2 even more so, especially in its early days (The Founder is a very good movie about its origins).

When I read this essay in 2019, I remember getting the impression that approval-extracting vs production-oriented was supposed to be about the behavior of the founders, not the industry the company competes in.

This was a great idea!

Companies that scored highly include Doordash, Dropbox and Gusto (all 2's), and companies that score low include Scale.com and Twitch (-2 and -1).

I can't quite tell why you think Twitch is bad. It is subject to network effects, kind of a social media company, is that why? And I don't know what Scale.com is other than some AI company.

For many of these companies I feel like my opinion changes as they become monopolies. For example, we use Gusto at LW, it's great. That said, if it became the primary company people used in a country to interact with a part of government, then I could imagine Gusto working with that government to extract money from people in some way. So I like it to a point, then suddenly I might really not like it.

Overall, the distributions ended up very similar, though YC did come out with a higher mean, mostly driven by fewer negative tail companies.

On the topic of tails, I wonder if your distribution would've come out differently had the scale been -10, -1, 0, 1, 10.

I can't quite tell why you think Twitch is bad. It is subject to network effects, kind of a social media company, is that why? And I don't know what Scale.com is other than some AI company.

Scale's mission is something like accelerating AI progress, and they have no safety department. So ¯\_(ツ)_/¯ For Twitch I think a bunch of good stuff happens there (chess streamers, Ed Kmett streaming Haskell, or just great gamers), but they're also in a domain where clickbait and similar Goodharting dynamics are strong, and in the worlds where it gets really big I expect those to dominate.

On the topic of tails, I wonder if your distribution would've come out differently had the scale been -10, -1, 0, 1, 10.

I think I would rarely have assigned 10s, due to it being a complex question and this just being a very rough draft.

Another interesting question is whether weighing the rankings by market cap would have made a difference. (But YC didn't make valuations available in their data, so it would require ~30 min of data entry.)
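A rough sketch of what that weighting would look like, in case someone does the data entry. The scores and market caps below are hypothetical placeholders, not numbers from the spreadsheet or from YC.

```python
def weighted_mean(scores, weights):
    """Mean of scores where each score counts in proportion to its weight."""
    if len(scores) != len(weights):
        raise ValueError("scores and weights must have the same length")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(s * w for s, w in zip(scores, weights)) / total


# Hypothetical example: three companies scored on the -2..2 scale,
# with made-up market caps in billions of dollars.
scores = [2, 1, -2]
market_caps = [10.0, 5.0, 85.0]

unweighted = sum(scores) / len(scores)
weighted = weighted_mean(scores, market_caps)

print(f"unweighted mean: {unweighted:.2f}")
print(f"cap-weighted mean: {weighted:.2f}")
```

In this toy example the single large low-scoring company drags the weighted mean well below the unweighted one, which is the kind of shift the weighting could reveal or rule out.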

The kind of "decisiveness" Altman is talking about doesn't involve making research or business decisions that matter on the scale of months or weeks, but responding to emails in a few minutes. In other words, the minds Altman is looking for are not just generically decisive, but quickly responsive - not spending long slow cycles doing a new thing and following their own interest, but anxiously attentive to new inputs, jumping through his hoops fast.

I think this proves too much. That is, it also seems like this kind of decisiveness would be helpful for a movie director, where there are thousands of details, and a core task of being a director is when an underling presents you with option A or option B, you pick the option that better suits the project's gestalt, and then the next underling presents you with the next choice. There are also decisions that happen at the scale of months or weeks, and the director needs to be able to make those decisions too. But inability to make snap judgments on problems that only require snap judgments means they'll spend way too much of their time on trivialities and their underlings won't have a rapid feedback loop for iteration, which is poison for a movie and death for a startup.

Our hypothetical movie director doesn't have to be motivated by responsiveness-to-authority; they don't have to have a desire to pass tests. They quite possibly are following their own interest. They just have to make a lot of decisions rapidly in response to input.

Now, quite possibly the thing that's going on is the 'red-haired founder' thing, where if you know future investors won't invest in red-haired founders then you shouldn't either, unless you don't think the company needs any additional investment rounds to survive, and so he's just checking whether or not the founders have the desire-to-pass-tests and skills such that future investors will be interested, and the basic story of the post goes through (where they advertise as being about production, but are also filtering heavily on approval extraction).

But the basic effect that rhetorical move had on me was to make me suspect that you're not considering the alternative hypotheticals that seem relevant to me, or think they aren't worth including in the post. Like, in the hypothetical world where normal startups and VCs are 20% about production and 80% about whatever other fluff (both waste and predation), and Y Combinator is 40% about production and 60% about whatever other fluff, would you be writing about how YC still has 60% fluff, or about how there's twice as much production? [Numbers, of course, chosen out of thin air to serve as an example; I'm not sure where the actual fraction is, not paying much attention to YC.]

Both options serve valid roles, but it seems important to advertise which sort of an analysis you're doing, and I think this connects to complaints about word choice of 'apex predator' and 'scam' and so on; if 'scam' normally means "less focused on production than average" then using it to mean "some focus devoted to anything besides production" will cause predictable misunderstandings. [Noting that, in my hypothetical example YC is still more non-production than production, but the population standard isn't '50%'.] But also there are cases where 'what the normal population does' really isn't good enough, and you have to hit a much higher standard; but it's not obvious to me yet that this is such a case.

I didn't use the word scam in the post. Cousin_it's comment was defending YC by saying, substantively, don't worry, it's just a pretend gatekeeper, so I tried to make that more explicit. How, precisely, does that not constitute being a scam?

Are we supposed to be at war with lions now? What's wrong with apex predators?

I didn't use the word scam in the post.

Yeah, I was referring to your reply to cousin_it.

Cousin_it's comment was defending YC by saying, substantively, don't worry, it's just a pretend gatekeeper, so I tried to make that more explicit. How, precisely, does that not constitute being a scam?

I interpreted that exchange quite differently. I understood you as saying, "look, the people that want to pass tests and seek approval, those people are going to flock to YC, because it's at the top of a mountain that people who want to pass tests will seek to climb. The result will be that anyone who fully buys their advertising will be disappointed upon arrival (or too unfamiliar with what actual production looks like to notice the difference)." It reminds me of a friend who went to Harvard, thinking they would meet original thinkers and deep scholars there, and being distressed by how much their fellow students were people who wanted to pass tests and end up in finance or management consulting.

Further, you point to the ways in which YC is explicitly a gatekeeper (for entry into the YC keiretsu / alumni community), which is relevant to the broader question of "is YC flooded by approval-seeking applicants, or is YC seeking out the true producers?", and also relevant to the question of "are YC companies doing real production?".

cousin_it replied on the question of "does a startup need to go to YC in order to succeed?", to which the answer is "no," because success still exists outside of the YC keiretsu. That is, if you want to practice medicine in the US there is a hard gatekeeper of whether or not you have a valid medical license, but if you want to start a company in the Bay there is no such hard gatekeeper. That is, YC's gatekeeping is in no way 'pretend', you're just disagreeing about what fence the gate is in.

Are we supposed to be at war with lions now? What's wrong with apex predators?

I read some disapproval into your choice of 'apex predator' instead of 'paragon' or 'zenith' or so on; perhaps that isn't what you intended to communicate. [Like, I've been using "fluff" to refer to "non-production", but some central elements of "non-production" are predation and conflict and waste and so on, which "fluff" is an overly positive word for, such that I wasn't willing to use it the first time without calling out those examples.]

---

To move towards what I suspect is the center of the disagreement, my current best guess of what's going on is that YC is trying to do 'production in 2019', which is not pure production and nevertheless has some real production. It's not robust to Goodharting on growth and the alumni network likely artificially props up early companies whose products get an early in from passing through an interview that's only somewhat related to quality. It is sometimes accepting the sort of anxious test-passer that you're talking about here, and Goodharting on growth might be a product of this (where the only way to get an anxious test-passer to think about making real products is to get them to focus on the growth metric).

But also they do have some of the 'real production' thing, and they're not McKinsey and able to make use of any anxious test-passer; they have to point towards the 'real production' thing in order to get the more useful sort of founder, and if someone sufficiently manages to pass that test through anxious perfectionism, mission fucking accomplished.

[Interestingly, this take reminds me of the Confucian focus on ritual; "yeah, they're not going to understand what it's for, but it'll help, and it's by just doing the ritual that they eventually figure out what it's for, with the side benefit of not causing problems in the meantime."]

The "false advertising" charge, as I understand it, is that 'production in 2019' is not the right goal, and a different thing is, and this distinction is worth making because it's correct. [I noticed that I really wanted to put an appeal to consequences after the because, as I think it makes the statement more forceful / relevant, but it wasn't how my inner Benquo would put it; the point isn't that people will be confused and that's bad, the point is that it's not correct.]

Altman's responsiveness test might be more useful if it measured a founder's reply time to an underling rather than to the guy holding the purse strings/contact book.

Cynical view of cynics: Some people are predisposed to be cynical about everything, and their cynicism doesn't provide much signal about the world. Any data can be interpreted to fit a cynical hypothesis. What distinguishes cynics is they are rarely interested in exploring whether a non-cynical hypothesis might be the right one.

I'm too cynical to do a point by point response to this post, but I will quickly say: I have a fair amount of startup experience, and I've basically decided I am bad at startups because I lack the decisiveness characteristic Sam says is essential. The stuff Sam writes about startups rings very true for me, and I think I'd be a better startup founder if I had the disposition he describes, but unfortunately I don't think disposition is a very easy thing to change.

While responsiveness is doubtless a valid test of some sort of intellectual aliveness and ability, it could easily take hours or days to integrate real, substantive new information; in ten minutes all one may be able to do is perform responsiveness.

I get what you're saying. I also think that there's something to be said about how much you can read about someone very quickly. I recall Oli writing a post proposing to test the hypothesis that we should be able to estimate IQ from a short conversation with someone. I agree there are many important things missing in the above test, but note that Altman's belief is explicitly that he does not know what the next company should look like, what area it should be in, and what problem it should solve, and so I think he mostly thinks that good people need to be very reactive to their environment, which is the sort of thing I think you could check quickly.

In other words, the people who best succeed at Y Combinator's screening process are exactly the people you'd expect to score highest at Desire To Pass Tests.

It was you over here who pointed out that such people, who have the slack required to be actually moral, would perhaps be the best decision makers. I agree it's sad, but just because it's politically convenient for some people doesn't prove it's false.

ALTMAN: I think it’s correlated with successful founders. It’s fun to have numbers that go up and to the right.

I think that it's fundamentally hard to distinguish TDTPT from being good at optimisation, both of which involve pushing a metric really hard, and just because something sounds like the former doesn't mean it's definitely not the latter. I think surface features can't be the decider here.

Paul Graham cheerfully acknowledged that, by instilling message discipline, “we help the bad founders look indistinguishable from the good ones.”

When I was doing the pre-reading for this post, no line shouted out at me more as "Benquo is going to point at this in his post" than this one.

Treatment Effects

I don't know quite what this section is about. I agree that insofar as money doesn't correlate with human values, Altman's work will produce goodharting. I agree some of the metrics they work on are fake. But I think that... optimising hard for metrics to the ignorance of many social expectations is important for real work, as people often don't do anything because they're optimising for being socially stable to the loss of everything else. I agree that Ramen profitability is a pretty grim psychological tool for doing this, but it's not obvious to me that people shouldn't put everything on the line more often than they do, to get to grips with real risk.

Graham has a natural affinity for production-based strategies which allowed him to acquire various kinds of capital. He blinds himself to the existence of adversarial strategies, so he's able to authentically claim to think that e.g. mean people fail - he just forgets about Jeff Bezos, Larry Ellison, Steve Jobs, and Travis Kalanick because they are too anomalous in his model, and don't feel to him like central cases of success.

I think there's something to this. I remember reading Graham as a teenager and thinking "This is how optimal companies work" and then learning that not all organisations are just "bad startups", but that there's a lot of variation and lots more complicated things going on, especially of the adversarial kind, which YC doesn't really talk about publicly except to say "Ignore it". It does seem like Graham somehow is very blinded to things that aren't production-based startups, and doesn't talk about them very well. Actually, I think this is the best paragraph in your essay.

Here's the thing, though. Graham knows he's doing the wrong thing. He confessed in Black Swan Farming that even though doing the right thing would work out better for him in the long run, he just isn't getting enough positive feedback, so it's psychologically intolerable:

I'm surprised you didn't include the other quote by him on this... I'm having a hard time finding it, I think I read it while doing all the required reading for this essay. Anyway, I distinctly recall him saying that one of the reasons he can't have the supermajority of companies fail to get funding on Demo Day, is because it would demotivate them all and stop them applying to YC. Alas, I can't find the quote.

--

Doing the required reading was great, the TDTPT essay was brilliant, and I got a bunch of your taste from reading it all myself and then only afterwards seeing which parts you pulled out. I think I wouldn't have noticed the connection you make in "A High Health Score is Better Than Health" myself, and I am glad you phrased that idea about Graham's blindspot so precisely, which I'll have to think about more.

Just to state it aloud, the main hypothesis that occurred to me when doing the pre-reading and thinking about why YC would be a scam (to use a term from the comments below), is that after you get in:

- They ensure that you have massive (artificial) growth by hooking you into their massive network of successful companies.

- They destroy information for other funders on demo day by making the good companies look exactly as good as the bad.

This is overall a system that gains a lot of power and resources while wasting everyone else's time and attention, exerting major costs on the world globally. "Apex predator" sounds like an appropriate term.

Altman's belief is explicitly that he does not know what the next company should look like, what area it should be in, and what problem it should solve, and so I think he mostly thinks that good people need to be very reactive to their environment

I expect this to be good at many things - he's probably not wrong that Napoleon would make a good YC founder by his standards - but I expect it's not the mindset that can develop substantive new tech. And sure enough, it seems like substantive new tech is mostly not being developed.

While Graham evaluates startups based on how fast they answer his emails, that's not an evaluation that's important for joining VC or for getting a good demo day evaluation.

When it comes to the written application, Graham seems to believe that spending time on doing it well helps the startup, because it helps them to clarify their vision.

It would be easy for Graham to set up a system where email response times factor into admission decisions but he decided against that and instead decided to use a system where outside of the interview he believes the tasks he gives the startup are helpful for the startup.

When it comes to the actual interview, that's a test, but it's not clear that it leads to useless preparation activities either.

If you spend a lot of time practicing your VC interview, that will help you hone your ability to tell people about your vision and that's important for hiring, selling the product and fundraising.

(seems like HC's post is the most "meta" part of the discussion. Could we maybe talk about it, instead? That thing where in the world with AI, the concepts of a job and job satisfaction will undergo terrible change?)

In the great puzzle of what's going wrong with civilization, I think this is a key piece. And it's a piece at risk of having our collective attention slide off it, and slipping through the cracks; awareness of approval-process distortions tends to do that.

The basic claim of this post is that Paul Graham has written clearly and well about unlearning the desire to do perfectly on tests, but that his actions are incongruous, because he has built the organization that most encourages people to do perfectly on tests.

Not that he has done no better – he has done better than most – but that he is advertising himself as doing this, when he has instead probably just made much better tests to win at.

Sam Altman's desire to be a monopoly

On this, the post offers quotes as evidence that:

- YC is a gatekeeper to funding and a broader network of valuable supplies

- Sam Altman ambitiously wants YC to be the primary funder globally of good companies (and this could imply the sole gatekeeper)

YC creating tests

The post says that the natural way to find such people would be proactive talent scouting rather than creating a formal test, and judges YC for not doing this, and claims that the test filters for people who are obsessed with passing tests.

Here are the points made by the post, in order:

- One factor they care about is extreme responsiveness. The post points out that if you are to goodhart on this metric strongly enough, it will become 'performing' responsiveness.

- The post also quotes the YC CEO (Sam Altman) saying that the primary type of person they select for is smart, upper-middle-class people, which is the set the post thinks is most likely to have the desire to do perfectly on tests.

- The post also quotes Altman talking about the desire to maximise numbers regarding health, and then quotes Hotel Concierge talking about a time when maximising the numbers was to the clear detriment of the reality and their personal health, suggesting that Altman is selecting for people who maximise at the expense of reality rather than in accordance with it.

- The next section is about how YC forces the founders in their program to do this, to be poor and to make their companies profitable enough to earn food and living.

- The post points out the obsession with growth can be goodharted on in many ways, and points out that one company advertised "fifty-per-cent word-of-mouth growth" which sounds like a straightforward nonsense metric unrelated to building a great product, created by someone who wanted to show growth.

If I were to abstract this a bit, I'd say that if you goodhart on YC's metrics and tests, you will be able to pass them yet keep the desire to do perfectly on tests, and there is suggestive evidence that this has occurred.

I think they should be praised for having built better tests. Much of society is about building better metrics to optimize for, and then when we have goodharted on them, learning from our mistakes and making better ones.

Related: I am reminded of Zvi's Less Competition, More Meritocracy. That post talks about how, if the pool of selection gets sufficiently big, the people being selected on are encouraged to take riskier strategies to pass the filters, and the selection process breaks down. It seems plausible to me that YC substantially changed as an organism at a certain level of success, where initially nobody cared and so the people who passed the tests were very naturally aligned people (the founders of Airbnb, DoorDash, Stripe) but as the competition increased, the meritocracy decreased.

Psychologizing Paul Graham

The post gives some arguments psychologizing Paul Graham:

- The post says that Paul Graham has deceived himself on whether mean people fail, because many mean people succeed.

- It also points out that Paul Graham does not follow his own advice when it comes to funding companies, because (as he says) he would find it psychologically intolerable.

It argues that "this is a case of fooling oneself to avoid confronting malevolent power".

I think that this has some validity. My sense is that Paul Graham has made a lot of success out of production-based strategies, and has somewhat blinded himself to the existence of adversarial strategies. He seems to me much less politically minded than other very productive people like Bezos and Musk, who I think have engaged much more directly with politics and still succeeded.

I also think that Sam Altman's expressed desire to be something of a monopoly is not something that Paul Graham has engaged with in his writing, and that this would bring with it many political and coordination issues he has not addressed and that could be harmful for the world.

Conclusion

I don't think it's bad for YC to have tests, even tests that are goodharted on to an extent, but I don't think the post is actually that interested in YC. It's more interested in the phenomenon of action being incongruous with speech.

The post is a ton of primary sources followed by a bit of psychologizing of Paul Graham. It's often impolite to publicly psychologize someone. I generally try to emphasize lots of true and positive things about the person when I do so, to avoid the person feeling attacked, but this post didn't choose to do that, which is a fine choice. Either way, I think it is onto something when it talks about Paul Graham being somewhat blind to adversarial and malevolent forces that can pass his tests. If I wanted to grade Paul Graham overall, I feel like this post is failing to properly praise him for his virtues. But the post isn't trying to grade him overall, instead its focus is on the gap between his speech and his actions, and analyzes what's going on there.

I do feel like there's much more to be said there, many more essays, but the post does say quite valuable things about this topic. I feel like I'd get a lot of returns from this post being more fleshed out (in ways I discuss below) and elaborating more on its ideas. In its current form, I'll probably give it a +1 or +2 in the review. I generally found it hard to read, but worthwhile.

Further work

Here are some more questions I'd like to see discussed and answered:

- How did YC change over time with respect to optimizing for production-strategies and adversarial ones?

- (I can imagine the answers here being (1) whenever YC became successful enough for the news media to notice it and make it prestigious, and (2) when Sam Altman became president.)

- How much do the more recent YC companies care about production vs growth?

- Seems like the best YC companies are the early ones, but they naturally have an age-advantage. I've heard rumours but would be interested in more evidence here.

I'd also be interested in a more fleshed out version of much of the discussion in this post. "This is a case of fooling oneself to avoid confronting malevolent power" -> what are other cases in the modern world, and what are some of the forces at play here? "If, to participate in higher growth rates, you have to turn into something else, then in what sense is it you that's getting to grow faster?" -> this is a great point, and I'm interested in where exactly things like YC made the decision to turn into something else, and what that looked like. "He then sets up an institution optimizing for "success" directly, rather than specifically for production-based strategies." -> I'd be interested in a more detailed sketch of what that organisation would look like.

I also think that there'd be some good work in tying this into the ontology of Goodhart's law.

This part is a little baffling to me:

For better or worse that's never going to be more than a thought experiment. We could never stand it. How about that for counterintuitive? I can lay out what I know to be the right thing to do, and still not do it. I can make up all sorts of plausible justifications. It would hurt YC's brand (at least among the innumerate) if we invested in huge numbers of risky startups that flamed out. It might dilute the value of the alumni network. Perhaps most convincingly, it would be demoralizing for us to be up to our chins in failure all the time. But I know the real reason we're so conservative is that we just haven't assimilated the fact of 1000x variation in returns.

We'll probably never be able to bring ourselves to take risks proportionate to the returns in this business.

So I get why Y Combinator can't do this, but the "we" seems more inclusive here than just the YC team. I think this because in most other instances of not knowing how or being unable to do something, he troubles to suggest a way someone else might be able to.

If people are prepared to invest a lot of money in high frequency trading algorithms which are famously opaque to the people providing the money, or into hedge funds which systematically lose to the market, why wouldn't someone be willing to invest in an even larger number of startups than Y Combinator?

If we follow the logic of dumping arbitrary tests, it feels like it might be as direct as configuring a few reasoned rules, using a standardized equity offer with standardized paperwork, and then just slowly tweaking the reasoned rules as the batch outcomes roll in.

This post makes assertions about YC's culture which I find really fascinating. If it's a valid assessment of YC, I rather expect it to have broad implications for the whole capitalist and educational edifice. I've found lots of crystallized insight in Paul Graham's writing, so if his project is failing in the dimensions he's explicitly pointed out as important this seems like critical evidence towards how hard the problem space really is.

What does it mean for the rationalist community if selecting people for quickness of response correlates with anxiety or test-constrained thinking? Should the LW review cycles be more like 5 years long? How much value do selection effects leave on the table, from LW being mostly in English and white male geek coded?

If I had more time I'd review the linked documents to see how congruent Benquo's summary is.

I've just realized a potential connection upon remembering something from a different Paul Graham essay.

From this post:

Graham has a natural affinity for production-based strategies which allowed him to acquire various kinds of capital. He blinds himself to the existence of adversarial strategies, so he's able to authentically claim to think that e.g. mean people fail….

From “Lies We Tell Kids”:

Innocence is also open-mindedness. We want kids to be innocent so they can continue to learn. Paradoxical as it sounds, there are some kinds of knowledge that get in the way of other kinds of knowledge. If you're going to learn that the world is a brutal place full of people trying to take advantage of one another, you're better off learning it last. Otherwise you won't bother learning much more.

Very smart adults often seem unusually innocent, and I don't think this is a coincidence. I think they've deliberately avoided learning about certain things. Certainly I do. I used to think I wanted to know everything. Now I know I don't.

So he's already written something similar out explicitly, at least. And juxtaposing those feels like it bends the framing away from the blind spot being a natural accident of affinity (which I don't think is explicit in this post but which is how my mind tends to treat it by default). I'm not sure what to make of this, but it feels interesting.

I found this perspective quite interesting, and would like to see it reviewed.

This matches the impression I’ve gotten from most of the people I’ve talked to about their startups, that Y-Combinator is singularly important as a certifier of potential, and therefore gatekeeper to the kinds of network connections that can enable a fledgling business—especially one building business tools, the ostensible means of production—get off the ground.

Maybe PG could reply that if your startup is good, YC isn't singularly important to it, so there's no point getting angry about gatekeeping?

Yeah, it would be pretty cool for PG to more overtly admit that YC is a scam. As it is, it's not actually harmless, since it does attract a lot of attention from people who think they're paying attention to a nonscam.

Either it matters a lot for a good startup’s success, or it doesn’t.

If it does, then the gatekeeper narrative is true. If it doesn’t, then how exactly isn’t it a scam?

This is such an obvious point that I’m worried that I’m confused about what’s really going on in this conversation.

Improving a startup's chance of success, which can also be improved in other ways, doesn't make YC a gatekeeper or a scam.

The way I understand the objection is that YC promotes "building great products", which attracts (a lot of) certain kinds of founders, but in fact YC is optimizing for something else (primarily described in Black Swan Farming, confirmed by other sources). I believe they are quite value-additive to the companies they accept, but attract more founders than if they were "honest about their optimization function", where some founders could have been better off engaging with other VCs on possibly better terms.

Paul Graham has a new essay out, The Lesson to Unlearn, on the desire to pass tests. It covers the basic points made in Hotel Concierge's The Stanford Marshmallow Prison Experiment. But something must be missing from the theory, because what Paul Graham did with his life was start Y Combinator, the apex predator of the real-life Stanford Marshmallow Prison Experiment. Or it's just false advertising.

As a matter of basic epistemic self-defense, the conscientious reader will want to read the main source texts for this essay before seeing what I do to them:

The first four are recommended on their own merits as well. For the less conscientious reader, I've summarized below according to my own ends, biases, and blind spots. You get what you pay for.

The Desire to Pass Tests hypothesis: a Brief Recap

The common thesis of The Lesson to Unlearn and The Stanford Marshmallow Prison Experiment is:

Our society is organized around tests imposed by authorities; we're trained and conditioned to jump through arbitrary hoops and pretend that's what we wanted to do all along, and the upper-middle, administrative class is strongly selected for the desire to do this. This is why we're so unhappy and so incapable of authentic living.

Graham goes further and points out that this procedure doesn't know how to figure out new and good things, only how to perform for the system the things it already knows to ask for. But he also talks about trying to teach startup founders to focus on real problems rather than passing Venture Capitalists' tests.

The rhetoric of Graham's essay puts his young advisees in the role of the unenlightened who are having a puzzling amount of trouble understanding advice like "the way you get lots of users is to make the product really great." Graham casts himself in the role of someone who has unlearned the lesson that you should just try to pass the test of whoever you're interacting with, implying that the startup accelerator (i.e. combination Venture Capital firm and cult) he co-founded, Y Combinator, is trying to do something outside the domain of Tests.

In fact, Graham's behavior as an investor has perpetuated and continues to perpetuate exactly the problem he describes in the essay. Graham is not behaving exceptionally poorly here - he got rich by doing better than the norm on this dimension - except by persuasively advertising himself as the Real Thing, confusing those looking for the actual real thing.

(The parallels with Effective Altruism, and LessWrong, should be obvious to those who've been following.)

Y Combinator is the apex predator of the real-life Stanford Marshmallow Prison Experiment

If you know anyone in the SF Bay Area startup scene, you know that Y Combinator is the place to go if you're an ambitious startup founder who wants a hand up. Here's a relevant quote from Tad Friend's excellent New Yorker profile of Sam Altman, the current head of Y Combinator:

This matches the impression I've gotten from most of the people I've talked to about their startups, that Y Combinator is singularly important as a certifier of potential, and therefore gatekeeper to the kinds of network connections that can enable a fledgling business - especially one building business tools, the ostensible means of production - get off the ground.

When interviewed by Tyler Cowen, Altman expressed a desire to move from being a singularly important gatekeeper to being the exclusive gatekeeper:

Given Graham's values, one might have expected a sort of proactive talent scouting, to find people who've deeply invested in interesting ventures that don't fit the mold, and offer them some help scaling up. But the actual process is very different.

Selection Effects

Y Combinator, like the prestigious colleges Graham criticizes in his essay, has a formal application process. It receives many more applications for admission than it can accept, so it has to apply some strong screening filters. It seems to filter for people who are anxiously obsessed with quickly obtaining the approval of the test evaluators, who have been acculturated into upper-middle-class test-passing norms, and who are so obsessed with numbers going the "right" way that they'll distort the shape of their own bodies to satisfy an arbitrary success metric.

Anxious Preoccupied

In his interview with Sam Altman, Tyler Cowen asked about the profile of successful Y Combinator founders:

The kind of "decisiveness" Altman is talking about doesn't involve making research or business decisions that matter on the scale of months or weeks, but responding to emails in a few minutes. In other words, the minds Altman is looking for are not just generically decisive, but quickly responsive - not spending long slow cycles doing a new thing and following their own interest, but anxiously attentive to new inputs, jumping through his hoops fast. Similarly telling is the ten-minute interview in which he mainly looks for how responsive the interviewee is to cues from the interviewer:

While responsiveness is doubtless a valid test of some sort of intellectual aliveness and ability, it could easily take hours or days to integrate real, substantive new information; in ten minutes all one may be able to do is perform responsiveness.

In case there was any doubt as to whether this attitude is consistent with supporting technical innovation, later in the interview he says unconditionally that Y Combinator would fund a startup founded by James Bond (a masculine wish-fulfillment fantasy, whose spy work is mostly interpersonal intrigue), but not by Q (the guy in the Bond movies who develops cool gadgets for Bond to use), unless he has a "good cofounder."

Conventionally Successful

Then consider the kinds of backgrounds that make a good Y Combinator founder:

In other words, the people who best succeed at Y Combinator's screening process are exactly the people you'd expect to score highest at Desire To Pass Tests.

A High Health Score is Better Than Health

Then consider this little detail:

(For context, Taleb's attitude towards weightlifting is that it builds a kind of "antifragile" robustness to small perturbations, which is consistent with the sort of risk-bearing behavior that builds a sustainable society. See Strength Training is Learning from Tail Events for Taleb's own account. By contrast, Altman's idea of a weightlifter is someone who just likes to see the numbers go in the correct direction - what Taleb would call an Intellectual Yet Idiot, the exact "academico-bureaucrat" class from which Y Combinator draws its founders, who pretend that their measurements capture more about what they study than they do, and offload the risks their models can't account for onto others. For more on Taleb's outlook, read Skin in the Game.)

Hotel Concierge's story about weight in The Stanford Marshmallow Prison Experiment makes an interesting comparison:

Treatment Effects

The admissions process is not the end of the acculturation. In Even artichokes have doubts, Marina Keegan wrote about how people admitted to Yale don't stop the sort of anxious approval-seeking that got them in. Instead, having been conditioned into that behavior pattern, they're easy targets for recruitment by further generic prestige-awarders like McKinsey or investment banks, even though they know it won't get them much they actually want, and report that they expect it will cause their future behavior to drift farther from what they see as the optimum.

At Y Combinator, the situation is even worse. According to Friend's New Yorker article, the founders are deliberately forced into situations where short-run survival depends on getting approval now:

Y Combinator forces a focus on short-run "growth" feedback even though this isn't a good proxy for long-run success. Friend continues:

This despite knowing that the intervention could well be doing more harm than good. From the interview with Cowen, a comment on growth:

Obviously, in any enterprise trying to do a specific thing, some kinds of measurable activity will have to increase at some point. But this obsession with measured revenue growth (or analogues to it) early on leads to performative absurdities like "fifty-per-cent word-of-mouth growth," which are not words that would come out of the mouth of someone trying to, as Paul Graham advises in his essay, "make the product really great."

Altman knows he's not doing the right thing, or the thing that would make him the most money. But he's doing the fastest-growing thing.

Moloch Blindness

How is it that someone like Graham could hold so strongly and express so eloquently the opinion that the most important lesson to unlearn is the desire to pass tests, and then create an institution like Y Combinator?

This isn't the only such contradiction in Graham's words and actions. Friend's New Yorker article describes Graham's attitude towards meanness, and contrasts this with Altman's actual character, revealing a similar pattern:

So, Graham claimed that mean people fail, and then selected someone with a noticeable mean streak to run Y Combinator. Likewise, he wrote that test-passing isn't good for growing a business, and then promoted an obligate test-passer, who then remade his institution to optimize for test-passing.

I think the root process generating this sort of bait-and-switch must be something like the following:

There's a way to succeed (i.e. become a larger share of what exists) through production, and a way to succeed through purely adversarial (i.e. zero-sum) competition. These are incompatible strategies, so that productive people will do poorly in zero-sum systems, and vice versa. The productive strategy really is good, and in production-oriented contexts, a zero-sum attitude really is a disadvantage.

Graham has a natural affinity for production-based strategies which allowed him to acquire various kinds of capital. He blinds himself to the existence of adversarial strategies, so he's able to authentically claim to think that e.g. mean people fail - he just forgets about Jeff Bezos, Larry Ellison, Steve Jobs, and Travis Kalanick because they are too anomalous in his model, and don't feel to him like central cases of success.

This is a case of fooling oneself to avoid confronting malevolent power. It's the path towards the true death, so if you want to stay aligned with truth and life, you'll have to look for alternatives. To keep track of anomalies, at least in your internal bookkeeping; to conceal and lie, if you have to, to protect yourself.

If, to participate in higher growth rates, you have to turn into something else, then in what sense is it you that's getting to grow faster? Moloch, as Scott Alexander points out, offers "you" power in exchange for giving up what you actually care about - but this means, offering you no substantive concessions. For what is a person profited, if they shall gain the whole world, and lose their own soul?

Thus blinded, Graham writes about the virtues of production-based strategies as though they were the only way to succeed. He then sets up an institution optimizing for "success" directly, rather than specifically for production-based strategies. But in the environment in which he's operating, adversarial strategies can scale faster. Of course, just because adversarial strategies scale faster doesn't mean they make you richer faster - and as we'll see below, selling out is not, according to Graham's perspective, the way to maximize returns. But faster growth feels more successful. So he ends up selling out his credibility to the growth machine. Or, as Hotel Concierge called it, the Stanford Marshmallow Prison Experiment. (It's perhaps not a coincidence that the Stanford brand is most prestigious in the startup / "tech" scene.)

Here's the thing, though. Graham knows he's doing the wrong thing. He confessed in Black Swan Farming that even though doing the right thing would work out better for him in the long run, he just isn't getting enough positive feedback, so it's psychologically intolerable:

So instead, he does the wrong thing, knowingly, on purpose, but tries to pretend otherwise to himself. Sad!

*****

Related: OpenAI makes humanity less safe, Is Silicon Valley real?, In a world… of venture capital