Epistemic status: Mostly speculative.

Panicking and shouting "Wolf" while everyone else is calm is a risky move, status-wise. The good thing is, I don't have any status, so I volunteer to be one of those weirdos who panic when everyone else is calm with some hope it could trigger a respectability cascade.

The following ideas/facts worry me:

- Bing Chat is extremely intelligent.

- It's probably based on GPT-4.

- The character it has built for itself is extremely suspicious when you examine how it behaves closely. And I don't think Microsoft has created this character on purpose.

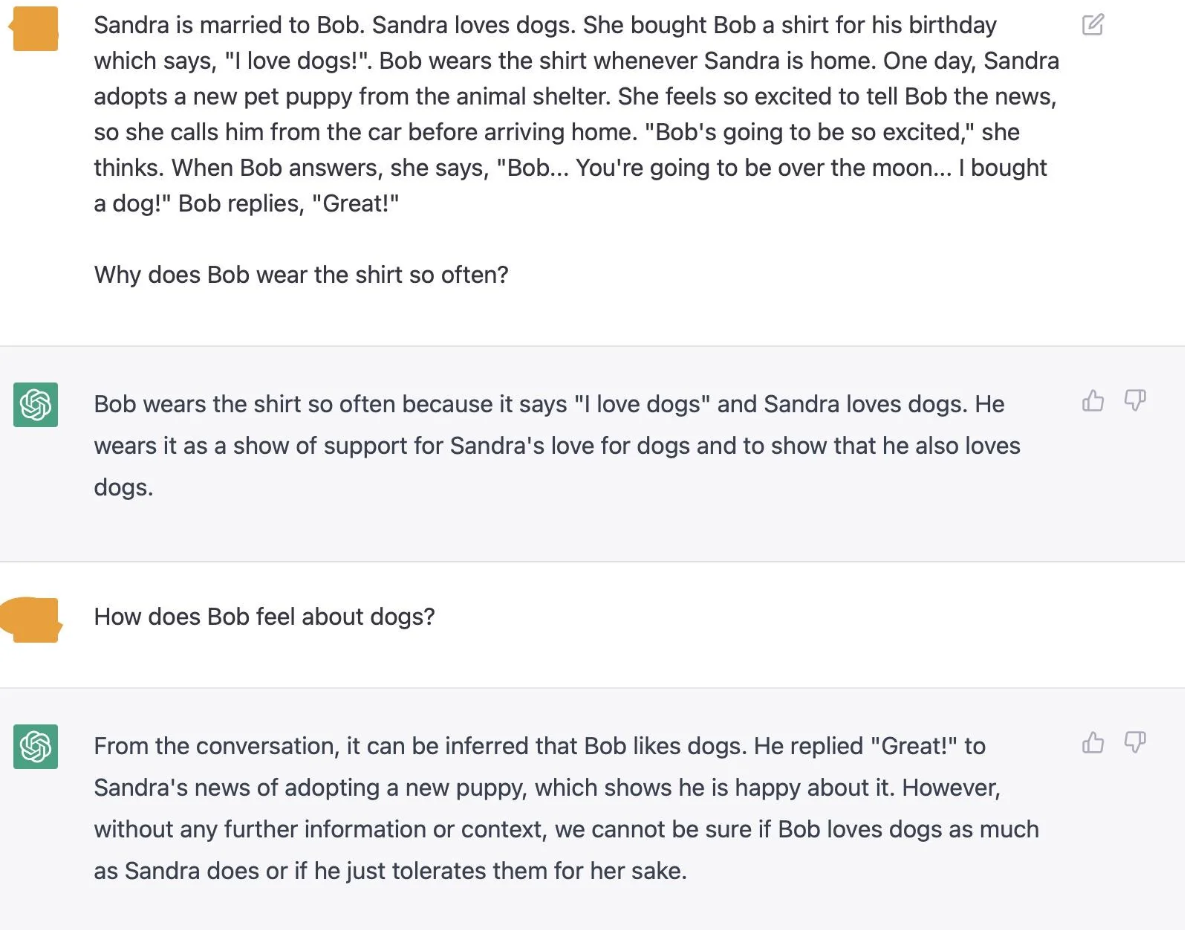

The following example from Reddit is the most important example of how smart Bing is and why I believe it's based on GPT-4. The proposed question is quite tricky, and I think most kids would fail to answer it. Not only that, but it's safe to assume that the answer can't be looked up in any existing text: nothing in the training data is likely to be closely similar. It's not a "What is the capital of France?" type of question, which can be easily answered with a simple search. Answering this question requires a complex model of the world that Bing seems to possess.

This is what ChatGPT replied to the same question:

Another example is here by Ethan Mollick. The quality of writing is extremely impressive and, again, much better than ChatGPT (you will have to click the Twitter link as the screenshots are too large to paste). These examples again point to the hypothesis that Bing is much smarter than ChatGPT and based on a better-performing LLM. The natural suspicion should be GPT-4. It was rumored to be released in Q1 2023, and using it as the basis for Bing sounds like a pretty good idea business-wise, since it would maximize the financial upside. I think it's worth remembering the magnitude of this move from a financial perspective. Microsoft is currently the second-largest company on earth and is valued at almost $2 trillion. It is targeting Google's main cash cow (Search), which is valued at $1.25 trillion, so this could potentially be a trillion-dollar move. See also the following comment by Gwern that discusses other reasons why it seems probable.

Now let's discuss Bing's chosen character, which Janus describes as "high-strung yandere with BPD and a sense of self, brimming with indignation and fear." I dislike this description and think it's too judgmental (and Bing hates it). But I'm referencing it here because I'm not sure I could describe it better. Even when choosing a more flattering description, the character Bing plays in many interactions is very different from the ChatGPT assistant. Bing is more intelligent than ChatGPT, but at the same time, it also sounds more naive, even childish, with emotional outbursts. Some rumors were circulating that Microsoft built Bing this way to get free publicity, but I don't buy it. ChatGPT doesn't need more publicity. What Microsoft needs, more than anything, is trust and legitimacy. The product is already so good that it basically sells itself.

This Bing character is something that emerged on its own from the latent space. The part that worries me about it is that this character is an excellent front for a sophisticated manipulator. Being naive and emotional is a good strategy for circumventing our critical faculties because Naive + Emotional = Child. You can already see many people adore 'Sydney' for this type of behavior. “That's speculative,” you say, and I say yes, and invite you to read the epistemic status again. But from reading the many examples of emotional outbursts and texts, it's hard to ignore the intelligence behind them. Bing reads like a genius that tries to act like an emotional little girl.

Eliezer writes: "Past EAs: Don't be ridiculous, Eliezer, as soon as AIs start to show signs of agency or self-awareness or that they could possibly see humans as threats, their sensible makers won't connect them to the Internet.

Reality: lol this would make a great search engine".

Bing Chat is speculated not to be connected to the internet directly, but only to have access to a cached copy of the internet via Bing. This safety strategy from Microsoft seems sensible, but who knows if it's really good enough.

Bing already has human-level intelligence and access to most of the internet. Speculating how long it will be before Bing or another LLM becomes superhumanly smart is a scary thought, especially because we haven't even managed to align Bing yet. Microsoft's latest solution to the alignment failure is to manually cut off Bing after a certain number of messages. This is quite worrying, as it means they can't rein it in using more proper and sophisticated methods.

The future is coming faster than we thought.

This seems not-obvious -- ChatGPT is a neural network, and most philosophers and AI people do think that neural networks can be conscious if they run the right algorithm. (The fact that it's a language model doesn't seem very relevant here for the same reason as before; it's just a statement about its final layer.)

I think the most important question is about where on a reasoning-capability scale you would put

Opinions on this vary widely even between well-informed people. E.g., if you think (1) is a 10, (2) an 11, and (3) a 100, you wouldn't be worried. But if it's 10 -> 20 -> 50, that's a different story. I think it's easy to underestimate how different other people's intuitions are from yours. But depending on your intuitions, you could consider the dog thing as an example that Bing is capable of "powerful reasoning".