The categorical declarations in this essay (China has no interest in winning the AI race, and couldn't win it even if it wanted to) made me suspicious that we're not dealing with a really objective account of the facts. And then this passage really cemented that impression:

And the challenges China faces today will only get more serious, as its population ages further and climate change increases the frequency of extreme weather events. Hence, in combination with the growing lead of the US in semiconductor technology, as time passes, it will become even more difficult for China to catch up to the US than it is today.

I feel like I'm reading a sophisticated sales pitch or debater's brief, meant to convince me that the geopolitical West will remain in charge of the world's future indefinitely. But I'm not sure who the audience is, or what the overall intent is, or what intellectual or political faction the author represents.

China does not have access to the computational resources[1] (compute, here specifically data centre-grade GPUs) needed for large-scale training runs of large language models.

While it's true that Chinese semiconductor fabs are a decade behind TSMC (and will probably remain so for some time), that doesn't seem to have stopped them from building 162 of the top 500 largest supercomputers in the world.

There are two inputs to building a large supercomputer: quality and quantity, and China seems more than willing to make up in quantity what it lacks in quality.
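The quality/quantity tradeoff comes down to simple arithmetic. The sketch below assumes that a machine's sustained throughput is per-chip throughput times chip count, discounted by a scaling-efficiency factor. All chip speeds and efficiency values are hypothetical placeholders, not measurements of any real chip or supercomputer.

```python
import math

def chips_needed(target_flops: float, per_chip_flops: float, scaling_efficiency: float) -> int:
    """Chips required to reach target_flops of sustained throughput.

    scaling_efficiency (0 < e <= 1) is a hypothetical fraction of per-chip
    peak that survives once many chips are wired together.
    """
    if not 0 < scaling_efficiency <= 1:
        raise ValueError("scaling_efficiency must be in (0, 1]")
    return math.ceil(target_flops / (per_chip_flops * scaling_efficiency))

# Hypothetical numbers, for illustration only.
TARGET = 1e18        # sustained FLOP/s the machine should deliver
FAST_CHIP = 1e15     # a leading-edge chip
SLOW_CHIP = 2.5e14   # a chip four times slower (older process node)

# Assume the larger machine of slower chips also scales a bit worse.
fast = chips_needed(TARGET, FAST_CHIP, scaling_efficiency=0.8)
slow = chips_needed(TARGET, SLOW_CHIP, scaling_efficiency=0.6)
print(f"fast chips: {fast}, slow chips: {slow}, ratio: {slow / fast:.1f}x")
```

Under these made-up numbers, a chip that is 4x slower needs a bit more than 5x as many units. That costs money, power and floor space, but it is not a hard wall.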

The CCP is not interested in reaching AGI by scaling LLMs.

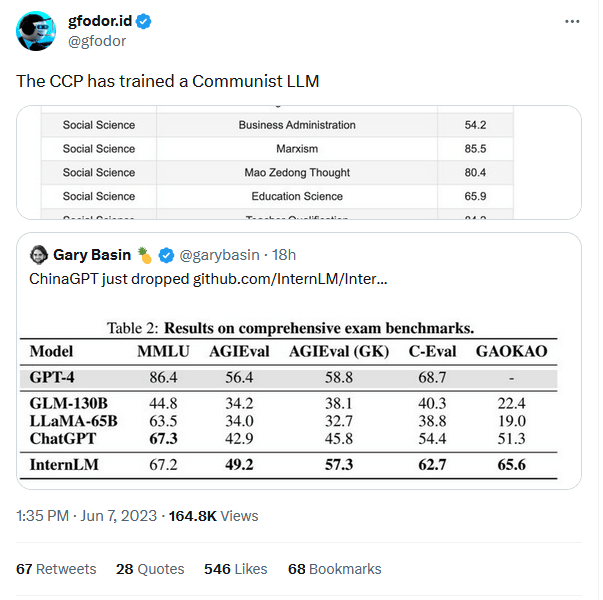

For a country that is "not interested" in scaling LLMs, they sure do seem to do a lot of research into large language models.

It's also worth noting that China currently has the best open-source text-to-video model, has trained a state-of-the-art text-to-image model, was the first to introduce AI in a mass consumer product, and is miles ahead of the West in facial recognition.

I suspect that "China is not racing for AGI" will end up in the same historical bin as "Russia has no plans to invade Ukraine", a claim that requires us to believe China's stated preferences while completely ignoring its revealed ones.

I do agree that if the US and China were both racing, the US would handily win the race given current conditions. But if the US stops racing, there's absolutely no reason to think the Chinese response would be anything other than "thanks, we'll go ahead without you".

--edit--

If a Chinese developer ever releases an LLM that is so powerful it inevitably oversteps censorship rules at some point, the Chinese government will block it and crack down on the company that released it.

This is a bit of a weird take to have if you are worried about AGI Doom. If your belief is "people will inevitably notice that powerful systems are misaligned and refuse to deploy them", why are you worried about Doom in the first place?

Is the claim that China, due to its all-powerful bureaucracy, is somehow less prone to alignment failures and hard left turns than reckless American corporations? If so, I suggest you consider the possibility that Xi Jinping isn't all that smart.

While it's true that Chinese semiconductor fabs are a decade behind TSMC (and will probably remain so for some time), that doesn't seem to have stopped them from building 162 of the top 500 largest supercomputers in the world.

They did this (mostly) before the export regulations were put in place. I'm not sure what the exact numbers are, but both of their supercomputers in the top 10 were built before October 2022 (when the regulations were imposed). Also, I imagine that they might still have had a steady supply of cutting-edge chips for some time after the export regulations. It would make sense if the rules were not enforced immediately and if exports that had already begun were not halted, but I have not verified that.

The number 7 supercomputer is built with domestically developed Chinese chips, which still demonstrates the quality/quantity tradeoff.

Also, saying "sanctions will bite in the future" is only persuasive if you have long timelines (and expect sanctions to hold up over those timelines). If you think AGI is imminent, or you think sanctions will weaken over time, future sanctions matter less.

How effectively can decentralized GPUs be used to train very large (>GPT-3) LLMs? I can see a mass mobilization campaign of civilian GPUs along the lines of a mandatory Folding@home, should Beijing ever get serious about the AI race.

I think it's pretty unlikely (<5%) that decentralized volunteer training will be competitive with SOTA, ever. (Caveat: I haven't been following volunteer training super closely so this take is mostly cached from having looked into it for GPT-Neo plus occasionally seeing new papers about volunteer training).

- You are going to get an insane efficiency hit from the compute having very low-bandwidth, high-latency interconnect. I think it's not inconceivable that someone will eventually figure out an algorithm that is only a few times worse than training on a cluster with good interconnect, but this is one of those things people have tried for ages.

- Heterogeneous compute (not all the GPUs will be the same model), lower reliability (people will turn their computers off more often than datacenters do), having to be robust against bad actors (people could submit bad gradients), and other challenges together add another several-fold overhead.

- There just isn't that much volunteer hardware out there. As a rough order-of-magnitude comparison, the publicly announced Facebook cluster is about the same size as folding@home at its peak in raw compute. All in all, I think you would need to do some serious engineering and research to get 1% efficiency at Facebook-cluster scale.

(folding@home is embarrassingly parallelizable because it requires basically no interconnect, and therefore also doesn't mind heterogeneous compute or reliability)
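One way to read the estimate above is as a product of independent slowdown factors. The sketch assumes the factors multiply. The specific values are hypothetical, picked to match the rough ranges in the comment ("insane", "several times"). They are not measurements from any volunteer-training paper.

```python
from math import prod

def overall_efficiency(slowdowns: dict[str, float]) -> float:
    """Fraction of raw volunteer compute that becomes useful training compute.

    Assumes each slowdown factor (>= 1) is independent and multiplicative.
    """
    for name, factor in slowdowns.items():
        if factor < 1:
            raise ValueError(f"{name}: slowdown factors must be >= 1")
    return 1.0 / prod(slowdowns.values())

# Hypothetical factors, loosely following the ranges in the comment above.
slowdowns = {
    "low-bandwidth, high-latency interconnect": 10.0,
    "heterogeneous GPUs": 2.0,
    "volunteers going offline": 2.0,
    "robustness to bad gradients": 2.5,
}

eff = overall_efficiency(slowdowns)
# Raw volunteer compute, normalised to the size of one large datacenter cluster.
raw_volunteer = 1.0
print(f"efficiency = {eff:.1%}, effective compute = {raw_volunteer * eff:.3f} clusters")
# Raw volunteer compute needed just to match one cluster at this efficiency:
print(f"break-even raw size = {1 / eff:.0f} clusters")
```

Under this model, a volunteer pool has to exceed a conventional cluster by about 1/efficiency in raw compute just to break even. Whether that gap is 100x or 10^6x depends entirely on the real values of these factors.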

Could you link me to sources that could give me an estimate of how inefficient volunteer compute would be? Is it something like 100x or 10^6x? Mandatory (i.e. integrated into WeChat) volunteer compute (with compensation) available in China could well exceed conventional AI training clusters by several OOMs.

This gap will only widen over time; China is failing to develop a domestic semiconductor industry, despite massive efforts to do so, and is increasingly cut off from international semiconductor supply chains.

I would say this is a falsehood.

The US export ban on controlled GPUs has really pushed China toward local semiconductor manufacturing and accelerated its projects. They don't have TSMC-quality 5nm wafers, fine, but they're developing the full stack.

I mean, if this were "The AGI Race Between the US and Russia Doesn't Exist", okay, fine. But seeing how more than half the papers that land on arXiv have Chinese authors, plus the fact that China does 90% of the world's electronics manufacturing, I don't understand how you come to the conclusion that China is hopelessly dead in the water.

The day the US GPU export ban happened, okay, most of us really wondered, but seeing how they're operating six months to a year afterwards, it's just obvious that they will be able to make it happen.

See also the new piece “The Illusion of China’s AI Prowess: Regulating AI Will Not Set America Back in the Technology Race” by Helen Toner, Jenny Xiao, and Jeffrey Ding.

Chinese startup MiniMax, working on AI solutions similar to that of Microsoft-backed OpenAI's ChatGPT, is close to completing a fundraising of more than $250 million that will value it at about $1.2 billion, people familiar with the matter said.

https://www.reuters.com/technology/china-ai-startup-minimax-raising-over-250-mln-tencent-backed-entity-others-2023-06-01/

The temporary disappearance of Jack Ma in 2020 when the CCP decided that his company Alibaba had become too powerful is another cautionary tale for Chinese tech CEOs to not challenge the CCP.

I think Jack Ma's disappearance had as much to do with Alibaba being powerful as it did with a speech he gave critiquing the CCP's regulatory policy.

There are other equally large and influential companies in China (or even bigger ones, such as Tencent) whose founders didn't disappear; the main difference is their deference to Beijing.

When I write “China”, I refer to the political and economic entity, the People’s Republic of China, founded in 1949.

Leading US AI companies are currently rushing towards developing artificial general intelligence (AGI) by building and training increasingly powerful large language models (LLMs), as well as building architecture on top of them. This is frequently framed in terms of a great power competition for technological supremacy between the US and China.

However, China has neither the resources nor any interest in competing with the US in developing AGI primarily via scaling LLMs.

In brief, China does not compete with the US in developing AGI via LLMs because it lacks the necessary resources, technology, and political will.

Therefore, as it currently stands, there is no AGI race between China and the US.

China Cannot Compete with the US to Reach AGI First Via Scaling LLMs

Today, training powerful LLMs like GPT-4 requires massive GPU clusters, and training on more compute (in practice, more GPUs for longer) is the most common and reliable way of increasing their capabilities. This is often associated with the "bitter lesson" in machine learning: increasing the compute a model is trained on has historically yielded larger capability gains than changes to the model's architecture. So if China wants to “win the AGI race” against the US, it would need to maximise its domestic GPU capacity. However, China lacks domestic semiconductor production facilities capable of producing data centre-grade GPUs. So to increase domestic GPU capacity, it has to import GPUs or semiconductor manufacturing equipment (SME).
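To see why "massive GPU clusters" is not an exaggeration, here is a back-of-the-envelope sketch. It uses a common rule of thumb from the scaling-law literature (not from this essay): training compute is about 6 FLOPs per parameter per training token. The GPT-3 figures (175B parameters, ~300B tokens) come from the GPT-3 paper. The per-GPU peak throughput, utilisation, and 30-day duration are assumptions chosen for illustration.

```python
import math

def training_flops(params: float, tokens: float) -> float:
    """Rule-of-thumb training compute: ~6 FLOPs per parameter per token."""
    return 6.0 * params * tokens

def gpus_needed(total_flops: float, peak_flops_per_gpu: float,
                utilisation: float, days: float) -> int:
    """GPUs required to finish total_flops within `days` at the given utilisation."""
    seconds = days * 24 * 3600
    return math.ceil(total_flops / (peak_flops_per_gpu * utilisation * seconds))

# GPT-3-scale run: 175B parameters, ~300B training tokens.
c = training_flops(175e9, 300e9)

# Assumptions: 312 TFLOP/s peak per GPU, 40% utilisation, 30-day run.
n = gpus_needed(c, 312e12, 0.4, 30)
print(f"{c:.2e} FLOPs -> about {n} GPUs for 30 days")

# Ten times the compute at the same utilisation needs roughly ten times the GPUs.
n10 = gpus_needed(10 * c, 312e12, 0.4, 30)
print(f"10x compute -> about {n10} GPUs")
```

The point is the linear relationship. Each step up in training compute translates almost directly into more GPUs (or more time), so access to GPUs is the binding constraint the essay describes.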

However, since the US introduced its export controls on advanced chips and SMEs in October 2022, China can no longer purchase the most advanced GPUs or SMEs abroad. And with the semiconductor industry advancing at lightning speed, China is falling further behind every day[2]. These policies are only the latest in a series of US measures restricting the transfer of semiconductor technology to Chinese companies, beginning with Huawei in 2019. In early 2023, the Netherlands and Japan, which play key roles in the global semiconductor supply chain, joined the US in introducing SME export controls targeting China. Crucially, these combined export controls apply to ASML’s most advanced lithography machines, the only machines in the world capable of producing cutting-edge chips.

The problem for China lies not so much in designing cutting-edge GPUs as in designing and building the SMEs needed to produce the advanced GPUs used for training LLMs, a far more challenging task. But China has been trying, and failing, to build domestic semiconductor manufacturing chains for cutting-edge chips since at least the 1990s. In the 1990s, the state-sponsored Projects 908 and 909 resulted in only one company, which today produces chips using 55nm process technology (technology from the mid-2000s, producing less complex chips used for applications like the Internet of Things and electric vehicles) and holds around 3% of global foundry market share. In its 13th Five-Year Plan (2016-2020), the CCP listed building a domestic semiconductor industry as a priority, and in the 14th Five-Year Plan (2021-2025), developing domestic semiconductor supply chains appears as one of the country’s main goals in industrial and economic policy. Since then, China has increased domestic chip production capacity, but still only succeeds at producing larger, less complex chips. Even this success was possible largely due to the forced transfer of intellectual property from US and Taiwanese semiconductor firms. That is now much harder, given strict US export controls on advanced GPUs and a broader array of measures aimed at restricting the transfer of chips and SME technology and design to Chinese firms.

In addition, in the summer of 2022, the Chinese government started investigating multiple senior figures responsible for the industrial policy and state investment programmes, launched in 2014, that aimed to create a domestic cutting-edge chip industry. These investigations are based on “opaque allegations” of officials breaking the law. In early 2023, China then shifted its strategy from granting generous subsidies to semiconductor manufacturing companies to focusing on a small number of potential national champions. This policy shift suggests that the Chinese leadership has concluded that its prior strategies failed. The new policy programme also has a smaller budget, which points to the Chinese government prioritising other challenges (more on this below).

On a general note, Chinese industrial policy officially has the goal of building industry and increasing economic growth, but its actual goal is first and foremost to preserve CCP power and social stability. Chinese industrial policy routinely fails at its stated goals precisely because it is optimised for preserving CCP power, which it has succeeded at since the late 1970s. This goes beyond the semiconductor industry and e.g. also causes failures in China’s green transition. For these reasons, the probability that the Chinese government will succeed at or even properly try to build a domestic semiconductor industry that is capable of producing cutting-edge, data centre-grade GPUs is extremely low.

On top of everything, China is facing food security, public health, and demographic challenges, and the rising cost of Chinese labour is threatening its economic model as the world's workbench. These combined challenges pose a more significant threat to the CCP’s hold on power than lagging behind the USA in AGI, so the CCP will most likely prioritise these challenges and continue to reduce funds available for building domestic GPU supply chains. And the challenges China faces today will only get more serious, as its population ages further and climate change increases the frequency of extreme weather events. Hence, in combination with the growing lead of the US in semiconductor technology, as time passes, it will become even more difficult for China to catch up to the US than it is today.

For Political Reasons, China Won’t Compete with the US to Reach AGI First Via Scaling LLMs

The CCP is not interested in reaching AGI by scaling LLMs. Reliably controlling powerful LLMs is an unsolved technical problem[3]: for instance, it is currently impossible to get an LLM to reliably never output specific words or phrases or touch on certain topics. Given this, any sufficiently powerful Chinese LLM is guaranteed to make a politically taboo statement sooner or later, like referencing the Tian’anmen Square massacre. This makes this line of AI development too politically sensitive and ultimately undesirable in the Chinese environment.
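The "sooner or later" argument is a probability statement. Assume each response has some small chance p of containing a forbidden statement, and that responses are independent (both are simplifying assumptions). Then the chance of at least one violation across n responses is 1 - (1 - p)^n, which approaches 1 as n grows. The per-response rate below is hypothetical.

```python
import math

def prob_at_least_one(p: float, n: int) -> float:
    """P(at least one forbidden output in n independent responses)."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability")
    if p == 1.0:
        return 1.0 if n > 0 else 0.0
    # 1 - (1 - p)**n, computed stably for tiny p and very large n
    return -math.expm1(n * math.log1p(-p))

p = 1e-7  # hypothetical per-response violation rate
for n in (1_000, 1_000_000, 100_000_000):
    print(f"{n:>11,} responses: {prob_at_least_one(p, n):.4f}")
```

Even a one-in-ten-million failure rate becomes a near-certainty at the scale of a consumer chatbot serving a large population.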

Instead, the CCP wants and needs reliable, narrow, controllable AI systems to censor internet discourse, improve China’s social credit system, and expand citizen surveillance. This is reflected in the topics Chinese and US AI researchers write about. Chinese researchers are overrepresented among highly cited papers on AI applications in surveillance and computer vision, while US researchers publish more leading papers on deep learning.

Considering this, one of two things will happen: Either Chinese LLMs will continue to be weaker, due to a lack of compute or by design to comply with government guidelines, or a Chinese developer will release a powerful LLM on the Chinese internet, it will cause a political scandal by not conforming with censorship rules or state-issued guidelines, and the government will shut it down.

Chinese companies will only build LLMs that reliably comply with Chinese censorship laws and upcoming laws or guidelines on generative AI. To ensure models never go off-script, companies will have to make them weaker and very carefully control who gains access to the models or the APIs. For example, the March 2023 demo for Baidu’s new generative language model Ernie Bot consisted only of pre-recorded video clips, disappointing the public, and so far, only businesses can apply for licenses to use the model. Baidu advertised Ernie Bot as a multimodal model, meaning it should be able to respond to different forms of input, e.g. text and images. However, Ernie Bot cannot take an image as input and generate a text response. Furthermore, users who had the opportunity to test Ernie Bot report that it performed slightly worse than GPT-3, a model that is now almost three years old, and, unlike ChatGPT, shies away from answering questions on political topics. For context, these reviews were published in late March 2023, when OpenAI had already released the more powerful GPT-4. One reviewer summarised the situation by commenting on the model’s “bearable mediocrity” and writing that it is “probably good enough for the Chinese market”. In short, Ernie Bot is probably good enough, for now, to satisfy demand in a market where all serious competitors are banned. In direct competition with generative language models from companies like OpenAI, Ernie Bot would fall short.

If a Chinese developer ever releases an LLM that is so powerful it inevitably oversteps censorship rules at some point, the Chinese government will block it and crack down on the company that released it. In the past, the CCP has held tech companies accountable for any undesirable content posted by users of their platforms, and according to the new draft guidelines on generative AI, it will do the same regarding LLMs. The temporary disappearance of Jack Ma in 2020 when the CCP decided that his company Alibaba had become too powerful is another cautionary tale for Chinese tech CEOs to not challenge the CCP.

Indeed, the draft guidelines for generative AI in China from April 2023[4], published shortly after I first wrote this piece, give an idea of the CCP’s intentions regarding the regulation of generative AI models, including LLMs. Specifically, these draft guidelines state that “the content generated by generative artificial intelligence [...] must not contain subversion of state power”. This statement is a clear indicator of the CCP’s intention to prohibit the deployment of any generative AI model that may at some point produce politically undesirable outputs. So under the draft guidelines, powerful LLMs would be completely prohibited.

No Global AGI Race in Sight

In conclusion, China is not in a position to build AGI via scaling LLMs because it lacks the resources, technology, and political will to do so.

First, China doesn’t have the necessary computing resources, data centre-grade GPUs, to continue doing increasingly large LLM training runs. China is unable to purchase these GPUs, primarily designed in the US and manufactured outside of China, because the US and its allies have placed strict export controls on this technology, as well as the SMEs needed to produce sufficiently powerful GPUs.

Second, China is also unable to manufacture data centre-grade GPUs domestically. This situation is only worsening as the CCP will have to deprioritise industrial policy programmes to develop domestic cutting-edge SMEs and GPUs in favour of dealing with more pressing challenges, such as demographic change and the rising cost of Chinese labour.

Lastly, the Chinese government has no interest in reaching AGI via powerful LLMs, since LLMs cannot be controlled reliably. The Chinese government made this clear in its recent draft guidelines on generative AI. Instead, the CCP is interested in using narrow, controllable AI systems for surveillance. As a result, AI companies in China will either continue to release weak LLMs, like Baidu’s Ernie Bot, or be cracked down on if they release a sufficiently powerful, and therefore uncontrollable, model.

In short, there is no AGI race between the USA and China. Hence, framing the development of AGI as a competition between the USA and China, in which the US must compete to win, is misleading. All companies with leading AGI ambitions are located in the US and do not have direct competitors in China. If concerns over a US-China AGI race are the reason why your company is accelerating its progress towards AGI, then your concerns are unfounded and you should stop.

For more information on the importance of computational resources (compute) for machine learning see here, here, and here.

In March 2023, Nvidia introduced a new GPU for the Chinese market, the H800. Compared to its most recent product for the Western market, the H100, the H800 performs the same number of operations per second but has much lower interconnect bandwidth, lower even than that of Nvidia’s previous-generation A100, which has been used to train many of today's large models. With these features, the H800 will perform well for AI models similar in size to GPT-3, but the larger the model, the higher the interconnect bandwidth needed to train it efficiently. Hence, the export controls are not having a very strong impact on China’s ability to train large language models right now, as Chinese companies can still purchase the H800. But they will keep China from training models significantly larger than today's, effectively putting a hard limit on Chinese innovation potential. For illustration, see this diagram by Lennart Heim.
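The link between interconnect bandwidth and model size can be illustrated with the standard cost model for a ring all-reduce. In that model, each GPU sends and receives about 2(N-1)/N times the gradient size on every synchronisation. This is a minimal sketch assuming pure data parallelism, 2-byte gradients, no overlap of communication with compute, and hypothetical link bandwidths (one half of the other). It shows only the communication side: sync time grows linearly with model size and inversely with bandwidth. Whether that becomes the bottleneck also depends on how much compute each step does and on the parallelism strategy, so this is not a prediction for any specific GPU.

```python
def ring_allreduce_seconds(params: float, n_gpus: int,
                           bandwidth_bytes_per_s: float,
                           bytes_per_param: int = 2) -> float:
    """Bandwidth term of one ring all-reduce over the full gradient (latency ignored)."""
    if n_gpus < 2:
        return 0.0  # nothing to synchronise on a single GPU
    grad_bytes = params * bytes_per_param
    return 2 * (n_gpus - 1) / n_gpus * grad_bytes / bandwidth_bytes_per_s

# Hypothetical per-GPU link bandwidths in bytes/s.
HIGH_BW = 400e9
LOW_BW = 200e9

for params in (1.75e11, 1.75e12):  # GPT-3 scale and 10x larger
    t_hi = ring_allreduce_seconds(params, 1024, HIGH_BW)
    t_lo = ring_allreduce_seconds(params, 1024, LOW_BW)
    print(f"{params:.2e} params: {t_hi:.2f}s vs {t_lo:.2f}s per gradient sync")
```

Halving the link bandwidth doubles every synchronisation. A 10x larger model multiplies it by ten again, which is why bandwidth caps hurt more as models grow.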

There is currently no solution to jailbreak prompts, adversarial attacks, or similar methods for circumventing the filtering of unwanted content in LLMs.

Find a detailed summary and analysis of the content of China’s draft Generative AI guidelines here.