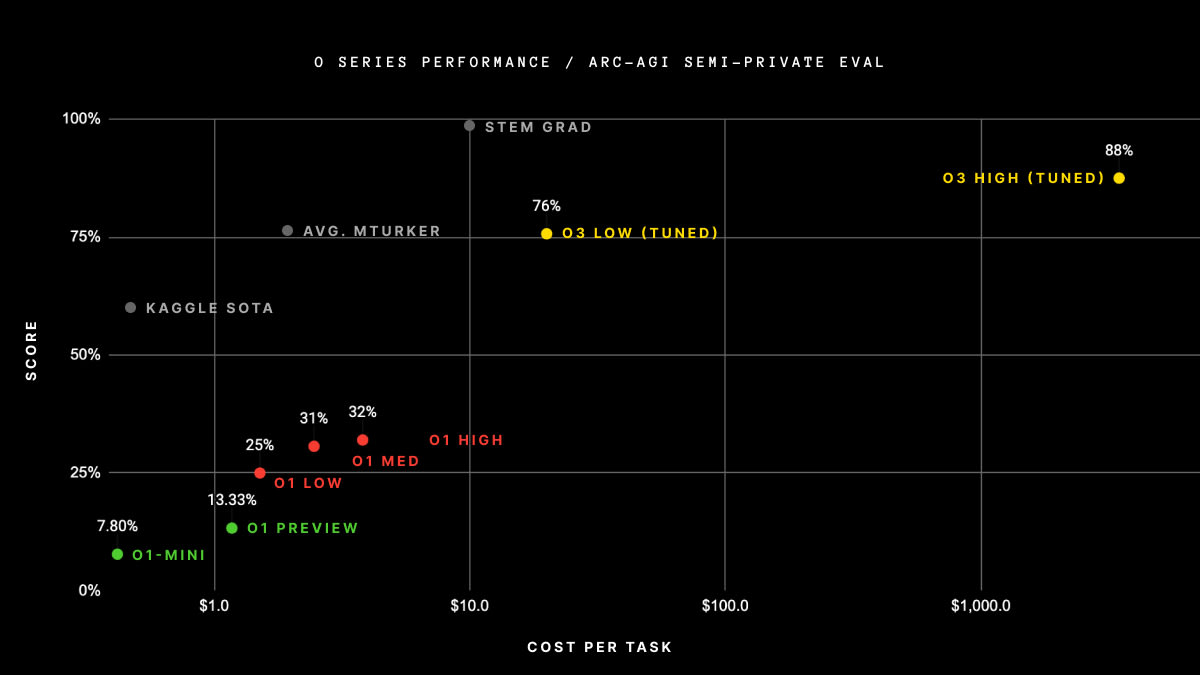

the reason why my first thought was that they used more inference is that ARC Prize specifies that that's how they got their ARC-AGI score (https://arcprize.org/blog/oai-o3-pub-breakthrough) - my read on this graph is that they spent $300k+ on getting their score (there's 100 questions in the semi-private eval). o3 high, not o3-mini high, but this result is pretty strong proof of concept that they're willing to spend a lot on inference for good scores.

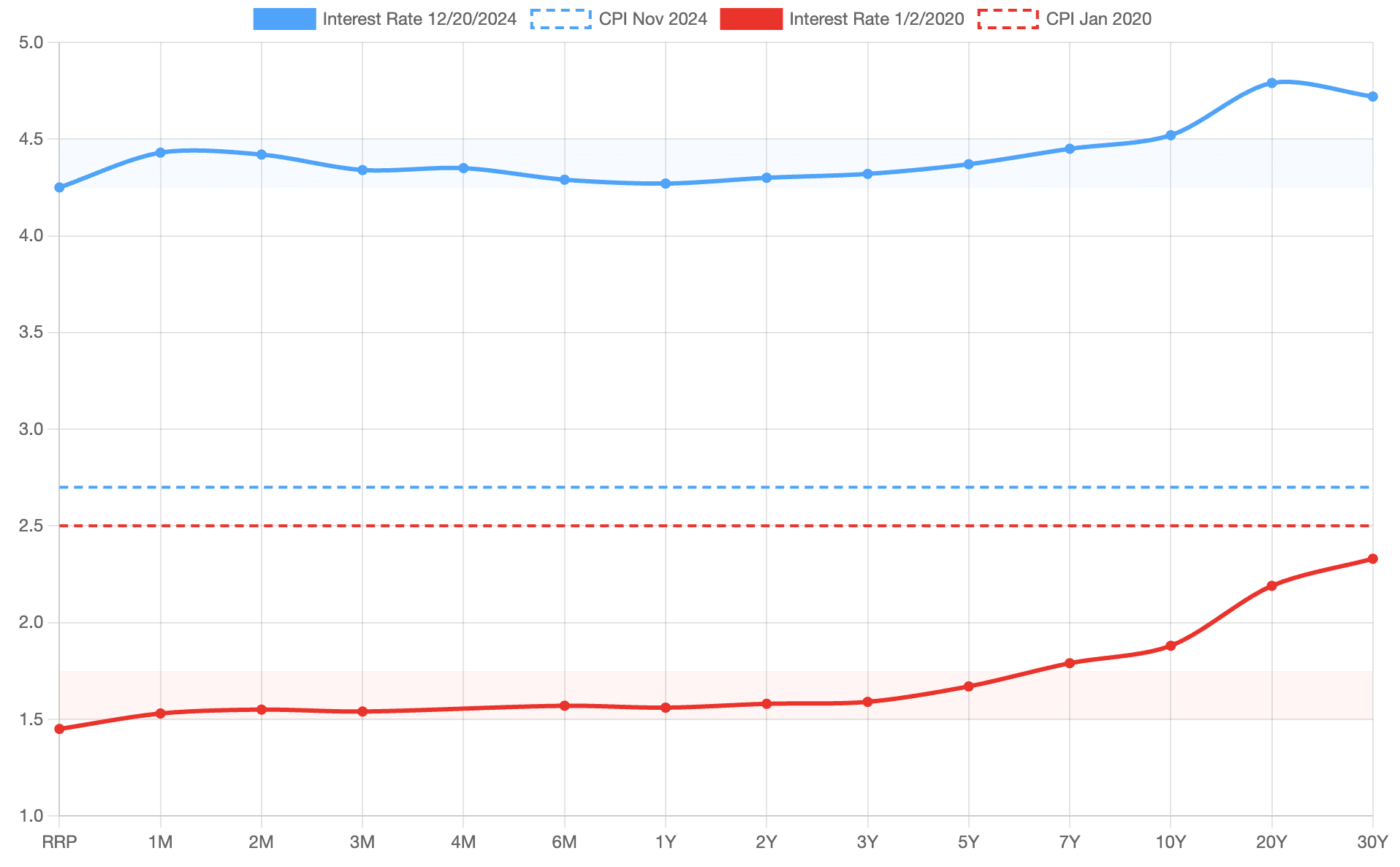

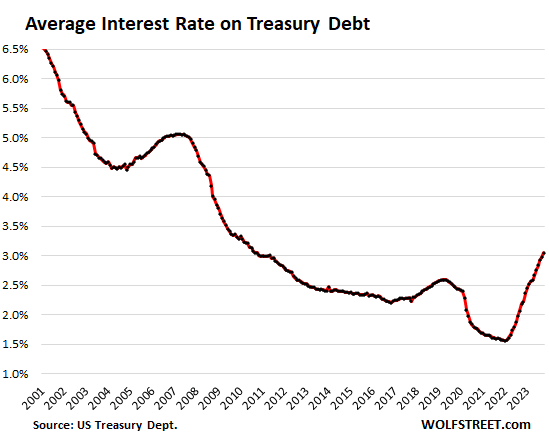

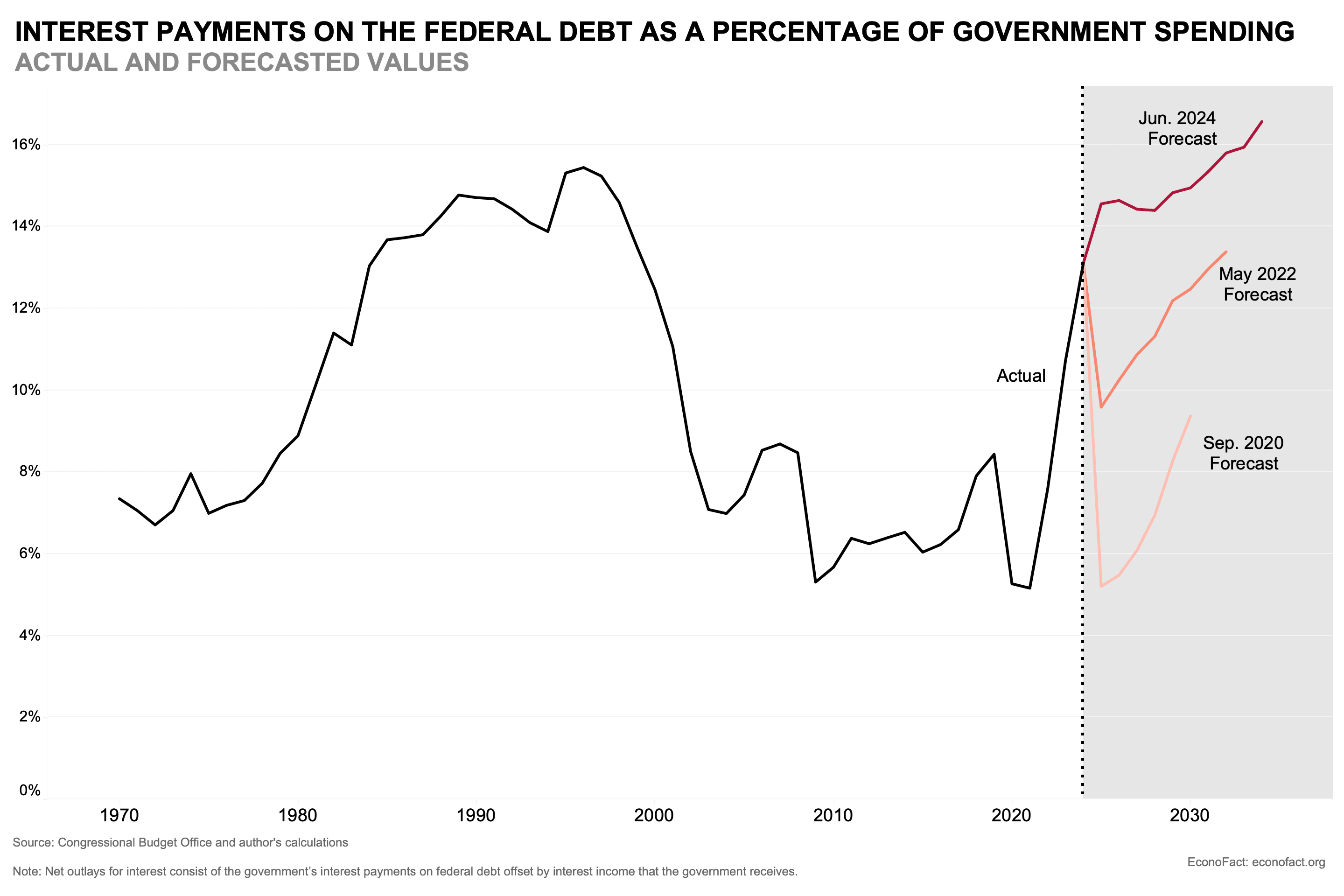

it's not about inflation expectations (which I think are pretty well anchored), it's about interest rates, which have risen substantially over this period and which have increased (and are expected to continue to increase) the cost of the US maintaining its debt (the first two figures are from sites I'm not familiar with, but the numbers seem right to me):

fwiw, I do broadly agree with your overall point that the dollar value of the debt is a bad statistic to use, but:

- the 2020-2024 period was also a misleading example to point to, because it was one where the US position wrt its debt worsened by a lot even if that's not apparent from the headline number

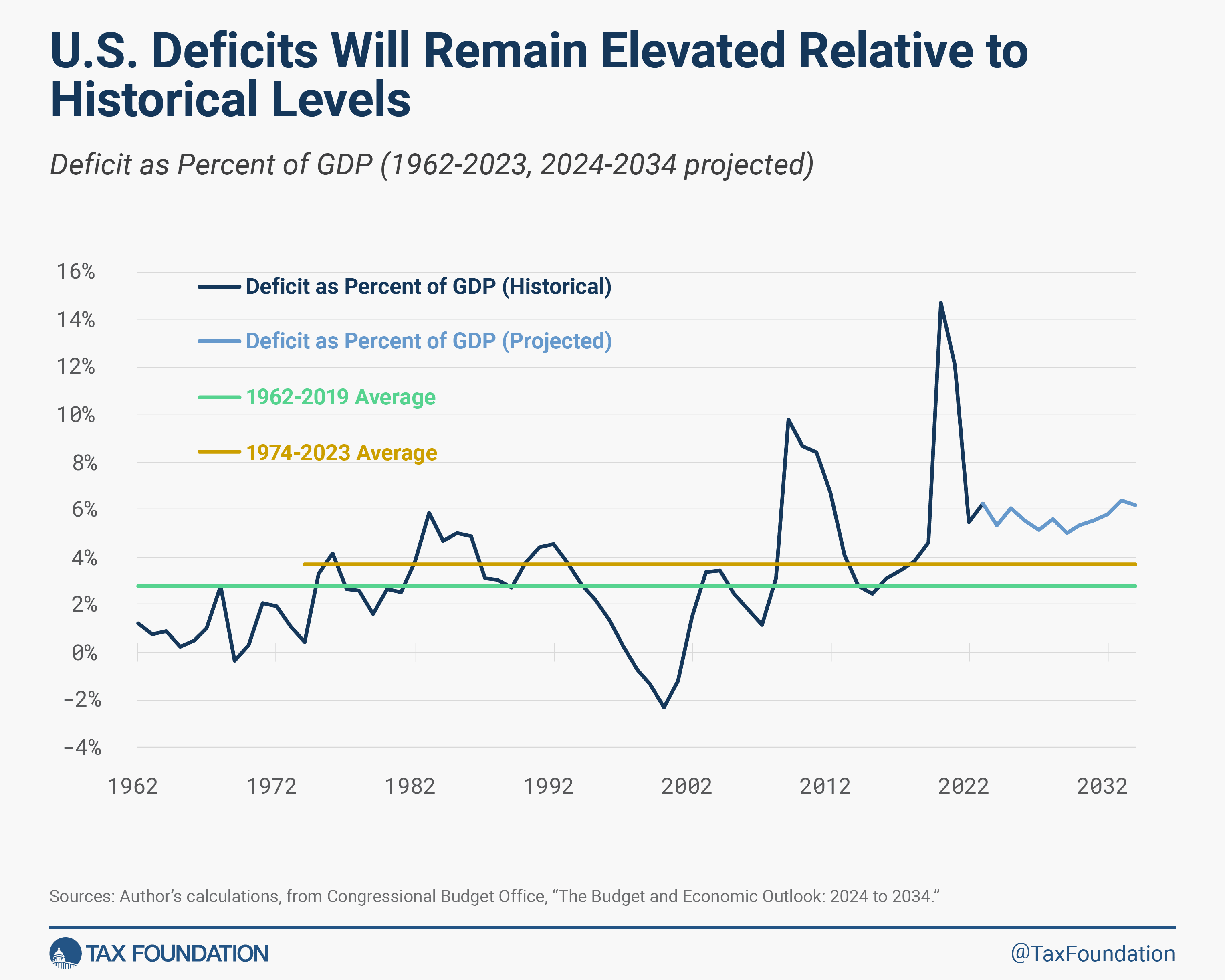

- I was going to say that the most concerning part of the debt is that deficits are projected to keep going up, but actually they're projected to remain elevated but not keep rising? I have become marginally less concerned about the US debt over the course of writing this comment.

I am now wondering what happens if interest rates go way up a while before we see really high economic growth from AI. it seems like that might lead to some weird dynamics, but I'm not sure I think that's likely, and this is probably enough words for now.

the debt/gdp ratio drop since 2020 I think was substantially driven by inflation being higher than expected rather than by economic growth - debt is in nominal dollars, so 2% real gdp growth + e.g. 8% inflation means that nominal gdp goes up by roughly 10%, which shrinks the ratio, but we're now in a worse situation wrt future debt because interest rates are higher.
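
a quick sketch of the arithmetic above, in case it helps. the 2% real growth and 8% inflation are the example numbers from the comment; the starting ratio of 1.0, the 5% growth in the nominal debt stock, and the 2% "expected" inflation baseline are made-up illustrative assumptions, not actual US figures.

```python
def nominal_growth(real_growth: float, inflation: float) -> float:
    """Nominal GDP growth implied by compounding real growth and inflation."""
    return (1 + real_growth) * (1 + inflation) - 1


def debt_to_gdp_after_one_year(
    ratio: float, debt_growth: float, real_growth: float, inflation: float
) -> float:
    """Debt/GDP ratio after one year, with debt and GDP both in nominal dollars."""
    gdp_growth = nominal_growth(real_growth, inflation)
    return ratio * (1 + debt_growth) / (1 + gdp_growth)


# Hypothetical inputs: same debt growth and real growth, different inflation.
START_RATIO = 1.0   # assumed starting debt/GDP (illustrative only)
DEBT_GROWTH = 0.05  # assumed growth in the nominal debt stock (illustrative only)
REAL_GROWTH = 0.02  # example figure from the comment

high_inflation = debt_to_gdp_after_one_year(START_RATIO, DEBT_GROWTH, REAL_GROWTH, 0.08)
low_inflation = debt_to_gdp_after_one_year(START_RATIO, DEBT_GROWTH, REAL_GROWTH, 0.02)

print(f"nominal growth at 8% inflation: {nominal_growth(REAL_GROWTH, 0.08):.4f}")
print(f"debt/GDP after a year, 8% inflation: {high_inflation:.4f}")
print(f"debt/GDP after a year, 2% inflation: {low_inflation:.4f}")
```

with these assumed inputs the ratio falls under 8% inflation and rises under 2% inflation, even though real growth is identical in both cases. the sketch deliberately leaves out the other half of the point, which is that higher interest rates raise the cost of rolling the debt over afterwards.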

I do think that iterated with some unknown number of iterations is better than either single round or n-rounds at approximating what real world situations look like (and gets the more realistic result that cooperation is possible).

I agree that people are mostly not writing things out this way when they're making real world decisions, but that applies equally to CDT and TDT, and being sensitive to small things like this seems like a fully general critique of game theory.

I haven't watched the entire interview, but in the article you linked, all of the quotes from Bukele here seem to be referring to whether he has the power to unilaterally cause Garcia to end up in the United States, not whether he has the power to cause him to be released from prison.