They are made of repeating patterns

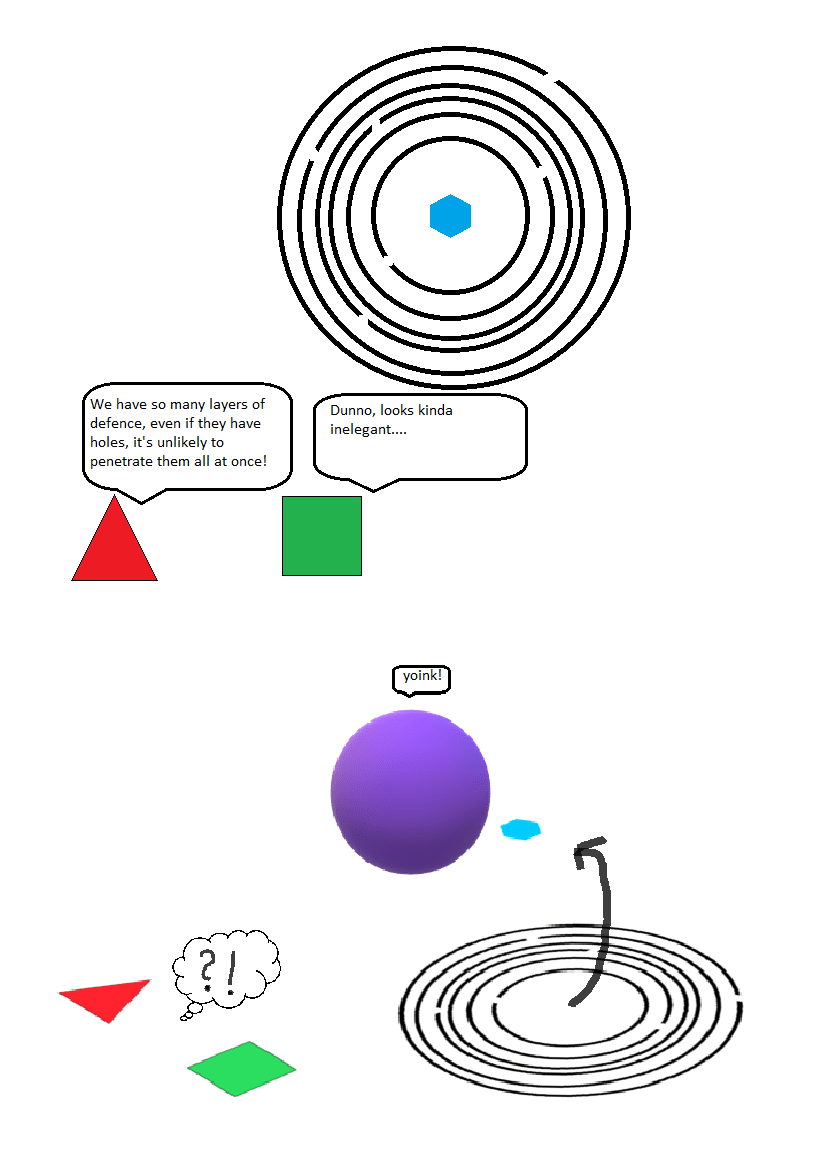

Epistemic status: an obvious parody.

— You won't believe me. I've found them.

— Whom?

— Remember that famous discovery by Professor Prgh'zhyne about pockets of baryonic matter in open systems that minimize the production of entropy within them? They went further and claimed that goal-oriented systems could emerge within these pockets. Crazy idea, but... it seems I've found them near this yellow dwarf!

— You're kidding. We know that a good optimizer of outcomes over a system's states should have a model of the system inside itself. We have entire computable universes within ourselves and still barely make sense of this chaos. How can they fit valuable knowledge inside tiny sequences of 10^23 atoms?

— They repeat patterns of behavior. They have multiple encodings of them and slightly change them over time in response to environmental changes in a simple mechanistic way.

— But that generalizes horribly!

— Indeed. When a pattern interacts with a new aspect of the environment, it degrades with high probability. Their first mechanism for generating patterns was basically "throw a bunch of random numbers into the environment, keep those that survived, change them slightly, repeat".

— ...

— Yeah, it's horrible from their perspective, I think.

— How do they exist without an agent-environment boundary? I'd be pretty worried if some piece of baryonic matter could smash into my thoughts at any moment.

— They kind of pretend they have an agent-environment boundary, using lipid layers.

— Those "lipid layers" have such strong bonds that they don't let any piece of matter inside? That's impressive!

— No, I was serious about them pretending. They need to pass matter through themselves; they're open systems and can't survive without external sources of free energy. They usually have specialized members of their population, an "immune system", that checks for alien patterns.

— Like we check for signatures of malign hypotheses in the universal prior?

— No, there's not enough c

It's the tiling agents + embedded agency agenda. They wanted to find a non-trivial, reflectively stable structure embedded in its environment, and decision theory lies at the intersection.