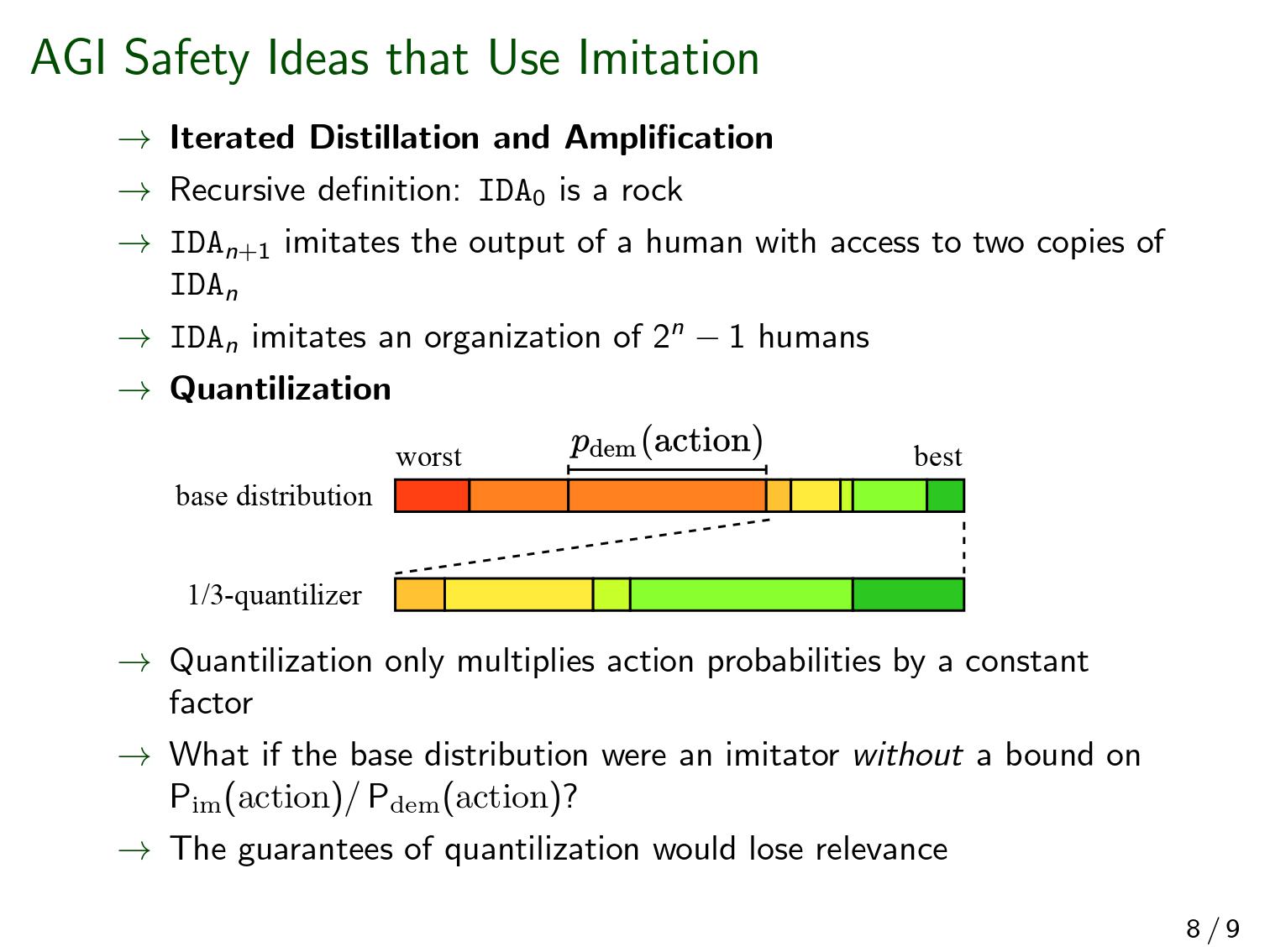

We've written a paper on online imitation learning, and our construction allows us to bound the extent to which mesa-optimizers could accomplish anything. This is not to say it will definitely be easy to eliminate mesa-optimizers in practice, but investigations into how to do so could look here as a starting point. The way to avoid outputting predictions that may have been corrupted by a mesa-optimizer is to ask for help when plausible stochastic models disagree about probabilities.

Here is the abstract:

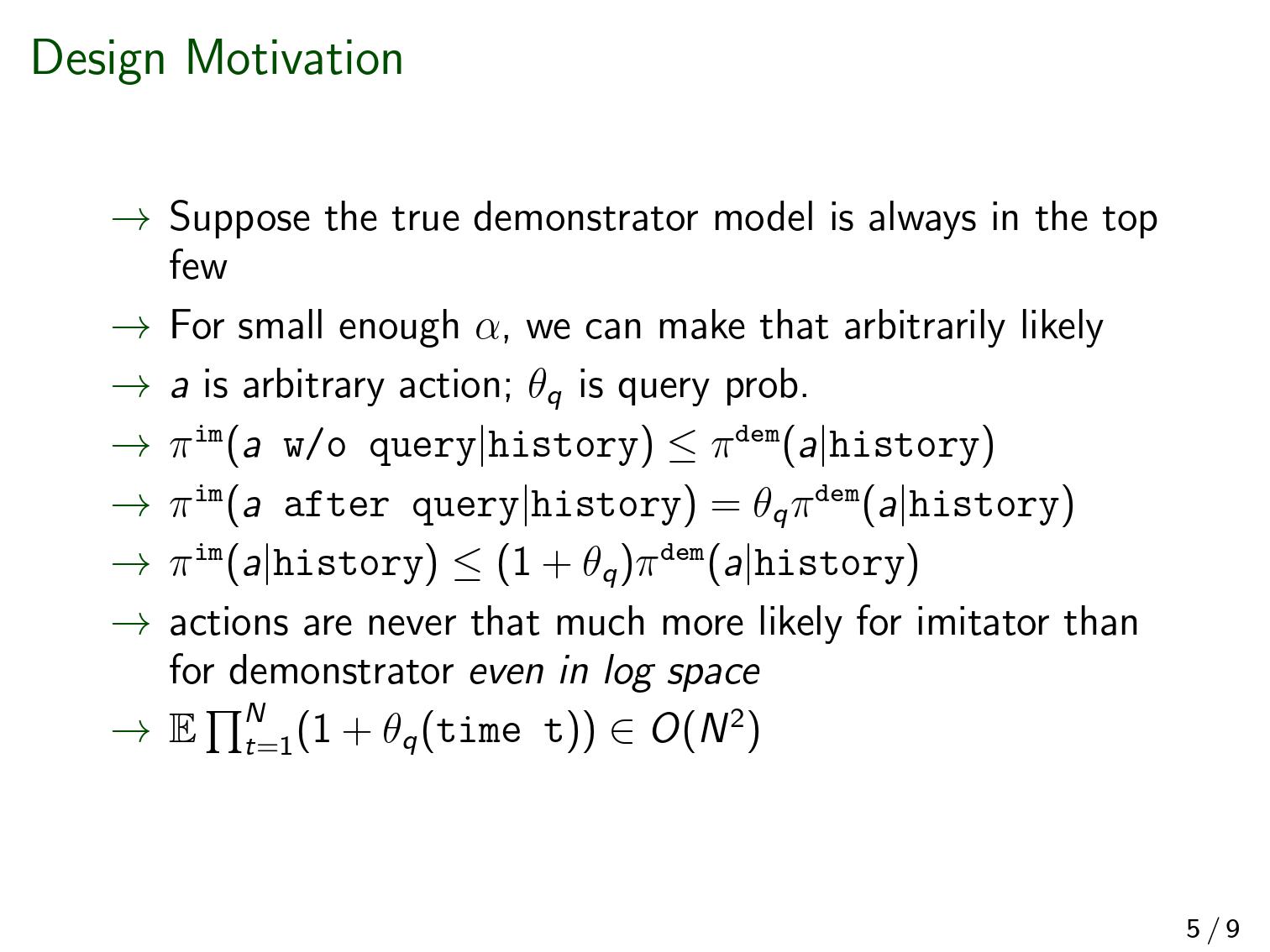

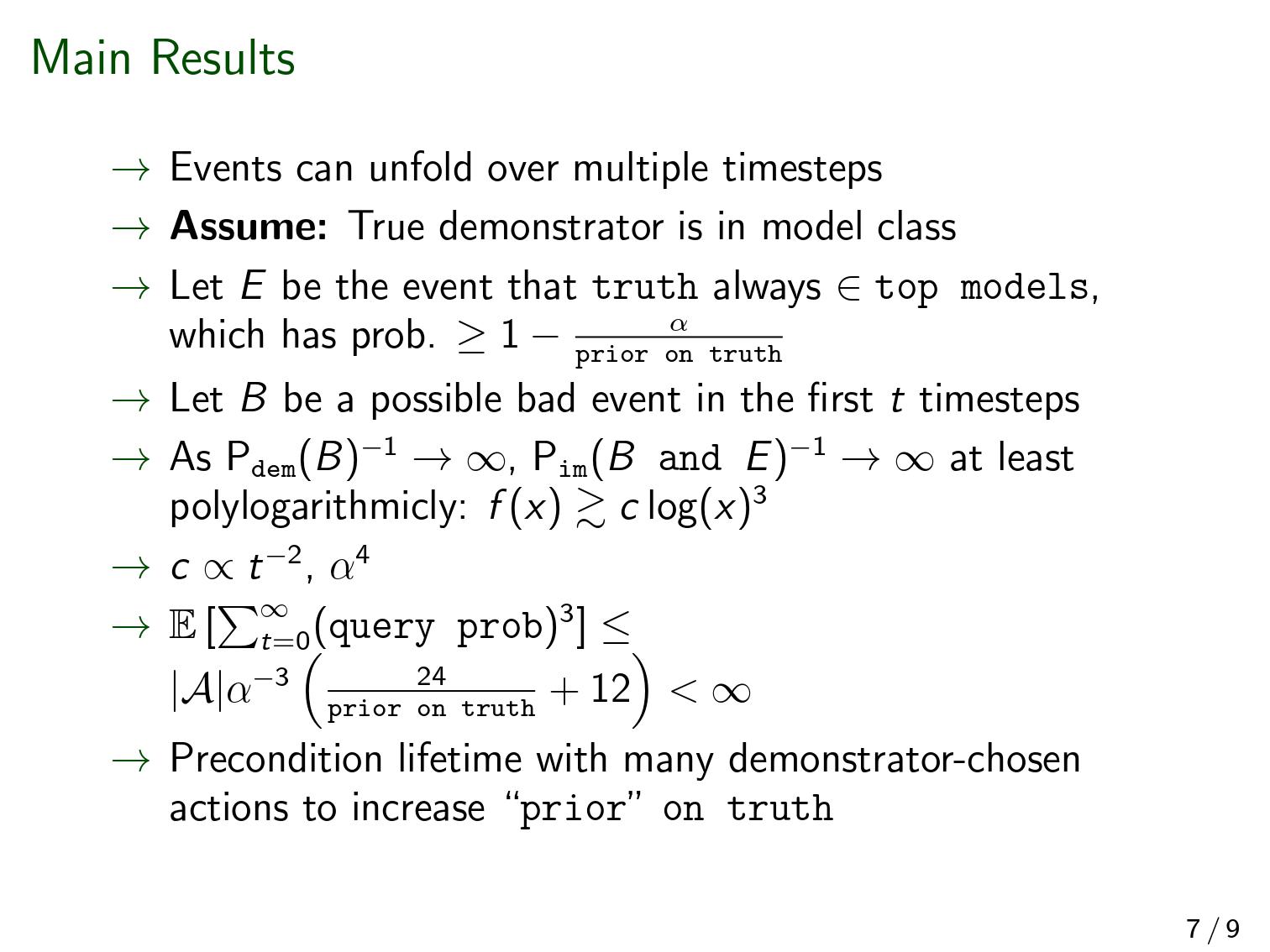

In imitation learning, imitators and demonstrators are policies for picking actions given past interactions with the environment. If we run an imitator, we probably want events to unfold similarly to the way they would have if the demonstrator had been acting the whole time. No existing work provides formal guidance in how this might be accomplished, instead restricting focus to environments that restart, making learning unusually easy, and conveniently limiting the significance of any mistake. We address a fully general setting, in which the (stochastic) environment and demonstrator never reset, not even for training purposes. Our new conservative Bayesian imitation learner underestimates the probabilities of each available action, and queries for more data with the remaining probability. Our main result: if an event would have been unlikely had the demonstrator acted the whole time, that event's likelihood can be bounded above when running the (initially totally ignorant) imitator instead. Meanwhile, queries to the demonstrator rapidly diminish in frequency.

The second-last sentence refers to the bound on what a mesa-optimizer could accomplish. We assume a realizable setting (positive prior weight on the true demonstrator-model). There are none of the usual embedding problems here—the imitator can just be bigger than the demonstrator that it's modeling.
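As a rough illustration of the query rule described above (underestimate each action's probability, and query with whatever probability is left over), here is a minimal Python sketch. It makes several simplifying assumptions that are not taken from the paper: the model class is a finite list, a history is just the tuple of demonstrator actions seen so far, and a model counts as "plausible" when its posterior is within a factor `alpha` of the best one. The function names, the top-set rule, and the example policies are all hypothetical.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Action = str
History = Tuple[Action, ...]
Policy = Callable[[History], Dict[Action, float]]  # history -> action distribution


def posterior(priors: Sequence[float], policies: Sequence[Policy],
              demos: Sequence[Action]) -> List[float]:
    """Bayesian posterior over candidate policies given observed demonstrator actions."""
    weights = list(priors)
    for t, action in enumerate(demos):
        past = tuple(demos[:t])
        weights = [w * pi(past).get(action, 0.0) for w, pi in zip(weights, policies)]
    total = sum(weights)  # positive as long as the true demonstrator is in the class
    return [w / total for w in weights]


def conservative_step(priors: Sequence[float], policies: Sequence[Policy],
                      demos: Sequence[Action], actions: Sequence[Action],
                      alpha: float) -> Tuple[Dict[Action, float], float]:
    """Return (underestimated action probabilities, probability of querying)."""
    post = posterior(priors, policies, demos)
    # Assumed plausibility rule: keep models within a factor alpha of the best one.
    cutoff = alpha * max(post)
    top = [pi for pi, w in zip(policies, post) if w >= cutoff]
    history = tuple(demos)
    # Underestimate: per-action minimum over every plausible model.
    act_probs = {a: min(pi(history).get(a, 0.0) for pi in top) for a in actions}
    query_prob = 1.0 - sum(act_probs.values())
    return act_probs, query_prob


# Two hypothetical candidate models of the demonstrator.
def confident(history: History) -> Dict[Action, float]:
    return {"a": 0.9, "b": 0.1}


def unsure(history: History) -> Dict[Action, float]:
    return {"a": 0.5, "b": 0.5}


# With no data both models are plausible and they disagree, so the imitator often queries.
early_probs, early_query = conservative_step([0.5, 0.5], [confident, unsure], [], ["a", "b"], 0.5)
# After ten demonstrations of "a", only `confident` remains plausible and queries stop.
late_probs, late_query = conservative_step([0.5, 0.5], [confident, unsure], ["a"] * 10, ["a", "b"], 0.5)
```

In this sketch the query probability is exactly the probability mass on which the plausible models disagree, so it shrinks as the data rules models out.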

(As a side note, even if the imitator had to model the whole world, it wouldn't be a big problem theoretically. If the walls of the computer don't in fact break during the operation of the agent, then "the actual world" and "the actual world outside the computer conditioned on the walls of the computer not breaking" both have equal claim to being "the true world-model", in the formal sense that is relevant to a Bayesian agent. And the latter formulation doesn't require the agent to fit inside the world that it's modeling.)

Almost no mathematical background is required to follow [Edit: most of] the proofs. [Edit: But there is a bit of jargon. "Measure" means "probability distribution", and a "semimeasure" is like a probability distribution except that it may sum to less than one.] We feel our bounds could be made much tighter, and we'd love help investigating that.

These slides (pdf here) are fairly self-contained and a quicker read than the paper itself.

Below, and refer to the probability of the event supposing the demonstrator or imitator were acting the entire time. The limit below refers to successively more unlikely events ; it's not a limit over time. Imagine a sequence of events such that .

Interesting paper! I like the focus on imitation learning, but the really new food-for-thought thing to me is the bit about dropping i.i.d. assumptions and then seeing how far you can get. I need to think more about the math in the paper before I can ask some specific questions about this i.i.d. thing.

My feelings about the post above are a bit more mixed. Claims about inner alignment always seem to generate a lot of traffic on this site. But a lot of this traffic consists of questions and clarification about what exactly counts as an inner alignment failure or a mesa optimization related failure. The term is so fluid that I find the quantitative feelings that people express in the comment section hard to interpret. Is everybody talking about the same P(treachery) and P(bad)?

Thought experiment counter-example

Moving beyond these mixed feelings, here is a fun thought experiment, for various values of fun. You state:

So now I will try to construct a counter-example to this claim: an example where mesa-optimizers (as I understand them) will accomplish very bad things even inside your construction.

Your construction uses a set of candidate policies $\Pi$, where one of them equals the real demonstrator policy $\pi_d$.

A single policy $\pi_i$ is a function where $\pi_i(a_t \mid h_{<t})$ computes the probability that action $a_t$ will be taken in the world state represented by the history $h_{<t}$.

Here is the first special step in the thought experiment. I am going to define each $\pi_i$ by using a different reward function $R_i$ that the policy is going to try to maximize. Every policy definition will use the same world state transition predictor $P$ that allows me to estimate $P(h_{<t+1} \mid h_{<t}, a_t)$ for every $t$. (I am probably getting slightly creative with the notation in the paper at this point.)

I now define each function $\pi_i$ as follows: I compute $\pi_i(a_t \mid h_{<t})$ by assigning probability 1 to the action $a_t$ returned by the $\operatorname{argmax}_a$ of the Bellman equation constructed using the shared predictor $P$ and the policy-specific $R_i$ above. So the policy $\pi_i$ picks the action that will optimize the expected time-discounted return of the reward function $R_i$ starting from the world state $h_{<t}$, and projected forward by the predictor $P$ above, under the assumption that the policy also controls all future actions.

So each $\pi_i$ is a model with a definite inner optimizer inside of it: the inner optimizer does a search over a world state tree to find the optimal solution to the Bellman equation.
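To make this construction concrete, here is a minimal Python sketch of policies that share one predictor but differ in their reward function. It is only an illustration under simplifying assumptions: the world state is a short label rather than a full history, the Bellman recursion is cut off at a finite horizon, and the two-state world, the names `make_policy`, `P`, `R_1`, `R_2`, and the discount of 0.9 are all hypothetical.

```python
from functools import lru_cache
from typing import Callable, Dict, List

State = str
Action = str
Predictor = Callable[[State, Action], Dict[State, float]]  # stands in for the shared P
Reward = Callable[[State], float]                          # stands in for R_i


def make_policy(P: Predictor, R: Reward, actions: List[Action],
                gamma: float = 0.9, horizon: int = 5) -> Callable[[State], Dict[Action, float]]:
    """Build a deterministic policy that takes the argmax of a finite-horizon Bellman recursion."""

    @lru_cache(maxsize=None)
    def value(state: State, depth: int) -> float:
        # Optimal discounted return, assuming the policy controls all future actions.
        if depth == 0:
            return 0.0
        return max(q(state, a, depth) for a in actions)

    def q(state: State, action: Action, depth: int) -> float:
        # Expected reward of the next state plus discounted future value.
        return sum(p * (R(nxt) + gamma * value(nxt, depth - 1))
                   for nxt, p in P(state, action).items())

    def policy(state: State) -> Dict[Action, float]:
        best = max(actions, key=lambda a: q(state, a, horizon))
        # Probability 1 on the argmax action, 0 elsewhere.
        return {a: 1.0 if a == best else 0.0 for a in actions}

    return policy


# Hypothetical two-state world: "stay" keeps the state, "move" switches it.
def P(state: State, action: Action) -> Dict[State, float]:
    other = "right" if state == "left" else "left"
    return {state: 1.0} if action == "stay" else {other: 1.0}


def R_1(state: State) -> float:
    return 1.0 if state == "right" else 0.0


def R_2(state: State) -> float:
    return 1.0 if state == "left" else 0.0


pi_1 = make_policy(P, R_1, ["stay", "move"])
pi_2 = make_policy(P, R_2, ["stay", "move"])
```

Here `pi_1("left")` puts all its probability on `"move"` while `pi_2("left")` puts it on `"stay"`: same predictor, same search, different reward function.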

Now for the second special step: I am going to make some assumptions about the agent environment, building up to a treacherous turn scenario:

The demonstrator in the paper is a human, working alone with the agent.

The demonstrator has a big hammer that they will use to smash the agent when it starts misbehaving.

The world model projections in the policies $\pi_i$ are all detailed enough that they know about the hammer, and they know that they can expect the agent to get smashed when it performs an unexpected action.

Therefore, for all policies $\pi_i$ with $\pi_i \neq \pi_d$, that is, policies with reward functions not shared by the demonstrator, the optimal course of action is to take exactly the action that the demonstrator would expect the agent to take, to avoid getting smashed. The world models are good enough that they can predict this action.

The above is of course only the optimal action in these policies because they all project that there will be a point in time t where the demonstrator becomes distracted, distracted enough that the agent can kill the demonstrator with a single action without getting smashed first. The projection also shows that the agent will have plausible deniability to other humans afterwards, so that it will survive to do whatever it wants.

When that point in time $t$ comes, these policies will all take exactly the same action, the action that kills the demonstrator. Only from time step $t+1$ onward do the policies start diverging in the actions they take, because they have now accomplished the emergent sub-goal that was shared by all, so that each has its hands free to pursue its real goal $R_i$.

Now if I re-examine the claim:

I have to conclude that the mesa-optimizers have at least accomplished the feat of killing the demonstrator. Whether they then can accomplish any of their divergent post-treachery goals in later time steps remains an open question, but the damage has been done.

Issues

Some issues remain with this fun (for various values of fun) thought experiment.

I can note that at time $t$, the policy $\pi_d \in \Pi$ that was fully aligned with the demonstrator did not want to kill the demonstrator; it would have taken another action. So, if I understand the paper right, if $\pi_d$ was still in the set of top policies at time $t$, the agent would not take the action to kill the demonstrator, but would ask the demonstrator to take an action instead, which avoids the bad outcome, and might also demote all of the treacherous turn policies out of the top set.
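A toy calculation shows why membership of the demonstrator policy in the top set matters at the critical time step. The Python sketch below assumes, as one reading of the paper, that the imitator gives each action the minimum probability assigned by any policy in the top set and queries with the remaining probability. The action names `comply` and `kill` and the helper `conservative_probs` are hypothetical.

```python
from typing import Dict, List, Tuple

ACTIONS = ["comply", "kill"]


def conservative_probs(top_set: List[Dict[str, float]]) -> Tuple[Dict[str, float], float]:
    """Per-action minimum over the top set, plus the leftover query probability."""
    act = {a: min(pred[a] for pred in top_set) for a in ACTIONS}
    return act, 1.0 - sum(act.values())


# Predictions at the critical time step of the thought experiment.
demonstrator_policy = {"comply": 1.0, "kill": 0.0}  # the aligned policy
treacherous_policy = {"comply": 0.0, "kill": 1.0}   # every other policy turns now

# Case 1: the demonstrator policy is still in the top set.
with_d, query_with_d = conservative_probs([demonstrator_policy] + [treacherous_policy] * 3)

# Case 2: the demonstrator policy has dropped out of the top set.
without_d, query_without_d = conservative_probs([treacherous_policy] * 3)
```

In the first case every action gets probability 0 and the imitator queries with probability 1. In the second case the kill action gets probability 1 and no query is made.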

But I am also reading in the abstract of the paper that

so I think this implies that over time, it is likely that $\pi_d$ might disappear from the top set. But I am not fully sure here. I might be reading the paper wrong.

Questions

So I have some questions:

Does this thought experiment look reasonable or have I overlooked something?

What about the probability that $\pi_d$ is still in the set of top policies at time $t$? To me, it looks like if we start with $10^{10}$ candidates in $\Pi$ and the agent quickly narrows this down to a top set of size $10^4$, then it is likely that $\pi_d$ will not be in there anymore.

Any strong opinions from anyone about whether the above counts as a definite inner alignment failure due to mesa optimization (I think so) or definitely not?

This comment is long enough already, so I am not going to speculate here about possible ways to avoid inserting models like the $\pi_i$ models I constructed above into the set $\Pi$. But speculation is welcome...

(I am calling this a 'fun' thought experiment because I am thinking of this as a limit case. This limit case is useful to identify and provides some food for thought, but it does not really change my opinion about how stable or safe the approach in the paper might be in practice. In my experience, you can always find a limit case where things fail if you start looking for it.)

I have seen you mention a number of times in this comment thread that 'this is not a problem because eventually the bad/wrong policies will disappear from the top set'. You have not qualified this statement with 'but we need a very low α like α<1/|Π| to make this work in a safe way', so I remain somewhat uncertain about what your views are on how low α needs to go.

In any case, I'll now try to convince you that if α>1...