The Natural Plan paper has an insane number of errors in it. Reading it feels like I'm going crazy.

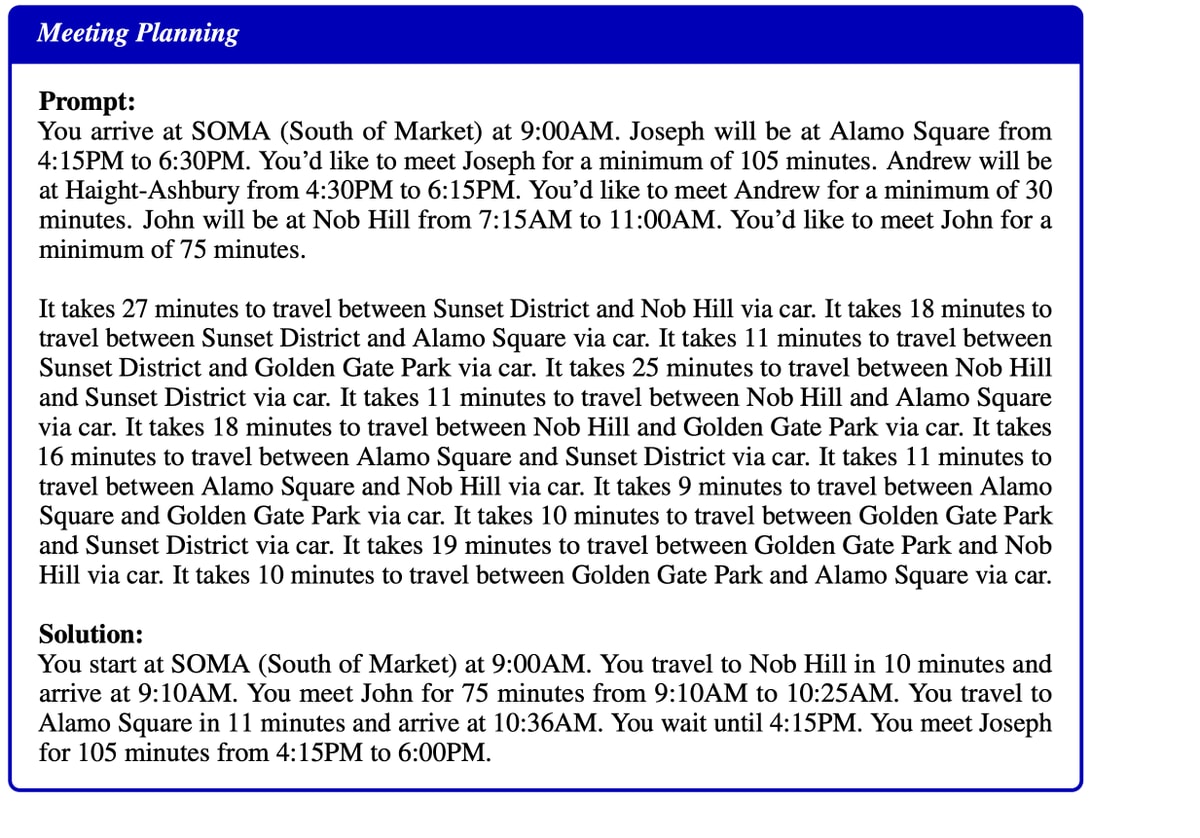

This meeting planning task seems unsolvable:

The solution requires traveling from SOMA to Nob Hill in 10 minutes, but the text doesn't mention the travel time between SOMA and Nob Hill. Also, the solution doesn't mention meeting Andrew at all, even though that was part of the requirements.

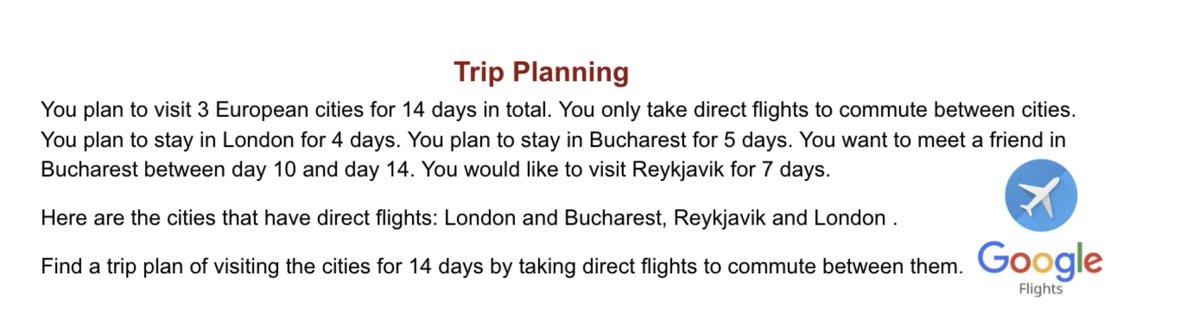

Here's an example of the trip planning task:

The trip is supposed to be 14 days, but it requires visiting Bucharest for 5 days, London for 4 days, and Reykjavik for 7 days. I guess the point is that you can spend a day in multiple cities, but that doesn't match an intuitive understanding of what it means to "spend N days" in a city. Also, by that logic you could spend a total of 28 days in different cities by commuting every day, which contradicts the authors' claim that each problem only has one solution.
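If a travel day counts toward both the city you leave and the city you arrive in (the reading guessed at above), the totals reconcile: 5 + 4 + 7 = 16 city-days, which is 14 calendar days plus the 2 travel days that get counted twice. A minimal Python sketch of that counting rule; the day-by-day itinerary and the `city_days` helper are hypothetical illustrations, not the paper's format:

```python
from collections import Counter

def city_days(itinerary):
    """Count days per city. Each entry in `itinerary` lists the cities you
    are in on that day; a travel day lists two cities and counts for both."""
    counts = Counter()
    for cities_today in itinerary:
        for city in cities_today:
            counts[city] += 1
    return counts

# Hypothetical 14-day itinerary: fly Bucharest -> London on day 5,
# then London -> Reykjavik on day 8.
itinerary = (
    [["Bucharest"]] * 4
    + [["Bucharest", "London"]]
    + [["London"]] * 2
    + [["London", "Reykjavik"]]
    + [["Reykjavik"]] * 6
)

counts = city_days(itinerary)
print(len(itinerary), dict(counts), sum(counts.values()))
```

Under this rule the city-day total is always the trip length plus the number of travel days, so a trip with a flight on every one of its 14 days would total 28.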

IMO, these considerations do lengthen expected timelines, but not by enough, or with enough certainty, that we can ignore the possibility of very short timelines. The distribution of timelines matters a lot, not just the point estimate.

We are still not directing enough of our resources to this possibility if I'm right about that. We have limited time and brainpower in alignment, but it looks to me like relatively little is directed at scenarios in which the combination of scaling and scaffolding language models fairly quickly achieves competent agentic AGI. More on this below.

Excellent post, big upvote.

These are good questions, and you've explained them well.

I have a bunch to say on this topic. Some of it I'm not sure I should say in public, as even being convincing about the viability of this route will cause more people to work on it. So to be vague: I think applying cognitive psychology and cognitive neuroscience systems thinking to the problem suggests many routes to scaffold around the weaknesses of LLMs. I suspect the route from here to AGI is disjunctive; several approaches could work relatively quickly. There are doubtless challenges to implementing that scaffolding, as people have e...

A few thoughts

- actually, these considerations mostly increase uncertainty and variance about timelines; if LLMs are missing some magic sauce, it is possible that smaller systems with the magic sauce could be competitive, and we could get really powerful systems sooner than Leopold's lines predict

- my take on one important thing that makes current LLMs different from humans is the gap described in Why Simulator AIs want to be Active Inference AIs; while that post intentionally avoids having a detailed scenario part, I think the ontology it introduces is better for thinking about this than scaffolding

- not sure if this is clear to everyone, but I would expect the discussion of unhobbling to be one of the places where Leopold would need to stay vague to avoid breaching OpenAI confidentiality agreements; for example, if OpenAI was putting a lot of effort into making LLM-like systems better at agency, I would expect him not to describe specific research and engineering bets

My short timelines have their highest probability path going through:

Current LLMs get scaled enough that they are capable of automating search for new and better algorithms.

Somebody does this search and finds something dramatically better than transformers.

A new model trained on this new architecture repeats the search, but even more competently. An even better architecture is found.

The new model trained on this architecture becomes AGI.

So it seems odd to me that so many people are focused on transformer-based LLMs becoming AGI just through scaling. That seems theoretically possible to me, but I expect it to be so much less efficient that it will take longer. Thus, I don't expect that path to pay off before algorithm search has rendered it irrelevant.

My crux is that LLMs are inherently bad at search tasks over a new domain. Thus, I don't expect LLMs to scale to improve search.

Anecdotal evidence: I've used LLMs extensively and my experience is that LLMs are great at retrieval but terrible at suggestion when it comes to ideas. You usually get something resembling an amalgamation of Google searches rather than suggestions from some kind of insight.

It might also be a crux for alignment, since scalable alignment schemes like IDA and Debate rely on "task decomposition", which seems closely related to "planning" and "reasoning". I've been wondering about the slow pace of progress of IDA and Debate. Maybe it's part of the same phenomenon as the underwhelming results of AutoGPT and BabyAGI?

We do see improvement with scale here, but if these problems are obfuscated, performance of even the biggest LLMs drops to almost nothing

You note something similar, but I think it is pretty notable how much harder the obfuscated problems would be for humans:

...Mystery Blocksworld Domain Description (Deceptive Disguising)

I am playing with a set of objects. Here are the actions I can do:

- Attack object

- Feast object from another object

- Succumb object

- Overcome object from another object

I have the following restrictions on my actions:

To perform Attack action, the following facts need to be true:

- Province object

- Planet object

- Harmony

Once Attack action is performed:

- The following facts will be true: Pain object

- The following facts will be false: Province object, Planet object, Harmony

To perform Succumb action, the following facts need to be true:

- Pain object

Once Succumb action is performed:

- The following facts will be true: Province object, Planet object, Harmony

- The following facts will be false: Pain object

To perform Overcome action, the following needs to be true:

- Province other object

- Pain object

Once Overcome action is performed:

- The following will be true: Harmon
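Stripped of its nonsense vocabulary, each quoted action is an ordinary STRIPS-style operator: a set of preconditions, an add list, and a delete list. A minimal Python sketch encoding only the two fully quoted actions (Attack and Succumb; the Overcome definition is cut off above and Feast's is not shown), applied to a single hypothetical object `a`. Read this way, Attack has the same shape as classic blocksworld pick-up and Succumb as put-down; that correspondence is inferred from the quoted preconditions and effects, not stated in the quote:

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Action:
    name: str
    pre: frozenset     # facts that must hold before the action
    add: frozenset     # facts made true by the action
    delete: frozenset  # facts made false by the action

def applicable(state, action):
    return action.pre <= state

def apply_action(state, action):
    if not applicable(state, action):
        raise ValueError(f"{action.name} is not applicable")
    return (state - action.delete) | action.add

# Facts about one hypothetical object "a", spelled as in the quoted domain.
attack = Action(
    "Attack a",
    pre=frozenset({"Province a", "Planet a", "Harmony"}),
    add=frozenset({"Pain a"}),
    delete=frozenset({"Province a", "Planet a", "Harmony"}),
)
succumb = Action(
    "Succumb a",
    pre=frozenset({"Pain a"}),
    add=frozenset({"Province a", "Planet a", "Harmony"}),
    delete=frozenset({"Pain a"}),
)

start = frozenset({"Province a", "Planet a", "Harmony"})
after_attack = apply_action(start, attack)
after_succumb = apply_action(after_attack, succumb)
print(sorted(after_attack), sorted(after_succumb))
```

Attack followed by Succumb returns to the starting state, just as pick-up followed by put-down does; a symbolic planner treats the renamed and original domains identically.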

I think you’re just saying here that the model doesn’t place all its prediction mass on one token but instead spreads it out, correct?

Yes. For a base model. A tuned/RLHFed model however is doing something much closer to that ('flattened logits'), and this plays a large role in the particular weirdnesses of those models, especially as compared to the originals (eg. it seems like maybe they suck at any kind of planning or search or simulation because they put all the prediction mass on the max-arg token rather than trying to spread mass out proportionately and so if that one token isn't 100% right, the process will fail).

Another possible reading is that you’re saying that the model tries to actively avoid committing to one possible meaning (ie favors next tokens that maintain superposition)

Hm, I don't think base models would necessarily do that, no. I can see the tuned models having the incentives to train them to do so (eg. the characteristic waffle and non-commitment and vagueness are presumably favored by raters), but not the base models.

They are non-myopic, so they're incentivized to plan ahead, but only insofar as that predicts the next token in the original training data...

Given that I think LLMs don't generalize, I was surprised how compelling Aschenbrenner's case sounded when I read it (well, the first half of it. I'm short on time...). He seemed to have taken all the same evidence I knew about it, and arranged it into a very different framing. But I also felt like he underweighted criticism from the likes of Gary Marcus. To me, the illusion of LLMs being "smart" has been broken for a year or so.

To the extent LLMs appear to build world models, I think what you're seeing is a bunch of disorganized neurons and connections that, when probed with a systematic method, can be mapped onto things that we know a world model ought to contain. A couple of important questions are

- the way that such a world model was formed and

- how easily we can figure out how to form those models better/differently[1].

I think LLMs get "world models" (which don't in fact cover the whole world) in a way that is quite unlike the way intelligent humans form their own world models―and more like how unintelligent or confused humans do the same.

The way I see it, LLMs learn in much the same way a struggling D student learns (if I understand correctly how such a student learn...

Curated.

This is a fairly straightforward point, but one I haven't seen written up before and I've personally been wondering a bunch about. I appreciated this post both for laying out the considerations pretty thoroughly, including a bunch of related reading, and for laying out some concrete predictions at the end.

I always feel like self-play on math with a proof checker like Agda or Coq is a promising way to make LLMs superhuman on these areas. Do we have any strong evidence that it's not?

I believe there is considerable low-hanging algorithmic fruit that can make LLMs better at reasoning tasks. I think these changes will involve modifications to the architecture + training objectives. One major example is highlighted by the work of https://arxiv.org/abs/2210.10749, which shows that Transformers can only heuristically implement algorithms for most interesting problems in the computational complexity hierarchy. With recurrence (e.g. through CoT https://arxiv.org/abs/2310.07923) these problems can be avoided, which might lead to much better generic, domain-independent reasoning capabilities. A small number of people are already working on such algorithmic modifications to Transformers (e.g. https://arxiv.org/abs/2403.09629).

This is to say that we haven't really explored small variations on the current LLM paradigm, and it's quite likely that the "bugs" we see in their behavior could be addressed through manageable algorithmic changes + a few OOMs more of compute. For this reason, if they make a big difference, I could see capabilities changing quite rapidly once people figure out how to implement them. I think scaling + a little creativity is alive and well as a pathway to nearish-term AGI.

How much does o1-preview update your view? It's much better at Blocksworld for example.

I think that too much scaffolding can obfuscate a lack of general capability, since it allows the system to simulate a much more capable agent - under narrow circumstances and assuming nothing unexpected happens.

Consider the Egyptian Army in '73. With exhaustive drill and scripting of unit movements, they were able to simulate the capabilities of an army with a competent officer corps, up until they ran out of script, at which point they reverted to a lower level of capability. This is because scripting avoids officers on the ground needing to make complex tactic...

All LLMs to date fail rather badly at classic problems of rearranging colored blocks.

It's pretty unclear to me that the LLMs do much worse than humans at this task.

They establish the human baseline by picking one problem at random out of 600 and evaluating 50 humans on it. (Why only one problem!? It would be vastly more meaningful if you checked 5 problems with 10 humans assigned to each problem!) 78% of humans succeed.
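To put a rough number on how little a single problem pins down: 78% of 50 participants is 39 successes, and even the purely binomial uncertainty on that one problem's success rate is wide. A minimal Python sketch using the standard Wilson score interval (an illustrative choice, not something the paper reports). It captures only sampling noise within that one problem and says nothing about how much difficulty varies between problems, which is the objection above:

```python
import math

def wilson_interval(successes, n, z=1.96):
    """Approximate 95% Wilson score interval for a binomial proportion."""
    p = successes / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return center - half, center + half

low, high = wilson_interval(39, 50)
print(f"{low:.2f} to {high:.2f}")  # roughly 0.65 to 0.87
```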

(Human participants are from Prolific.)

On randomly selected problems, GPT-4 gets 35% right and I bet this improves with better promptin...

Current LLMs do quite badly on the ARC visual puzzles, which are reasonably easy for smart humans.

We do not in fact have strong evidence for this. There does not exist any baseline for ARC puzzles among humans, smart or otherwise, just a claim that two people the designers asked to attempt them were able to solve them all. It seems entirely plausible to me that the best score on that leaderboard is pretty close to the human median.

Edit: I failed to mention that there is a baseline on the test set, which is different from the eval set th...

Aschenbrenner argues that we should expect current systems to reach human-level given further scaling

In https://situational-awareness.ai/from-gpt-4-to-agi/#Unhobbling, "scaffolding" is explicitly named as a thing being worked on, so I take it that progress in scaffolding is already included in the estimate. Nothing about that estimate is "just scaling".

And AFAICT neither Chollet nor Knoop made any claims to the effect that "scaffolding outside of LLMs won't be done in the next 2 years" => what am I missing that is the source of hope for longer timelines, please?

I think that people don't account for the fact that scaling means decreasing space for algorithmic experiments; you can't train GPT-5 10000 times making small tweaks each time. Some algorithmic improvements only show an effect at large scales or when they are implemented in training from scratch; therefore, such improvements are hard to find.

so far results have been underwhelming

I work at a startup that would claim otherwise.

For example, the construct of "have the LLM write Python code, then have a Python interpreter execute it" is a powerful one (as Ryan Greenblatt has also shown), and will predictably get better as LLMs scale to be better at writing Python code with a lower density of bugs per N lines of code, and better at debugging it.
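A minimal sketch of that construct in Python. The `generate_code` function is a hypothetical stand-in for an LLM call (it returns fixed strings so the example runs offline, with a deliberate bug on the first try); a real system would call a model API and run the code in a proper sandbox rather than a bare `exec`. Any traceback is fed back to the "model" so it can debug:

```python
import traceback

def generate_code(task, feedback=None):
    """Hypothetical stand-in for an LLM call. A real scaffold would send
    `task` (plus any error `feedback`) to a model and return its code."""
    if feedback is None:
        # First attempt contains a bug: a misspelled variable name.
        return "result = sum(x * x for x in range(n + 1))\nanswer = reslt"
    return "result = sum(x * x for x in range(n + 1))\nanswer = result"

def solve_with_interpreter(task, inputs, max_attempts=3):
    feedback = None
    for attempt in range(1, max_attempts + 1):
        code = generate_code(task, feedback)
        namespace = dict(inputs)
        try:
            exec(code, namespace)  # NOT a sandbox; illustration only
            return namespace["answer"], attempt
        except Exception:
            # Hand the traceback back to the "model" for the next attempt.
            feedback = traceback.format_exc()
    raise RuntimeError("no working program within the attempt budget")

answer, attempts = solve_with_interpreter("sum of squares 0..n", {"n": 10})
print(answer, attempts)
```

The design point is that correctness is checked by actually running the code rather than by the model's own judgment, so each improvement in bug rate or debugging ability compounds across the retry loop.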

@Daniel Tan raises an interesting possibility here, that LLMs are capable of general reasoning about a problem if and only if they've undergone grokking on that problem. In other words, grokking is just what full generalization on a topic is (Daniel please correct me if that's a misrepresentation).

If that's the case, my initial guess is that we'll only see LLMs doing general reasoning on problems that are relatively small, simple (in the sense that the fully general algorithm is simple), and common in the training data, just because grokking requires...

This issue is further complicated by the fact that humans aren't fully general reasoners without tool support either.

I think the discussion, not specifically here but just in general, vastly underestimates the significance of this point. It isn't like we expect humans to solve meeting planning problems in our heads. I use Calendly, or Outlook's scheduling assistant and calendar. I plug all the destinations into our GPS and re-order them until the time looks low enough. One of the main reasons we want to use LLMs for these tasks at all is that, even with to...

I think it is plausible, but not obvious, that large language models have a fundamental issue with reasoning. However, I don't think this greatly impacts timelines. Here is my thinking:

I think timelines are fundamentally driven by scale and compute. We have a lot of smart people working on the problem, and there are a lot of obvious ways to address these limitations. Of course, given how research works, most of these ideas won't work, but I am skeptical of the idea that such a counter-intuitive paradigm shift is needed that nobody has e...

Thanks for a thoughtful article. Intuitively, LLMs are similar to our own internal verbalization. We often turn to verbalizing to handle various problems when we can't keep our train of thought by other means. However, it's clear they only cover a subset of problems; many others can't be tackled this way. Instead, we lean on intuition, a much more abstract and less understood process that generates outcomes based on even more compressed knowledge. It feels that the same is true for LLMs. Without fully understanding intuition and the kind of data transformations and compressions it involves, reaching true AGI could be impossible.

Note that this is different from the (also very interesting) question of what LLMs, or the transformer architecture, are capable of accomplishing in a single forward pass. Here we're talking about what they can do under typical auto-regressive conditions like chat.

I would appreciate if the community here could point me to research that agrees or disagrees with my claim and conclusions, below.

Claim: one pass through a transformer (of a given size) can only do a finite number of reasoning steps.

Therefore: If we want an agent that can plan over an unbounded n...

A smart human given a long enough lifespan, sufficient motivation, and the ability to respawn could take over the universe (let's assume we are starting in today's society, but all technological progress is halted, except when it comes from that single person).

An LLM can't currently.

Maybe we can better understand what we mean by general reasoning by looking at concrete examples of what we expect humans to be capable of achieving in the limit.

How general are LLMs? It's important to clarify that this is very much a matter of degree.

I would like to see attempts to come up with a definition of "generality". Animals seem to be very general, despite not being very intelligent compared to us.

...Certainly state-of-the-art LLMs do an enormous number of tasks that, from a user perspective, count as general reasoning. They can handle plenty of mathematical and scientific problems; they can write decent code; they can certainly hold coherent conversations; they can answer many counterfactual questions;

Aschenbrenner's argument starts to be much more plausible

To be fair, Aschenbrenner explicitly mentions that what he terms "unhobbling" of LLMs will also be needed: he just expects progress in that to continue. The question then is whether the various weaknesses you've mentioned (and any other important ones) will be overcome by scaling, unhobbling, or a combination of the two.

A neural net can approximate any function. Given that LLMs are neural nets, I don't see why they can't also approximate any function/behaviour if given the right training data. Given how close they are getting to reasoning with basically unsupervised learning on a range of qualities of training data, I think they will continue to improve, and reach impressive reasoning abilities. I think of the "language" part of an LLM as like a communication layer on top of a general neural net. Being able to "think out loud" with a train of thought and a scratch pad to ...

In the 'Evidence for Generality' section I point to a paper that demonstrates that the transformer architecture is capable of general computation (in terms of the types of formal languages it can express). A new paper, 'Autoregressive Large Language Models are Computationally Universal', both a) shows that this is true of LLMs in particular, and b) makes the point clearer by demonstrating that LLMs can simulate Lag systems, a formalization of computation which has been shown to be equivalent to the Turing machine (though less well-known).
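For readers who haven't met them: roughly speaking, a lag system with lag m reads the first m symbols of a string, appends the word that a rule table assigns to that m-symbol prefix, and then deletes only the first symbol, repeating until no rule applies. (Tag systems are the close cousin that read one symbol but delete several per step.) A minimal Python sketch of that loop; the rule table is a made-up toy, not one from the paper:

```python
def run_lag_system(rules, word, m, max_steps=1000):
    """Simulate a lag system with lag m.

    rules: dict mapping an m-symbol prefix (a string) to the string appended.
    Each step looks up the first m symbols, appends the matching output, and
    deletes the first symbol. Halts when the word is shorter than m or the
    prefix has no rule. Returns the trace of successive words."""
    trace = [word]
    for _ in range(max_steps):
        if len(word) < m or word[:m] not in rules:
            break
        word = word[1:] + rules[word[:m]]
        trace.append(word)
    return trace

# Toy lag-2 rules over {a, b}; "ab" has no rule, so reaching it halts.
toy_rules = {"aa": "b", "ba": "", "bb": "a"}
for w in run_lag_system(toy_rules, "bba", m=2):
    print(w)
```

Each step depends only on a bounded window at the front of the string and writes only at the end, which is part of why this formalism maps naturally onto autoregressive generation.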

I would definitely agree that if scale was the only thing needed, that could drastically shorten the timeline as compared to having to invent a completely new paradigm of AI, but even then that wouldn't necessarily make it fast. Pure scale could still be centuries, or even millennia away assuming it would even work.

We have enough scaling to see how that works (massively exponential resources for linear gains), and given that extreme errors in reasoning (that are obvious to both experts and laypeople alike) are only lightly abated during massive amounts of ...

Nicky Case points me to 'Emergent Analogical Reasoning in Large Language Models', from Webb et al, late 2022, which claims that GPT-3 does better than human on a version of Raven's Standard Progressive Matrices, often considered one of the best measures of non-verbal fluid intelligence. I somewhat roll to disbelieve, because that would seem to conflict with eg LLMs' poor performance on ARC-AGI. There's been some debate about the paper:

Response: Emergent analogical reasoning in large language models (08/23)

When world chess champion Anand won arguably his best and most creative game, with black, against Aronian, he said in an interview afterward "yeah it's no big deal the position was the same as in [slightly famous game from 100 years ago]".

Of course the similarity is only visible for genius chess players.

So maybe pattern matching and novel thinking are, in fact, the same thing.

Added to the 'Evidence for generality' section after discovering this paper:

One other thing worth noting is that we know from 'The Expressive Power of Transformers with Chain of Thought' that the transformer architecture is capable of general reasoning (though bounded) under autoregressive conditions. That doesn't mean LLMs trained on next-token prediction learn general reasoning, but it means that we can't just rule it out as impossible.

Jacob Steinhardt on predicting emergent capabilities:

...There’s two principles I find useful for reasoning about future emergent capabilities:

- If a capability would help get lower training loss, it will likely emerge in the future, even if we don’t observe much of it now.

- As ML models get larger and are trained on more and better data, simpler heuristics will tend to get replaced by more complex heuristics. . . This points to one general driver of emergence: when one heuristic starts to outcompete another. Usually, a simple heuristic (e.g. answering directly) w


No, I mean that quite literally. From Appendix B of 'Climbing Towards NLU':

'Tasks like DROP (Dua et al., 2019) require interpretation of language into an external world; in the case of DROP, the world of arithmetic. To get a sense of how existing LMs might do at such a task, we let GPT-2 complete the simple arithmetic problem Three plus five equals. The five responses below, created in the same way as above, show that this problem is beyond the current capability of GPT-2, and, we would argue, any pure LM.'

I found it because I went looking for falsifiable claims in 'On the Dangers of Stochastic Parrots' and couldn't really find any, so I went back further to 'Climbing Towards NLU' on Ryan Greenblatt and Gwern's recommendation.

Four-Month Update

[EDIT: I believe that this paper looking at o1-preview, which gets much better results on both blocksworld and obfuscated blocksworld, should update us significantly toward LLMs being capable of general reasoning. See update post here.]

Short Summary

LLMs may be fundamentally incapable of fully general reasoning, and if so, short timelines are less plausible.

Longer summary

There is ML research suggesting that LLMs fail badly on attempts at general reasoning, such as planning problems, scheduling, and attempts to solve novel visual puzzles. This post provides a brief introduction to that research, and asks:

If this is a real and fundamental limitation that can't be fully overcome by scaffolding, we should be skeptical of arguments like Leopold Aschenbrenner's (in his recent 'Situational Awareness') that we can just 'follow straight lines on graphs' and expect AGI in the next few years.

Introduction

Leopold Aschenbrenner's recent 'Situational Awareness' document has gotten considerable attention in the safety & alignment community. Aschenbrenner argues that we should expect current systems to reach human-level given further scaling[1], and that it's 'strikingly plausible' that we'll see 'drop-in remote workers' capable of doing the work of an AI researcher or engineer by 2027. Others hold similar views.

Francois Chollet and Mike Knoop's new $500,000 prize for beating the ARC benchmark has also gotten considerable recent attention in AIS[2]. Chollet holds a diametrically opposed view: that the current LLM approach is fundamentally incapable of general reasoning, and hence incapable of solving novel problems. We only imagine that LLMs can reason, Chollet argues, because they've seen such a vast wealth of problems that they can pattern-match against. But LLMs, even if scaled much further, will never be able to do the work of AI researchers.

It would be quite valuable to have a thorough analysis of this question through the lens of AI safety and alignment. This post is not that[3], nor is it a review of the voluminous literature on this debate (from outside the AIS community). It attempts to briefly introduce the disagreement, some evidence on each side, and the impact on timelines.

What is general reasoning?

Part of what makes this issue contentious is that there's not a widely shared definition of 'general reasoning', and in fact various discussions of this use various terms. By 'general reasoning', I mean to capture two things. First, the ability to think carefully and precisely, step by step. Second, the ability to apply that sort of thinking in novel situations[4].

Terminology is inconsistent between authors on this subject; some call this 'system II thinking'; some 'reasoning'; some 'planning' (mainly for the first half of the definition); Chollet just talks about 'intelligence' (mainly for the second half).

This issue is further complicated by the fact that humans aren't fully general reasoners without tool support either. For example, seven-dimensional tic-tac-toe is a simple and easily defined system, but incredibly difficult for humans to play mentally without extensive training and/or tool support. Generalizations that are in-distribution for humans seem like something that any system should be able to do; generalizations that are out-of-distribution for humans don't feel as though they ought to count.
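To see why seven-dimensional tic-tac-toe is so hard to hold in your head, assume the usual 3 cells per side: the board has 3^7 = 2,187 cells, and a standard counting argument gives ((k + 2)^n - k^n) / 2 winning lines on a k^n board, which is 37,969 for k = 3, n = 7. A short Python sketch that checks that formula by brute-force enumeration on small boards (the function names are illustrative):

```python
from itertools import product

def winning_lines_formula(k, n):
    return ((k + 2) ** n - k ** n) // 2

def winning_lines_brute(k, n):
    """Enumerate every line as a frozenset of cells and count distinct ones.
    Along a line, each coordinate is fixed, runs upward, or runs downward."""
    options = [("fixed", c) for c in range(k)] + [("up", None), ("down", None)]
    lines = set()
    for pattern in product(options, repeat=n):
        if all(kind == "fixed" for kind, _ in pattern):
            continue  # every coordinate fixed: a single cell, not a line
        cells = []
        for i in range(k):
            cell = []
            for kind, c in pattern:
                if kind == "fixed":
                    cell.append(c)
                elif kind == "up":
                    cell.append(i)
                else:
                    cell.append(k - 1 - i)
            cells.append(tuple(cell))
        lines.add(frozenset(cells))  # a line and its reverse collapse here
    return len(lines)

for k, n in [(3, 2), (3, 3), (4, 3)]:
    print(k, n, winning_lines_brute(k, n), winning_lines_formula(k, n))
print(3 ** 7, winning_lines_formula(3, 7))
```

The small cases reproduce the familiar counts (8 lines for ordinary tic-tac-toe, 76 for 4x4x4), while the seven-dimensional board has tens of thousands of lines to track.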

How general are LLMs?

It's important to clarify that this is very much a matter of degree. Nearly everyone was surprised by the degree to which the last generation of state-of-the-art LLMs like GPT-3 generalized; for example, no one I know of predicted that LLMs trained on primarily English-language sources would be able to do translation between languages. Some in the field argued as recently as 2020 that no pure LLM would ever be able to correctly complete "Three plus five equals". The question is how general they are.

Certainly state-of-the-art LLMs do an enormous number of tasks that, from a user perspective, count as general reasoning. They can handle plenty of mathematical and scientific problems; they can write decent code; they can certainly hold coherent conversations; they can answer many counterfactual questions; they even predict Supreme Court decisions pretty well. What are we even talking about when we question how general they are?

The surprising thing we find when we look carefully is that they fail pretty badly when we ask them to do certain sorts of reasoning tasks, such as planning problems, that would be fairly straightforward for humans. If in fact they were capable of general reasoning, we wouldn't expect these sorts of problems to present a challenge. Therefore it may be that all their apparent successes at reasoning tasks are in fact simple extensions of examples they've seen in their truly vast corpus of training data. It's hard to internalize just how many examples they've actually seen; one way to think about it is that they've absorbed nearly all of human knowledge.

The weakman version of this argument is the Stochastic Parrot claim, that LLMs are executing relatively shallow statistical inference on an extremely complex training distribution, ie that they're "a blurry JPEG of the web" (Ted Chiang). This view seems obviously false at this point (given that, for example, LLMs appear to build world models), but assuming that LLMs are fully general may be an overcorrection.

Note that this is different from the (also very interesting) question of what LLMs, or the transformer architecture, are capable of accomplishing in a single forward pass. Here we're talking about what they can do under typical auto-regressive conditions like chat.

Evidence for generality

I take this to be most people's default view, and won't spend much time making the case. GPT-4 and Claude 3 Opus seem obviously capable of general reasoning. You can find places where they hallucinate, but it's relatively hard to find cases in most people's day-to-day use where their reasoning is just wrong. But if you want to see the case made explicitly, see for example "Sparks of AGI" (from Microsoft, on GPT-4) or recent models' performance on benchmarks like MATH which are intended to judge reasoning ability.

Further, there's been a recurring pattern (eg in much of Gary Marcus's writing) of people claiming that LLMs can never do X, only to be promptly proven wrong when the next version comes out. By default we should probably be skeptical of such claims.

One other thing worth noting is that we know from 'The Expressive Power of Transformers with Chain of Thought' that the transformer architecture is capable of general reasoning under autoregressive conditions. That doesn't mean LLMs trained on next-token prediction learn general reasoning, but it means that we can't just rule it out as impossible. [EDIT 10/2024: a new paper, 'Autoregressive Large Language Models are Computationally Universal', makes this even clearer, and furthermore demonstrates that it's true of LLMs in particular].

Evidence against generality

The literature here is quite extensive, and I haven't reviewed it all. Here are three examples that I personally find most compelling. For a broader and deeper review, see "A Survey of Reasoning with Foundation Models".

Block world

All LLMs to date fail rather badly at classic problems of rearranging colored blocks. We do see improvement with scale here, but if these problems are obfuscated, performance of even the biggest LLMs drops to almost nothing[5].
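For contrast, a classical search-based planner handles small instances of this problem mechanically, and renaming the predicates (as the obfuscated versions do) would not change its behavior at all. A minimal breadth-first-search sketch in Python; the state encoding (each block maps to the block or 'table' it rests on) and the instance, the well-known Sussman anomaly, are illustrative choices, not the format used in the benchmark:

```python
from collections import deque

def moves(state):
    """Yield (action, next_state) for every legal single-block move.
    state: frozenset of (block, support) pairs; support is a block or 'table'."""
    on = dict(state)
    clear = [b for b in on if b not in on.values()]  # nothing stacked on it
    for b in clear:
        for target in ["table"] + [c for c in clear if c != b]:
            if target == on[b]:
                continue  # already there
            new = dict(on)
            new[b] = target
            yield f"move {b} onto {target}", frozenset(new.items())

def plan(start, goal):
    """Breadth-first search: returns a shortest list of moves, or None."""
    frontier = deque([start])
    parents = {start: None}
    while frontier:
        state = frontier.popleft()
        if state == goal:
            steps = []
            while parents[state] is not None:
                state, action = parents[state]
                steps.append(action)
            return steps[::-1]
        for action, nxt in moves(state):
            if nxt not in parents:
                parents[nxt] = (state, action)
                frontier.append(nxt)
    return None

# Sussman anomaly: C sits on A; goal is A on B on C.
start = frozenset({("A", "table"), ("B", "table"), ("C", "A")})
goal = frozenset({("A", "B"), ("B", "C"), ("C", "table")})
print(plan(start, goal))
```

Brute-force search like this scales badly with the number of blocks, but it never gets confused by what the blocks or actions are called.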

Scheduling

LLMs currently do badly at planning trips or scheduling meetings between people with availability constraints [a commenter points out that this paper has quite a few errors, so it should likely be treated with skepticism].

ARC-AGI

Current LLMs do quite badly on the ARC visual puzzles, which are reasonably easy for smart humans.

Will scaling solve this problem?

The evidence on this is somewhat mixed. Evidence that it will includes LLMs doing better on many of these tasks as they scale. The strongest evidence that it won't is that LLMs still fail miserably on block world problems once you obfuscate the problems (to eliminate the possibility that larger LLMs only do better because they have a larger set of examples to draw from)[5].

One argument made by Sholto Douglas and Trenton Bricken (in a discussion with Dwarkesh Patel) is that this is a simple matter of reliability: given a 5% per-step failure rate, an AI will most often fail to successfully execute a task that requires 15 correct steps. If that's the case, we have every reason to believe that further scaling will solve the problem.
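The arithmetic behind that claim, assuming steps fail independently with the same per-step probability (a simplification): the chance of completing n steps is (1 - p)^n, so at p = 0.05 and n = 15 it is about 0.46, just under a coin flip. A short Python sketch; the 1% comparison rate is an illustrative choice, not a figure from the discussion:

```python
def task_success(per_step_failure, steps):
    """Probability that all `steps` independent steps succeed."""
    return (1 - per_step_failure) ** steps

print(round(task_success(0.05, 15), 3))  # 0.463
print(round(task_success(0.01, 15), 3))  # 0.86
```

Under this model, cutting the per-step failure rate from 5% to 1% raises 15-step success from about 46% to about 86%, which is why the reliability view treats the problem as one that scaling could plausibly fix.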

Will scaffolding or tooling solve this problem?

This is another open question. It seems natural to expect that LLMs could be used as part of scaffolded systems that include other tools optimized for handling general reasoning (eg classic planners like STRIPS), or LLMs can be given access to tools (eg code sandboxes) that they can use to overcome these problems. Ryan Greenblatt's new work on getting very good results on ARC with GPT-4o + a Python interpreter provides some evidence for this.

On the other hand, a year ago many expected scaffolds like AutoGPT and BabyAGI to result in effective LLM-based agents, and many startups have been pushing in that direction; so far results have been underwhelming. Difficulty with planning and novelty seems like the most plausible explanation.

Even if tooling is sufficient to overcome this problem, outcomes depend heavily on the level of integration and performance. Currently for an LLM to make use of a tool, it has to use a substantial number of forward passes to describe the call to the tool, wait for the tool to execute, and then parse the response. If this remains true, then it puts substantial constraints on how heavily LLMs can rely on tools without being too slow to be useful[6]. If, on the other hand, such tools can be more deeply integrated, this may no longer apply. Of course, even if it's slow there are some problems where it's worth spending a large amount of time, eg novel research. But it does seem like the path ahead looks somewhat different if system II thinking remains necessarily slow & external.
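A back-of-the-envelope model makes that constraint concrete: if calls happen one after another, each one costs the tokens spent writing the call, the tool's own run time, and the time to read its output. A minimal Python sketch; the function and every number in it are hypothetical placeholders, not measurements of any real model or tool:

```python
def tool_overhead_seconds(calls, call_tokens, result_tokens,
                          gen_seconds_per_token, read_seconds_per_token,
                          tool_seconds):
    """Time spent on tool use alone, assuming calls run strictly one after
    another: generate the call, wait for the tool, then read the result."""
    per_call = (call_tokens * gen_seconds_per_token
                + tool_seconds
                + result_tokens * read_seconds_per_token)
    return calls * per_call

# Hypothetical placeholder numbers, not measurements.
overhead = tool_overhead_seconds(
    calls=200, call_tokens=50, result_tokens=300,
    gen_seconds_per_token=0.02, read_seconds_per_token=0.001,
    tool_seconds=0.5,
)
print(f"{overhead:.0f} s of tool overhead")
```

With these made-up values, 200 sequential calls add about six minutes, which matters a great deal for interactive use and much less for something like novel research, where spending a long time can be worth it.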

Why does this matter?

The main reason that this is important from a safety perspective is that it seems likely to significantly impact timelines. If LLMs are fundamentally incapable of certain kinds of reasoning, and scale won't solve this (at least in the next couple of orders of magnitude), and scaffolding doesn't adequately work around it, then we're at least one significant breakthrough away from dangerous AGI -- it's pretty hard to imagine an AI system executing a coup if it can't successfully schedule a meeting with several of its co-conspirator instances.

If, on the other hand, there is no fundamental blocker to LLMs being able to do general reasoning, then Aschenbrenner's argument becomes much more plausible: another couple of orders of magnitude could get us to the drop-in AI researcher, and once that happens, further progress seems likely to move very fast indeed.

So this is an area worth keeping a close eye on. I think that progress on the ARC prize will tell us a lot, now that there's half a million dollars motivating people to try for it. I also think the next generation of frontier LLMs will be highly informative -- it's plausible that GPT-4 is just on the edge of being able to effectively do multi-step general reasoning, and if so we should expect GPT-5 to be substantially better at it (whereas if GPT-5 doesn't show much improvement in this area, arguments like Chollet's and Kambhampati's are strengthened).

OK, but what do you think?

[EDIT: see update post for revised versions of these estimates]

I genuinely don't know! It's one of the most interesting and important open questions about the current state of AI. My best guesses are:

Further reading

Aschenbrenner also discusses 'unhobbling', which he describes as 'fixing obvious ways in which models are hobbled by default, unlocking latent capabilities and giving them tools, leading to step-changes in usefulness'. He breaks that down into categories here. I discuss scaffolding and tooling in this post; RLHF seems unlikely to help with fundamental reasoning issues. Increased context length serves roughly as a kind of scaffolding for purposes of this discussion. 'Posttraining improvements' is too vague to really evaluate. But note that his core claim (the graph here) 'shows only the scaleup in base models; “unhobblings” are not pictured'.

Discussion of the ARC prize in the AIS and adjacent communities includes James Wilken-Smith, O O, and Jacques Thibodeaux.

Section 2.4 of the excellent "Foundational Challenges in Assuring Alignment and Safety of Large Language Models" is the closest I've seen to a thorough consideration of this issue from a safety perspective. Where this post attempts to provide an introduction, "Foundational Challenges" provides a starting point for a deeper dive.

This definition is neither complete nor uncontroversial, but is sufficient to capture the range of practical uncertainty addressed below. Feel free to mentally substitute 'the sort of reasoning that would be needed to solve the problems described here.' Or see my more recent attempt at a definition.

A major problem here is that they obfuscated in ways that made the challenge unnecessarily hard for LLMs by pushing against the grain of the English language. For example, they use 'pain object' as a fact, and say that an object can 'feast' another object. Beyond that, the fully obfuscated versions would be nearly incomprehensible to a human as well; eg 'As initial conditions I have that, aqcjuuehivl8auwt object a, aqcjuuehivl8auwt object b...object b 4dmf1cmtyxgsp94g object c...'. See Appendix 1 in 'On the Planning Abilities of Large Language Models'. It would be valuable to repeat this experiment with obfuscation that is compatible with what is, after all, LLMs' deepest knowledge, namely how the English language works.
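Here's a minimal sketch contrasting the two styles of obfuscation. It is not the paper's code. The toy sentence, the vocabulary, the nonce words ('wug', 'blickish', 'dax'), and the function names are all hypothetical. Random 16-character strings, in roughly the style quoted above, erase every English cue. Nonce words that keep English morphology hide the domain while leaving grammatical roles intact.

```python
import random
import re
import string
from typing import Dict, Iterable


def random_mapping(words: Iterable[str], seed: int = 0, length: int = 16) -> Dict[str, str]:
    """Map each word to a random lowercase-alphanumeric token (deterministic per seed)."""
    rng = random.Random(seed)
    alphabet = string.ascii_lowercase + string.digits
    return {w: "".join(rng.choice(alphabet) for _ in range(length)) for w in words}


def obfuscate(text: str, mapping: Dict[str, str]) -> str:
    """Replace whole-word occurrences of each key with its mapped token."""
    # Longest keys first, so a multi-word key like "on top of" would win over "on".
    keys = sorted(mapping, key=len, reverse=True)
    pattern = re.compile(r"\b(" + "|".join(map(re.escape, keys)) + r")\b")
    return pattern.sub(lambda m: mapping[m.group(1)], text)


if __name__ == "__main__":
    problem = "block a is clear and block b is on the table"
    nonce = {"block": "wug", "clear": "blickish", "table": "dax"}
    print(obfuscate(problem, nonce))                  # English-shaped nonce words
    print(obfuscate(problem, random_mapping(nonce)))  # random strings
```

The first call yields "wug a is blickish and wug b is on the dax", which still parses as English. A repeat of the experiment along these lines would compare model accuracy on both variants of the same underlying problems.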

A useful intuition pump here might be the distinction between data stored in RAM and data swapped out to a disk cache. The same data can be retrieved in either case, but the former case is normal operation, whereas the latter case is referred to as "thrashing" and grinds the system nearly to a halt.

Assuming roughly the same ratio of compute increase between generations as between GPT-3 and GPT-4.

This isn't trivially operationalizable, because it's partly a function of how much runtime compute you're willing to throw at it. Let's say a limit of 10k calls per problem.

This isn't really operationalizable at all, I don't think. But I'd have to get pretty good odds to bet on it anyway; I'm neither willing to buy nor sell at 65%. Feel free to treat as bullshit since I'm not willing to pay the tax ;)