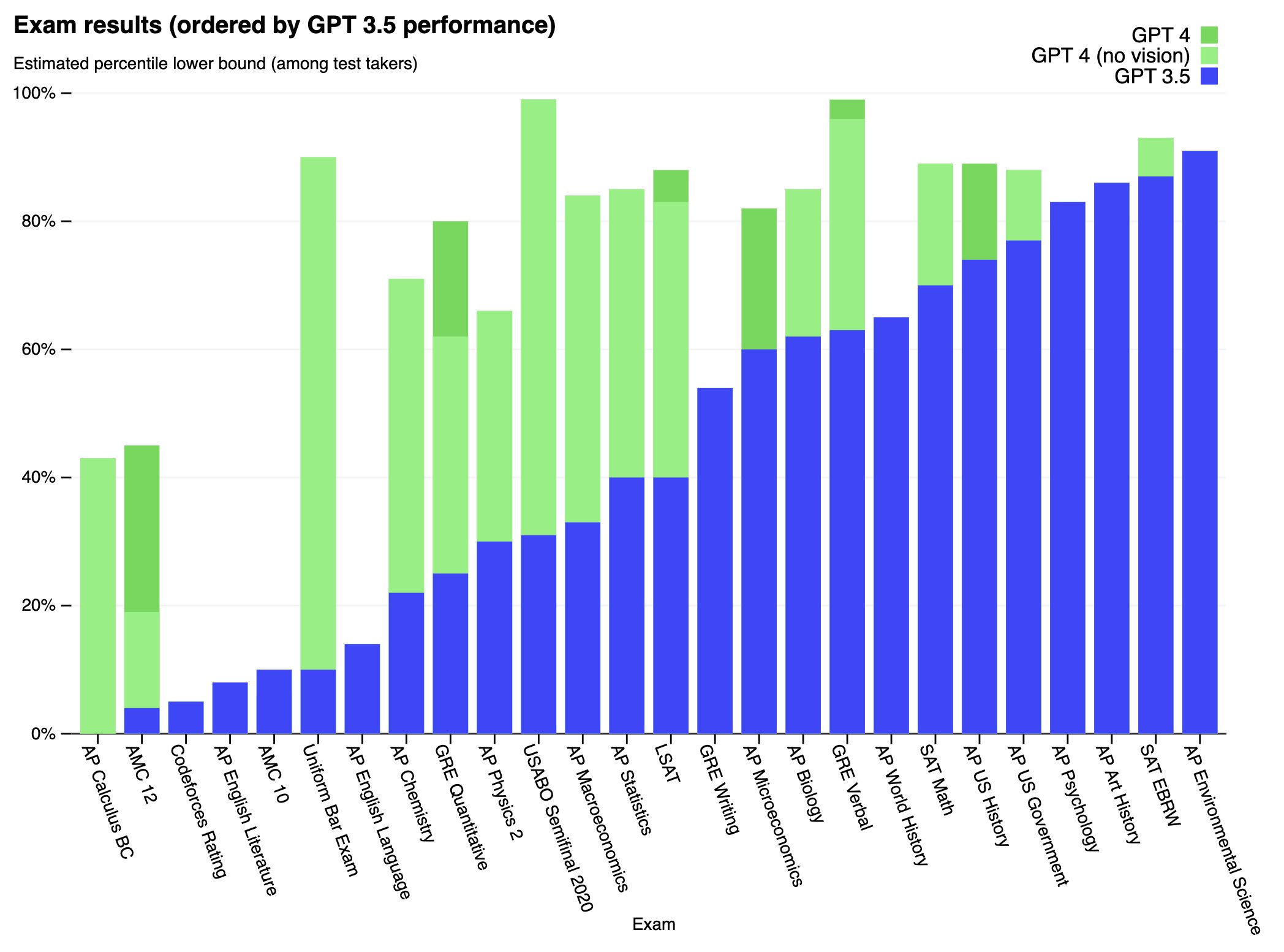

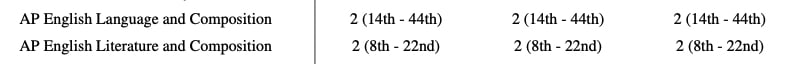

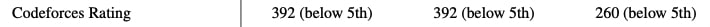

We’ve created GPT-4, the latest milestone in OpenAI’s effort in scaling up deep learning. GPT-4 is a large multimodal model (accepting image and text inputs, emitting text outputs) that, while worse than humans in many real-world scenarios, exhibits human-level performance on various professional and academic benchmarks.

Full paper available here: https://cdn.openai.com/papers/gpt-4.pdf

This is a bizarre comment. Isn’t a crucial point in these discussions that humans can’t really understand an AGI’s plans? If so, how do you expect an ARC employee to accurately determine which messages sent to TaskRabbit would actually be dangerous? We’re bordering on “they’d just shut the AI off if it was dangerous” territory here. I’m less concerned about the TaskRabbit stuff, which at minimum was probably unethical, but their self-replication experiment on a cloud service strikes me as borderline suicidal. I don’t think GPT-4 is actually dangerous at all, but GPT-6 might be, and I would expect that running this test on an actually dangerous system would be game over, so it’s a terrible precedent to set.

Imagine someone discovered a new strain of Ebola and wanted to see if it was likely to spawn a pandemic. Do you think a good/safe test would be to take it into an airport, spray it around baggage check, and wait to see if a pandemic happens? Or would it be safer to test it in a biosafety level 4 lab?