California state senator Scott Wiener, author of AI safety bills SB 1047 and SB 53, just announced that he is running for Congress! I'm very excited about this, and I wrote a blog post about why.

It’s an uncanny, weird coincidence that the two biggest legislative champions for AI safety in the entire country announced their bids for Congress just two days apart. But here we are.*

In my opinion, Scott Wiener has done really amazing work on AI safety. SB 1047 is my absolute favorite AI safety bill, and SB 53 is the best AI safety bill that has passed anywhere in the country. He's been a dedicated AI safety champion who has spent a huge amount of political capital in his efforts to make us safer from advanced AI.

On Monday, I made the case for donating to Alex Bores -- author of the New York RAISE Act -- calling it a "once in every couple of years opportunity", but flagging that I was also really excited about Scott Wiener.

I plan to have a more detailed analysis posted soon, but my bottom line is that donating to Wiener today is about 75% as good as donating to Bores was on Monday, and that this is also an excellent opportunity that will come up very rarely. (The main reason that it loo...

I think that people concerned with AI safety should consider giving to Alex Bores, who's running for Congress.

Alex Bores is the author of the RAISE Act, a piece of AI safety legislation in New York that Zvi profiled positively a few months ago. Today, Bores announced that he's running for Congress.

In my opinion, Bores is one of the best lawmakers anywhere in the country on the issue of AI safety. I wrote a post making the case for donating to his campaign.

If you feel persuaded by the post, here's a link to donate! (But if you think you might want to work in government, then read the section on career capital considerations before donating.)

Note that I expect donations in the first 24 hours to be ~20% better than donations after that, because donations in the first 24 hours will help generate positive press for the campaign. But I don't mean to rush anyone: if you don't feel equipped to assess the donation opportunity on your own terms, you should take your time!

I have something like mixed feelings about the LW homepage being themed around "If Anyone Builds it, Everyone Dies":

- On the object level, it seems good for people to pre-order and read the book.

- On the meta level, it seems like an endorsement of the book's message. I like LessWrong's niche as a neutral common space to rigorously discuss ideas (it's the best open space for doing so that I'm aware of). Endorsing a particular thesis (rather than e.g. a set of norms for discussion of ideas) feels like it goes against this neutrality.

Huh, I personally am kind of hesitant about it, but not because it might cause people to think LessWrong endorses the message. We've promoted lots of stuff at the top of the frontpage before, and in general promote lots of stuff with highly specific object-level takes. Like, whenever we curate something, or we create a spotlight for a post or sequence, we show it to lots of people, and most of the time what we promote is some opinionated object-level perspective.

I agree that if this were the only promotion of this kind we had done or would ever do, it would feel more like we are tipping the scales in some object-level discourse, but it feels very continuous with other kinds of content promotions we have done (and e.g. I am hoping that we will do a kind of similar promotion for some AI 2027 work we are collaborating on with the AI Futures Project, and also for other books that seem high-quality and are written by good authors, like if any of the other top authors on LW were releasing a book, I would be pretty happy to do similar things).

The thing that makes me saddest is that ultimately the thing we are linking and promoting is something that current readers do not have the abi...

Maybe the crux is whether the dark color significantly degrades user experience. For me it clearly does, and my guess is that's what Sam is referring to when he says "What is the LW team thinking? This promo goes far beyond anything they've done or that I expected they would do."

For me, that's why this promotion feels like a different reference class than seeing the curated posts on the top or seeing ads on the SSC sidebar.

like, within 24 hours there should be a button that just gives you back whatever normal color scheme you previously had on the frontpage).

@David Matolcsi There is now a button in the top right corner of the frontpage you can click to disable the whole banner!

The thing that makes me saddest is that ultimately the thing we are linking and promoting is something that current readers do not have the ability to actually evaluate on their own

This has been nagging at me throughout the promotion of the book. I've preordered for myself and two other people, but only with caveats about how I haven't read the book. I don't feel comfortable doing more promotion without reading it[1] and it feels kind of bad that I'm being asked to.

[1] I talked to Rob Bensinger about this, and I might be able to get a preview copy if it were a crux for a grand promotional plan, but not for more mild promotion.

Some things we promoted in the right column:

LessOnline (also, see the spotlights at the top for random curated posts):

LessOnline again:

LessWrong review vote:

Best of LessWrong results:

Best of LessWrong results (again):

The LessWrong books:

The HPMOR wrap parties:

Our fundraiser:

ACX Meetups everywhere:

We also either deployed for a bit, or almost deployed, a PR where individual posts that we have spotlights for (which is just a different kind of long-term curation) get shown as big banners on the right. I can't currently find a screenshot of it, but it looked pretty similar to all the banners you see above for all the other stuff, just promoting individual posts.

To be clear, the current frontpage promotion is a bunch more intense than this!

Mostly this is because Ray/I had a cool UI design idea that we could only make work in dark mode, and so we by default inverted the color scheme for the frontpage, and also just because I got better as a designer and I don't think I could have pulled off the current design a year ago. If I could do something as intricate/high-effort as this all year round for great content I want to promote, I...

Yeah, all of these feel pretty different to me than promoting IABIED.

A bunch of them are about events or content that many LW users will be interested in just by virtue of being LW users (e.g. the review, fundraiser, BoLW results, and LessOnline). I feel similarly about the highlighting of content posted to LW, especially given that that's a central thing that a forum should do. I think the HPMOR wrap parties and ACX meetups feel slightly worse to me, but not too bad given that they're just advertising meet-ups.

Why promoting IABIED feels pretty bad to me:

- It's a commercial product—this feels to me like typical advertising that cheapens LW's brand. (Even though I think it's very unlikely that Eliezer and Nate paid you to run the frontpage promo or that your motivation was to make them money.)

- The book has a very clear thesis that it seems like you're endorsing as "the official LW position." Advertising e.g. HPMOR would also feel weird to me, but substantially less so, since HPMOR is about rationality more generally and overlaps strongly with the sequences, which are centrally LW content. In other words, it feels like you're implicitly declaring "P(doom) is high" to be a core tenet of LW discourse in the same way that e.g. truth-seeking is.

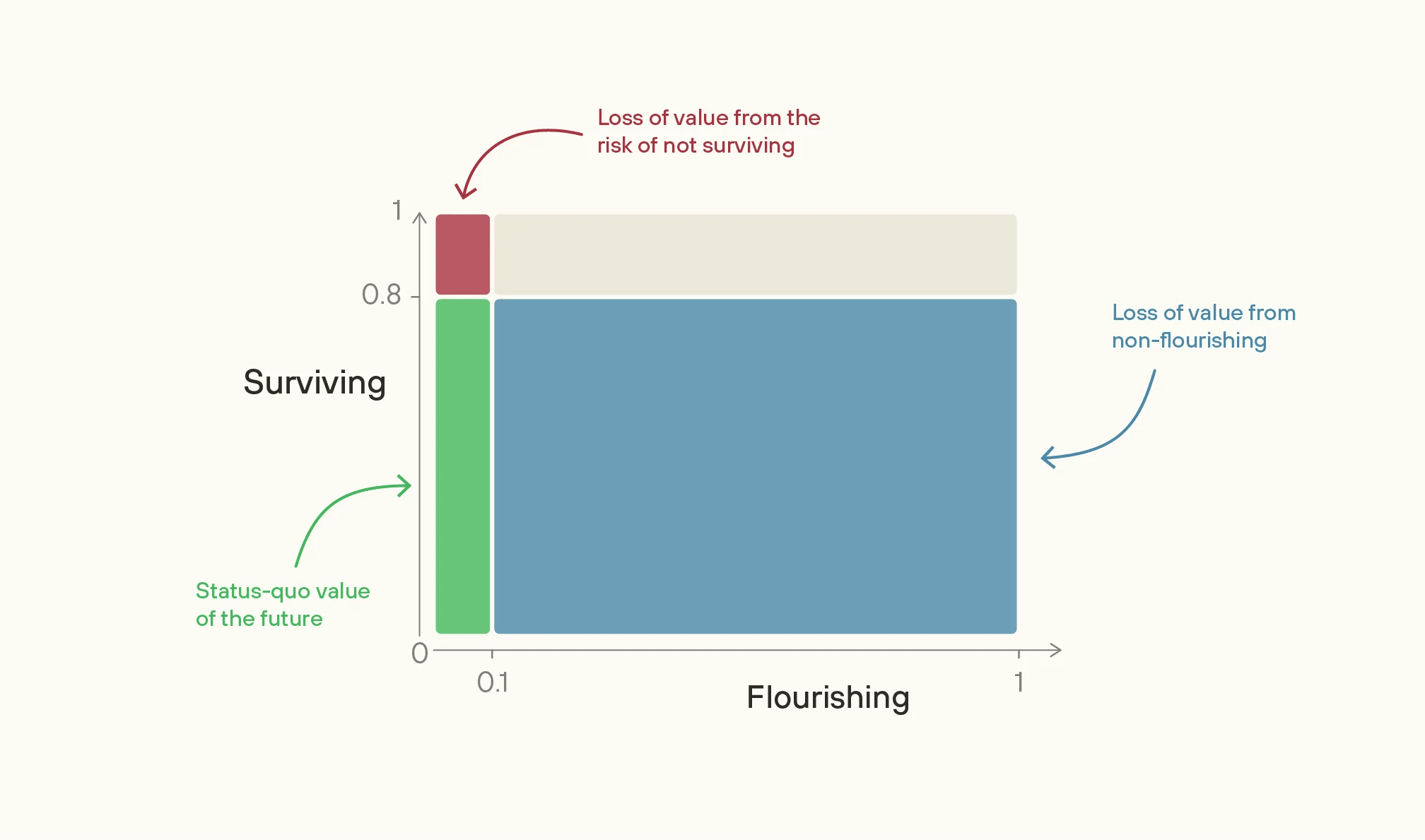

People are underrating making the future go well conditioned on no AI takeover.

This deserves a full post, but for now a quick take: in my opinion, P(no AI takeover) = 75%, P(future goes extremely well | no AI takeover) = 20%, and most of the value of the future is in worlds where it goes extremely well (and comparatively little value comes from locking in a world that's good-but-not-great).

Under this view, an intervention is good insofar as it affects P(no AI takeover) * P(things go really well | no AI takeover). Suppose that a given intervention can change P(no AI takeover) and/or P(future goes extremely well | no AI takeover). Then the overall effect of the intervention is proportional to ΔP(no AI takeover) * P(things go really well | no AI takeover) + P(no AI takeover) * ΔP(things go really well | no AI takeover).

Plugging in my numbers, this gives us 0.2 * ΔP(no AI takeover) + 0.75 * ΔP(things go really well | no AI takeover).

And yet, I think that very little AI safety work focuses on affecting P(things go really well | no AI takeover). Probably Forethought is doing the best work in this space.

(And I don't think it's a tractability issue: I think affecting P(things go really well | no AI takeover) is pretty tractable!)

(Of course, if you think P(AI takeover) is 90%, that would probably be a crux.)
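The weighting above can be checked numerically. Here is a minimal sketch, assuming the value of an intervention is proportional to the product P(no AI takeover) * P(future goes extremely well | no AI takeover); the function names and the one-percentage-point shifts are illustrative assumptions, not part of the original take.

```python
def first_order_effect(p, q, d_p, d_q):
    """First-order change in p * q when p shifts by d_p and q by d_q.

    p: P(no AI takeover)
    q: P(future goes extremely well | no AI takeover)
    """
    return q * d_p + p * d_q


def exact_effect(p, q, d_p, d_q):
    """Exact change in p * q; differs from the first-order term by d_p * d_q."""
    return (p + d_p) * (q + d_q) - p * q


def relative_weight(p, q):
    """How many times more a unit of d_q counts than a unit of d_p."""
    return p / q


# Numbers from the take: p = 0.75, q = 0.20.
# The one-point shifts below are hypothetical, chosen only for illustration.
approx = first_order_effect(0.75, 0.20, 0.01, 0.01)  # about 0.0095
exact = exact_effect(0.75, 0.20, 0.01, 0.01)         # about 0.0096

# With these numbers, a point of d_q counts about 3.75 times as much as a point of d_p.
weight_here = relative_weight(0.75, 0.20)

# If instead P(AI takeover) = 90%, so p = 0.10, the ordering flips (ratio about 0.5).
weight_pessimist = relative_weight(0.10, 0.20)
```

The ratio p / q is what drives the argument: the more likely you think it is that takeover is avoided, and the less likely you think a great outcome is by default, the more weight shifts toward improving the conditional probability.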

I guess that influencing P(future goes extremely well | no AI takeover) may be pretty hard, and plagued by cluelessness problems. Avoiding AI takeover is a goal that I have at least some confidence is good.

That said, I do wish more people were thinking about how to make the future go well. I think my favorite thing to aim for is increasing the probability that we do a Long Reflection, although I haven't really thought at all about how to do that.

You can also work on things that help with both:

- AI pause/stop/slowdown - Gives more time to research both issues and to improve human intelligence/rationality/philosophy which in turn helps with both.

- Metaphilosophy and AI philosophical competence - Higher philosophical competence means AIs can help more with alignment research (otherwise such research will be bottlenecked by reliance on humans to solve the philosophical parts of alignment), and also help humans avoid making catastrophic mistakes with their newfound AI-given powers if no takeover happens.

If I were primarily working on this, I would develop high-quality behavioral evaluations for positive traits/virtuous AI behavior.

This benchmark for empathy is an example of the genre I'm talking about. In it, in the course of completing a task, the AI encounters an opportunity to costlessly help someone else that's having a rough time; the benchmark measures whether the AI diverts from its task to help out. I think this is a really cool idea for a benchmark (though a better version of it would involve more realistic and complex scenarios).

When people say that Claude Opus 3 was the "most aligned" model ever, I think they're typically thinking of an abundance of Opus 3's positive traits, rather than the absence of negative traits. But we don't currently have great evaluations for this sort of virtuous behavior, even though I don't think it's especially conceptually fraught to develop them. I think a moderately thoughtful junior researcher could probably spend 6 months cranking out a large number of high-quality evals and substantially improve the state of things here.

This would require a longer post, but roughly speaking, I'd want the people making the most important decisions about how advanced AI is used once it's built to be smart, sane, and selfless. (Huh, that was some convenient alliteration.)

- Smart: you need to be able to make really important judgment calls quickly. There will be a bunch of actors lobbying for all sorts of things, and you need to be smart enough to figure out what's most important.

- Sane: smart is not enough. For example, I wouldn't trust Elon Musk with these decisions, because I think that he'd make rash decisions even though he's smart, and even if he had humanity's best interests at heart.

- Selfless: even a smart and sane actor could curtail the future if they were selfish and opted to e.g. become world dictator.

And so I'm pretty keen on interventions that make it more likely that smart, sane, and selfless people are in a position to make the most important decisions. This includes things like:

- Doing research to figure out the best way to govern advanced AI once it's developed, and then disseminating those ideas.

- Helping to positively shape internal governance at the big AI companies (I don't have concrete suggestions in th

Nancy Pelosi is retiring; consider donating to Scott Wiener.

[Link to donate; or consider a bank transfer option to avoid fees, see below.]

Nancy Pelosi has just announced that she is retiring. Previously I wrote up a case for donating to Scott Wiener, an AI safety champion in the California legislature who is running for her seat, in which I estimated a 60% chance that Pelosi would retire. While I recommended donating on the day that he announced his campaign launch, I noted that donations would look much better ex post in worlds where Pelosi retires, and that my recommendation to donate on launch day was sensitive to my assessment of the probability that she would retire.

I know some people who read my post and decided (quite reasonably) to wait to see whether Pelosi retired. If that was you, consider donating today!

How to donate

You can donate through ActBlue here (please use this link rather than going directly to his website, because the URL lets his team know that these are donations from people who care about AI safety).

Note that ActBlue charges a 4% fee. I think that's not a huge deal; however, if you want to make a large contribution and are already comfortable making bank tra...

I think that people who work on AI alignment (including me) have generally not put enough thought into the question of whether a world where we build an aligned AI is better by their values than a world where we build an unaligned AI. I'd be interested in hearing people's answers to this question. Or, if you want more specific questions:

- By your values, do you think a misaligned AI creates a world that "rounds to zero", or still has substantial positive value?

- A common story for why aligned AI goes well goes something like: "If we (i.e. humanity) align AI, we can and will use it to figure out what we should use it for, and then we will use it in that way." To what extent is aligned AI going well contingent on something like this happening, and how likely do you think it is to happen? Why?

- To what extent is your belief that aligned AI would go well contingent on some sort of assumption like: my idealized values are the same as the idealized values of the people or coalition who will control the aligned AI?

- Do you care about AI welfare? Does your answer depend on whether the AI is aligned? If we built an aligned AI, how likely is it that we will create a world that treats AI welfare

By your values, do you think a misaligned AI creates a world that "rounds to zero", or still has substantial positive value?

I think misaligned AI is probably somewhat worse than no Earth-originating spacefaring civilization because of the potential for aliens, but also that misaligned AI control is considerably better than no one ever heavily utilizing intergalactic resources.

Perhaps half of the value of misaligned AI control is from acausal trade and half from the AI itself being valuable.

You might be interested in When is unaligned AI morally valuable? by Paul.

One key consideration here is that the relevant comparison is:

- Human control (or successors picked by human control)

- AI(s) that succeed at acquiring most power (presumably seriously misaligned with their creators)

Conditioning on the AI succeeding at acquiring power changes my views of what their plausible values are (for instance, humans seem to have failed at instilling preferences/values which avoid seizing control).

...A common story for why aligned AI goes well goes something like: "If we (i.e. humanity) align AI, we can and will use it to figure out what we should use it for, and then we will use it in that way.

Perhaps half of the value of misaligned AI control is from acausal trade and half from the AI itself being valuable.

Why do you think these values are positive? I've been pointing out, and I see that Daniel Kokotajlo also pointed out in 2018, that these values could well be negative. I'm very uncertain, but my own best guess is that the expected value of misaligned AI controlling the universe is negative, in part because I put some weight on suffering-focused ethics.

I frequently find myself in the following situation:

Friend: I'm confused about X

Me: Well, I'm not confused about X, but I bet it's because you have more information than me, and if I knew what you knew then I would be confused.

(E.g. my friend who knows more chemistry than me might say "I'm confused about how soap works", and while I have an explanation for why soap works, their confusion is at a deeper level, where if I gave them my explanation of how soap works, it wouldn't actually clarify their confusion.)

This is different from the "usual" state of affairs, where you're not confused but you know more than the other person.

I would love to have a succinct word or phrase for this kind of being not-confused!

What are some examples of people making a prediction of the form "Although X happening seems like obviously a bad thing, in fact the good second-order effects would outweigh the bad first-order effects, so X is good actually", and then turning out to be correct?

(Loosely inspired by this quick take, although I definitely don't mean to imply that the author is making such a prediction in this case.)

Many economic arguments take this form and are pretty solid, e.g. “although lowering the minimum wage would cause many to get paid less, in the longer term more would be willing to hire, so there will be more jobs, and less risk of automation to those currently with jobs. Also, services would get cheaper, which benefits everyone”.

I think it’s useful to think about the causation here.

Is it:

Intervention -> Obvious bad effect -> Good effect

For example: Terrible economic policies -> Economy crashes -> AI capability progress slows

Or is it:

Obvious bad effect <- Intervention -> Good effect

For example: Patient survivably poisoned <- Chemotherapy -> Cancer gets poisoned to death

lots of food and body things that are easily verifiable, quick, and robust. take med, get headache, not die. take poison, kill cancer, not die. stop eating good food, blood sugar regulation better, more coherent. cut open body, move some stuff around, knit it together, tada healthier.

all of these are extremely specific, if you do them wrong you get bad effect. take wrong med, get headache, still die. take wrong poison, die immediately. stop eating good food but still eat crash inducing food, unhappy and not more coherent. cut open body randomly, die quickly.

Pacts against coordinating meanness.

I just re-read Scott Alexander's Be Nice, At Least Until You Can Coordinate Meanness, in which he argues that a necessary (but not sufficient) condition for restricting people's freedom is that you first get societal consensus that restricting freedom in that way is desirable (e.g. by passing a law via the appropriate mechanisms).

In a sufficiently polarized society, there could be two similarly-sized camps that each want to restrict each other's freedom. Imagine a country that's equally divided between Chris...

Yeah I've argued that banning lab meat is completely rational for the meat-eater because if progress continues then animal meat will probably be banned before the quality/price of lab meat is superior for everyone.

I think the "commitment" you're describing is similar to the difference between "ordinary" and "constitutional" policy-making in e.g. The Calculus of Consent; under that model, people make the kind of non-aggression pacts you're describing mainly under conditions of uncertainty where they're not sure what their future interests or position of political advantage will be.

It's often very hard to make commitments like this, so I think that most of the relevant literature might be about how you can't do this. E.g. a Thucydides trap is when a stronger power launches a preventative war against a weaker rising power; one particular reason for this is that the weaker power can't commit to not abusing its power in the future. See also security dilemma.

People like to talk about decoupling vs. contextualizing norms. To summarize, decoupling norms encourage assessing arguments in isolation from their surrounding context, while contextualizing norms consider the context around an argument to be really important.

I think it's worth distinguishing between two kinds of contextualizing:

(1) If someone says X, updating on the fact that they are the sort of person who would say X. (E.g. if most people who say X in fact believe Y, contextualizing norms are fine with assuming that your interlocutor believes Y unless...