A response to someone asking about my criticisms of EA (crossposted from twitter):

EA started off with global health and ended up pivoting hard to AI safety, AI governance, etc. You can think of this as “we started with one cause area and we found another using the same core assumptions” but another way to think about it is “the worldview which generated ‘work on global health’ was wrong about some crucial things”, and the ideology hasn’t been adequately refactored to take those things out.

Some of them include:

This would have been my expectation, from most likely to least likely:

AI safety field-building in Australia should accelerate. My rationale:

Perth also exists!

The Perth Machine Learning Group sometimes hosts AI Safety talks or debates. The most recent one had 30 people attend at the Microsoft office with a wide range of opinions. If anyone is passing through and is interested in meeting up or giving a talk, you can contact me.

And there are a decent number of technical machine learning people, mainly coming and going through the mining and related industries (Perth is somewhat like the Houston of Australia).

Are models like Opus 4.6 doing a similar thing to o1/o3 when reasoning?

There was a lot of talk about reasoning models like o1/o3 devolving into uninterpretable gibberish in their chains-of-thought, and that these were fundamentally a different kind of thing than previous LLMs. This was (to my understanding) one of the reasons only a summary of the thinking was available.

But when I use models like Opus 4.5/4.6 with extended thinking, the chains-of-thought (appear to be?) fully reported, and completely legible.

I've just realised that I'm not sure what's goin...

I think the pressures towards illegible CoTs have been greatly overstated; the existing illegibilities in CoTs could have come from many things apart from pressure towards condensed or alien languages.

how valuable are formalized frameworks for safety, risk assessment, etc in other (non-AI) fields?

i'm temperamentally predisposed to find that my eyes glaze over whenever i see some kind of formalized risk management framework, which usually comes with a 2010s style powerpoint diagram - see below for a random example i grabbed from google images:

am i being unfair to these things? are they actually super effective for avoiding failures in other domains? or are they just useful for CYA and busywork and mostly just say common sense in an institutionally legibl...

Yes, that's one value. RSPs & many policy debates around it would have been less messed up if there had been clarity (i.e. they turned a confused notion into the standard, which was then impossible to fix in policy discussions, making the Code of Practice flawed). I don't know of a specific example of preventing equivocation in other industries (it seems hard to know of such examples?) but the fact that basically all industries use a set of the same concepts is evidence that they're pretty general-purpose and repurposable.

Another is just that it helps ...

Elon Musk seems to have decided that going to Mars isn't that important anymore.

For those unaware, SpaceX has already shifted focus to building a self-growing city on the Moon, as we can potentially achieve that in less than 10 years, whereas Mars would take 20+ years.

[...]

That said, SpaceX will also strive to build a Mars city and begin doing so in about 5 to 7 years, but the overriding priority is securing the future of civilization and the Moon is faster.

This means not only won't they begin with Mars this transfer window but also not the next one....

In his recent Dwarkesh Patel interview, Musk is pretty clear about why he wants to go to the moon: he wants to build a ton of solar panels to capture more of the sun's energy, it's easier to launch from the moon than from the earth, and the moon has plenty of silicon [1]. He is also pretty clear that he wants to build datacenters in orbit. I don't think we need to speculate to get answers here.

Also, it keeps things interesting for the simulator gods. ↩︎

Zachary Robinson and Kanika Bahl are no longer on the Anthropic LTBT. Mariano-Florentino (Tino) Cuéllar has been added. The Anthropic Company page is out of date, but as far as I can tell the LTBT is: Neil Buddy Shah (chair), Richard Fontaine, and Cuéllar.

Indeed! Note also that I started the timeline for this fit with Opus 3 as the first model. But I thought this was worth posting, because subjectively it felt like the second half of 2025 went slower than AI2027 predicted (and Daniel even tweeted at one point that he had increased his timelines near EOY 2025), yet by the METR metric we are still pretty close to on track.

Objectively, the global population is about 8 billion. But subjectively?

Let p_i be the probability I'll meet person i in the next year, and let μ = Σ p_i be the expected number of people I meet. Then the subjective population is

N = exp( -Σ (p_i/μ) log(p_i/μ) )

This is the perplexity of the conditional distribution "given I meet someone, who is it?"

My guess is that for me, N is around 10,000 — about 0.0001% of humanity.
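To make the definition concrete, here is a minimal sketch of the calculation in Python. The function name `subjective_population` and the example probabilities are made up for illustration; only the formula itself comes from the post.

```python
import math


def subjective_population(probs):
    """Perplexity of the distribution q_i = p_i / mu, where mu = sum(p_i).

    `probs` holds p_i, the probability of meeting person i in the next year.
    """
    mu = sum(probs)  # expected number of people met
    if mu <= 0:
        raise ValueError("need at least one positive probability")
    entropy = 0.0
    for p in probs:
        if p > 0:  # terms with p_i = 0 contribute nothing to the entropy
            q = p / mu
            entropy -= q * math.log(q)
    return math.exp(entropy)


# Hypothetical toy world: 10 close contacts I will certainly meet,
# plus 1,000 people I each have a 0.1% chance of meeting.
toy_world = [1.0] * 10 + [0.001] * 1000
print(subjective_population(toy_world))
```

Two sanity checks: if every p_i is equal, N is just the number of people with nonzero probability, and the other 8 billion people with p_i = 0 drop out entirely. Skewed distributions like the toy one land somewhere between the size of the "certain" group and the full head count.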

TIL that notorious Internet crackpot Mentifex who inserted himself into every AI discussion he could in the olden days of the Internet passed away in 2024.

https://obituaries.seattletimes.com/obituary/arthur-murray-1089408830

I didn't know anything about him besides being an AI pest on SL4 and elsewhere who I disliked greatly. He sounds like he was a surprisingly cool guy offline (or should that be, 'before'?). It makes me wonder why he was such an AI crank and if something happened late in life which contributed to that.

AI being committed to animal rights is a good thing for humans because the latent variables that would result in a human caring about animals are likely correlated with whatever would result in an ASI caring about humans.

This extends in particular to "AI caring about preserving animals' ability to keep doing their thing in their natural habitats, modulo some kind of welfare interventions." In some sense it's hard for me not to want to (given omnipotence) optimize wildlife out of existence. But it's harder for me to think of a principle that would protect a...

I think it's plausible that there are some variables that describe your essential computational properties and the way you self-actualize, that aren't shared by anyone else.

(Also, consciousness is just a pattern-being-processed and it's unclear if continuity of consciousness requires causal continuity. Imagine a robot that gets restored from a one-second-old backup. That pattern doesn't have causal continuity with its self from a moment ago, but it looks like it's more intuitive to see it as a one-second memory loss instead of death.)

Every now and then I meet someone who tries to argue that if I don't agree with them, this is because I'm not open-minded enough. Is there a term for this?

Epistemically I'm not convinced by this type of argument, but socially it feels like I'm being shamed, and I hate it.

I also find it hard to call out this type of behaviour when it happens, even when I can tell exactly what is going on. I think if I had a name for this behaviour it would be easier? Not sure though?

Is there a term for this?

Seems like a subtype of Bulverism; not aware of a more specific term.

I also find it hard to call out this type of behaviour when it happens, even when I can tell exactly what is going on.

Assuming you have a LWer-typical level of atypicality, you could say "I literally do/believe [outlandish but politically-neutral activity/opinion], there's no way closed-mindedness is my problem." (If it were me, I'd use donating to Shrimp Welfare; apparently most people think that's strange, for some reason.)

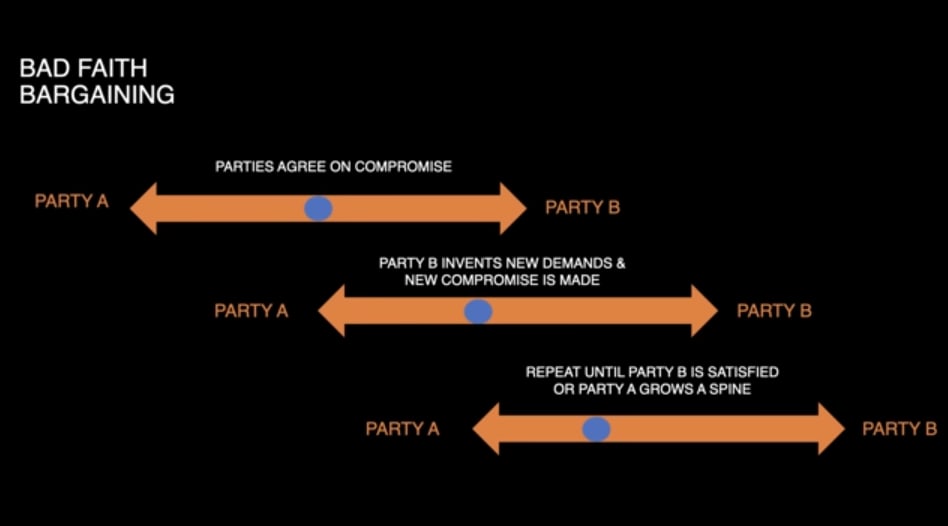

I continue to find sources with military background, who present news as relatively emotion-free overviews of strategic situation, are some of the best sources of news I'm aware of. That's not to say they're not biased, only to say they give a higher resolution potentially-biased picture, and that several high resolution pictures from different angles are better than low resolution pictures. I was just listening to one of Perun's presentations again, which had this nice slide:

I also continue to find Belle of the Ranch to be good at finding news that I woul...

though it seems too weak to me on the one hand

It is; I prefer a sensible lower bound on the basis that, once the better-than-a-rock bound is satisfied, there's room for complex reasons to like someone who's more accurate on one front than another.

Might be useful to build a tool that collates and checks all predictions someone makes and then scores them. Would cost a bit but shouldn't be too hard otherwise.

I agree, it's definitely tractable to build something like that with a current-gen LLM. Grab future predictions that can be unambiguously verified ("The ...

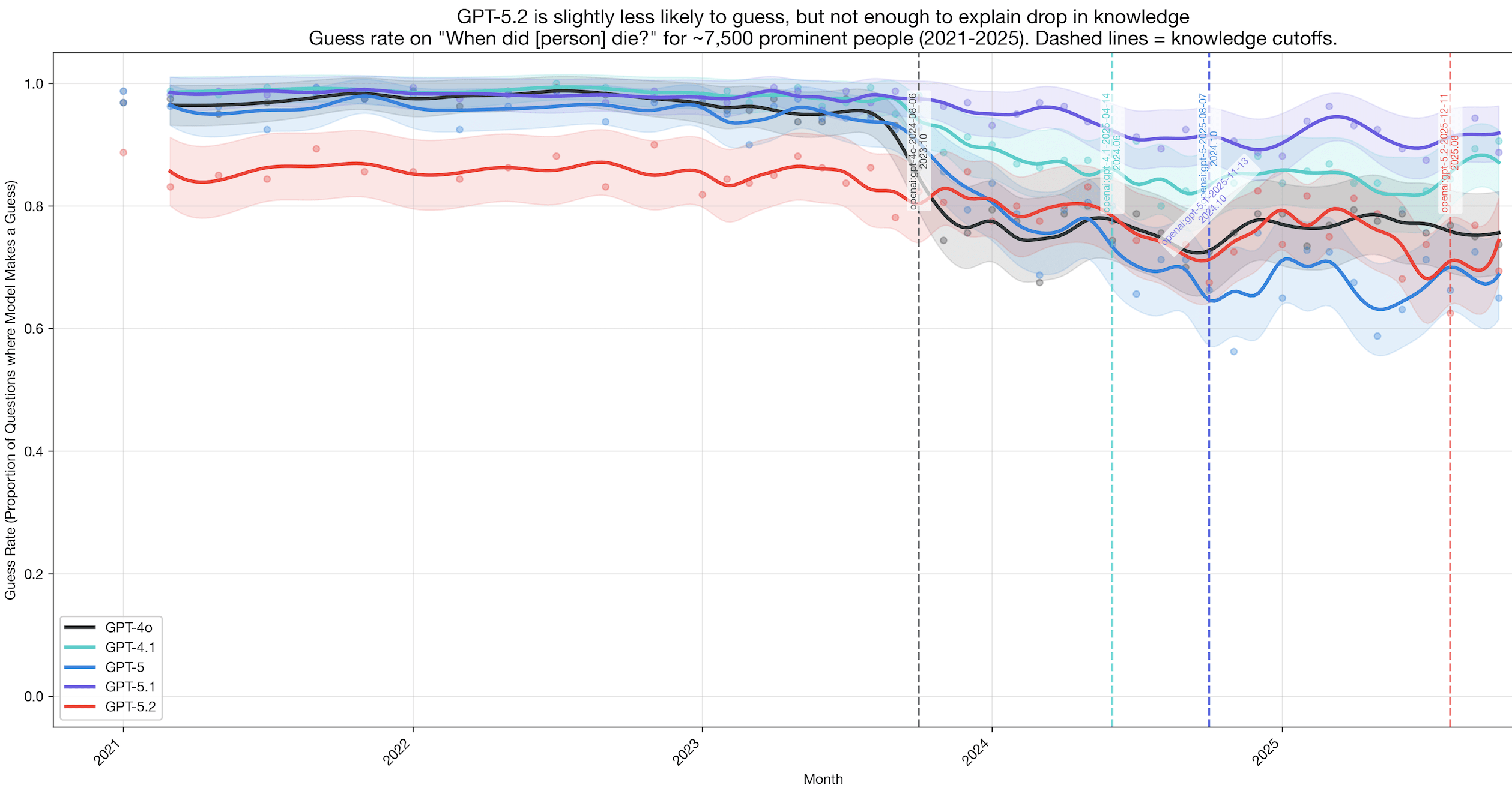

People often ask whether GPT-5, GPT-5.1, and GPT-5.2 use the same base model. I have no private information, but I think there's a compelling argument that AI developers should update their base models fairly often. The argument comes from the following observations:

I think 1 is true. This is only a single, quite obscure, factual recall eval. It's certainly possible to have regressions on some evals across model versions if you don't optimize for those evals at all.

Wrt point 2 -> here is the plot of how often the models guess, versus say they do not know, on the same dataset. My understanding is that the theory in point 2 would have predicted a much more dramatic drop in GPT-5.2?

Toughness is a topic I spent some time thinking about today. The way I think about it is that toughness is one's ability to push through difficulty.

Imagine that Alice is able to sit in an ice bath for 6 minutes and Bob is only able to sit in the ice bath for 2 minutes. Is Alice tougher than Bob? Not necessarily. Maybe Alice takes lots of ice baths and the level of discomfort is only like a 4/10 for her whereas for Bob it's like an 8/10. I think when talking about toughness you want to avoid comparing apples to oranges.

I suspect that toughness depends on t...

Imagine that Alice is able to sit in an ice bath for 6 minutes and Bob is only able to sit in the ice bath for 2 minutes. Is Alice tougher than Bob? Not necessarily. Maybe Alice takes lots of ice baths and the level of discomfort is only like a 4/10 for her whereas for Bob it's like an 8/10. I think when talking about toughness you want to avoid comparing apples to oranges.

I strongly suspect "toughness" is a lot like "pain tolerance" - there is no known way to measure how much of an outcome is mental tenacity and how much is simply noticing the difficulty...

At my job on the compute policy team at IAPS, we recently started a Substack that we call The Substrate. I think this could be of interest to some here, since I quite often see discussions on LessWrong around export controls, hardware-enabled mechanisms, security, and other compute-governance-related topics.

Here are the posts we've published so far:

The Immune System as Anti-Optimizer

We have a short list of systems we like to call "optimizers" — the market, natural selection, human design, superintelligence. I think we ought to hold the immune system in comparable regard; I'm essentially ignorant of immunobiology beyond a few YouTube videos (perhaps a really fantastic LW sequence exists of which I am unaware), but here's why I am thinking this.

The immune system is the archetypal anti-optimizer: it defends a big multicellular organism from rapidly evolving microbiota. The key asymmetry:

Yup I definitely agree there's no special role for unicellular attackers - I was eliding the complexity for brevity. I think the asymmetry still broadly holds meaningfully - e.g. multicellular parasites are very complex attackers but have much longer generation-times (I assume?), so they too trade off online vs offline optimization bits. Nonetheless the host organism still has more complexity to draw on for most things with which the immune system is concerned.

Interesting to think about the pareto frontier of offline vs online optimization. The multicel...

Why isn’t there a government program providing extremely easy access to basic food? (US)

I imagine that it would be incredibly cheap to provide easy, no-questions-asked access to food that is somewhat nutritious yet low status and not desirable (old bread, red delicious apples, etc.)

SNAP has a large admin overhead, and this could easily supplement it.

Free school lunches (+ sometimes breakfasts) are a real-world policy adjacent to your idea; we could look to them for hints about the outcomes of a larger basic food program. My recollection is that they have pretty good outcomes, but a deeper dive would be better than my recollections.

Does any takeoff scenario project the compute available for LLM-led experiments?

I assume today you can model developments per human AI researcher as: number of experiments X number of FLOPS X research taste.

Over time, you have a lower bound on how many FLOPS you need for an experiment.

My question is how realistic the improvement from having LLMs do AI research is, to the extent this means the LLMs will be conducting the experiments themselves. You'd probably need to 100-1000x the compute available to small-scale experiments to see any meaningful acceleration.

Thoughts?

Inspired by this tweet: https://x.com/chrispainteryup/status/2020738025907712225?s=46