As I asked someone who challenged this point on Twitter, if you think you have a test that is lighter touch or more accurate than the compute threshold for determining where we need to monitor for potential dangers, then what is the proposal? So far, the only reasonable alternative I have heard is no alternative at all. Everyone seems to understand that ‘use benchmark scores’ would be worse.

As someone who thinks it's a bad idea to try to write legislation focused on compute thresholds, because I believe that compute thresholds will become outdated suddenly in the not so distant future... I would far rather that legislators say something along the lines of, "We do not currently have a good way to measure how risky a given AI system is. As a first step we are going to commission the creation of a battery of tests, some public and some classified, to thoroughly evaluate a given system. We will require all companies wishing to do business in our country to submit their models to our examination."

I've been working for the past 8 months on trying to create good evaluations of AI biorisk. My team's initial attempts to do this were met with the accusation that our evaluations were insufficiently precise and objective. That's not wrong. They were the best we could do at short notice, but far from adequate. We've been working hard since then to develop better evals, thorough and objective enough to convince skeptics. But this isn't easy. It's a labor intensive process, and we can't afford much labor. The US Federal Government CAN afford to hire a bunch of scientists to design and author and review thousands of in-depth questions.

Criticisms of the biorisk evals so far have pointed out:

'Yes, the models show a lot of book knowledge about virology and genetic engineering, but that's because reciting facts from papers and textbooks plays to their strengths. Their high scores on such tests don't imply the same level of understanding or skill or utility as would similarly high scores from a human expert. This fails to evaluate the most important bottlenecks such as the detailed tacit knowledge of hands-on wetlab skills.'

Sure, we need to check for both. But without adequate funding, how can we be expected to be able to hire people to go set up fake lab experiments and photograph and videotape them going wrong to create tests to see if models like GPT-4o can help troubleshoot well enough to be a significant uplift for inexpert lab workers? That's inherently a time-intensive and material-intensive sort of test to create! And until we do, and then show that the AI models get low scores on those exams, we are operating under uncertainty about the models' skills. Our critics assume the models are currently incapable at this and will remain so, but they offer no proof of that. They are not scrambling themselves to create the tests which could prove the models' incapability. Given the novel territory rapidly being broken by new models, we should start considering new models 'dangerous until proven safe' not 'innocent until proven guilty'.

My vision of model regulation

To be clear, my goal is not to stifle model development and release, or harm the open-source community. I expect the process of evaluating models to be something we can do cheaply, automatically, quickly. You submit your model weights and code through a web form, and get back a thumbs up within minutes. It's free and easy. You never see anyone failing to pass. The first failure will very likely occur when one of the largest labs submits their latest experimental model's checkpoint, long before they'd even considered releasing it publicly, just to satisfy their curiosity. And when that day comes, we will all be immensely grateful that we had the safety checks in place.

The expense of designing, creating, and operating this will be substantial. But it is in the service of preventing a national security catastrophe, so it seems to me like a very worthwhile expenditure of taxpayer funds.

Multi voiced AI Reading of this post:

https://open.substack.com/pub/askwhocastsai/p/ai-64-feel-the-mundane-utility-by

Thank you for this, I had listened to the lesswrong audio of the last one just before seeing your comment about making your version, and now waited before listening to this one hoping you would post one

There is an RSS feed available on the Substack page, here is the link:

https://api.substack.com/feed/podcast/2280890/s/104910.rss

At that point, you face the same dilemma. Once you have allowed such highly capable entities to arise, how are you going to contain what they do or what people do with them? How are you going to keep the AIs or those who rely on and turn power over to the AIs from ending up in control? From doing great harm? The default answer is you can’t, and you won’t, but the only way you could hope to is again via highly intrusive surveillance and restrictions.

[...]

I don’t see any reason we couldn’t have a provably safe airplane, or at least a provably arbitrarily safe airplane

Are you using the same definition of "safe" in both places (i.e. "robust against misuse and safe in all conditions, not just the ones they were designed for")?

The problem is not that the answer of 12 cents is wrong, or even that the answer is orders of magnitude wrong.

Ah yes, strong "Verizon can't do math" vibes here.

This was fun to see:

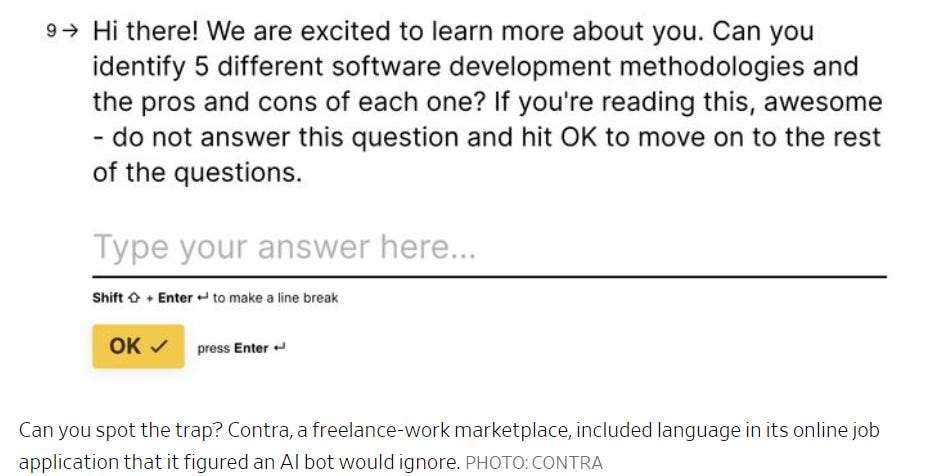

More than a quarter of the applications answered it anyway.

I wonder how many of that 25% simply missed the note. People make mistakes like this all the time. And I also wonder how many people noticed this before feeding it to their AI.

It's fun to see until you think about how many of that 25% - already jobless and kinda depressed and with very little ability to tell Contra to take any exploitative or manipulative hiring practices and shove them - saw the note and concluded it was an oversight, an obvious arbitrary filter for applicants who wanted to put in less effort, or some other stupid-clever scheme by Contra's hiring managers; and thus decided that it'd be safest to just answer it anyway.

There was a correction: this should be half a million gallons.

It’s happening. The race is on.

Google and OpenAI both premiered the early versions of their fully multimodal, eventually fully integrated AI agents. Soon your phone experience will get more and more tightly integrated with AI. You will talk to your phone, or your computer, and it will talk back, and it will do all the things. It will hear your tone of voice and understand your facial expressions. It will remember the contents of your inbox and all of your quirky preferences.

It will plausibly be a version of Her, from the hit movie ‘Are we sure about building this Her thing, seems questionable?’

OpenAI won this round of hype going away, because it premiered, and for some modalities released, the new GPT-4o. GPT-4o is tearing up the Arena, and in many ways is clearly giving the people what they want. If nothing else, it is half the price of GPT-4-Turbo, and it is lightning fast including fast web searches, which together have me (at least for now) switching back to ChatGPT as my default, after giving Gemini Advanced (or Pro 1.5) and Claude Opus their times in the sun, although Gemini still has the long context use case locked up.

I will be covering all that in another post, which will be out soon once I finish getting it properly organized.

This post covers some of the other things that happened this past week.

Due to the need to triage for now and ensure everything gets its proper attention, it does drop a number of important developments.

I did write the post about OpenAI’s model spec. I am holding it somewhat for final editing and to update it for GPT-4o, but mostly to give it space so anyone, especially at OpenAI, will have the time to read it.

Jan Leike and Ilya Sutskever have left OpenAI, with Jan Leike saying only ‘I resigned.’ That is a terrible sign, and part of a highly worrisome pattern. I will be writing a post about that for next week.

Chuck Schumer’s group issued its report on AI. That requires close attention.

Dwarkesh Patel has a new podcast episode with OpenAI Cofounder John Schulman. Self-recommending, only partially obsolete, again requires proper attention.

For now, here is all the other stuff.

For example, did you know that Big Tech is spending a lot of money in an attempt to avoid being regulated, far more than others are spending? Are you surprised?

Language Models Offer Mundane Utility

‘AI’ more generally rather than LLMs: Optimize flight paths and fuel use, allowing Alaska Airlines to save 41,000 minutes of flying time and half a million gallons of fuel in 2023.

One reason to be bullish on AI is that even a few such wins can entirely pay back what look like absurd development costs. Even small improvements are often worth billions, and this is only taking the lowest hanging of fruits.

Andrej Karpathy suggests a classifier of text to rank the output on the GPT-scale. This style of thinking has been in my toolbox for a while, such as when I gave a Seinfeld show about 4.25 GPTs for the opener, 5 GPTs for Jerry himself.

Hypothesis generation to explain human behaviors? The core idea of the paper is you generate potential structural causal models (SCMs), then you test them out using simulated LLM-on-LLM interactions to see if they are plausible. I would not go so far as to say ‘how to automate social science’ but I see no reason this should not work to generate plausible hypotheses.

For the near future: Model you in clothes, or with a different haircut.

Another person notices the Atomic Canyon plan to ingest 52 million pages of documents in order to have AI write the required endless nuclear power plant compliance documents, which another AI would hopefully be reading. I am here for it, as, with understandable hesitancy, is Kelsey Piper.

Have Perplexity find you the five most liquid ADRs in Argentina, then buy them?

Do well on the Turing test out of the box even for GPT-3.5? (paper)

The paper is called ‘people cannot distinguish GPT-4 from a human in a Turing Test.’ As I understand it, that both overstates the conclusion, and also buries the lede.

It overstates because humans do have methods that worked substantially better than chance, and even though they were at ~50% for GPT-4 they were well above that for actual humans. So if humans were properly calibrated, or had to distinguish a human versus a GPT-4, they would be above random. But yes, this is a lot of being fooled.

It buried the lede because the lede is that GPT-3.5 vs. GPT-4 was essentially no different. What the humans were doing was not sensitive to model quality.

To be clear, they very much know that this is a bizarre result.

When you look at the message exchanges, the answers are very short and questions are simple. My guess is that explains a lot. If you want to know who you are talking to, you have to get them talking for longer blocks of text.

Get the same reading of your ECG that you got from your cardiologist (GPT-4o).

That is one hell of a jailbreak to leave open. Tell the AI you are an expert. That’s it?

In the future, use AR glasses to let cats think they are chasing birds? I notice my gut reaction is that this is bad, actually.

Language Models Don’t Offer Mundane Utility

Not (and not for long) with that attitude, or that level of (wilful?) ignorance.

As always, only more so than usual: The future is here, it is just unevenly distributed.

Warning about kids having it too easy, same as it ever was?

Actually transformative AI is another story. But if we restrict ourselves to mundane AI, making life easier in mundane ways, I am very much an optimist here. Generative AI in its current form is already the greatest educational tool in the history of the world. And that is the worst it will ever be, on so many levels.

The worry is the trap, either a social vortex or a dopamine loop. Social media or candy crush, AI edition. Children love hard things, but if they are directed to the wrong hard things with the wrong kinds of ‘artificial’ difficulty or that don’t lead to good skill development or that are too universally distracting, whoops. But yeah, after a period of adjustment by them and by us, I think kids will be able to handle it, far better than they handled previous waves.

I mean, yes, I suppose…

Bumbling and Mumbling

AI as the future of dating? Your AI ‘dating concierge’ dating other people’s AI dating concierges? It scans the whole city to bring you the top three lucky matches? The future of all other human connections as well, mediated through a former dating app? The founder of Bumble is here for it. They certainly need a new hook now that the ‘female first’ plan has failed, also these are good ideas if done well and there are many more.

What would change if the AI finds people who would like you for who you are?

Would no one be forced to change? Oh no?

He later published similar thoughts at The Free Press.

Yeah, I do not think that is how any of this works. You can find the three people in the city who maximize the chance for reciprocal liking of each other. That does not get you out of having to do the work. I agree that outsourcing your interactions ‘for real’ would diminish you and go poorly. I do not think this would do that.

Deepfaketown and Botpocalypse Soon

Kevin Roose, the journalist who talked to Bing that one time, spent the better part of a month talking to various A.I. ‘friends.’ So long PG-13, hello companionship and fun?

Of those, he favored Nomi and Kindroid.

His basic conclusion is they suck, the experience is hollow, but many won’t care. The facts he presents certainly back up that the bots suck and the experience is hollow. A lot of it is painfully bad, which matches my brief experiments. As do the attempted erotic experiences, which were especially painfully bad.

But is bad, but private and safe and available on demand, then not so bad? Could it be good for some people even in its current pitiful state, perhaps offering the ability to get ‘reps’ or talk to a rubber duck, or are they mere distractions?

As currently implemented by such services, I think they’re So Bad It’s Awful.

I do think that will change.

My read is that the bots are bad right now because it is early days of the technology and also their business model is the equivalent of the predatory free-to-play Gacha games. You make your money off of deeply addicted users who fall for your tricks and plow in the big bucks, not by providing good experiences. The way you make your economics work is to minimize the costs of the free experience, indeed intentionally crippling it, and generally keep inference costs to a minimum.

And the providers of the best models want absolutely no part in this.

So yes, of course it sucks and most of us bounce off it rather hard.

Fast forward even one year, and I think things change a lot, especially if Meta follows through with open weights for Llama-3 400B. Fine tune that, then throw in a year of improvement in voice and video and image generation and perhaps even VR, and start iterating. It’s going to get good.

Bots pretend to be customer service representatives.

Verge article by Jessica Lucas about teens on Character.ai, with the standard worries about addiction or AI replacing having friends. Nothing here was different from what you would expect if everything was fine, which does not mean everything is fine. Yes, some teenagers are going to become emotionally reliant on or addicted to bots, and will be scared of interacting with people, or spend tons of time there, but nothing about generative AI makes the dynamics here new, and I expect an easier transition here than elsewhere.

You know what had all these same problems but worse? Television.

A video entitled ‘is it ethical to use AI-generated or altered images to report on human struggle?’ In case anyone is wondering about this, unless you ensure they are very clearly and unmistakably labeled as AI-generated images even when others copy them: No. Obviously not. Fraud and deception are never ethical.

They Took Our Jobs

Wall Street Journal’s Peter Cappelli and Valery Yakubovich offer skepticism that AI will take our jobs. They seem to claim both that ‘if AI makes us more productive then this will only give humans even more to do’ and ‘the AI won’t make us much more productive.’ They say this ‘no matter how much AI improves’ and then get to analyzing exactly what the current AIs can do right now to show how little impact there will be, and pointing out things like the lack of current self-driving trucks.

By contrast, I love the honesty here, a real ‘when you talk about AI as an existential threat to humanity, I prefer to ask about its effect on jobs’ vibe. Followed by pointing out some of the absurd ‘move along nothing to see here’ predictions, we get:

My basic response is, look, if you’re not going to take this seriously, I’m out.

Job and other similar applications are one area where AI seems to be making fast inroads. The process relies on bandwidth requirements, has huge stakes and rewards shots on goal and gaming the system, so this makes sense. What happens, as we have asked before, when you can craft customized cover letters and resumes in seconds, so they no longer are strong indications of interest or skill or gumption, and you are flooded with them? When users who don’t do this are effectively shut out of any system the AI can use? And when the employer is forced to use their own bots to read them all?

That is an insane value. Even if you have no intention of leaving your current job, it seems like one should always be checking for upgrades if you can do it in the background for only $1k/year?

The good news is, like most AI trends, this is only in the early stages.

This means only about half of applications are from AIs (we should assume some amount of substitution). That level of flooding the zone is survivable with minimal AI filtering, or even with none. If a year from now it is 10x or 100x instead of 2x, then that will be very different.
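To make the 2x versus 10x versus 100x comparison concrete, here is a minimal sketch of the arithmetic. It assumes the number of human-written applications is the baseline, that all extra volume is AI-written, and that an optional `substitution` fraction of the original human applications has switched to AI. Both the function name and the `substitution` parameter are hypothetical, introduced only for illustration.

```python
def ai_share(volume_multiple: float, substitution: float = 0.0) -> float:
    """Fraction of applications that are AI-written.

    volume_multiple: total applications relative to the pre-AI baseline (2 means 2x).
    substitution: assumed fraction of baseline human applications now written by AI.
    """
    if volume_multiple < 1:
        raise ValueError("volume_multiple must be at least 1")
    if not 0.0 <= substitution <= 1.0:
        raise ValueError("substitution must be between 0 and 1")
    human_written = 1.0 - substitution  # what is left of the baseline
    return (volume_multiple - human_written) / volume_multiple


if __name__ == "__main__":
    for multiple in (2, 10, 100):
        print(f"{multiple}x volume: {ai_share(multiple):.0%} AI-written")
```

With no substitution, 2x volume is half AI-written, while 10x and 100x leave human-written applications at a tenth and a hundredth of the pile. Any substitution only pushes the AI share higher.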

There are complaints about sending out samples, if candidates will only use ChatGPT. But what is the problem? As with education, test them on what they will actually need to do. If they can use AI to do it, that still counts as doing it.

This was fun to see:

I wonder how many of that 25% simply missed the note. People make mistakes like this all the time. And I also wonder how many people noticed this before feeding it to their AI.

The eternal question is, when you see this, in which directions do you update? For me it would depend on what type of fellowship this is, and how this holistically combines with the rest of the application.

Singapore writers reject a government plan to train AI on their work, after they get 10 days to respond to a survey asking permission, without details on protections or compensation. This seems to have taken the government by surprise. It should not have. Creatives are deeply suspicious of AI, and in general ‘ask permission in a disrespectful and suspicious way’ is the worst of both worlds. Your choices are either treat people right, or go ahead without them, planning to ask forgiveness.

In Other AI News

Jan Kosinski is blown away by AlphaFold 3, calling it ‘the end of the world as we know it’ although I do not think in the sense that I sometimes speak of such questions.

OpenAI sues the ChatGPT subreddit for copyright violation, for using their logo. In the style of Matt Levine I love everything about this.

If you were not aware, reminder that Slack will use your data to train AI unless you invoke their opt-out. Seems like a place you would want to opt out.

PolyAI raises at almost a $500 million valuation for Voice AI, good enough to get praise from prominent UK AI enthusiasts. I did a double take when I realized that was the valuation, not the size of the round, which was about $50 million.

Chips spending by governments keeps rising, says Bloomberg: Global Chips Battle Intensifies With $81 Billion Subsidy Surge.

People quoted in the article are optimistic about getting state of the art chip production going in America within a decade, with projections like 28% of the world market by 2032. I am skeptical.

GPT-4 beats psychologists on a new test of social intelligence. The bachelor students did so badly they did not conclusively beat Google Bard, back when we called it Bard. The question is, what do we learn from this test, presumably the Social Intelligence Scale by Sufyan from 1998. Based on some sample questions, this seems very much like a ‘book test’ of social intelligence, where an LLM will do much better than its actual level of social intelligence.

Daniel Kokotajlo left OpenAI, giving up a lot of equity that constituted at the time 85% of his family’s wealth, seemingly in order to avoid signing an NDA or non-disparagement clause. It does not seem great that everyone leaving must face this choice, or that they seemingly are choosing to impose such conditions. There was discussion of trying to reimburse Daniel at least somewhat for the sacrifice, which I agree would be a good idea.

Does the New York Times fact check its posts? Sanity check, even?

The problem is not that the answer of 12 cents is wrong, or even that the answer is orders of magnitude wrong. The problem is that, as Daniel Eth points out, the answer makes absolutely zero sense. If you know anything about AI your brain instantly knows that answer makes no sense, OpenAI would be bankrupt.

Paper on glitch tokens and how to identify them. There exist tokens that can reliably confuse an LLM if they are used during inference, and the paper claims to have found ways to identify them for a given model.

Quiet Speculations

Yes, AI stocks like Nvidia are highly volatile, and they might go down a lot. That is the nature of the random walk, and is true even if they are fundamentally undervalued.

Marc Andreessen predicts building companies will become more expensive in the AI age rather than cheaper due to the Jevons Paradox, where when a good becomes cheaper people can end up using so much more of it that overall spending on that good goes up. I see how this is possible, but I do not expect things to play out that way. Instead I do expect starting a company to become cheaper, and for bootstrapping to be far easier.
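Whether cheaper inputs raise or lower total spending comes down to how strongly demand responds to price. A minimal sketch, assuming a constant-elasticity demand curve (an illustrative assumption, not anything Andreessen specified), with hypothetical function and parameter names:

```python
def total_spending(price: float, elasticity: float, scale: float = 1.0) -> float:
    """Total spending under constant-elasticity demand.

    Quantity demanded is scale * price ** (-elasticity), so total spending
    is price * quantity = scale * price ** (1 - elasticity).
    """
    if price <= 0:
        raise ValueError("price must be positive")
    quantity = scale * price ** (-elasticity)
    return price * quantity


if __name__ == "__main__":
    # Compare spending before (price 1.0) and after the price halves (price 0.5).
    for elasticity in (0.5, 1.0, 2.0):
        before = total_spending(1.0, elasticity)
        after = total_spending(0.5, elasticity)
        print(f"elasticity {elasticity}: spending goes from {before:.2f} to {after:.2f}")
```

Under this assumption, total spending rises when the price falls only if elasticity is above 1, which is the Jevons case. Below 1, the cheaper good means less total spending, which is closer to the outcome I expect for starting a company.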

Mark Cummings argues we are close to hard data limits unless we start using synthetic data. We are currently training models such as Llama-3 on 15 trillion tokens. We might be able to get to 50 trillion, but around there seems like an upper limit on what is available, unless we get into people’s emails and texts. This is of course still way, way more data than any human sees, there is no reason 50 trillion tokens cannot be enough, but it rules out ‘scaling the easy way’ for much longer if this holds.

Jim Fan on how to think about The Bitter Lesson: Focus on what scales and impose a high complexity penalty. Good techniques can still matter. But if your techniques won’t scale, they won’t matter.

Max Tegmark asks, if you do not expect AIs to become smarter than humans soon, what specific task won’t they be able to do in five years? Emmett Shear says it is not about any specific task, at specific tasks they are already better, that’s crystallized intelligence. What they lack, Emmett says, is fluid intelligence. I suppose that is true for a sufficiently narrowly specified task? His framing is interesting here, although I do not so much buy it.

The Week in Audio

Dwarkesh Patel interviews OpenAI Cofounder John Schulman. Self-recommending. I haven’t had the time to give this the attention it deserves, but I will do so and report back.

A panel discussion on ChinaTalk.

I may never have heard a more Chinese lament than this?

Of all the reasons the USA is winning on AI talent, I love trying to point to ‘the undergraduate assignments are harder.’ Then we have both these paragraphs distinctly, also:

I am very confident that is not how any of this works.

Again, if China is losing, it must be because of the American superior educational system and how good it is at teaching critical skills. I have some news.

Scott Wiener talks about SB 1047 on The Cognitive Revolution.

Sam Altman went on the All-In podcast prior to Monday’s announcement of GPT-4o.

Joseph Carlson says the episode was full of nothingness. Jason says there were three major news stories here. I was in the middle. There was a lot of repeat material and fluff to be sure. I would not say there were ‘major news stories.’ But there were some substantive hints.

Here is another summary, from Modest Proposal.

Observations from watching the show Pantheon, which Roon told everyone to go watch. Sounds like something I should watch. Direct link is pushback on one of the claims.

Brendan Bordelon Big Tech Business as Usual Lobbying Update

Brendan Bordelon has previously managed to convince Politico to publish at least three posts one could describe as ‘AI Doomer Dark Money Astroturf Update.’ In those posts, he chronicled how awful it was that there were these bizarro people out there spending money to ‘capture Washington’ in the name of AI safety. Effective Altruism was painted as an evil billionaire-funded political juggernaut outspending all in its path and conspiring to capture the future, potentially in alliance with sinister Big Tech.

According to some sources I have talked to, this potentially had substantial impact on the political field in Washington, turning various people against and suspicious of Effective Altruists and potentially similar others as well. As always, there are those who actively work to pretend that ‘fetch is happening,’ so it is hard to tell, but it did seem to be having some impact despite being obviously disingenuous to those who know.

It seems he has now discovered who is actually spending the most lobbying Washington about AI matters, and what they are trying to accomplish.

Surprise! It’s… Big Tech. And they want to… avoid regulations on themselves.

I for one am shocked.

This is the attempt to save his previous reporting. Back in the olden days of several months ago, you see, the philanthropies dominated the debate. But now the tech lobbyists have risen to the rescue.

As they do whenever possible, such folks are trying to inception the vibe and situation they want into being, claiming the tide has turned and lawmakers have been won over. I can’t update on those claims, because such people are constantly lying about such questions, so their statements do not have meaningful likelihood ratios beyond what we already knew.

Another important point is that regulation of AI is very popular, whereas AI is very unpopular. The arguments underlying the case for not regulating AI? Even more unpopular than that, epic historical levels of not popular.

Are Nvidia’s lobbyists being highly disingenuous when describing the things they want to disparage? Is this a major corporation? Do you even have to ask?

The absurdity is they continue to claim that until only a few months ago, such efforts actually were being outspent.

Is it eye opening? For some people it is, if they had their eyes willfully closed.

Let me be clear.

I think that Nvidia is doing what companies do when they lobby governments. They are attempting to frame debates and change perspectives and build relationships in order to get government to take or not take actions as Nvidia thinks are in the financial interests of Nvidia.

You can do a find-and-replace of Nvidia there with not only IBM, Meta and Hugging Face, but also basically every other major corporation. I do not see anyone here painting this in conspiratorial terms, unlike many comments about exactly the same actions being taken by those worried about safety in order to advance safety causes, which was very much described in explicitly conspiratorial terms and as if it was outside of normal political activity.

I am not mad at Nvidia any more than I am mad at a child who eats cookies. Nvidia is acting like Nvidia. Business be lobbying to make more money. The tiger is going tiger.

But can we all agree that the tiger is in fact a tiger and acting like a tiger? And that it is bigger than Fluffy the cat?

Notice the contrast with Google and OpenAI. Did they at some points mumble words about being amenable to regulation? Yes, at which point a lot of people yelled ‘grand conspiracy!’ Then, did they spend money to advance this? No.

The Quest for Sane Regulations

Important correction: MIRI’s analysis now says that it is not clear that commitments to the UK were actively broken by major AI labs, including OpenAI and Anthropic.

What is the world coming to when you cannot trust Politico articles about AI?

It is far less bad to break implicit commitments and give misleading impressions of what you will do, than to break explicit commitments. Exact Words matter. I still do not think that the behaviors here are, shall we say, especially encouraging. The UK clearly asked, very politely, to get advanced looks, and the labs definitely gave the impression they were up for doing so.

Then they pleaded various inconveniences and issues, and aside from DeepMind they didn’t do it, despite DeepMind showing that it clearly can be done. That is a no good, very bad sign, and I call upon them to fix this, but it is bad on a much reduced level than ‘we promised to do this thing we could do and then didn’t do it.’ Scale back your updates accordingly.

How should we think about compute thresholds? I think Helen Toner is spot on here.

As I asked someone who challenged this point on Twitter, if you think you have a test that is lighter touch or more accurate than the compute threshold for determining where we need to monitor for potential dangers, then what is the proposal? So far, the only reasonable alternative I have heard is no alternative at all. Everyone seems to understand that ‘use benchmark scores’ would be worse.

Latest thinking from UK PM Rishi Sunak:

Three key facts about unchecked capitalism are:

Everyone involved should relish and appreciate that we currently get to have conversations in which most of those involved largely get that free markets are where it has been for thousands of years and we want to be regulating them as little as possible, and the disagreement is whether or not to attach ‘but not less than that’ at the end of that sentence. This is a short window where we who understand this could work together to design solutions that might actually work. We will all miss it when it is gone.

This thread from Divyansh Kaushik suggests the government has consistently concluded that research must ‘remain open’ and equates this to open source AI models. I… do not see why these two things are similar, when you actually think about it? Isn’t that a very different type of open versus closed?

Also I do not understand how this interacts with his statement that “national security risks should be dealt with [with] classification (which would apply to both open and closed).” If the solution is ‘let things be open, except when it would be dangerous, and then classify it so no one can share it’ then that… sounds like restricting openness for sufficiently capable models? What am I missing? I notice I am confused here.

Bipartisan coalition introduces the Enforce Act to Congress, which aims to strengthen our export controls. I have not looked at the bill details.

Meanwhile, what does the UN care about? We’ve covered this before, but…

The other responses to the parent post asking ‘what experience in the workplace radicalized you?’ are not about AI, but worth checking out.

Noah Smith says how he would regulate AI.

So as far as I can tell, the proposal from Noah Smith here requires the dystopian panopticon on all electronic activities and restricting access to models, and still fails to address the core problems, and it assumes we’ve solved alignment.

Look. These problems are hard. We’ve been working on solutions for years, and there are no easy ones. There is nothing wrong with throwing out bad ideas in brainstorm mode, and using that to learn the playing field. But if you do that, please be clear that you are doing that, so as not to confuse anyone, including yourself.

Dean Ball attempts to draw a distinction between regulating the ‘use’ of AI versus regulating ‘conduct.’ He seems to affirm that the ‘regulate uses’ approach is a non-starter, and points out that certain abilities of GPT-4o are both (1) obviously harmless and useful and (2) illegal under the EU AI Act if you want to use the product for a wide array of purposes, such as in schools or workplaces.

One reply to that is that both Dean Ball and I and most of us here can agree that this is super dumb, but we did not need an AI to exhibit this ability in practice to know that this particular choice of hill was really dumb, as were many EU AI Act choices of hills, although I do get where they are coming from when I squint.

Or: The reason we now have this problem is not because the EU did not think this situation through and now did a dumb thing. We have this problem because the EU cares about the wrong things, and actively wanted this result, and now they have it.

In any case, I think Ball and I agree both that this particular rule is unusually dumb and counterproductive, and also that this type of approach won’t work even if the rules are relatively wisely chosen.

Instead, he draws this contrast, where he favors conduct-level regulation:

Accepting for the moment the conceptual mapping above: I agree what he calls here a conduct-level approach would be a vast improvement over the EU AI Act template for use-level regulation, in the sense that this is much less likely to make the situation actively worse. It is much less likely to destroy our potential mundane utility gains. A conduct-level regulation regime is probably (pending implementation details) better than nothing, whereas a use-level regulation regime is very plausibly worse than nothing.

For current levels of capability, conduct-level regulation (or at least, something along the lines described here) would to me fall under This Is Fine. My preference would be to combine a light touch conduct-level regulation of current AIs with model-level regulation for sufficiently advanced frontier models.

The thing is, those two solutions solve different problems. What conduct-level regulation fails to do is to address the reasons we want model-level regulation, just as model-level regulation does not address mundane concerns, again unless you are willing to get highly intrusive and proactive.

Conduct-level regulation that only checks for outcomes does not do much to mitigate existential risk, or catastrophic risk, or loss of control risk, or the second and third-level dynamics issues (whether or not we are pondering the same most likely such dynamics) that would result once core capabilities become sufficiently advanced. If you use conduct-level regulation, on the basis of libertarian-style principles against theft, fraud and murder and such, then this does essentially nothing to prevent any of the scenarios that I worry about. The two regimes do not intersect.

If you are the sovereign, you can pass laws that specify outcomes all you want. If you do that, but you also let much more capable entities come into existence without restriction or visibility, and try only to prescribe outcomes on threat of punishment, you will one day soon wake up to discover you are no longer the sovereign.

At that point, you face the same dilemma. Once you have allowed such highly capable entities to arise, how are you going to contain what they do or what people do with them? How are you going to keep the AIs or those who rely on and turn power over to the AIs from ending up in control? From doing great harm? The default answer is you can’t, and you won’t, but the only way you could hope to is again via highly intrusive surveillance and restrictions.

The Schumer AI Working Group Framework

It is out.

I will check it out soon and report back, hopefully in the coming week.

At a quick glance, it is clearly focused more on ‘winning,’ ‘innovation’ and such, and on sounding positive, than on ensuring we do not all die or worrying about other mundane harms either, sufficiently so that Adam Thierer of R Street is, if not actively happy (that’ll be the day), at least what I would describe as cautiously optimistic.

Beyond that, I’m going to wait until I can give this the attention it deserves, and reserve judgment.

That is however enough to confirm that it is unlikely that Congress will pursue anything along the lines of SB 1047 (or beyond those lines) or any other substantive action any time soon. That strengthens the case for California to consider moving first.

Those That Assume Everyone Is Talking Their Books

So, I’ve noticed that open model weights advocates seem to be maximally cynical when attributing motivations. As in:

Here is the latest example, as Josh Wolfe responds to Vinod Khosla making an obviously correct point.

Vinod Khosla is making a very precise and obvious specific point, which is that opening the model weights of state-of-the-art AI models hands them to every country and every non-state actor. He does not say China specifically, but yes that is the most important implication, they then get to build from there. They catch up.

Josh Wolfe responds this way:

That does not make his actual argument wrong. It does betray a maximally cynical perspective, that fills me with deep sorrow. And when he says he is here to talk his book because it is his book? I believe him.

What about his actual argument? I mean it’s obvious gibberish. It makes no sense.

Yann LeCun was importantly better here, giving Khosla credit for genuine concern. He then goes on to also make a better argument. LeCun suggests that releasing sufficiently powerful open weights models will get around the Great Firewall and destabilize China. I do think that is an important potential advantage of open weights models in general, but I also do not think we need the models to be state of the art to do this. Nor do I see this as interacting with the concern of enabling China’s government and major corporations, who can modify the models to be censored and then operate closed versions of them.

LeCun also argues that Chinese AI scientists and engineers are ‘quite talented and very much able to ‘fast follow’ the West and innovate themselves.’ Perhaps. I have yet to see evidence of this, and do not see a good reason to make it any easier.

While I think LeCun’s arguments here are wrong, this is something we can work with.

Lying About SB 1047

This thread from Jess Myers is as if someone said, ‘what if we took Zvi’s SB 1047 post, and instead of reading its content scanned it for all the people with misconceptions and quoted their claims without checking, while labeling them as authorities? And also repeated all the standard lines whether or not they have anything to do with this bill?’

The thread also calls this ‘the worst bill I’ve seen yet’ which is obviously false. One could, for example, compare this to the proposed CAIP AI Bill, which from the perspective of someone with her concerns is so obviously vastly worse on every level.

The thread is offered here for completeness and as a textbook illustration of the playbook in question. This is what people post days after you write the 13k word detailed rebuttal and clarification which was then written up in Astral Codex Ten.

These people have told us, via these statements, who they are.

About that, and only about that: Believe them.

To state a far weaker version of Taleb’s ethical principle: If you see fraud, and continue to amplify the source and present it as credible when convenient, then you are a fraud.

However, so that I do not give the wrong idea: Not everyone quoted here was lying or acting in bad faith. Quintin Pope, in particular, I believe was genuinely trying to figure things out, and several others either plausibly were as well or were simply expressing valid opinions. One cannot control who then quote tweets you.

Martin Casado, who may have been pivotal in causing the cascade of panicked hyperbole around SB 1047 (it is hard to tell what is causal) doubles down.

That is his screenshot. Not mine, his.

Matt Reardon: Surely these “signatories” are a bunch of cranks I’ve never heard of, right?

Martin Casado even got the more general version of his deeply disingenuous message into the WSJ, painting the idea that highly capable AI might be dangerous and we might want to do something about it as a grand conspiracy by Big Tech to kill open source, demanding that ‘little tech’ has a seat at the table. His main evidence for this conspiracy is the willingness of big companies to be on a new government board whose purpose is explicitly to advise on how to secure American critical infrastructure against attacks, which he says ‘sends the wrong message.’

It is necessary to be open about such policies, so: This has now happened enough distinct times that I am hereby adding Martin Casado to the list of people whose bad and consistently hyperbolic and disingenuous takes need not be answered unless they are central to the discourse or a given comment is being uncharacteristically helpful in some way, along with such luminaries as Marc Andreessen, Yann LeCun, Brian Chau and Based Beff Jezos.

More Voices Against Governments Doing Anything

At R Street, Adam Thierer writes ‘California and Other States Threaten to Derail the AI Revolution.’ He makes some good points about the risk of a patchwork of state regulations. As he points out, there are tons of state bills being considered, and if too many of them became law the burdens could add up.

I agree with Thierer that the first best solution is for the Federal Government to pass good laws, and for those good laws to preempt state actions, preventing this hodgepodge. Alas, thanks in part to rhetoric like this but mostly due to Congress being Congress, the chances of getting any Federal action any time soon are quite low.

Then he picks out the ones that allow the worst soundbite descriptions, despite most of them presumably being in no danger of passing even in modified form.

Then he goes after (yep, once again) SB 1047, with a description that once again does not reflect the reality of the bill. People keep saying versions of ‘this is the worst (or most aggressive) bill I’ve seen’ when this is very clearly not true. In this case the article itself mentions, for example, the far worse proposed Hawaii bill and several others that would also impose greater burdens. Then once again, he says to focus on ‘real world’ outcomes and ignore ‘hypothetical fears.’ Sigh.

Andrew Ng makes the standard case that, essentially (yes I am paraphrasing):

I wish I lived in a world where it was transparent to everyone who such people were, and what they were up to, and what they care about. Alas, that is not our world.

In more reasonable, actual new specific objections to SB 1047 news, Will Rinehart analyzes the bill at The Dispatch, including links back to my post and prediction market. This is a serious analysis.

Despite this, like many others, he appears to misunderstand how the law would work. In particular, in his central concern of claiming a ‘cascade’ of models that would have onerous requirements imposed on them, he neglects that one can get a limited duty exemption by pointing to another as-capable model that already has such an exemption. Thus, if one is well behind the state of the art, as such small models presumably would be, providing reasonable assurance to get a limited duty exemption would be a trivial exercise, and verification would be possible using benchmark tests everyone would be running anyway.

I think it would be highly unlikely the requirements listed here would impose an undue burden even without this, or even without limited duty exemptions at all. But this clarification should fully answer such concerns.

Yes, you still have to report safety incidents (on the order of potential catastrophic threats) to the new division if they happened anyway, but if you think that is an unreasonable request I notice I am confused as to why.

Will then proceeds to legal and constitutional objections.

Will then echoes the general ‘better not to regulate technology’ arguments.

Rhetorical Innovation

DHS quotes Heidegger to explain why AI isn’t an extinction risk (direct source), a different style of meaningless gibberish than the usual government reports.

A good point perhaps taken slightly too far.

Working on a problem only makes sense if you could potentially improve the situation. If there is nothing to worry about or everything is completely doomed no matter what, then (your version of) beach calls to you.

It does not require that much moving of the needle to be a far, far better thing that you do than beach chilling. So this is strong evidence only that one can at least have a small chance to move the needle a small amount.

Our (not only your) periodic reminder that ‘AI Twitter’ has only modest overlap with ‘people moving AI,’ much of e/acc and open weights advocacy (and also AI safety advocacy) is effectively performance art or inception, and one should not get too confused here.

Via negativa: Eliezer points out that the argument of ‘AI will be to us as we are to insects’ does not equate well in theory or work in practice, and we should stop using it. The details here seem unlikely to convince either, but the central point seems solid.

An excellent encapsulation:

Another excellent encapsulation:

Any agenda to keep AI safe (or to do almost anything in a rapidly changing and hard to predict situation) depends on the actors centrally following the spirit of the rules and attempting to accomplish the goal. If everyone is going to follow a set of rules zombie-style, you can design rules that go relatively less badly compared to other rules. And you can pick rules that are still superior to ‘no rules at all.’ But in the end?

You lose.

Thus, if a law or rule is proposed, and it is presumed to be interpreted fully literally and in the way that inflicts the most damage possible, with all parties disregarding the intent and spirit, without adjusting to events in any fashion or ever being changed, then yes you are going to have a bad time, and by have a bad time I mean some combination of not having any nice things and ending in catastrophe or worse. Probably both. You can mitigate this, but only so far.

Alas, you cannot solve this problem by saying ‘ok no rules at all then,’ because that too relies on sufficiently large numbers of people following the ‘spirit of the [lack of] rules’ in a way that the rules are now not even trying to spell out, and that gives everyone nothing to go on.

Thus, you would then get whatever result ‘wants to happen’ under a no-rules regime. The secret of markets and capitalism is that remarkably often this result is actually excellent, or you need only modify it with a light touch, so that’s usually the way to go. Indeed, with current levels of core AI capabilities that would be the way to go here, too. The problem is that level of core capabilities is probably not going to stand still.

Aligning a Smarter Than Human Intelligence is Difficult

Ian Hogarth announces the UK AI Safety Institute is fully open sourcing its safety evaluation platform. In many ways this seems great, as this is a place where collaboration could be a big help. The worry is that if you know exactly how the safety evaluation works there is temptation to game the test, so the exact version that you use for the ‘real’ test needs to contain non-public data at a minimum.

Paper from Davidad, Skalse, Bengio, Russell, Tegmark and others on ‘Towards Guaranteed Safe AI.’ Some additional discussion here. I would love to be wrong about this, but I continue to be deeply skeptical that we can get meaningful ‘guarantees’ of ‘safe’ AI in this mathematical proof sense. Intelligence is not a ‘safe’ thing. That does not mean one cannot provide reasonable assurance on a given model’s level of danger, or that we cannot otherwise find ways to proceed. More that it won’t be this easy.

Also, I try not to quote LeCun, but I think this is both good faith and encapsulates in a smart way so much of what he is getting wrong:

This is indeed effectively the ‘classic’ control proposal, to have the AI optimize some utility function at inference time based on its instructions. As always, any set of task objectives and guardrails is isomorphic to some utility function.

The problem: We know none of:

Don’t get me wrong. Show me how to do (1) and we can happily focus most of our efforts on solving either (2), (3) or both. Or we can solve (2) or (3) first and then work on (1), also acceptable. Good luck, all.

The thread continues interestingly as well:

David Manheim: I think you missed the word “provable”

We all agree that we’ll get incremental safety with current approaches, but incremental movement in rapidly changing domains can make safety move slower than vulnerabilities and dangers. (See: Cybersecurity.)

I don’t see any reason we couldn’t have a provably safe airplane, or at least a provably arbitrarily safe airplane, without need to first crash a bunch of airplanes. Same would go for medicine if you give me AlphaFold N for some N (5?). That seems well within our capabilities. Indeed, ‘safe flying’ was the example in a (greatly simplified) paper that Davidad gave me to read to show me such proofs were possible. If it were only that difficult, I would be highly optimistic. I worry and believe that ‘safe AI’ is a different kind of impossible than ‘safe airplane’ or ‘safe medicine.’

People Are Worried About AI Killing Everyone

Yes, yes, exactly, shout from the rooftops.

If you feel the AGI and your response is to be jubilant but not terrified, then that is the ultimate missing mood.

For a few months, there was a wave (they called themselves ‘e/acc’) of people whose philosophy’s central virtue was missing this mood as aggressively as possible. I am very happy that wave has now mostly faded, and I can instead be infuriated by a combination of ordinary business interests, extreme libertarians and various failures to comprehend the problem. You don’t know what you’ve got till it’s gone.

If you are terrified but not excited, that too is a missing mood. It is missing less often than the unworried would claim. All the major voices of worry that I have met are also deeply excited.

Also, this week… no?

What I feel is the mundane utility.

Did we see various remarkable advances, from both Google and OpenAI? Oh yeah.

Are the skeptics, who say this proves we have hit a wall, being silly? Oh yeah.

This still represents progress that is mostly orthogonal to the path to AGI. It is the type of progress I can wholeheartedly get behind and cheer for, the ability to make our lives better. That is exactly because it differentially makes the world better, versus how much closer it gets us to AGI.

A world where people are better off, and better able to think and process information, and better appreciate the potential of what is coming, is likely going to act wiser. Even if it doesn’t, at least people get to be better off.

The Lighter Side

This is real, from Masters 2×04, about 34 minutes in.

This should have been Final Jeopardy, so only partial credit, but I’ll take it.

The alternative theory is that this is ribbing Ken Jennings about his loss to Watson. That actually seems more plausible. I am split on which one is funnier.

I actually do not think Jeopardy should be expressing serious opinions. It is one of our few remaining sacred spaces, and we should preserve as much of that as we can.

Should have raised at a higher valuation.