Note that the goal of "work on long-term research bets now so that a workforce of AI agents can automate it in a couple of years" implies somewhat different priorities than "work on long-term research bets to eventually have them pay off through human labor", notably:

- The research direction needs to be actually pursued by the agents, either through the decision of the human leadership, or through the decision of AI agents that the human leadership defers to. This means that if some long-term research bet isn't respected by lab leadership, it's unlikely to be pursued by their workforce of AI agents.

- This implies that a major focus of current researchers should be on credibility and having a widely agreed-on theory of change. If this is lacking, then the research will likely never be pursued by the AI agent workforce and all the work will likely have been for nothing.

- Maybe there is some hope that despite a research direction being unpopular among lab leadership, the AI agents will realize its usefulness on their own, and possibly persuade the lab leadership to let them expend compute on the research direction in question. Or maybe the agents will have so much free rein over research that they don't even need to ask for permission to pursue new research directions.

- Setting oneself up for providing oversight to AI agents. There might be a period during which agents are very capable at research engineering / execution but not research management, and leading AGI companies are eager to hire human experts to supervise large numbers of AI agents. If one doesn't have legible credentials or good relations with AGI companies, they are less likely to be hired during this period.

- Delaying engineering-heavy projects until engineering is cheap relative to other types of work.

(some of these push in opposite directions, e.g., engineering-heavy research outputs might be especially good for legibility)

The research direction needs to be actually pursued by the agents, either through the decision of the human leadership, or through the decision of AI agents that the human leadership defers to. This means that if some long-term research bet isn't respected by lab leadership, it's unlikely to be pursued by their workforce of AI agents.

I think you're starting from a good question here (e.g. "Will the research direction actually be pursued by AI researchers?"), but have entirely the wrong picture; lab leaders are unlikely to be very relevant decision-makers here. The key is that no lab has a significant moat, and the cutting edge is not kept private for long, and those facts look likely to remain true for a while. Assuming even just one cutting-edge lab continues to deploy at all like they do today, basically-cutting-edge models will be available to the public, and therefore researchers outside the labs can just use them to do the relevant research regardless of whether lab leadership is particularly invested. Just look at the state of things today: one does not need lab leaders on board in order to prompt cutting-edge models to work on one's own research agenda.

That said, "Will the research direction actually be pursued by AI researchers?" remains a relevant question. The prescription is not so much about convincing lab leadership, but rather about building whatever skills will likely be necessary in order to use AI researchers productively oneself.

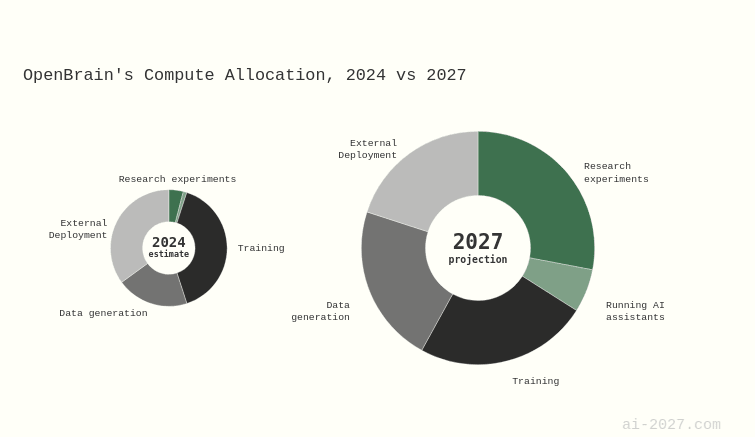

Labs do have a moat around compute. In the worlds where automated R&D gets unlocked I would expect compute allocation to substantially pivot, making non-industrial automated research efforts non-competitive.

"Labs" are not an actor. No one lab has a moat around compute; at the very least Google, OpenAI, Anthropic, xAI, and Facebook all have access to plenty of compute. It only takes one of them to sell access to their models publicly.

Sure, but I think that misses the point that I was trying to convey. If we end up in a world similar to the ones forecasted in AI 2027, the share of compute that labs allocate towards speeding up their own research threads will be larger than the share they sell for public consumption.

My view is that even in worlds with significant speed-ups in R&D, we still ultimately care about the relative speed of progress on scalable alignment (in the Christiano sense) compared to capabilities & prosaic safety; it doesn't matter if we finish quicker if catastrophic AI is finished quickest. Thus, an effective theory of change for speeding up long-horizon research would still route through convincing lab leadership of the pursuitworthiness of research streams.

I have no idea what you're picturing here. Those sentences sounded like a sequence of non sequiturs, which means I probably am completely missing what you're trying to say. Maybe spell it out a bit more?

Some possibly-relevant points:

- The idea that all the labs focus on speeding up their own research threads rather than serving LLMs to customers is already pretty dubious. Developing LLMs and using them are two different skillsets; it would make economic sense for different entities to specialize in those things, with the developers selling model usage to the users just as they do today. More capable AI doesn't particularly change that economic logic. I wouldn't be surprised if at least some labs nonetheless keep things in-house, but all of them?

- The implicit assumption that alignment/safety research will be bottlenecked on compute at all likewise seems dubious at best, though I could imagine an argument for it (routing through e.g. scaling inference compute).

- It sounds like maybe you're assuming that there's some scaling curve for (alignment research progress as a function of compute invested) and another for (capabilities progress as a function of compute invested), and you're imagining that to keep the one curve ahead of the other, the amount of compute aimed at alignment needs to scale in a specific way with the amount aimed at capabilities? (That model sounds completely silly to me, that is not at all how this works, but it would be consistent with the words you're saying.)

The idea that all the labs focus on speeding up their own research threads rather than serving LLMs to customers is already pretty dubious. Developing LLMs and using them are two different skillsets; it would make economic sense for different entities to specialize in those things

I can maybe see it. Consider the possibility that the decision to stop providing public access to models past some capability level is convergent: e. g., the level at which they're extremely useful for cyberwarfare (with jailbreaks still unsolved) such that serving the model would drown the lab in lawsuits/political pressure, or the point at which the task of spinning up an autonomous business competitive with human businesses, or making LLMs cough up novel scientific discoveries, becomes trivial (i. e., such that the skill level required for using AI for commercial success plummets – which would start happening inasmuch as AGI labs are successful in moving LLMs to the "agent" side of the "tool/agent" spectrum).

In those cases, giving public access to SOTA models would stop being the revenue-maximizing thing to do. It'd either damage your business reputation[1], or it'd simply become more cost-effective to hire a bunch of random bright-ish people and get them to spin up LLM-wrapper startups in-house (so that you own 100% stake in them).

Some loose cannons/open-source ideologues like DeepSeek may still provide free public access, but those may be few and far between, and significantly further behind. (And getting progressively scarcer; e. g., the CCP probably won't let DeepSeek keep doing it.)

Less extremely, AGI labs may move to a KYC-gated model of customer access, such that only sufficiently big, sufficiently wealthy entities are able to get access to SOTA models. Both because those entities won't do reputation-damaging terrorism, and because they'd be the only ones able to pay the rates (see OpenAI's maybe-hype maybe-real whispers about $20,000/month models).[2] And maybe some EA/R-adjacent companies would be able to get in on that, but maybe not.

Also,

no lab has a significant moat, and the cutting edge is not kept private for long, and those facts look likely to remain true for a while

This is a bit flawed, I think. I think the situation is that runners-up aren't far behind the leaders in wall-clock time. Inasmuch as the progress is gradual, this translates to runners-up being not-that-far-behind the leaders in capability level. But if AI-2027-style forecasts come true, with the capability progress accelerating, a 90-day gap may become a "GPT-2 vs. GPT-4"-level gap. In which case alignment researchers having privileged access to true-SOTA models becomes important.
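To make the wall-clock versus capability distinction concrete, here is a toy sketch. Everything in it is an assumption for illustration, not something taken from the forecasts: capability is tracked as a single log-scale number, `base_rate` is its growth per day, and `doubling_days` (hypothetical) is how often that growth rate doubles in the accelerating case. Under steady growth a fixed lag gives a fixed gap; under accelerating growth the same lag gives a gap that keeps widening.

```python
import math


def log_capability(t_days, base_rate, doubling_days=None):
    """Toy cumulative log-capability at time t_days (arbitrary units).

    doubling_days=None: the growth rate is constant at base_rate per day.
    Otherwise: the growth rate starts at base_rate and doubles every
    doubling_days; we integrate that rate from day 0 to t_days.
    """
    if doubling_days is None:
        return base_rate * t_days
    k = math.log(2) / doubling_days
    return base_rate * (math.exp(k * t_days) - 1.0) / k


def capability_gap(t_days, lag_days, base_rate, doubling_days=None):
    """Gap between a leader at t_days and a runner-up lag_days behind."""
    leader = log_capability(t_days, base_rate, doubling_days)
    follower = log_capability(t_days - lag_days, base_rate, doubling_days)
    return leader - follower


if __name__ == "__main__":
    # Same 90-day lag, measured at three different points in time.
    for t in (90, 360, 720):
        steady = capability_gap(t, 90, base_rate=0.01)
        accel = capability_gap(t, 90, base_rate=0.01, doubling_days=180)
        print(f"day {t}: steady gap={steady:.2f}, accelerating gap={accel:.2f}")
```

The specific numbers are arbitrary; the only point is the qualitative one that a constant calendar lag stops implying a constant capability lag once the growth rate itself is rising.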

(Ideally, we'd have some EA/R-friendly company already getting cozy with e. g. Anthropic so that they can be first-in-line getting access to potential future research-level models so that they'd be able to provide access to those to a diverse portfolio of trusted alignment researchers...)

[1] Even if the social benefits of public access would've strictly outweighed the harms on a sober analysis, the public outcry at the harms may be significant enough to make the idea commercially unviable. Asymmetric justice, etc.

[2] Indeed, do we know it's not already happening? I can easily imagine some megacorporations having had privileged access to o3 for months.

P. P. S.

In the month since writing the previous comment I have read the following article by @Abhishaike Mahajan and believe it illustrates well why the non-tech world is so difficult for AI, can recommend: https://www.owlposting.com/p/what-happened-to-pathology-ai-companies

hire a bunch of random bright-ish people and get them to spin up LLM-wrapper startups in-house (so that you own 100% stake in them).

I doubt it's really feasible. These startups will require a significant infusion of capital, so AI companies' CEOs and CFOs will have a say in how they develop. But tech CEOs and CFOs have no idea how developments in other industries work and why they are slow, so they will mismanage such startups.

P. S. Oh, and also I realized the other day: whether you are an AI agent or just a human, imagine the temptation to organize a Theranos-type fraud if details of your activity are mostly secret and you only report to tech bros believing in the power of AGI/ASI!

I strongly agree, and as I've argued before, long timelines to ASI are possible even if we have proto-AGI soon, and aligning AGI doesn't necessarily help solve ASI risks. It seems like people are being myopic, assuming their modal outcome is effectively certain, and/or not clearly holding multiple hypotheses about trajectories in their minds, so they are undervaluing conditionally high value research directions.

"Short Timelines means the value of Long Horizon Research is prompting future AIs"

Would be a more accurate title for this, imo

this runs into the "assumes powerful ai will be low/non agentic" fallacy

or "assumes ai's that can massively assist in long horizon alignment research will be low/non agentic"

Right, the way I'm looking at this post is through the lens of someone making decisions about AI safety research – like an independent researcher, or maybe a whole research organization. Anyone who's worried about AI safety and trying to figure out where to put their effort. The core goal, the 'cost function' is fundamentally about cutting down the risks from advanced AI.

Now, the standard thinking might have been: if you reckon we're in a 'short timelines world' where powerful AI is coming fast, then obviously you should focus on research that pays off quickly, things like AI control methods you can maybe apply soon.

The post points out that even if we are in that short timelines scenario, tackling the deeper, long-term foundational stuff might still be a good bet. The reasoning is that there's a chance this foundational work, even if we humans don't finish it, could get picked up and carried forward effectively by some future AI researcher. Of course, that whole idea hinges on a big 'if': if we can actually trust that future AI and be confident it's genuinely aligned with helping us.

This is an excellent observation, so let me underline it by repeating it in my own words: alignment research that humans can't do well or don't have time to do well, might still be done right and at high speed with AI assistance.

I disagree: short timelines do devalue long-horizon research, at least somewhat, and I think that in practice this reduces its usefulness by probably a factor of 10.

Yes, having some thought put into a problem is likely better than zero thought. Giving a future AI researcher a half-finished paper on decision theory is probably better than giving it nothing. The question is how much better, and at what cost?

Opportunity Cost is Paramount: If timelines are actually short (months/few years), then every hour spent on deep theory with no immediate application is an hour not spent on:

- Empirical safety work on existing/imminent systems (LLM alignment, interpretability, monitoring).

- Governance, policy, coordination efforts.

- The argument implicitly assumes agent foundations and other moonshots offer the highest marginal value as a seed compared to progress in these other areas. I am highly skeptical of this.

Confidence in Current Directions: How sure are we that current agent foundations research is even pointing the right way? If it's fundamentally flawed or incomplete, seeding future AIs with it might be actively harmful, entrenching bad ideas. We might be better off giving them less, but higher-quality, guidance, perhaps focused on methodology or verification.

Cognitive Biases: Could this argument be motivated reasoning? Researchers invested in long-horizon theoretical work naturally seek justifications for its continued relevance under scenarios (short timelines) that might otherwise devalue it. This "seeding" argument provides such a justification, but its strength needs objective assessment, not just assertion.

The same is valid for life extension research. It requires decades, and many, including Bryan Johnson, say that AI will solve aging and therefore human research in aging is not relevant. However, most aging research is about collecting data on very slow processes. The more longitudinal data we collect, the easier it will be for AI to "take up the torch."

Does this argument extend to crazy ideas like Scanless Whole Brain Emulation, or would ideas like that require so much superintelligence to complete that the value of initial human progress would end up sort of negligible?

The key is still to distinguish good from bad ideas.

In the linked post, you essentially make the argument that "Whole brain emulation artificial intelligence is safer than LLM-based artificial superintelligence". That's a claim that might be true or not true. One aspect of spending more time with that idea would be to think more critically about whether that's true.

However, even if it were true, it wouldn't help in a scenario where we already have LLM-based artificial superintelligence.

There is a lot wrong with this post.

- The AI you want to assist you in research is misaligned and not trustworthy.

- AI is becoming less corrigible as it becomes more powerful.

- AI safety research almost certainly cannot outpace AI capabilities research from an equal start.

- AI safety research is way behind capabilities research.

- Solving the technical alignment problem on its own does not solve the AI doom crisis.

- Short timelines very likely mean we're just dead, so this is a conversation about what to do with the last years of your life, not what to do that stands a chance at being useful.

Overall, the argument in this post serves primarily to reinforce an existing belief and to make people feel better about what they are already doing. (In other words, it is just cope.)

Bonus:

- AI governance is strictly necessary in order to prevent the world from being destroyed.

- AI governance on its own is sufficient to prevent the world from being destroyed.

- AI governance is evidentially much more tractable than AI technical alignment.

That is, given that you get useful work out of AI-driven research before things fall apart (reasonable but not guaranteed).

That being said, this strategy relies on the approaches that are fruitful for us also being the approaches that are fruitful for AI-assisted, AI-accelerated, or AI-conducted research (again reasonable, but not certain).

It also relies on work done now giving useful direction, especially if parallelism grows faster than serial speeds.

In short, this says that if time horizons to AI assistance are short, the most important things are A. The framework to be able to verify an approach, so we can hand it off. B. Information about whether it will ultimately be workable.

As always, it seems to bias towards long term approaches where you can do the hard part first.

That being said, this strategy relies on the approaches that are fruitful for us also being the approaches that are fruitful for AI-assisted, AI-accelerated, or AI-conducted research (again reasonable, but not certain).

What is being excluded by this qualification?

Mainly things that we would never think of: approaches that are fruitful for AI but not for us.

Things that are useful for us but not for AI are things like investigating gaps in tokenization, hiding things from AI, and things that are hard to explain or judge, because we probably ought to trust AI researchers less than we do human researchers with regard to good faith.

That seems correct, but I don't think any of those aren't useful to investigate with AI, despite the relatively higher bar.

Short AI takeoff timelines seem to leave no time for some lines of alignment research to become impactful. But any research rebalances the mix of currently legible research directions that could be handed off to AI-assisted alignment researchers or early autonomous AI researchers whenever they show up. So even hopelessly incomplete research agendas could still be used to prompt future capable AI to focus on them, while in the absence of such incomplete research agendas we'd need to rely on AI's judgment more completely. This doesn't crucially depend on giving significant probability to long AI takeoff timelines, or on expected value in such scenarios driving the priorities.

Potential for AI to take up the torch makes it reasonable to still prioritize things that have no hope at all of becoming practical for decades (with human effort). How well AIs can be directed to advance a line of research further (and the quality of choices about where they should be directed) depends on how well these lines of research are already understood. Thus it can be important to make as much partial progress as possible in developing (and deconfusing) them in the years (or months!) before AI takeoff. This notably seems to concern agent foundations / decision theory, in contrast with LLM interpretability or AI control, which more plausibly have short term applications.

In this sense current human research, however far from practical usefulness, forms the data for aligning the early AI-assisted or AI-driven alignment research efforts. The judgment of human alignment researchers who are currently working makes it possible to formulate more knowably useful prompts for future AIs (possibly in the run-up to takeoff) that nudge them in the direction of actually developing even preliminary theory into practical alignment techniques.