(Status: another point I find myself repeating frequently.)

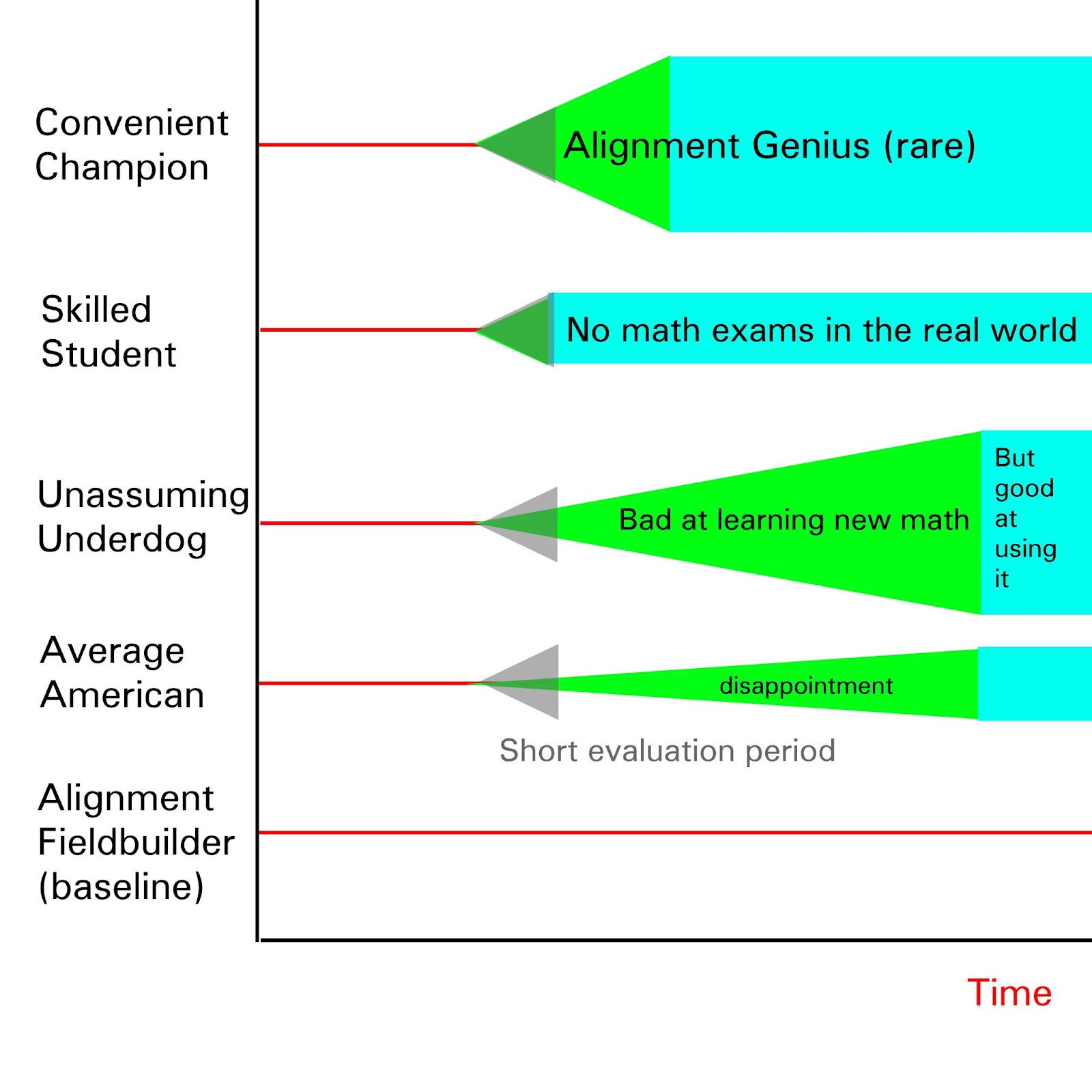

One of the reasons I suspect we need a lot of serial time to solve the alignment problem is that alignment researchers don't seem to me to "stack", where "stacking" means something like: quadrupling the size of your team of highly skilled alignment researchers lets you finish the job in ~1/4 of the time.

It seems to me that whenever somebody new and skilled arrives on the alignment scene, with the sort of vision and drive that lets them push in a promising direction (rather than just doing incremental work that has little chance of changing the strategic landscape), they push in a new direction relative to everybody else. Eliezer Yudkowsky and Paul Christiano don't have any synergy between their research programs. Adding John Wentworth doesn't really speed up either of them. Adding Adam Shimi doesn't really speed up any of the previous three. Vanessa Kosoy isn't overlapping with any of the other four.

Sure, sometimes one of our visionary alignment-leaders finds a person or two that sees sufficiently eye-to-eye with them and can speed things along (such as Diffractor with Vanessa, it seems to me from a distance). And with ops support and a variety of other people helping out where they can, it seems possible to me to take one of our visionaries and speed them up by a factor of 2 or so (in a simplified toy model where we project ‘progress’ down to a single time dimension). But new visionaries aren't really joining forces with older visionaries; they're striking out on their own paths.

And to be clear, I think that this is fine and healthy. It seems to me that this is how fields are often built, with individual visionaries wandering off in some direction, and later generations following the ones who figured out stuff that was sufficiently cool (like Newton or Laplace or Hamilton or Einstein or Grothendieck). In fact, the phenomenon looks even more wide-ranging than that, to me: When studying the Napoleonic Wars, I was struck by the sense that Napoleon could have easily won if only he'd been everywhere at once; he was never able to find other generals who shared his spark. Various statesmen (Bismarck comes to mind) proved irreplaceable. Steve Jobs never managed to find a worthy successor, despite significant effort.

Also, I've tried a few different ways of getting researchers to "stack" (i.e., of getting multiple people capable of leading research, all leading research in the same direction, in a way that significantly shortens the amount of serial time required), and have failed at this. (Which isn't to say that you can't succeed where I failed!)

I don't think we're doing something particularly wrong here. Rather, I'd say: the space to explore is extremely broad; humans are sparsely distributed in the space of intuitions they're able to draw upon; people who have an intuition they can follow towards plausible alignment-solutions are themselves pretty rare; most humans don't have the ability to make research progress without an intuition to guide them. Each time we find a new person with an intuition to guide them towards alignment solutions, it's likely to guide them in a whole new direction, because the space is so large. Hopefully at least one is onto something.

But, while this might not be an indication of an error, it sure is a reason to worry. Because if each new alignment researcher pursues some new pathway, and can be sped up a little but not a ton by research-partners and operational support, then no matter how many new alignment visionaries we find, we aren't much decreasing the amount of time it takes to find a solution.

Like, as a crappy toy model, if every alignment-visionary's vision would ultimately succeed, but only after 30 years of study along their particular path, then no amount of new visionaries added will decrease the amount of time required from “30y since the first visionary started out”.

And of course, in real life, different paths have different lengths, and adding new people decreases the amount of time required at least a little in expectation. But not necessarily very much, and not linearly.
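The toy model above can be sketched in a few lines of Python. This is only an illustration under assumptions that are not in the text: every visionary starts at the same time, works independently, and the solution arrives when the shortest path finishes. The function names and the uniform 20-to-40-year spread of path lengths are made up for the example.

```python
import random
from statistics import mean


def time_to_solution(path_lengths):
    """Serial years until the first path pays off.

    Assumes (hypothetically) that all visionaries start together and
    work independently, so only the shortest path matters.
    """
    return min(path_lengths)


def expected_time(n_visionaries, draw_length, trials=2000, seed=0):
    """Monte Carlo estimate of the expected serial time with n visionaries."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    return mean(
        time_to_solution([draw_length(rng) for _ in range(n_visionaries)])
        for _ in range(trials)
    )


def fixed_length(rng):
    """Case 1: every path takes exactly 30 years."""
    return 30.0


def varied_length(rng):
    """Case 2: path lengths differ (arbitrary assumption: uniform on 20..40 years)."""
    return rng.uniform(20.0, 40.0)


if __name__ == "__main__":
    for n in (1, 4, 16, 64):
        print(
            n,
            expected_time(n, fixed_length),
            round(expected_time(n, varied_length), 1),
        )
```

In the fixed case the answer stays at 30 years however many visionaries are added. In the varied case the expected minimum of n independent uniform draws on [a, b] is a + (b - a)/(n + 1), so four visionaries give roughly 24 years rather than the 7.5 that linear stacking would predict, and the returns shrink quickly after that.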

(And all this is to say nothing of how the workable paths might not even be visible to the first generation of visionaries; the intuitions that lead one to a solution might be the sort of thing that you can only see if you've been raised with the memes generated by the partial-successes and failures of failed research pathways, as seems-to-me to have been the case with mathematics and physics regularly in the past. But I digress.)

I agree with the explicitly presented evidence and reasoning steps, but one implied prior/assumption seems to me so obscenely wrong (compared to my understanding of social reality) that I have to explain myself before making a recommendation. The following statement:

> quadrupling the size of your team of highly skilled alignment researchers lets you finish the job in ~1/4 of the time

implies that an approximately inverse-linear relationship between the number of people and the time required could exist (in multidisciplinary software project management in particular and/or in general for most collective human endeavors). The model of Nate that I have in my mind believes that reasonable readers ought to believe that:

I am making a claim about the social norm that it's socially OK to assume other people can believe in linear scalability, not a claim about whether other people actually believe that 4x the people will finish in 1/4 of the time by default.

Individually, we are well calibrated to throw a TypeError at the cliche counterexamples to the linear scalability assumption like "a pregnant woman delivers one baby in 9 months, how many ...".

And professional managers tend to have an accurate model of where this assumption applies. Individually, they all know how to create the kind of work environment that may open up the possibility of time improvements. Blindly quadrupling the size of your team can bring the project to a halt or even reverse the original objective; more usually it will increase the expected time, because you need to lower other risks, and you have to work very hard for even a hope of a 50% decrease in time. They are paid to believe in the correct model of scalability, even when they are incentivized to profess more optimistic beliefs in public.

Let's say 1000 people can build a nuclear power plant within some time unit. Literally no one will believe that one person will build it a thousand times slower or that a million people will build it a thousand times faster.

I think it should not be socially acceptable to say things that imply that other people can assume that others might believe in linear scalability for unprecedented, large, complex software projects. No one should believe that one person alone can build Aligned AGI, or that a million people can build it a thousand times faster than 1,000 people. Einstein and Newton were not working "together", even if one needed the other to make any progress whatsoever - the nonlinearity of "solving gravity" is so qualitatively obvious that no one would even think about it in terms of doubling team size or halving time. That should be the default, a TypeError law of scalability.

If there is no linear scalability by default, then Alignment is no exception to the usual scalability laws. Building unaligned AGI, designing faster GPUs, physically constructing server farms, or building web apps ... none of those are linearly scalable; it's always hard management work to make a collective human task faster when adding people to a project.

Why is this a crux for me? I believe the incorrect assumption leads to rationally-wrong emotions in situations like these:

Let me talk to you (the centroid of my models of various AI researchers, but not any one person in particular). You are a good AI researcher and, statistically speaking, you should not expect yourself to also be an equally good project manager. You understand maths and, statistically speaking, you should not expect yourself to also be equally good at the social skills needed to coordinate groups of people. Failing at a lot of initial attempts to coordinate teams should be the default expectation - not one or two attempts and then you will nail it. You should expect to fail more often than the people who are getting the best money in the world for aligning groups of people towards a common goal. If those people who made themselves successful in management initially failed 10 times before they became billionaires, you should expect to fail more times than that.

Recommendation

You can either dilute your time by learning both technical and social / management skills or you can find other experts to help you and delegate the coordination task. You cannot solve Alignment alone, you cannot solve Alignment without learning, and you cannot learn more than one skill at a time.

The surviving worlds look like 1000 independent alignment ideas, each pursued by 100 different small teams. Some of the teams figure out how to share knowledge with some of the other teams and connect one or two ideas, and they merge iff they figure out explicit steps for how merging would shorten the time required.

We don't need to "stack", we need to increase the odds of a positive black swan.

Yudkowsky, Christiano, and the person who has the skills to start figuring out the missing piece to unify their ideas are at least 10,000 different people.