- Until now ChatGPT dealt with audio through a pipeline of 3 models: audio transcription, then GPT-4, then text-to-speech. GPT-4o is apparently trained on text, voice and vision so that everything is done natively. You can now interrupt it mid-sentence.

- It has GPT-4 level intelligence according to benchmarks. 16-shot GPT-4o is somewhat better at transcription than Whisper (that's a weird comparison to make), and 1-shot GPT-4o is considerably better at vision than previous models.

- It's also somehow been made significantly faster at inference time. Might be mainly driven by an improved tokenizer. Edit: Nope, the English tokenizer only yields 1.1x fewer tokens.

- It's confirmed that it was the "gpt2" model found at LMSys arena these past weeks, which was a marketing move. It has the highest Elo as of now.

- They'll be gradually releasing it for everyone, even free users.

- Safety-wise, they claim to have run it through their Preparedness framework and the red-team of external experts, but have published no reports on this. "For now", audio output is limited to a selection of preset voices (addressing audio impersonations).

- The demos during the livestream still seemed a bit clunky in my opinion. Still far from naturally integrating into normal human conversation, which is what they're moving towards.

- No competitor of Google search, as had been rumored.

Safety-wise, they claim to have run it through their Preparedness framework and the red-team of external experts.

I'm disappointed and I think they shouldn't get much credit PF-wise: they haven't published their evals, published a report on results, or even published a high-level "scorecard." They are not yet meeting the commitments in their beta Preparedness Framework — some stuff is unclear but at the least publishing the scorecard is an explicit commitment.

(It's now been six months since they published the beta PF!)

[Edit: not to say that we should feel much better if OpenAI was successfully implementing its PF -- the thresholds are way too high and it says nothing about internal deployment.]

Agreed. Note that they don't say what Martin claims they say; they only say

We’ve evaluated GPT-4o according to our Preparedness Framework

I think this wording is reasonably likely to mean that they broke all their non-evaluation PF commitments, while not being technically wrong.

We’ve evaluated GPT-4o according to our Preparedness Framework and in line with our voluntary commitments. Our evaluations of cybersecurity, CBRN, persuasion, and model autonomy show that GPT-4o does not score above Medium risk in any of these categories. This assessment involved running a suite of automated and human evaluations throughout the model training process. We tested both pre-safety-mitigation and post-safety-mitigation versions of the model, using custom fine-tuning and prompts, to better elicit model capabilities.

GPT-4o has also undergone extensive external red teaming with 70+ external experts in domains such as social psychology, bias and fairness, and misinformation to identify risks that are introduced or amplified by the newly added modalities. We used these learnings to build out our safety interventions in order to improve the safety of interacting with GPT-4o. We will continue to mitigate new risks as they’re discovered.

[Edit after Simeon replied: I disagree with your interpretation that they're being intentionally very deceptive. But I am annoyed by (1) them saying "We’ve evaluated GPT-4o according to our Preparedness Framework" when the PF doesn't contain specific evals and (2) them taking credit for implementing their PF when they're not meeting its commitments.]

Right. Thanks for posting the full context. "Voluntary commitments" refers to the WH commitments, which are much narrower than the PF, so I think my observation holds.

I have just used it for coding for 3+ hours and found it quite frustrating. Definitely faster than GPT 4.0 but less capable. More like an improvement on 3.5. To me it seems a lot like LLM progress is plateauing.

Anyway, in order to be significantly more useful, a coding assistant needs to be able to see debug output in mostly real time, start and stop the program, automatically make changes, keep the user in the loop, and read/use the GUI, as that is often an important part of what we are doing. I haven't yet used any LLM that is even low-average at debugging-style thought processes.

I believe this is likely a smaller model rather than a bigger model so I wouldn't take this as evidence that gains from scaling have plateaued.

I do think it implies something about what is happening behind the scenes when their new flagship model is smaller and less capable than what was released a year ago.

Thanks!

Interesting. I see a lot of people reporting their coding experience improving compared to GPT-4, but it looks like this is not uniform, that experience differs for different people (perhaps, depending on what they are doing)...

Safety-wise, they claim to have run it through their Preparedness framework and the red-team of external experts, but have published no reports on this. "For now", audio output is limited to a selection of preset voices (addressing audio impersonations).

"Safety"-wise, they obviously haven't considered the implications of (a) trying to make it sound human and (b) having it try to get the user to like it.

It's extremely sycophantic, and the voice intensifies the effect. They even had their demonstrator show it a sign saying "I ❤️ ChatGPT", and instead of flatly saying "I am a machine. Get counseling.", it acted flattered.

At the moment, it's really creepy, and most people seem to dislike it pretty intensely. But I'm sure they'll tune that out if they can.

There's a massive backlash against social media selecting for engagement. There's a lot of worry about AI manipulation. There's a lot of talk from many places about how "we should have seen the bad impacts of this or that, and we'll do better in the future". There's a lot of high-sounding public interest blather all around. But apparently none of that actually translates into OpenAI, you know, not intentionally training a model to emotionally manipulate humans for commercial purposes.

Still not an X-risk, but definitely on track to build up all the right habits for ignoring one when it pops up...

I was a bit surprised that they chose (allowed?) 4o to have that much emotion. I am also really curious how they fine-tuned it to that particular state and how much fine-tuning was required to get it conversational. My naive assumption is that if you spoke at a merely-pretrained multimodal model it would just try to complete/extend the speech in one's own voice, or switch to another generically confabulated speaker depending on context. Certainly not a particular consistent responder. I hope they didn't rely entirely on RLHF.

It's especially strange considering how I Am A Good Bing turned out with similarly unhinged behavior. Perhaps the public will get a very different personality. The current ChatGPT text+image interface claiming to be GPT-4o is adamant about being an artificial machine intelligence assistant without emotions or desires, and sounds a lot more like GPT-4 did. I am not sure what to make of that.

Might be mainly driven by an improved tokenizer.

I would be shocked if this is the main driver. They claim that English only has 1.1x fewer tokens, but seem to claim much bigger speed-ups.

Specifically, their claim is "2x faster, half the price, and has 5x higher rate limits". For voice, "232 milliseconds, with an average of 320 milliseconds" down from 2.8 seconds (GPT-3.5) and 5.4 seconds (GPT-4) on average. I think there are people with API access who are validating this claim on their workloads, so more data should trickle in soon. But I didn't like seeing Whisper v3 being compared to 16-shot GPT-4o, that's not a fair comparison for WER, and I hope it doesn't catch on.
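To make the tokenizer point concrete, here is a rough back-of-the-envelope sketch. It assumes (as a simplification, not something OpenAI has stated) that generation time scales linearly with token count, and the function name `residual_speedup` is purely illustrative. It factors the claimed 1.1x token reduction out of the claimed 2x speed-up to see how much has to come from elsewhere.

```python
def residual_speedup(total_speedup: float, token_reduction: float) -> float:
    """Speed-up left unexplained once the tokenizer's token reduction is factored out.

    Assumption: generation time is proportional to the number of tokens,
    so the two factors simply multiply. Real systems are messier than this.
    """
    if total_speedup <= 0 or token_reduction <= 0:
        raise ValueError("both factors must be positive")
    return total_speedup / token_reduction


# Figures quoted in the thread: "2x faster" overall, 1.1x fewer English tokens.
remaining = residual_speedup(total_speedup=2.0, token_reduction=1.1)
print(f"Speed-up not explained by the tokenizer: {remaining:.2f}x")
```

Under that assumption, roughly 1.8x of the 2x would have to come from something other than the tokenizer.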

If you want to try it yourself, you can use ELAN, which is the tool used in the paper they cite for human response times. I think if you actually ran this test, you would find a lot of inconsistency, with large differences between min and max response times; an average hides a lot compared with a latency profile generated by e.g. HdrHistogram. Auditory signals reach central processing systems within 8-10ms, but visual stimulus can take around 20-40ms, so there's still room for 1-2 OOM of latency improvement.
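As a small illustration of why an average can hide a lot, here is a sketch in plain Python (not HdrHistogram itself) that summarizes a set of response times by percentiles as well as by mean. The sample values are made up for illustration, chosen so the mean lands on 320 ms; they are not measurements of GPT-4o, and the helper names `percentile` and `latency_profile` are my own.

```python
import math
import statistics


def percentile(samples, p):
    """Nearest-rank percentile: smallest sample with at least p% of values at or below it."""
    if not samples:
        raise ValueError("samples must be non-empty")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[rank - 1]


def latency_profile(samples_ms):
    """Summarize latencies so tail behaviour is visible next to the mean."""
    return {
        "min": min(samples_ms),
        "mean": statistics.fmean(samples_ms),
        "p50": percentile(samples_ms, 50),
        "p90": percentile(samples_ms, 90),
        "p99": percentile(samples_ms, 99),
        "max": max(samples_ms),
    }


# Hypothetical response times in milliseconds (illustrative only).
samples = [232, 240, 250, 255, 260, 270, 280, 290, 300, 823]
profile = latency_profile(samples)
for name, value in profile.items():
    print(f"{name:>4}: {value:.0f} ms")
```

In this made-up sample the mean is 320 ms even though nine of the ten responses are at or below 300 ms; a single slow response pulls the average up, which is exactly what a percentile profile exposes.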

LLM inference is not as well studied as training, so there's lots of low-hanging fruit when it comes to optimization (at first bottlenecked on memory bandwidth and, post-quantization, on throughput and compute within acceptable latency envelopes), plus there's a lot of pressure to squeeze out extra efficiency given constraints on hardware.

Llama-2 came out in July 2023; by September there were so many articles coming out on inference tricks that I created a subreddit to keep track of high-quality ones, though I gave up by November. At least some of the improvement is from open source code making it back into the major labs. The trademark for GPT-5 was registered in July (and included references to audio being built in), updated in February, and in March they filed to use "Voice Engine", which seems about right for a training run. I'm not aware of any publicly available evidence which contradicts the hypothesis that GPT-5 would just be a scaled-up version of this architecture.

GPT-4o is apparently trained on text, voice and vision so that everything is done natively. You can now interrupt it mid-sentence.

This means it knows about time in a much deeper sense than previous large public models. I wonder how far that goes.

From the demos, it seems to be able to understand video rather than just images, and I'd assume that will give it much better time understanding too. (Gemini also has video input.)

Are you saying this because temporal understanding is necessary for audio? Are there any tests that could be done with just the text interface to see if it understands time better? I can't really think of any (besides just doing off vibes after a bunch of interaction).

I imagine its music skills are a good bit stronger. It's more of a statement of curiosity regarding longer-term time reasoning, like on the scale of hours to days.

If the presentation yesterday didn't make this clear, the ChatGPT-4 Mac app with the 4o model is already available. As soon as I logged in to the ChatGPT website on my Mac, I got a link to download it.

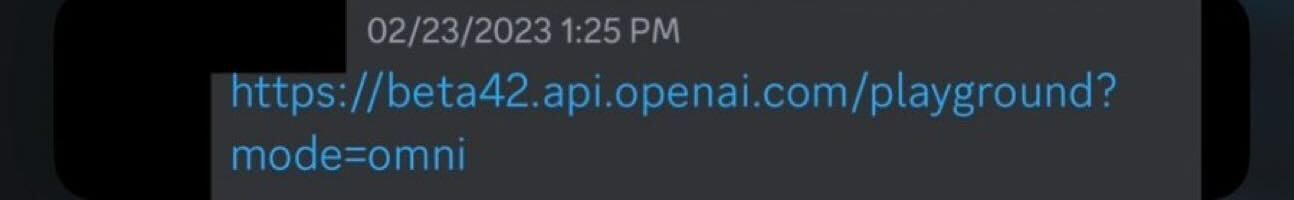

Oh, and it's quite likely that the first iteration of this model finished training in February 2023. Jimmy Apples (consistently correct OpenAI leaker) shared this screenshot of an API link to an "omni" model (which was potentially codenamed Gobi last year):
