I found this paper which is interesting. But at the start he tells an interesting anecdote about existential risk:

...The first occurred at Los Alamos during WWII when we were designing atomic bombs. Shortly before the first field test (you realize that no small scale experiment can be done -- either you have the critical mass or you do not), a man asked me to check some arithmetic he had done, and I agreed, thinking to fob it off on some subordinate. When I asked what it was, he said, "it is the probability that the test bomb will ignite the whole atmosphere," I decided I would check it myself! The next day when he came for the answers I remarked to him, "The arithmetic was apparently correct but I do not know about the formulas for the capture cross sections for oxygen and nitrogen -- after all, there could be no experiments at the needed energy levels." He replied, like a physicist talking to a mathematician, that he wanted me to check the arithmetic not the physics, and left. I said to myself, "what have you done, Hamming, you are involved in risking all life that is known in the Universe, and you do not know much of an essential part?" I was pacing

His reply was, "Never mind, Hamming, no one will ever blame you."

This is a good example of optimizing for the wrong goal.

LW might find that interesting:

I'm becoming a Christian, not just one who occasionally went to church as a kid, but a real one that believes in Christ, loving God with all my heart, etc.

Most ex-atheists who become deists turn to Buddhism, so I thought I'd be clear why they are all wrong (Robert Wright!). I'd like to thank Mencius Moldbug, Dierdre McCloskey, Mike Behe, Tim Keller (four names probably never listed in sequence ever), and hundreds more...Below are snippets (top and bottom) from my Christian apology: I came to Christ via rational inference, not a personal crisis.

If evolution is untrue, it changes everything.

Just by reading this phrase, I can conclude that everything else is probably useless.

The experiments in 1953 created some of the amino acids found in all life forms, but this is a far cry from creating proteins.

But, apparently, it's not a far cry from a supernatural person to create all universe(s).

My two cents, extrapolating from this and other converts (e.g. UnequallyYoked, which I still follow): there's a certain tendency of the brain to want to believe in the supernatural. In those people who have this urge at a stronger level, but who come in contact with rationality, a sort of cognitive conflict is formed, like an addict trying to fight the urge to use drugs.

As soon as a hole in rationality is perceived, this gives the brain the excuse to switch to a preferred mode of thinking, wether the hole is real or not, and wether there exists more probable alternatives.

Admittedly, this is just a "neuronification" of a psychological phoenomenon, and it does lower believers' status by comparing them to drug addicts...

I'm a big Eliezer fan, and like reading this blog on occasion. I consider myself rational, Dunning-Kruger effect notwithstanding (ie, I'm too dumb or biased to know I'm not dumb or biased, trapped!). In any case, I think the above is pretty good, but I would stress the ID portion of my paper, which is in the PDF not the post, is that the evolutionary mechanism as observed empirically scales O(2^n), not O(n), generally, where n is the number of mutations needed to create a new function. Someday we may see evolution that scales, at which point I will change my mind, but thus far, I think Behe is correct in his 'edge of evolution' argument (eg, certain things, like anti-freeze in fish, are evolutionarily possible, others, like creating a flagellum, are not). As per the Christianity part, the emphasis on the will over reason gives a sustainable, evolutionarily stable 'why' to habits of character and thought that are salubrious, stoicism with real inspiration. Christianity also is the foundation for individualism and bourgeois morality that has generated flourishing societies, so, it works personally and for society.

My younger self disagreed with my current self, so I can empathize and respect those why find my reasoning unconvincing, but I don't think it's useful in figuring things out to simply attribute my belief to bias or insecurity.

You don't get large corporations without some kind of state, so why does decentralized evolution allow for people-states?

In the absence of any state holding the monopoly of power a large corporation automatically grows into a defacto state as the British East India company did in India. Big mafia organisations spring up even when the state doesn't want them to exist. The same is true for various terrorist groups.

From here I could argue that the economics establishment seems to fail at their job when they fail to understand how coorperation can infact arise but I think there good work on cooperation such as Sveriges Riksbank Prize winner Elinor Ostrom.

If I understand her right than the important thing for solving issues of tragedy of the commons isn't centralized decision making but good local decision making by people on-the-ground.

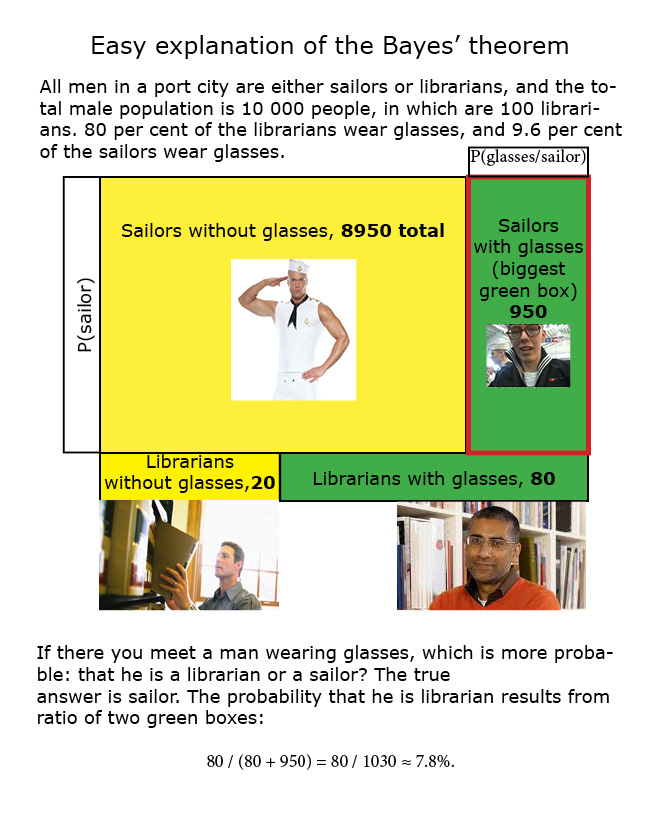

I created easy explanation of Bayes theorem as a small map: http://immortality-roadmap.com/bayesl.jpg (can't insert jpg into a comment)

It is based on following short story: All men in a port city are either sailors or librarians, and the total male population is 10 000 people, in which are 100 librarians. 80 per cent of the librarians wear glasses, and 9.6 per cent of the sailors wear glasses. If there you meet a man wearing glasses, which is more probable: that he is a librarian or a sailor? The true answer is sailor. The probability that he is librarian results from ratio of two green boxes:

80 / (80 + 950) = 80 / 1030 ≈ 7.8%.

The digits are the same as in original EY explanation, but I exclude the story about mammography, as it may be not easy to understand two complex scientific topics simultaneously. http://www.yudkowsky.net/rational/bayes

When I was thinking about "quantum immortality", I realized that I am bad at guessing the most likely very unlikely outcomes.

I don't mean the kind of "quantum immortality" when you throw a tantrum and kill yourself whenever you don't win a lottery, but rather the one that happens spontaneously, even if you are not aware of the concept. With sufficiently small probability (quantum amplitude) "miracles" happen, and in a thousand years all your surviving copies will be alive only thanks to some "miracle".

But of course, ...

I have large PR problems when talking about rationality with others unfamiliar with it, with the Straw Vulcan being the most common trap conversation will fall into.

Are there any guides out there in the vein of the EA Pitch Wiki that could help someone avoid these traps and portray rationality in a more positive light? If not, would it be worth creating one?

So far I've found, how rationality can make your life more awesome, rationality for curiosity sake, rationality as winning, PR problems and the contrary rationality isn't all that great.

There's actually a yearly cryonics festival held in Nederland, a little town near Boulder, Colorado. However, according to a relative who's been to the festival, the whole event is really just an excuse to drink beer, listen to music, and participate in silly cryonics-themed events like ice turkey bowling.

So, it seems like the type of people who attend this festival aren't quite the type who would be likely to sign up for cryonics after all, though I might be able to further evaluate this next year if I'm able to attend the festival while visiting a relati...

Anyone know of the best vitamins/minerals/supplements to take for promoting bone growth and healing?

It seems calcium and vitamin D are pretty commonly recommended. And rational dissent on this? Any others that should be taken?

I don't know how good this holds up, but: I noticed that in conversations I tend to barge out questions like an inquisitor, while some of my friends are more like story tellers. Some, I can't really talk with, and when we get together, it's more like 'just chilling out'.

I was wondering a, if people tend to fall into some category more than others b, if there are more such categories c, if overemphasis on one behavior is a significant factor of mine, (and presumably others') social skill deficit

If the last is true, I would like to diversify this portfol...

I figure this is a long shot, but: I'm looking for a therapist and possibly a relationship counselor. I'd like one who's in some way adjacent to the LW community. The idea is to have less inferential distance to cross when describing the shit that goes through my head. Finding someone with whom there isn't a large IQ gap is also a concern.

Can anyone give me a lead?

(also, the anonymous Username account appears to have had its password changed at some point. Hence this one.)

What are examples of complex systems people tend to ignore, that they still interact with every day? I am thinking of stuff like your body, the local infrastructure, your computer, your car - stuff, which you just assume works, and one could probably gain from trying to understand.

What I am going for here is a full list of things people actually interact with, hoping to have some sort of exhaustive guide for 'Elohim's Game of Life' and its mechanisms, like one would have on a game's wikia.

What are, if any, the contemporary names for each of Francis Bacon's idola and/or Roger Bacon's offendicula?

Know about geospatial analysis?

I'm building a story involving Jupiter's moon, Io. There's a geological map of it on the bottom half of this image. What I'd like to figure out is which sites have the widest variety of terrain types within the shortest distances? Or, put another way, which sites would be most worth dropping an automated factory down on, as they'd require the minimal amount of road-building to get to a useful variety of resources? Or, put a third way, what's the fewest number of sites that would be required to have a complete set of the terra...

You can put aaronsw into the LessWrong karma tool to see Aaron Swartz's post history, and read his most highly rated comments. I bet some of them would be good to spread more widely.

idea log

If you want a corporate job and don't know what kind, try go to your cbd at around 6pm and watch who looks the happiest. Ask them what they do and maybe even an interview or to take your cv if you’re a job searcher.

a commentor on 7chan concisely making a very epistemically and instrumentally complex claim: >'you only look for advice that confirms what you were going to do anyway'

How can more valuable social contributions capture that value in the form of economic rent, income or other forms of non-psychologica

[cross-posted from LW FB page. Seeking mentor or study pal for SICP]

Hello everyone,

I have decided to start learning programming, and I am beginning my journey with SICP-- I'm just a few weeks in.

I am looking for a study partner or someone experienced to chat for around 1 hour a week on Skype about the topics covered in the book to verify what I know and hopefully speed up the learning process.

Would anyone be willing to take on the above?

Thank you!

From http://lesswrong.com/lw/js/the_bottom_line/

Your effectiveness as a rationalist is determined by whichever algorithm actually writes the bottom line of your thoughts.

I remember a similar quotation regarding actions as opposed to thoughts. Does anyone remember how it went?

What would a man/female focused solely on their biological fitness do in the modern world? In animals, males would procreate with as many females, as they could, with the best scenario where they have different males raise their offsprings. Today, we have morning-after pills, and abortions, it is no longer enough for Pan to pinky-swear he would stay around. How does he alter his strategy? One I can quickly think of is sperm donation, but would that be his optimal strategy? I am certain that the hypothetical sultan from the old days could possibly produce ...

Are there any egoist arguments for (EA) aid in Africa? Does investment in Africa's stability and economic performance offer any instrumental benefit to a US citizen that does not care about the welfare of Africans terminally?

Anti-polyamory propoganda which clearly had some thought put into constructing a persuasive argument while doing lots of subtle or not so subtle manipulations. Always interesting to observe which emotional/psychological threads this kind of thing tries to pull on.

I have a question: It seems to me that Friendliness is a function of more than just an AI. To determine whether an AI is Friendly, it would seem necessary to answer the question: Friendly to whom? If that question is unanswered, then "Friendly" seems like an unsaturated function like "2+". In the LW context, the answer to that question is probably something along the lines of "humanity". However, wouldn't a mathematical definition of "humanity" be too complex to let us prove that some particular AI is Friendly to humanity? Even if the answer to "To whom?" is "Eliezer Yudkowsky", even that seems like it would be a rather complicated proof to say the least.

If it's worth saying, but not worth its own post (even in Discussion), then it goes here.

Notes for future OT posters:

1. Please add the 'open_thread' tag.

2. Check if there is an active Open Thread before posting a new one. (Immediately before; refresh the list-of-threads page before posting.)

3. Open Threads should be posted in Discussion, and not Main.

4. Open Threads should start on Monday, and end on Sunday.