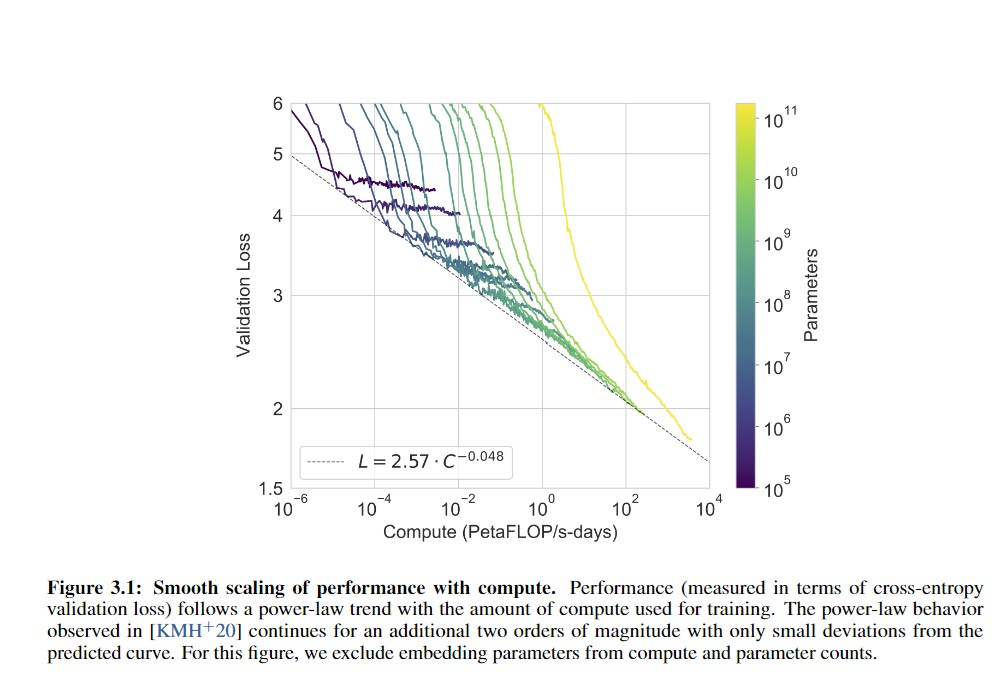

I look at graphs like these (from the GPT-3 paper) and wonder where human-level performance is.

Gwern seems to have the answer here:

> GPT-2-1.5b had a cross-entropy validation loss of ~3.3 (based on the perplexity of ~10 in Figure 4, and log2(10) = 3.32). GPT-3 halved that loss to ~1.73 judging from Brown et al 2020 and using the scaling formula (2.57 · (3.64 · 10^3)^−0.048). For a hypothetical GPT-4, if the scaling curve continues for another 3 orders or so of compute (100–1000×) before crossing over and hitting harder diminishing returns, the cross-entropy loss will drop to ~1.24 (2.57 · (3.64 · (10^3 · 10^3))^−0.048).
>
> If GPT-3 gained so much meta-learning and world knowledge by dropping its absolute loss ~50% when starting from GPT-2’s near-human level, what capabilities would another ~30% improvement over GPT-3 gain? What would a drop to ≤1, perhaps using wider context windows or recurrency, gain?
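
The arithmetic in the quoted passage can be checked with a short sketch. It assumes the compute scaling fit reported in Brown et al 2020, L(C) = 2.57 · C^(−0.048) with C in petaflop/s-days, and a GPT-3 training compute of about 3.64 · 10^3 petaflop/s-days; the function and constant names are made up for illustration.

```python
import math

# Assumed constants (not stated explicitly in the text above):
# compute scaling fit L(C) = 2.57 * C^(-0.048), C in petaflop/s-days.
SCALE_COEF = 2.57
SCALE_EXP = -0.048
GPT3_COMPUTE_PFDAYS = 3.64e3  # assumed GPT-3 training compute


def predicted_loss(compute_pfdays: float) -> float:
    """Loss predicted by the assumed power-law fit at a given compute."""
    return SCALE_COEF * compute_pfdays ** SCALE_EXP


def perplexity_to_log2_loss(perplexity: float) -> float:
    """Convert a perplexity to a loss using log base 2, as the quote does."""
    return math.log2(perplexity)


gpt2_loss = perplexity_to_log2_loss(10.0)
gpt3_loss = predicted_loss(GPT3_COMPUTE_PFDAYS)
# "Another 3 orders of compute" means multiplying GPT-3's compute by 10^3.
gpt4_loss = predicted_loss(GPT3_COMPUTE_PFDAYS * 1e3)
relative_drop = 1.0 - gpt4_loss / gpt3_loss

print(f"GPT-2 (from perplexity ~10): {gpt2_loss:.2f}")
print(f"GPT-3 (scaling fit):         {gpt3_loss:.2f}")
print(f"Hypothetical GPT-4:          {gpt4_loss:.2f}")
print(f"Relative drop GPT-3 -> GPT-4: {relative_drop:.0%}")
```

Under these assumptions the three losses come out at roughly 3.32, 1.73, and 1.24, and the GPT-3 to hypothetical GPT-4 step is a drop of a little under 30%, matching the figures in the quote.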

So, am I right in thinking that if someone took random internet text and fed it to me word by word and asked me to predict the next word, I'd do about as well as GPT-2 and significantly worse than GPT-3? If so, this actually lengthens my timelines a bit.
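
As a rough sketch of how such a word-by-word prediction test could be scored: if the predictor (human or model) states a probability for each word that actually came next, the average negative log2 of those probabilities is the cross-entropy, and 2 raised to that value is the perplexity. The function names and the toy probabilities below are hypothetical, and per-word scores like these are not directly comparable to a model's per-token loss.

```python
import math
from typing import Sequence


def cross_entropy_bits(probs_of_true_words: Sequence[float]) -> float:
    """Average negative log2 probability assigned to the words that occurred."""
    if not probs_of_true_words:
        raise ValueError("need at least one prediction")
    if any(p <= 0.0 or p > 1.0 for p in probs_of_true_words):
        raise ValueError("probabilities must be in (0, 1]")
    total = sum(-math.log2(p) for p in probs_of_true_words)
    return total / len(probs_of_true_words)


def perplexity(probs_of_true_words: Sequence[float]) -> float:
    """Perplexity is 2 raised to the cross-entropy measured in bits."""
    return 2.0 ** cross_entropy_bits(probs_of_true_words)


# Toy example: probabilities a predictor gave to four actual next words.
toy_probs = [0.5, 0.25, 0.125, 0.125]
print(cross_entropy_bits(toy_probs))  # (1 + 2 + 3 + 3) / 4 = 2.25 bits
print(perplexity(toy_probs))
```

A predictor that always assigns probability 0.1 to the true next word has a perplexity of 10, which is the GPT-2 figure cited above.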

(Thanks to Alexander Lyzhov for answering this question in conversation)

To simplify Daniel's point: the pretraining paradigm claims that language draws heavily on important domains like logic, causal reasoning, world knowledge, etc; to reach human absolute performance (as measured in prediction: perplexity/cross-entropy/bpc), a language model must learn all of those domains roughly as well as humans do; GPT-3 obviously has not learned those important domains to a human level; therefore, if GPT-3 had matched human absolute performance without having learned those domains, the pretraining paradigm would be false, because we would have created a language model which succeeds at one but not the other. There might still be a way to do pretraining right, but one would turn out not to follow necessarily from the other, so you couldn't just optimize for absolute performance and expect the rest of it to fall into place.

(It would have turned out that language models can model easier or inessential parts of human corpuses enough to make up for skipping the important domains; maybe if you memorize enough quotes or tropes or sayings, for example, you can predict really well while still failing completely at commonsense reasoning, and this would hold true no matter how much more data was added to the pile.)

As it happens, GPT-3 has not reached the same absolute performance; the apparent parity comes from comparing apples & oranges. I was only talking about WebText in my comment there, but Omohundro is talking about Penn Treebank & 1BW (the One Billion Word benchmark). As far as I can tell, GPT-3 is still substantially short of human performance.