with the best models trained on close to all the high quality data we’ve got.

Is this including images, video and audio? Or just text?

Mostly just public text, I think. But I'm not sure how much more you get out of e.g. video transcripts. Maybe a lot! But it wouldn't surprise me if that was notably worse as a source.

Not video transcripts - video. 1 frame of Video contains much more data than 1 text token, and you can train an AI as a next frame predictor much as you can a next token predictor.

"Data wall" is not about running out of data, at least if we are targeting a level of capabilities rather than cheaper inference with overtrained models. In the sense of literally running out of data, there is no data wall because data can be repeated in training, and with Chinchilla scaling an increase in the amount of data (including repetition) results in quadratic increase in compute. This makes even publicly available data sufficient for $100 billion training runs. We are going to run out of compute/funding before we run out of data (absent autonomous long-horizon agents, but then resulting faster algorithmic progress makes straightforward scaling of LLMs less relevant).

The reason repeated data is rarely heard about is that there is still enough unique data at current scale. And until recently the prevailing wisdom was apparently that for LLMs repeating data is really bad, possibly until the Galactica paper, see Figure 6. But where data is more scarce, like code data in StarCoder 2, this technique is already in use (they train for up to 5 epochs). Repeating about 4 times is no worse than having 4 times more unique data, and repeating about 16 times is still highly useful (see Figure 5 in the above paper on scaling laws for repeated data).

So concerns about "data wall" are more about doing better on training quality per unit of data, whether natural or synthetic. It's about compute efficiency rather than sample efficiency with respect to external data. In which case it would be useful to apply such techniques even if there was unlimited unique data, as they should allow training smarter systems with less compute.

Modern AI is trained on a huge fraction of the internet

I want to push against this. The internet (or world wide web) is incredibly big. In fact we don't know exactly how big it is, and measuring the size is a research problem!

When they say this what they mean is it is trained on a huge fraction of Common Crawl. Common Crawl is a crawl of world wide web that is free to use. But there are other crawls, and you could crawl world wide web yourself. Everyone uses Common Crawl because it is decent and crawling world wide web is itself a large engineering project.

But Common Crawl is not at all a complete crawl of world wide web. It is very far from being complete. For example, Google has its own proprietary crawl of world wide web (which you can access as Google cache). Probabilistic estimate of size of Google's search index suggests it is 10x size of Common Crawl. And Google's crawl is also not complete. Bing also has its own crawl.

It is known that Anthropic runs its own crawler called ClaudeBot. Web crawl is highly non-trivial engineering project, but it is also kind of well understood. (Although I heard that you continue to encounter new issues as you approach more and more extreme scales.) There are also failed search engines with their own web crawls and you could buy them.

There is also another independent web crawl that is public! Internet Archive has its own crawl, it is just less well known than Common Crawl. Recently someone made a use of Internet Archive crawl and analyzed overlap and difference with Common Crawl, see https://arxiv.org/abs/2403.14009.

If the data wall is a big problem, making a use of Internet Archive crawl is like the first obvious thing to try. But as far as I know, that 2024 paper is first public literature to do so. At least, any analysis of data wall should take into account both Common Crawl and Internet Archive crawl with overlap excluded, but I have never seen anyone doing this.

My overall point is that Common Crawl is not world wide web. It is not complete, and there are other crawls, both public and private. You can also crawl yourself, and we know AI labs do. How much does it help is unclear, but I think 10x is very likely, although probably not 100x.

I'm guessing that the sort of data that's crawled by Google but not common crawl is usually low quality? I imagine that if somebody put any effort into writing something then they'll put effort into making sure it's easily accessible, and the stuff that's harder to get to is usually machine generated?

Of course that's excluding all the data that's private. I imagine that once you add private messages (e.g. WhatsApp, email) + internal documents that ends up being far bigger than the publicly available web.

Unclear. I think there is a correlation, but: one determinant of crawl completeness/quality/etc is choice of seeds. It is known that Internet Archive crawl has better Chinese data than Common Crawl, because they made specific effort to improve seeds for Chinese web. Such missing data originating from choice of seeds bias probably is not particularly low quality than average of what is in Common Crawl.

(That is, to clarify, yes in general effort is spent for quality writing to be easily accessible (hence easily crawlable), but accessibility is relative to choice of seeds, and it in fact is the case that being easily accessible from Chinese web does not necessarily entail being easily accessible from English web.)

Thanks for this - helpful and concrete, and did change my mind somewhat. Of course, if it really is just 10x, in terms of orders of magnitude/hyper fast scaling we are pretty close to the wall.

Here is a report from epoch on the quantity of data and when we will likely run out.

(I think you probably meant to link this in a footnote.)

Unless...

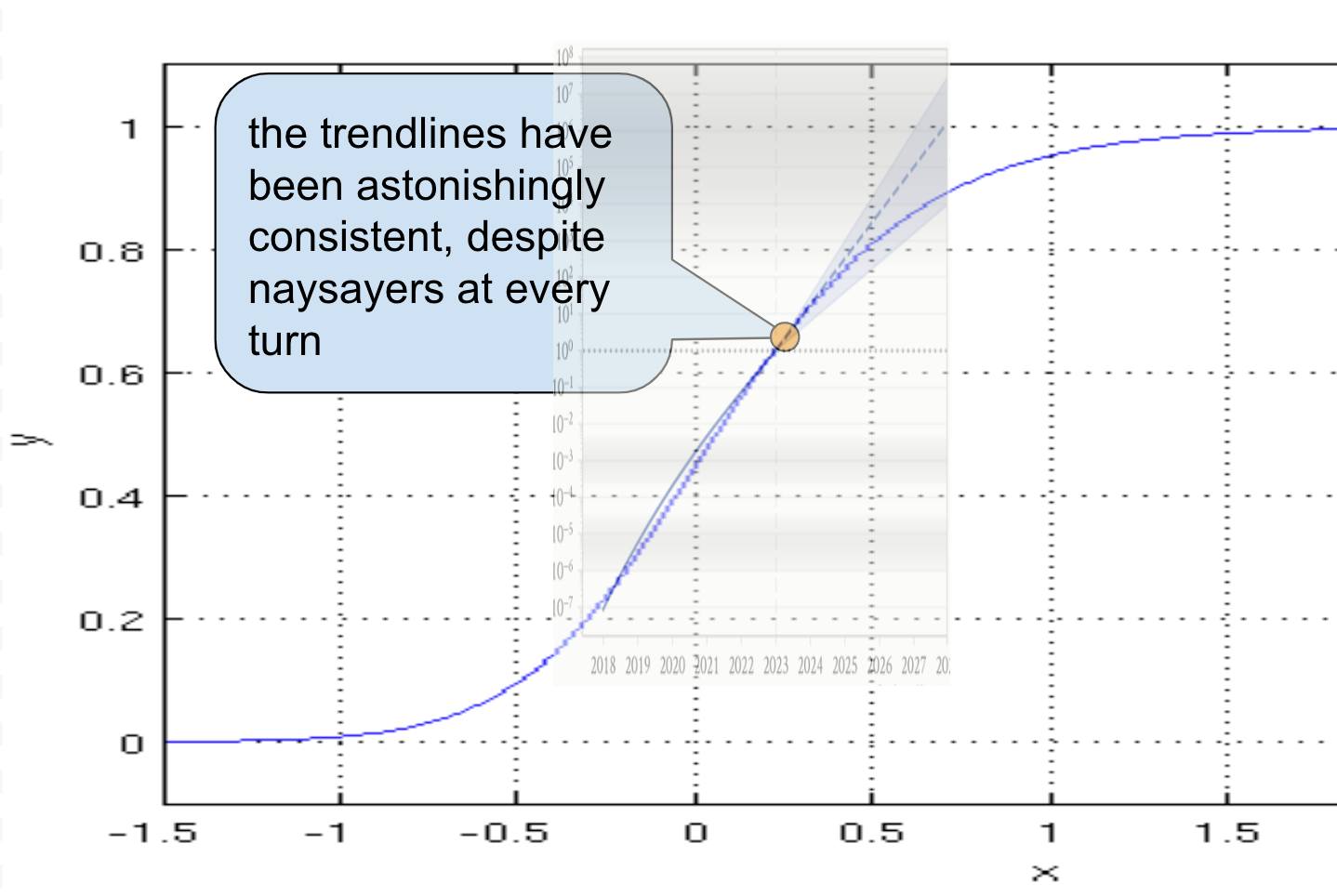

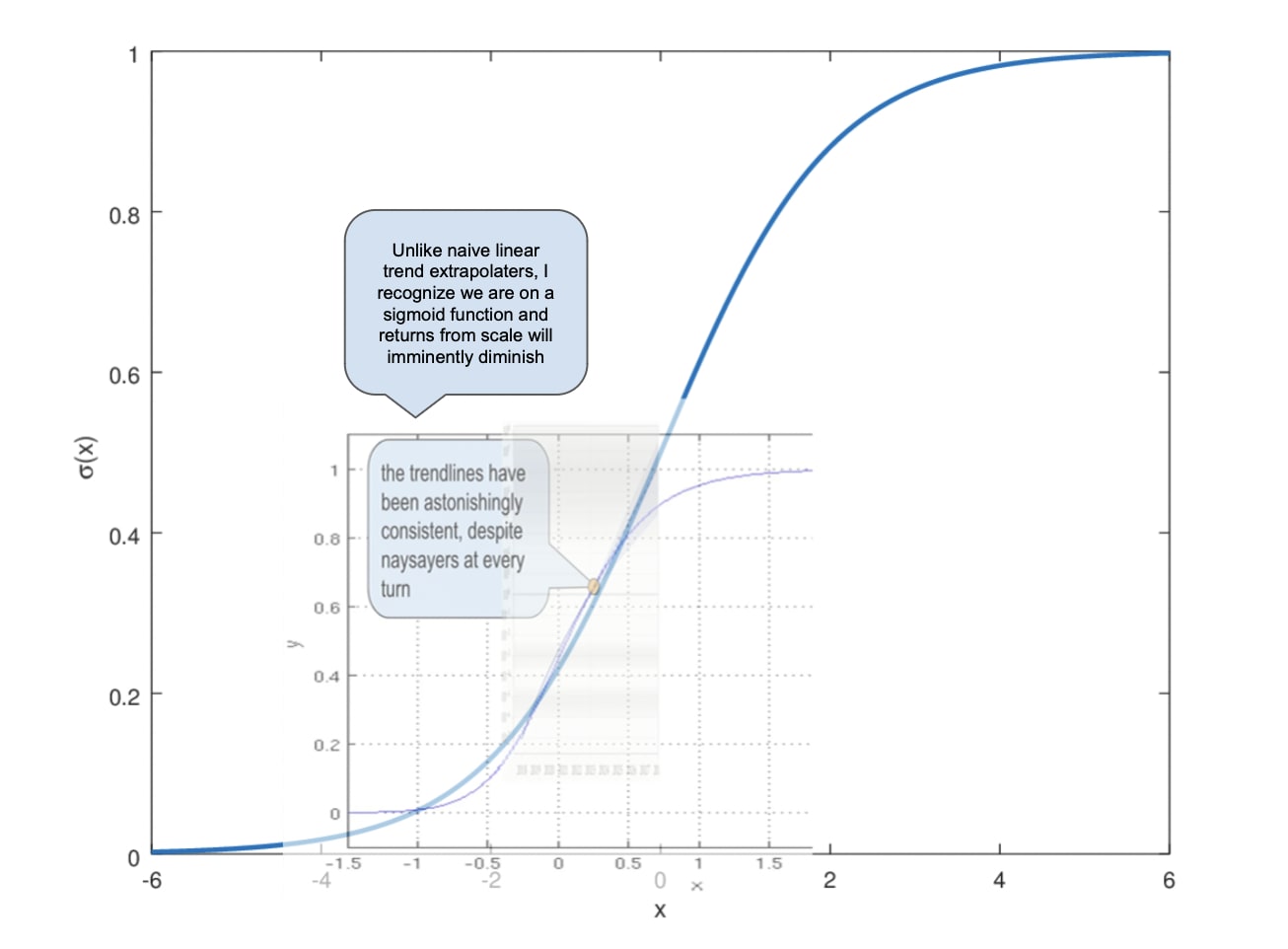

Made with love — I just think a lot of critics of the "straight line go up" thought process are straw manning the argument. The question is really when we start to hit diminishing returns, and I (like the author of this post) don't know if anyone has a good answer to that. I do think the data wall would be a likely cause if it is the case that progress slows in the coming years. But progress continuing in a roughly linear fashion between now and 2027 seems, to me, totally "strikingly plauisble."

I don't think my meme image is a good argument against all arguments that stuff will go up. But I don't think it's "straw manning the argument". The argument given is often pretty much literally just "look, it's been going up", maybe coupled with some mumbling about how "people are working on the data problem something something self-play something something synthetic data".

But progress continuing in a roughly linear fashion between now and 2027 seems, to me, totally "strikingly plauisble."

Do you think my image disagrees with that? Look again.

Sorry, I'm kinda lumping your meme in with a more general line of criticism I've seen that casts doubt on the whole idea of extrapolating an exponential trend, on account of the fact that we should eventually expect diminishing returns. But such extrapolation can still be quite informative, especially in the short term! If you had done it in 2020 to make guesses about where we'd end up in 2024, it would have served you well.

The sense in which it's straw-manning, in my mind, is that even the trend-extrapolaters admit that we can expect diminishing returns eventually. The question is where exactly we are on the curve, and that much is very uncertain. Given this, assuming the past rate of growth will hold constant for three more years isn't such a bad strategy (especially if you can tell plausible stories about how we'll e.g. get enough power to support the next 3 OOMs).

It's true — we might run into issues with data — but we also might solve those issues. And I don't think this is just mumbling and hand waving. My best guess is that there are real, promising efforts going on to solve this within labs, but we shouldn't expect to hear them in convincing detail because they will be fiercely guarded as a point of comparative advantage over other companies hitting the wall, hence why it just feels like mumbling from the outside.

I'm not sure what you want me to see by looking at your image again. Is it about the position of 2027 on the X-axis of the graph from Leopold's essay? If so, yeah, I assumed that placement wasn't rigorous or intentional and the goal of the meme was to suggest that extrapolating the trend in a linear fashion through 2027 was naive.

I suspect that this will result in more spying on people, because that's where more human-generated data are.

I assume that everyone already trains on the public internet, plus Library Genesis, plus Sci-Hub. Where to get more data? Google can read most people's e-mails, and will probably use YouTube videos for video. Microsoft can read Teams messages, plus there is the new feature in Windows 11 that records everything you do. Microphones and cameras on your computers and smartphones can get more data, so I wonder if we will soon get devices where you cannot turn these off. Every other device (e.g. a smart TV) will probably also spy on you as much as they can, because even if they are not in the AI business, they can still sell the data to those who are.

We're definitely on/past the inflection point of the GPT S-curve, but at the same time there's been work on other AI methods and we know they're in principle possible. What is needed to break the data wall is some AI that can autonomously collect new data, especially in subjects where it's weak.

One key question is how much investment AI companies make into this. E.g. the obvious next step to me would be to use a proof assistant to become superhuman at math by self-play. I know there's been a handful of experiments with this, but AFAIK it hasn't been done at huge scale or incorporated into current LLMs. Obviously they're not gonna break into the next S-curve until they try to do so.

I wonder if it even economically pays to break the data-wall. Like, let's say an AI company could pivot their focus to breaking the data-wall rather than focusing on productivizing their AIs. That means they're gonna lag behind in users, which they'd have to hope they could make up for by having a better AI. But once they break the data-wall, competitors are presumably gonna copy their method.

But once they break the data-wall, competitors are presumably gonna copy their method.

Is the assumption here that corporate espionage is efficient enough in the AI space that inventing entirely novel methods of training doesn't give much of a competitive advantage?

Hm, I guess this was kind of a cached belief from how it's gone down historically, but I guess they can ( and have) increased their secrecy, so I should probably invalidate the cache.

Modern AI is trained on a huge fraction of the internet, especially at the cutting edge, with the best models trained on close to all the high quality data we’ve got.[1] And data is really important! You can scale up compute, you can make algorithms more efficient, or you can add infrastructure around a model to make it more useful, but on the margin, great datasets are king. And, naively, we’re about to run out of fresh data to use.

It’s rumored that the top firms are looking for ways to get around the data wall. One possible approach is having LLMs create their own data to train on, for which there is kinda-sorta a precedent from, e.g. modern chess AIs learning by playing games against themselves.[2] Or just finding ways to make AI dramatically more sample efficient with the data we’ve already got: the existence of human brains proves that this is, theoretically, possible.[3]

But all we have, right now, are rumors. I’m not even personally aware of rumors that any lab has cracked the problem: certainly, nobody has come out and say so in public! There’s a lot of insinuation that the data wall is not so formidable, but no hard proof. And if the data wall is a hard blocker, it could be very hard to get AI systems much stronger than they are now.

If the data wall stands, what would we make of today’s rumors? There’s certainly an optimistic mood about progress coming from AI company CEOs, and a steady trickle of not-quite-leaks that exciting stuff is going on behind the scenes, and to stay tuned. But there are at least two competing explanations for all this:

Top companies are already using the world’s smartest human minds to crack the data wall, and have all but succeeded.

Top companies need to keep releasing impressive stuff to keep the money flowing, so they declare, both internally and externally, that their current hurdles are surmountable.

There’s lots of precedent for number two! You may have heard of startups hard coding a feature and then scrambling to actually implement it when there’s interest. And race dynamics make this even more likely: if OpenAI projects cool confidence that it’s almost over the data wall, and Anthropic doesn’t, then where will all the investors, customers, and high profile corporate deals go? There also could be an echo chamber effect, where one firm acting like the data wall’s not a big deal makes other firms take their word for it.

I don’t know what a world with a strong data wall looks like in five years. I bet it still looks pretty different than today! Just improving GPT-4 level models around the edges, giving them better tools and scaffolding, should be enough to spur massive economic activity and, in the absence of government intervention, job market changes. We can’t unscramble the egg. But the “just trust the straight line on the graph” argument is ignoring that one of the determinants of that line is running out. There’s a world where the line is stronger than that particular constraint, and a new treasure trove of data appears in time. But there’s also a world where it isn’t, and we’re near the inflection of an S-curve.

Rumors and projected confidence can’t tell us which world we’re in.

For good analysis of this, search for the heading “The data wall” here.

But don’t take this parallel too far! Chess AI (or AI playing any other game) has a signal of “victory” that it can seek out - it can preferentially choose moves that systematically lead to the “my side won the game” outcome. But the core of a LLM is a text predictor: “winning” for it is correctly guessing what comes next in human-created text. What does self-play look like there? Merely making up fake human-created text has the obvious issue of amplifying any weaknesses the AI has - if an AI thought, for example, that humans said “fiddle dee dee” all the time for no reason, then it would put “fiddle dee dee” in lots of its synthetic data, and then AIs trained to predict on that dataset would “learn” this false assumption, and “fiddle dee dee” prevalence would go way up in LLM outputs. And this would apply to all failure modes, leading to wacky feedback loops that might make self-play models worse instead of better.

Shout out to Steven Byrnes, my favorite researcher in this direction.