Use this comment to find a partner to dialogue with.

Recommended actions:

- Set a 2-5 minute timer and brainstorm questions you'd be interested in discussing / questions you have been thinking about lately. Put them into a reply to this comment, and await DMs.

- Alternatively (or as well as): DM some users who left comments, saying which topics you'd like to discuss with them.

- If you find a match (i.e. you both want to discuss it with each other): open a dialogue, invite your partner, and write some opening thoughts of yours / questions for them.

- After a few rounds of writing back-and-forth, check in and (if you found the conversation interesting/substantive) agree to publish.

Good luck!

(People may of course say no for whatever reason. For an extra challenge you can also try to find a time to both show up together and write synchronously. You can also suggest topics to people that they didn't list.)

Here are some topics that I would be interested in talking about:

- I would be interested in just talking with some people about "the basic case for AI X-risk".

- I've found it quite valuable to go back and forth with people, going quite slowly and without many references to long chains of existing explanations, trying to explain what the exact mechanisms for AI risk are, and what our current models of the hope and plausibility of various approaches are.

- Is there much of any substance to stuff like Anthropic's "Responsible Scaling Policy"?

- I like some of the concrete commitments, but when I read it, I mostly get a vibe of some kind of abstract governance document that doesn't actually commit Anthropic to much, and is more designed to placate someone's concerns than to concretely set incentives or help solve a specific problem. It feels too abstract and meta to me (whereas a paper or post with the title "when should we halt further capabilities scaling?" seems like it would have gone for the throat and said something concrete; instead we got a document that implicitly, in the margins, assumed some stuff about when the right place to halt is, in a way that's really hard to argue with). But also, I don't have much experience with governing large organizations and coordinating industries, so maybe you do have to talk at this level of abstraction.

- How to build a community that integrates character evidence?

- One of the biggest lessons I took away from FTX was that if I want to build communities and infrastructure that don't end up being taken advantage of, I somehow need better tools for sharing character evidence, by which I mean evidence about behavioral patterns that didn't necessarily directly harm someone, but are correlated with people causing bad outcomes later. In the case of FTX, tons of people had access to important character evidence, but we had no infrastructure in place for sharing it, and indeed very few people knew about it (and the ones who knew usually were asked to treat the evidence as confidential). I have some ideas here, but still feel quite confused about what to actually do.

- I've been bouncing off of the Quintin Pope critiques of evolution and sharp-left-turn stuff.

- I would be pretty interested in debating this with someone who thinks I should spend more time on the critiques. Currently my feeling is that they make some locally valid points but don't really engage with any of my cruxes. Still, I do have a feeling they are supposed to respond to people with opinions like mine, so I would be excited about chatting with someone about it.

- Lots of people keep saying that AI Alignment research has accelerated a ton since people have started doing experiments on large models. I feel quite confused by this and I currently feel like alignment research in the last 2-3 years has stayed the same or slowed. I don't really know what research people are referring to when they talk about progress.

- This might just go into some deep "prosaic vs. nonprosaic" alignment research disagreement, but that also seems fine. I feel like basically the only thing that has happened in the last few years is some interpretability progress (though the speed of that seems roughly the same as it was back in 2017 when Chris Olah was working on Distill), and a bunch of variations on RLHF, which IMO don't really help at all with the hard parts of the problem. I can imagine something cool coming out of Paul's ELK-adjacent research, but nothing has so far, so that doesn't really sound like progress to me.

- How do we actually use AI to make LessWrong better?

- While I assign more probability to a faster takeoff than other people, giving us less time to benefit from the fruits of AI before things go badly, I still assign two-digit probabilities to slower takeoffs, and it does seem worth trying to take advantage of that to make AI Alignment go better. Short of automating researchers, what coordination and communication technology exists that could help make AI and the development of the art of rationality go better?

If you've written high-karma, high-quality posts or comments on LessWrong, or you've done other cool things that I've heard about, I would likely be up for giving a dialogue a try and setting aside something like 5-10 hours to make something good happen (though you should feel free to spend less time on it).

- I would be interested in just talking with some people about "the basic case for AI X-risk".

- I've found it quite valuable to go back and forth with people, going quite slowly and without many references to long chains of existing explanations, trying to explain what the exact mechanisms for AI risk are, and what our current models of the hope and plausibility of various approaches are.

I might be interested in this, depending on what qualifies as "basic", and what you want to emphasize.

I feel like I've been getting into the weeds lately, or watching others get into the weeds, on how various recent alignment and capabilities developments affect what the near future will look like, e.g. how difficult particular known alignment sub-problems are likely to be or what solutions for them might look like, how right various people's past predictions and models were, etc.

And to me, a lot of these results and arguments look mostly irrelevant to the core AI x-risk argument, for which the conclusion is that once you have something actually smarter than humans hanging around, literally everyone drops dead shortly afterwards, unless a lot of things before then have gone right in a complicated way.

(Some of these developments might have big implications for how things are likely to go before we get to the simultaneous-death point, e.g. by affecting the likelihood that we screw up earlier and things go off the rails in some less predictable way.)

But basically everything we've recently seen looks like it is about the character of mind-space and the manipulability of minds in the below-human-level region, and this just feels to me like a very interesting distraction most of the time.

In a dialogue, I'd be interested in fleshing out why I think a lot of results about below-human-level minds are likely to be irrelevant, and where we can look for better arguments and intuitions instead. I also wouldn't mind recapitulating (my view of) the core AI x-risk argument, though I expect I have fewer novel things to say on that, and the non-novel things I'd say are probably already better said elsewhere by others.

I might also be interested in having a dialogue on this topic with someone else if habryka isn't interested, though I think it would work better if we're not starting from too far apart in terms of basic viewpoint.

This sounds great! I'll invite you to a dialogue, and then if you can shoot off an opening statement, we can get started.

I'm interested in having a dialogue!

Some topics I've been thinking about lately:

- How much slack should one maintain in life?

- How much is the world shaped by human agency (vs other forces)?

- How evil ought one be? (My current answer: zero.)

I'm also open to DMs if there's another topic you would be interested to discuss with me that I didn't list :)

Edit: I am now working on one dialogue! Excited.

How evil ought one be? (My current answer: zero.)

I'd be happy to discuss a different view on this, Ben. My current answer: not zero.

I'm up for a dialogue!

What I can offer others: Some people say I give good advice. I have a broad, shallow knowledge of lots of things: if you're thinking "Has anyone looked at X yet?" or "There are no resources for problem Y!", chances are good I've already bookmarked something about the exact thing.

What I hope to gain: I'm most interested in any or all of the following topics:

- How precisely do human values/metaethics need to be encoded, rather than learned-later, to result in a non-doom-causing AGI? Can this be formalized/measured? (Context)

- How "smart" (experienced, talented, working-memory, already-up-to-speed) do I need to be about ML and/or maths, personally, to actually help with technical AI alignment? (Could discuss this question for any or all of the 3 main "assumption clusters", namely "QACI/agent-foundations/pessimism VS prosaic-alignment/LLMs/interpretability/whatever Quintin Pope is working on VS both sides (Wentworth(?))".)

- How deep in weirdness-points debt am I, already? Does this "block" me from doing anything actually helpful w.r.t. AI governance? (Basically the governance-version of the above topic.)

- Another version of the above 2 questions, but related to my personality/"intensity".

- Intelligence enhancement!

I'm interested in having dialogues/debates about deliberate practice and feedback loops, applied to fuzzy domains.

This can take a number of forms, either more like a debate or more like an interview:

- If you currently think "my domain is too fuzzy, it's not amenable to feedback loops, or not amenable to deliberate practice", I'm interested in exploring/arguing about that.

- If you think you have mastery over an interesting domain that many people think of as fuzzy/hard-to-train, I'd be interested in interviewing you about your process, and what little subskills go into that domain.

Might be interesting to chat about zen! While there is feedback to learn various things, I'll claim that the practice ultimately becomes about something more like letting feedback loops rest so that you can become a feedforward system when no feedback is needed.

I'd be up for a dialogue mostly in the sense of the first bullet about "making sense of technical debates as a non-expert". As far as it's "my domain" it's in the context of making strategic decisions in R&D, but for example I'd also consider things like understanding claims about LK-99 to fall in the domain.

I think on and off about how one might practice (here's one example) and always come away ambivalent. Case studies and retrospectives are valuable, but lately, I tend to lean more pragmatic—once you have a basically reasonable approach, it's often best to come to a question with a specific decision in mind that you want to inform, rather than try to become generically stronger through practice. (Not just because transfer is hard, but also for example because the best you can do often isn't that good, anyway—an obstacle for both pragmatic returns and for feedback loops.) And then I tend to think that the real problem is social—the most important information is often tacit or embedded in a community's network (as in these examples)—and while that's also something you can learn to navigate, it makes systematic deliberate practice difficult.

I'd like to have a dialogue with someone also speculating about:

- The current state of polygraph technology

- The scope and extent of modern intel agency interference in politics and regular life

- AI's current and near-term impact on state capacity and how it should affect the strategic thinking of AI safety advocates.

I'm potentially up for another dialogue.

I'm often very up for interviewing people (as I've done here and here) -- if I have some genuine interest in the topic, and if it seems like lesswrong readers would like to see the interview happen. So if people are looking for someone-to-help-you-get-your-thoughts-out, I might be down. (See also the interview request form.)

For interviewing folks, I'm interested in more stuff than I can list. I'll just check what others are into being interviewed about and if there's a match.

For personally dialogueing, topics I might want to do (writing these out, quickly and roughly, rather than not at all!)

- metaphors and isomorphisms between lean manufacturing <> functional programming <> maneuver warfare, as different operational philosophies in different domains that still seem to be "climbing the same mountain from different directions"

- state space metaphors applied to operations. I have some thoughts here, for thinking about how effective teams and organisations function, drawing upon a bunch of concepts and abstractions that I've mostly found around writing by Wentworth and some agent foundations stuff... I can't summarise it succinctly, but if someone is curious upon hearing this sentence, we could chat

- strategically, what are technologies such that 1) in our timeline they will appear late (or too late) on the automation tree, 2) they will be blocking for accomplishing certain things, and 3) there's tractable work now for causing them to happen sooner? For example: will software and science automation progress to a point where we will be able to solve a hard problem like uploading, in a way that then leaves us blocked on something like "having 1000 super-microscopes"? And if so, should someone just go try to build those microscopes now? Are there other examples like this?

- I like flying and would dialogue about it :)

- enumerative safety sounds kind of bonkers... but what if it isn't? And beyond that, what kind of other ambitious, automated, alignment experiments would it be good if someone tried?

- Cyborg interfaces. What are natural and/or powerful ways of exploring latent space? I've been thinking about sort of mindlessly taking some interfaces over which I have some command (a guitar, a piano, a stick-and-rudder) and hooking them up to something allowing me to "play latent space" or "cruise through latent space". What other metaphors are there here? What other interfaces might be cool to play around with?

Lots of these sound interesting, even though I'm not sure how much I can add to the discussion. Perhaps...?

If you don't want to put your questions in public, there's a form you can fill in, where only the LessWrong team sees your suggestions and will do the matchmaking to connect you with someone only if it seems like a good mutual fit :)

Here's the dialogue matchmaking form: https://forms.gle/aNGTevDJxxbAZPZRA

I'd be interested in doing something resembling an interview/podcast, where my main role is to facilitate someone else talking about their models (and maybe asking questions that get them to look closer at blurry parts of their own models). If you have something you want to talk/write about, but don't feel like making a whole post about it, consider asking me to do a dialogue with you as a relatively low-effort way to publish your takes.

Some potential topics:

- What's your organization trying to do? What are some details about the world that are informing your current path to doing that?

- What are you spending your time on, and why do you think it's useful?

- What's a common mistake (or class of mistake) you see people making, and how might one avoid it?

So, if you have something you want to talk about, and want to recruit me as an interlocutor/facilitator, let me know.

Ideas I'm interested in playing with:

- experiment with using this feature for one-on-one coaching / debugging; I'd be happy to help anyone with their current bugs... (I suspect video call is the superior medium but shrug, maybe there will be benefits to this way)

- talk about our practice together (if you have a 'practice' and know what that means)

Topics I'd be interested in exploring:

- Why meditation? Should you meditate? (I already meditate, a lot. I don't think everyone should "meditate". But everyone would benefit from something like "a practice" that they do regularly. Where 'practice' I'm using in an extremely broad sense and can include rationality practices.)

- Is there anything I don't do that you think I should do?

- How to develop collective awakening through technology

- How to promote, maintain, develop, etc. ethical thoughts, speech, and action through technology

I'm interested in having a dialogue. Things I'm interested in:

- Deep learning theory, from high-level abstract stuff like singular learning theory and tensor programs, to mechanistic interpretability[1], to probably-relevant empirical results in ML that seem weird or surprising and that theories seem like they have to explain in order to succeed.

- Whether, and under what conditions, interpretability (and deep learning theory more generally) is good, and more broadly: how can we do interpretability & deep learning theory in the most beneficial way possible?

- Alignment strategy. I have many opinions, and lots of confusions.

- What caused the industrial revolution? I don't know much about this (though from my understanding, nobody knows much about it), but I am curious about it.

- I'm also very curious about the history of science, and am always open to having someone talk to me about this, even outside dialogues. If you see me randomly on the street, and I have my headphones in, and am on the other side of the street from you, but you know something about the history of science that you want to tell someone, come to the other side of the street, get my attention, and tell me so! I may not want to talk in that moment, but I will at least schedule a meet-up with you to talk.

- Topics in theories of optimization & agency. Ranging from the most concrete & narrow topics in mathematical programming, to broad MIRI-esque questions about the core of consequentialism, and everything in between.

- Lots of other stuff! I think I'm usually pretty interested in people's niche interests they really want to talk about, but I haven't ever subjected that statement to pressure under the kind of adverse selection you get when putting out such a general call.

A side comment: I really love the dialogues idea. The dialogues I've read so far have been really good, and they seem like a fast way of producing lots of really high-quality public ideas and explainers. Hopefully Plato was right that the best way to transmit and consume philosophy is via watching and having dialogues, and the ideas on LessWrong get better.

[1] Which I think of as being strategically very similar to deep learning theory, as it tries to answer many very similar questions.

Topics I would be excited to have a dialogue about [will add to this list as I think of more]:

- I want to talk to someone who thinks p(human extinction | superhuman AGI developed in next 50 years) < 50% and understand why they think that

- I want to talk to someone who thinks the probability of existential risk from AI is much higher than the probability of human extinction due to AI (i.e. most x-risk from AI doesn't come from scenarios where all humans end up dead soon after)

- I want to talk to someone who has thoughts on university AI safety groups (are they harmful or helpful?)

- I want to talk to someone who has pretty long AI timelines (median >= 50 years until AGI)

- I want to have a conversation with someone who has strong intuitions about what counts as high/low integrity behaviour. Growing up I sort of got used to lying to adults and bureaucracies and then had to make a conscious effort to adopt some rules to be more honest. I think I would find it interesting to talk to someone who has relevant experiences or intuitions about how minor instances of lying can be pretty harmful.

- If you have a rationality skill that you think can be taught over text, I would be excited to try learning it.

I mostly expect to ask questions and point out where and why I'm confused or disagree with your points rather than make novel arguments myself, though am open to different formats that make it easier/more convenient/more useful for the other person to have a dialogue with me.

I'm interested in having dialogues about various things, including:

- Responsible scaling policies

- Pros and cons of the Bay Area / how the vibe in the Bay Area community has changed [at least in my experience]

- AI policy stuff in general (takes on various policy ideas, orgs, etc.)

- Takeaways from talking to various congressional offices (already have someone for this one but I think it'd likely be fine for others to join)

- General worldview stuff & open-ended reflection stuff (e.g., things I've learned, mistakes I've made, projects I've been proudest of, things I'm uncertain about, etc.)

I would be interested in dialoguing about:

- why the "imagine AI as a psychopath" analogy for possible doom is or isn't appropriate (I brought this up a couple months ago and someone on LW told me Eliezer argued it was bad, but I couldn't find the source and am curious about reasons)

- how to maintain meaning in life if it seems that everything you value doing is replaceable by AI (and mere pleasure doesn't feel sustainable)

I'd love to have a dialogue.

Topics:

1. Alignment strategy. From here to a good outcome for humanity.

2. Alignment difficulty. I think this is a crux of 1) and that nobody has a good estimate right now.

3. Alignment stability. I think this is a crux of 2) and nobody has written about this much.

4. Alignment plans for RL agents, particularly the plan for mediocre alignment.

5. Alignment plans for language model agents (not language models), for instance, this set of plans.

I like learning and am generally interested in playing the role of learner in a conversation where someone else plays the role of teacher.

I'm interested in being a dialogue partner for any of the things I tend to post about, but maybe especially:

- Explosive economic growth—I'm skeptical of models like Tom Davidson's but curious to probe others' intuitions. For example, what would speeding up hardware research by a factor of 10 or more look like?

- Molecular nanotechnology, for example clarifying my critique of the use of equilibrium statistical mechanics in Nanosystems

- Maybe in more of an interviewer/rubber-duck role: Forecasting and its limits, professional ethics in EA, making sense of scientific controversies

- My own skepticism about AI x-risk as a priority

Maybe in more of an interviewer/rubber-duck role: Forecasting and its limits,

Doublechecking understanding: in this scenario you’re looking for someone who has opinions about forecasting, and interviewing them? Or did you wanna be interviewed?

The first! These are things I think about sometimes, but I suspect I'd be better at asking interesting questions than at saying interesting things myself.

We just made some Manifold markets on how dialogues will shape up:

And a similar market for arbitrage action:

And a meta-market for the fun of it :)

Warning: Dialogues seem like such a cool idea that we might steal them for Manifold (I wrote a quick draft proposal).

On that note, I'd love to have a dialogue on "How do the Manifold and Lightcone teams think about their respective lanes?"

It seems to me that these types of conversations would benefit if they were not chains but trees instead. When two people have a disagreement or a different point of view, there is usually some root cause of this disagreement. When the conversation is a chain, I think it likely results in one person explaining her arguments/making several points, the other having to expand on each, and then at some point, in order for this not to result in massively long comments, the participants have to paraphrase, summarise or ignore some of the arguments to keep it concise. If there were an option at some point to split the conversation into several branches, it could make the comments shorter, easier to read, and able to go deeper. Also, for a reader not participating in the conversation, it could be easier to follow the conversation and get to the main point influencing his view.

I'm not sure if something like this was done before and it would obviously require a lot more work on the UI, but I just wanted to share the idea as it might be worth considering.

Came here to comment this. As it is, this seems like just talking on discord/telegram, but with the notion of publishing it later. What I really lack when discussing something is the ability to branch out and backtrack easily and have a view of all conversation topics at once.

That being said, I really like the idea of dialogues, and I think this is a push in the right direction. I have greatly enjoyed the dialogues I've read so far. Excited to see where this goes.

Thinking more about this, and about friends who said that non-tree/non-DAG dialogues are unappealing to them too (especially in contexts where I was inviting them to have a dialogue together): would there be interest in a PR for this feature? I might work on this directly.

sure, I'm actually not suggesting that it should necessarily be a feature of dialogues on LW, it was just a suggestion for a different format (my comment got almost opposite karma/agreement votes, so maybe this is the reason?). it also depends on how often you would use the branching - my guess is that most conversations don't require it at every point, but a few times in the whole conversation it might be useful.

Perhaps instead of a tree it would be better to have a directed acyclic graph, since IME even if the discussion splits off into branches one often wants at some point to respond to multiple endpoints with one single comment. But I don't know if there's really a better UI for that shape of discussion than a simple flat thread with free linking/quoting of earlier parts of discussions. I don't think I have ever seen a better UI for this than 4chan's.

yeah definitely, there could be a possibility for quoting/linking answers from other branches - I haven't seen any UI that would support something like it, but my guess is also that it wouldn't be too difficult to make one. my thinking was that there would be one main branch and several smaller branches that could connect to the main one, so that some points can be discussed in greater depth. also, the branching should probably not happen all the time, but just occasionally, when both participants agree to it.

PSA - at least as of March 2024, the way to create a Dialogue is by navigating to someone else's profile and to click the "Dialogue" option appearing near the right, next to the option to message someone.

I'm working on an adversarial collaboration with Abram Demski atm, but after that's finished, I'd be open to having a dialog here on decision theory.

Dialogues seem under-incentivised relative to comments, given the amount of effort involved. Maybe they would get more karma if we could vote on individual replies, so it's more like a comment chain?

This could also help with skimming a dialogue because you can skip to the best parts, to see whether it's worth reading the whole thing.

I don't see a reason to give dialogues more karma than posts, but I agree posts (including dialogues) are under-incentivized relative to comments.

I am sad they're not getting as much use. I have wondered if they would work well as part of the comment section UI, where if you're having a back-and-forth with someone, the site instead offers you "Would you like to have a dialogue instead?" with a single button.

Dialogues are more difficult to create (if done well between people with different beliefs), and are less pleasant to read, but are often higher value for reaching true beliefs as a group.

The dialogues I've done have all been substantially less time investment than basically any of my posts.

Hmm good point. Looking at your dialogues has changed my mind, they have higher karma than the ones I was looking at.

You might also be unusual on some axis that makes arguments easier. It takes me a lot of time to go over people's words and work out what beliefs are consistent with them. And the inverse, translating a model into words, also takes a while.

I'd enjoy having a dialogue.

Some topics that seem interesting to me:

Neuroscience, and how I see it being relevant to AI alignment.

Corrigibility (not an expert here, but excited to learn more and hear others' ideas). Especially interested in figuring out if anyone has ideas about how to draw boundaries around what would constitute 'too much manipulation' from a model or how to measure manipulation.

Compute Governance, why it might be a good idea but also might be a bad idea.

Why I think simulations are valuable for alignment research. (I'm referring to simulations as in simulated worlds or game environments, not as in viewing LLMs as simulators of agents).

I'm a software developer and father interested in:

- General Rationality: e.g. WHY does Occam's Razor work? Does it work in every universe?

- How rationality can be applied to thinking critically about CW/politics in a political-party agnostic way

- Concrete understanding of how weird arguments (Pascal's Wager, the Simulation Hypothesis, Roko's B, etc.) do or don't work

- AI SOTA, e.g. what could/should/will OpenAI release next?

- AI Long Term arguments from "nothing burger" all the way to Yudkowsky

- Physics, specifically including Quantum Physics and Cosmology

- Lesswrong community expansion/outreach

Time zone is central US. I also regularly read Scott Alexander.

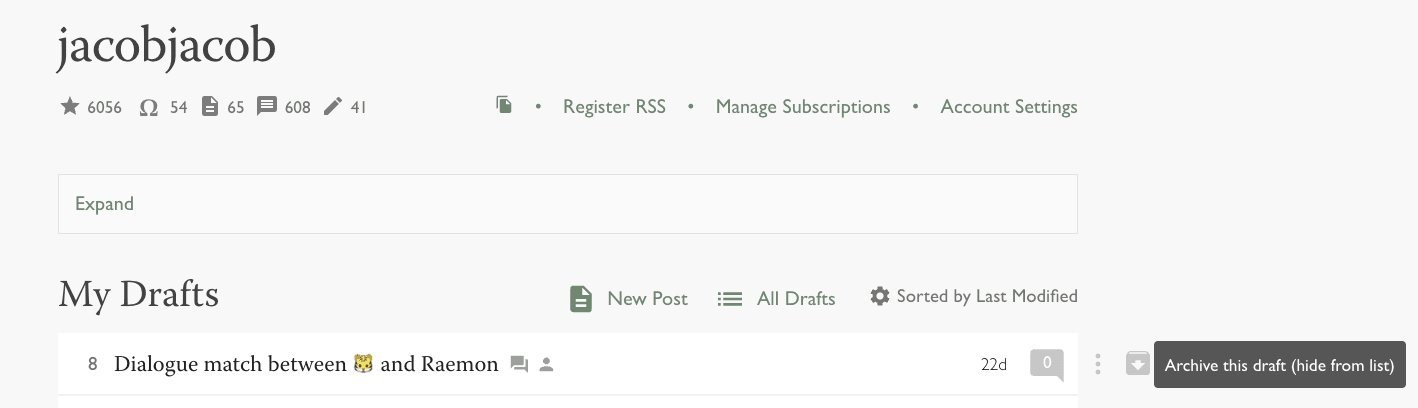

You can go to your profile page and press the "Archive" icon, which appears when hovering to the right of a dialogue.

Hello,

I did enjoy the dialogues I've read so far, and would like to join one.

I tend to read a lot of different things, and as such am much better at drawing parallels and seeing associative links between different concepts or structures of thought than at delving into one in particular. This is useful for finding novel solutions to problems that are almost impossible to solve with a hammer, but that you might have more luck with if you use a pair of scissors.

It is hard to know if the issue is one that is best solved with a hammer or with something else, but I do believe I can help in dismissing some fruitless avenues. So if you are just curious about having someone explore different takes on something (as little or much as you want), let me know.

Besides the more general points above, I care about these things. You don't have to be familiar with them per se to have a dialogue about them, but for it to work, I assume you will have to have an equal interest in something else that we can share our take on, and I want it to be decently advanced as well. I'm not much of a teacher, and don't like to work on the basics unless they are related to a more fundamental, meta-level of understanding.

- MBTI - Cognitive functions and their very different inner "Logic". Would love to speak with sensors, especially about which skills they have developed, when, why etc.

- Nonviolent communication (practices, issues, uses) - Dyads, group processes, organizations

- Internal Family Systems

- Spiral Dynamics - An outline theory about complexity-development; Complexity & abstraction of ideas, feelings, self, world etc

- Typical Mind Fallacy; What does it include or not, and could there be other explanations? What about neurodivergent vs neurotypical. How big are the differences in minds really, and are there ways to bridge the gap successfully?

Much, much more, but that is the general gist. I relate all these concepts together, and add in more as well if I find they fit somehow.

So hit me up or invite me if you are even mildly curious, and we'll take it from there.


I'm a former quant now figuring out how to talk to tech people about love (I guess it's telling that I feel a compelling pressure to qualify this).

Currently reading:

https://www.nytimes.com/2023/10/16/science/free-will-sapolsky.html

Open to talking about anything in this ballpark!

Interested in dialoguing on many topics, especially economic/social policy. My aims are threefold: to experiment with a favorite form of communication, to gain real insight, and/or to have fun. Particularly interested in:

- Nuclear: pro vs. anti-nuclear in the energy mix

- Ideally, I'd like to see whether my lukewarm skepticism of nuclear can be defeated with clear and good pro-nuclear arguments

- If there's also someone with stronger anti-nuclear views than mine, I'd be keen to have a trialogue (the strong anti, the strong pro, and me moderating as an expert on energy systems and economics, though without deep expertise on nuclear)

- Refugee/immigration policy: i. what this could ideally look like, or ii. how one could realistically tweak the current system in the right direction

- Terrorism: Under what circumstances, if any, may terrorism be defensible?

- Geopolitics and Economics: How to deal with regimes we're wary of?

- Dictatorships vs. Democracy/Democrazy: i. Dictatorship as attractor state in today's era, or ii. "If I really were a powerful dictator, I'd have to be insane to voluntarily give up in today's world"

- Illusionism of Consciousness

As of today, everyone is able to create a new type of content on LessWrong: Dialogues.

In contrast with posts, which are for monologues, and comment sections, which are spaces for everyone to talk to everyone, a dialogue is a space for a few invited people to speak with each other.

I'm personally very excited about this as a way for people to produce lots of in-depth explanations of their world-models in public.

I think dialogues make this feel easier: instead of writing an explanation for anyone who might read it, you're communicating with the particular person you're talking with. At the same time, they give readers a lot of the rich nuance I normally only find when I overhear people talking in person.

In the rest of this post I'll explain the feature, and then encourage you to find a partner in the comments to try it out with.

What do dialogues look like?

Here is a screenshot of a dialogue with 3 users.

Behind the scenes, the editor interface is a bit different from other editors we've seen on the internet. It's inspired by collaborating in Google Docs, where you're all editing a document simultaneously and can see the other person's writing in progress.

This also allows all participants to draft thoughtful replies simultaneously, and to see what the other person is planning to talk about next.

How do I create a dialogue?

First, hit the "New Dialogue" button in the menu in the top-right of the website.

This pops up a box where you are invited to give your dialogue a title and invite some people.

Then you'll be taken to the editor page!

Now you can start writing. The other dialogue participants will receive a notification and the dialogue will appear in their drafts. (Only invite people to dialogues with their consent!)

What are some already published dialogues?

Here are some links to published dialogues that have used this feature.

Do you have any suggestions about how I can find dialogue partners and topics?

This is a new format for LessWrong, and we don't yet have much experience of people using it to learn from.

Nonetheless, I asked Oliver Habryka for his thoughts on the question, and he wrote the following answer.

Is there any etiquette for writing dialogues with a partner?

We've only run around 10 of these dialogues, so I'm sure we'll learn more as we go.

However, to give any guidance at all, I'll suggest the following things.

If you're talking synchronously, it's okay to be drafting a comment while the other person is doing the same. It's even okay to draft a reply to what they're currently writing, as long as you let them publish their reply first.

(I think this gives all the advantages of interrupt culture — starting to reply as soon as you've understood the gist of the other person's response, or starting to reply as soon as an idea hits you — with none of the costs, as the other person still gets to take their time writing their response in full.)

It's fine to occasionally do a little meta conversation in the dialogue. ("I've got dinner soon" "It seems like you're thinking, do you want time to reflect?" "Do you have more on this thread you want to discuss, or can I change topics?"). If you want to, at the end, you can also cut some of this out, as the post is still editable. However, if you want to make an edit to or cut anything that your partner writes, this will become a suggestion in the editor, for your partner to accept or reject.

If you start a dialogue with someone, it helps to open with either your position, or with some questions for them.

Don't leave the dialogue hanging for more than a day without letting your interlocutor know when to expect your reply, or letting them know that you're no longer interested in finishing the dialogue.

How can I find a partner today to try out this new feature with?

One way is to use this comment section!

Reply to this comment with questions you're interested in having a dialogue about.

Then you can DM people who leave comments that you'd like to talk with.

You can also reach out to friends and other people you know as well :-)