It's worthy of a (long) post, but I'll try to summarize. For what it's worth, I'll die on this hill.

General intelligence = Broad, cross-domain ability and skills.

Narrow intelligence = Domain-specific or task-specific skills.

The first subsumes the second at some capability threshold.

My bare bones definition of intelligence: prediction. It must be able to consistently predict itself & the environment. To that end it necessarily develops/evolves abilities like learning, environment/self sensing, modeling, memory, salience, planning, heuristics, skills, etc. Roughly what Ilya says about token prediction necessitating good-enough models to actually be able to predict that next token (although we'd really differ on various details)

Firstly, it's based on my practical and theoretical knowledge of AI and insights I believe to have had into the nature of intelligence and generality for a long time. It also includes systems, cybernetics, physics, etc. I believe a holistic view helps inform best w.r.t. AGI timelines. And these are supported by many cutting edge AI/robotics results of the last 5-9 years (some old work can be seen in new light) and also especially, obviously, the last 2 or so.

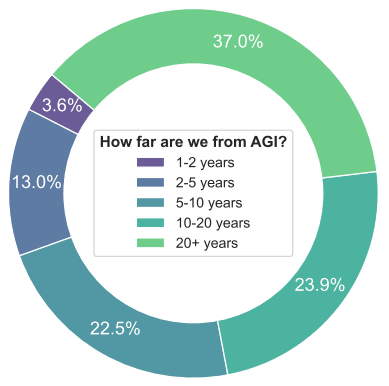

Here are some points/beliefs/convictions I have for thinking AGI for even the most creative goalpost movers is basically 100% likely before 2030, and very likely much sooner. A fast takeoff also, understood as the idea that beyond a certain capability threshold for self-improvement, AI will develop faster than natural, unaugmented humans can keep up with.

It would be quite a lot of work to make this very formal, so here are some key points put informally:

- Weak generalization has been already achieved. This is something we are piggybacking off of already, and there is meaningful utility since GPT-3 or so. This is an accelerating factor.

- Underlying techniques (transformers , etc) generalize and scale.

- Generalization and performance across unseen tasks improves with multi-modality.

- Generalist models outdo specialist ones in all sorts of scenarios and cases.

- Synthetic data doesn't necessarily lead to model collapse and can even be better than real world data.

- Intelligence can basically be brute-forced it looks like, so one should take Kurzweil *very* seriously (he tightly couples his predictions to increase in computation).

- Timelines shrunk massively across the board for virtually all top AI names/experts in the last 2 years. Top Experts were surprised by the last 2 years.

- Bitter Lesson 2.0.: there are more bitter lessons than Sutton's, which are that all sorts of old techniques can be combined for great increases in results. See the evidence in papers linked below.

- "AGI" went from a taboo "bullshit pursuit for crackpots", to a serious target of all major labs, publicly discussed. This means a massive increase in collective effort, talent, thought, etc. No more suppression of cross-pollination of ideas, collaboration, effort, funding, etc.

- The spending for AI only bolsters, extremely so, the previous point. Even if we can't speak of a Manhattan Project analogue, you can say that's pretty much what's going on. Insane concentrations of talent hyper focused on AGI. Unprecedented human cycles dedicated to AGI.

- Regular software engineers can achieve better results or utility by orchestrating current models and augmenting them with simple techniques(RAG, etc). Meaning? Trivial augmentations to current models increase capabilities - this low hanging fruit implies medium and high hanging fruit (which we know is there, see other points).

I'd also like to add that I think intelligence is multi-realizable, and generality will be considered much less remarkable soon after we hit it and realize this than some still think it is.

Anywhere you look: the spending, the cognitive effort, the (very recent) results, the utility, the techniques...it all points to short timelines.

In terms of AI papers, I have 50 references or so I think support the above as well. Here are a few:

SDS : See it. Do it. Sorted Quadruped Skill Synthesis from Single Video Demonstration, Jeffrey L., Maria S., et al. (2024).

DexMimicGen: Automated Data Generation for Bimanual Dexterous Manipulation via Imitation Learning, Zhenyu J., Yuqi X., et in. (2024).

One-Shot Imitation Learning, Duan, Andrychowicz, et al. (2017).

Model-Agnostic Meta-Learning for Fast Adaptation of Deep Networks, Finn et al., (2017).

Unsupervised Learning of Semantic Representations, Mikolov et al., (2013).

A Survey on Transfer Learning, Pan and Yang, (2009).

Zero-Shot Learning - A Comprehensive Evaluation of the Good, the Bad and the Ugly, Xian et al., (2018).

Learning Transferable Visual Models From Natural Language Supervision, Radford et al., (2021).

Multimodal Machine Learning: A Survey and Taxonomy, Baltrušaitis et al., (2018).

Can Generalist Foundation Models Outcompete Special-Purpose Tuning? Case Study in Medicine, Harsha N., Yin Tat Lee et al. (2023).

A Vision-Language-Action Flow Model for General Robot Control, Kevin B., Noah B., et al. (2024).

Open X-Embodiment: Robotic Learning Datasets and RT-X Models, Open X-Embodiment Collaboration, Abby O., et al. (2023).

Here's why I'm wary of this kind of argument:

First, we know that labs are hill-climbing on benchmarks.

Obviously, this tends to inflate model performance on the specific benchmark tasks used for hill-climbing, relative to "similar" but non-benchmarked tasks.

More generally and insidiously, it tends to inflate performance on "the sort of things that are easy to measure with benchmarks," relative to all other qualities that might be required to accelerate or replace various kinds of human labor.

If we suppose that amenability-to-benchmarking correlates with various other aspects of a given skill (which seems reasonable enough, "everything is correlated" after all), then we might expect that hill-climbing on a bunch of "easy to benchmark" tasks will induce generalization to other "easy to benchmark" tasks (even those that weren't used for hill-climbing), without necessarily generalizing to tasks which are more difficult to measure.

For instance, perhaps hill-climbing on a variety of "difficult academic exam" tasks like GPQA will produce models that are very good at exam-like tasks in general, but which lag behind on various other skills which we would expect a human expert to possess if that human had similar exam scores to the model.

Anything that we can currently measure in a standardized, quantified way becomes a potential target for hill-climbing. These are the "benchmarks," in the terms of your argument.

And anything we currently can't (or simply don't) measure well ends up as a "gap." By definition, we don't yet have clear quantitative visibility into how well we're doing on the gaps, or how quickly we're moving across them: if we did, then they would be "benchmarks" (and hill-climbing targets) rather than gaps.

It's tempting here to try to forecast progress on the "gaps" by using recent progress on the "benchmarks" as a reference class. But this yields a biased estimate; we should expect average progress on "gaps" to be much slower than average progress on "benchmarks."

The difference comes from the two factors I mentioned at the start:

Presumably things that are inherently harder to measure will improve more slowly – it's harder to go fast when you're "stumbling around in the dark" – and it's difficult to know how big this effect is in advance.

I don't get a sense that AI labs are taking this kind of thing very seriously at the moment (at least in their public communications, anyway). The general vibe I get is like, "we love working on improvements to measurable things, and everything we can measure gets better with scale, so presumably all the things we can't measure will get solved by scale too; in the meantime we'll work on hill-climbing the hills that are on our radar."

If the unmeasured stuff were simply a random sample from the same distribution as the measured stuff, this approach would make sense, but we have no reason to believe this is the case. Is all this scaling and benchmark-chasing really lifting all boats, simultaneously? I mean, how would we know, right? By definition, we can't measure what we can't measure.

Or, more accurately, we can't measure it in quantitative and observer-independent fashion. That doesn't mean we don't know it exists.

Indeed, some of this "dark matter" may well be utterly obvious when one is using the models in practice. It's there, and as humans we can see it perfectly well, even if we would find it difficult to think up a good benchmark for it.

As LLMs get smarter – and as the claimed distance between them and "human experts" diminishes – I find that these "obvious yet difficult-to-quantify gaps" increasingly dominate my experience of LLMs as a user.

Current frontier models are, in some sense, "much better at me than coding." In a formal coding competition I would obviously lose to these things; I might well perform worse at more "real-world" stuff like SWE-Bench Verified, too.

Among humans with similar scores on coding and math benchmarks, many (if not all) of them would be better at my job than I am, and fully capable of replacing me as an employee. Yet the models are not capable of this.

Claude-3.7-Sonnet really does have remarkable programming skills (even by human standards), but it can't adequately do my job – not even for a single day, or (I would expect) for a single hour. I can use it effectively to automate certain aspects of my work, but it needs constant handholding, and that's when it's on the fairly narrow rails of something like Cursor rather than in the messy, open-ended "agentic environment" that is the real workplace.

What is it missing? I don't know, it's hard to state precisely. (If it were easier to state precisely, it would be a "benchmark" rather than a "gap" and we'd be having a very different conversation right now.)

Something like, I dunno... "taste"? "Agency"?

"Being able to look at a messy real-world situation and determine what's important and what's not, rather than treating everything like some sort of school exam?"

"Talking through the problem like a coworker, rather than barreling forward with your best guess about what the nonexistent teacher will give you good marks for doing?"

"Acting like a curious experimenter, not a helpful-and-harmless pseudo-expert who already knows the right answer?"

"(Or, for that matter, acting like an RL 'reasoning' system awkwardly bolted on to an existing HHH chatbot, with a verbose CoT side-stream that endlessly speculates about 'what the user might have really meant' every time I say something unclear rather than just fucking asking me like any normal person would?)"

If you use LLMs to do serious work, these kinds of bottlenecks become apparent very fast.

Scaling up training on "difficult academic exam"-type tasks is not going to remove the things that prevent the LLM from doing my job. I don't know what those things are, exactly, but I do know that the problem is not "insufficient skill at impressive-looking 'expert' benchmark tasks." Why? Because the model is already way better than me at difficult academic tests, and yet – it still can't autonomously do my job, or yours, or (to a first approximation) anyone else's.

Or, consider the ascent of GPQA scores. As "Preparing for the Intelligence Explosion" puts it:

Well, that certainly sounds impressive. Certainly something happened here. But what, exactly?

If you showed this line to someone who knew nothing about the context, I imagine they would (A) vastly overestimate the usefulness of current models as academic research assistants, and (B) vastly underestimate the usefulness of GPT-4 in the same role.

GPT-4 already knew all kinds of science facts of the sort that GPQA tests, even if it didn't know them quite as well, or wasn't as readily able to integrate them in the exact way that GPQA expects (that's hill-climbing for you).

What was lacking was not mainly the knowledge itself – GPT-4 was already incredibly good at obscure book-learning! – but all the... other stuff involved in competent research assistance. The dark matter, the soft skills, the unmesaurables, the gaps. The kind of thing I was talking about just a moment ago. "Taste," or "agency," or "acting like you have real-world experience rather than just being a child prodigy who's really good at exams."

And the newer models don't have that stuff either. They can "do" more things if you give them constant handholding, but they still need that hand-holding; they still can't apply common sense to reason their way through situations that don't resemble a school exam or an interaction with a gormless ChatGPT user in search of a clean, decontextualized helpful-and-harmless "answer." If they were people, I would not want to hire them, any more than I'd want to hire GPT-4.

If (as I claim) all this "dark matter" is not improving much, then we are not going to get a self-improvement loop unless

I doubt that (1) will hold: the qualities that are missing are closely related to things like "ability to act without supervision" and "research/design/engineering taste" that seem very important for self-improvement.

As for (2), well, my best guess is that we'll have to wait until ~2027-2028, at which point it will become clear that the "just scale and hill-climb and increasingly defer to your HHH assistant" approach somehow didn't work – and then, at last, we'll start seeing serious attempts to succeed at the unmeasurable.