I was at MIRI from Sept 2022 to Sept 2023: full time from Sept 2022 to March 2023, and 1/4 time afterwards. The team was Vivek Hebbar, Peter Barnett and me initially; we brought on James Lucassen and Thomas Larsen from MATS around January, and Jeremy Gillen a bit after that. We were loosely mentored by Nate Soares, and we spent 1 week out of 6 talking to him for like 4 hours a day, which I think was a pretty strange format.

We were working on a bunch of problems, but the overall theme/goal started off as "characterize the sharp left turn" and evolved into getting fundamental insights about idealized forms of consequentialist cognition. For most of the time, we would examine a problem or angle, realize that it was intractable or not what we really wanted, and abandon it after a few days to months.

I'm pretty underwhelmed with our output, and the research process was also frustrating. But I don't want to say I got zero output, because I definitely learned to think more concretely about cognition -- this was the main skill Nate was trying to teach.

I think the project did not generate enough concrete problems to tackle, and also had an unclear theory of change. (I realized the first one around March, which is why I went part-time.) This meant we had a severe lack of feedback loops. The fault probably lies with a combination of our inexperience, Nate's somewhat eccentric research methodology, and difficulty communicating with Nate.

Also, some more specific questions that come up are:

- What were some difficulties communicating with Nate?

- Is there anything you can say about Nate's research methodology or is that mostly private?

Oh, there are a bunch of things I wish were different; I'll just give a list. (After we wrote this, Nate gave some important context, which you can find below.)

Communication

- Nate is very disagreeable and basically changes nothing about his behavior for social reasons. This made it overall difficult to interact with him.

- There's this thing Nate and Eliezer do where they proclaim some extremely nonobvious take about alignment, say it in the same tone they would use to declare that grass is green, and don't really explain it. (I didn't talk to Eliezer much but I've heard this from others too.)

- Nate thinks in a different ontology from everyone, and often communicates using weird analogies.

- e.g. he pointed out a flaw in some of our early alignment proposals, where we train the AI to have some property relevant to alignment, but it doesn't learn it well. He communicated this using the IMO unhelpful analogy that you could train me with the outer objective of predicting what chess move Magnus Carlsen would make, and I wouldn't be able to actually beat chess grandmasters.

- When Nate thinks you don't understand something or have a mistaken approach, he gets visibly distressed and sad. I think this conditioned us to express less disagreement with him. I have a bunch of disagreements with his world model, and could probably be convinced of his position on like 1/3 of them, but I'm too afraid to bring them all up, and if I did he'd probably stop talking to me out of despair anyway.

- The structure where we would talk to Nate 4h/day for one out of every ~6 weeks was pretty bad for feedback loops. A short meeting every week would have been better, but Nate said this would be more costly for him.

- In my frustration at the lack of concrete problems I asked Nate what research he would approve of outside of the main direction. We thought of two ideas: getting a white-box system to solve Winograd schemas, and understanding style transfer in neural nets. I worked on these on and off for a few months without much progress, then went back to Nate to ask for advice. Nate clarified that he was not actually very excited about these directions himself, and it was more like "I don't see the relevance here, but if you feel excited by these, I could see this not being totally useless". (Nate gives his perspective on this below).

Other

- Someone on the project should have had more research experience. Peter has an MS but it's in physics; no one else had more than 1.5 years and many of us had only done SERI MATS.

- I wish we were like, spoon-fed concrete computer science problems. This is not very compatible with the goals of the project though, which were deconfusion.

- Nate wouldn't tell us about most of his object-level models, because they were adjacent to capabilities insights. Nate thought that there were some benefits to sharing with us, but if we were good at research we'd be able to do good work without it, and the probability of our succeeding was low enough that the risk-reward calculation didn't pan out.

- I think we were overly cautious with infosec. The model was something like: Nate and Eliezer have a mindset that's good for both capabilities and alignment, and so if we talk to other alignment researchers about our work, the mindset will diffuse into the alignment community, and thence to OpenAI, where it would speed up capabilities. I think we didn't have enough evidence to believe this, and should have shared more.

- Because we were stuck, the infohazards are probably not severe, and the value of mentorship increases.

- I think it's unlikely that we'd produce a breakthrough all at once. Therefore object level infohazards could be limited by being public until we start producing impressive results, then going private.

- We talked to Buck recently and he pointed out enough problems with our research and theory of change that I would have left the project earlier if I'd heard them earlier. (The main piece of information was that our ideas weren't sufficiently different from those of other CS people that there would be lots of low-hanging fruit to pluck.)

- Some of our work was about GOFAI (symbolic reasoning systems) and we could have engaged with the modern descendants of the field. We looked at SAT solvers enough to implement CDCL from scratch and read a few papers, but could have looked at other areas like logic. I had the impression that most efforts at GOFAI failed but didn't and still don't have a good idea why.

I feel annoyed or angry I guess? When I reflect I don't have much resentment towards Nate though, he just has different models and a different style than most researchers and was acting rationally given those. I hope I'm not misrepresenting anything for emotional reasons, and if I am we can go back and fix afterwards.

Edit 9/26: I still endorse everything above.

I maybe have two lines of thoughts when I read this.

One is something like "yeah, that kinda matches other things I've heard about Nate/MIRI, and it seems moderately likely to me that something should change there". But, all the conversations I've had about it had a similar confidentiality-cloud around them, so I don't know many details, and wouldn't be very confident about what sort of changes would actually be net beneficial.

Another train of thought is something like "what if you guys had just... not had Nate at all? Would that have been better or worse? Can you imagine plans where actually you guys just struck out on your own as an independent alignment research team?"

One of my models is that "Whelp, people are spiky. Often, the things that are inconvenient about people are tightly entwined with the things that are valuable about them. Often people can change, but not in many of the obvious ways you might naively think." So I'm sort of modeling Nate (or Eliezer, although he doesn't sound as relevant) as sort of a fixed cognitive resource, without too much flexibility on how they can deploy that resource.

I joined MIRI because I was impressed with Vivek, and was betting on a long tail outcome from his collaboration with Nate. This could have been a few things...

- Maybe everyone trying to understand cognition (capabilities and alignment) is screwing up, and we can just do it properly and build an aligned AGI, or get >20% of the way there.

- Maybe everyone in alignment is neglecting certain failure modes Nate understood, e.g. what would eventually become Nate's post on Deep Deceptiveness, or the sharp left turn. It turned out that ARC was already thinking about these (ontology translation problems, inner search, etc.).

- Maybe we could publish a thing distilling Nate's views like Shard Theory in Nine Theses. This would be because Nate is bad at communication (see e.g. MIRI dialogues failing to resolve a bunch of disagreements).

- Maybe working with Nate would make us much better at alignment research (empirical or conceptual). This is the closest to being true, and I feel like I have a much better view of the problem than a year ago (although there is the huge confounder of the whole field having more concrete research directions than ever before).

Basically I wanted to speed up whatever Vivek was doing here, and early on I had lots of good conversations with him. (I was pretty excited about this despite Nate saying his availability for mentorship would be limited, which he also touches on below).

About whether we'd have done better without Nate ex ante, definitely no. Understanding Nate's research style and views, and making a bet on it, was the entire point of the project. There would be nothing separating us from other junior researchers, and agent foundations is hard enough that we would be really unlikely to succeed.

Ok, maybe a few conversational tracks that seem interesting to me are:

- Is there more stuff you generally wish people knew about your experience, or about MIRI?

- …something like “what life lessons can you learn here, that don’t depend so much on changing MIRI/Nate?”

- Any object level meta-research-process stuff you learned that seems interesting?

- Any thoughts on… I dunno, basically anything not covered by the first three categories, but maybe a more specific option is “is there a problem here that you feel sort of confused about that you could use help thinking through?”

One big takeaway is that general research experience, that you gain over time, is a real thing. I’d heard this from others like Mark Xu, but I actually believe it in my heart now.

Furthermore, I think there are some research domains that are more difficult than others just based on how hard it is to come up with a line of attack on a problem that's both tractable and relevant. If you fail at either of these then your research is useless. Several times we abandoned directions because they would be intractable or irrelevant -- and I feel we learned very little from these experiences. It was super unforgiving and I now think this is a domain where you need way more experience to have a hope of succeeding.

I’m reminded of a scene in Oppenheimer. General Groves is pushing hard for compartmentalisation -- a strategy of, as I understood it, splitting the project into pieces where a person could work hard on one piece, but still lack enough other clues to piece together dangerous details about the plan as a whole (that could then presumably have been leaked to an adversary).

But at one point the researchers rebel against this by hosting cross-team seminars. They claim they can't think clearly about the problem if they're not able to wrangle the whole situation in their heads. Groves reluctantly accedes, but imposes limits on how many researchers can attend those seminars. (If I recall correctly.)

This is similar to complaints I’ve heard from past MIRI employees. It seemed pretty sensible to me for management to want to try a structure similar to the Manhattan Project one (not sure if directly inspired) to help with info-hazards, but also as a direct report I imagine it was pretty tricky to be asked to build Widget X, but without an understanding of what Widget X would ultimately be used for, and the tradeoff space surrounding how it was supposed to work.

I’m curious whether this dynamic also matches experiences you had at MIRI, and if you have any thoughts on it.

I have also heard this kind of thing from past MIRI employees. But I think our situation was different; we didn't have concrete pieces of the project that management had sliced off for us. Instead we had to, basically from scratch, come up with a solution to some chunk of the problem, without any object-level models.

There was one info bubble for the project. Ideas that Nate shared with us would not go outside this bubble without his approval. Our own ideas would be shared at our discretion. I'm not sure that the project would have been more successful if we had had full access to all of Nate's models, but it seems more likely.

So things Nate shared with you would not go outside the bubble without his permission. But given how open-ended the domain was, I'm confused how you made that delineation. If Nate said a thing that was a kind of a weird, abstract frame, and you then used that frame to have a derivative insight about agent foundations stuff… some of the insight was due to Nate and some due to your own reasoning… How do you know what you can and cannot share?

Yeah this was a problem. Since the entire point of the project was absorbing Nate's mindset, and all the info-hazards would come from this mindset diffusing towards frontier labs, the sharing situation was really difficult. I think this really disincentivized us from sharing anything, because before doing so we would have to have a long conversation about exactly how much of the insights touched on which ideas, and which other ideas they were descended from, and so on. This was pretty bad.

Okay, so you say in the past tense that this disincentivized you from sharing anything. How, if at all, do you feel it's affecting you right now? Do parts of your cognition still feel tied up in promises of confidentiality, in handicapping ways? Or do the promises feel siloed to what you worked on back then, with fewer implications these days?

Yes. Here are some:

What are you doing next?

- Trying to find projects with better feedback loops. I'm most excited about interpretability and control, where there has been an explosion of really good work lately. I think my time at MIRI gave me a desire to work on problems that are clean in a computer science way and also tractable, and not as conceptually fucked as "abstractions", "terminal values", etc. I want to work my way up towards mildly confused concepts like "faithfulness", "mild optimization", or "lost purposes".

I'm actively looking for projects especially with people who have some amount of experience (a PhD in something computer science related, or two papers at top ML conferences). If this is you and you want to work with me, send me a DM.

Who should work at MIRI? (already touched on but still could answer)

- As far as I know MIRI is not hiring for the specific theory we were doing. But in a similar situation to mine, you should have a few years of research experience, have read the MIRI curriculum, and ideally start off with a better understanding of Nate's worldview and failure modes than I did. It might help to have deep experience in other areas that aren't in the MIRI curriculum, like modern ML or RL or microeconomics or something, for the chance to bring in new ideas. I don't know what the qualifications are for other roles like engineering.

Any object level insights about alignment from the last year?

- A couple of recurring themes were dealing with reflection / metacognition, and ensuring that a system's ontology continues to mean what you want it to mean. I wasn't super involved but I think these killed a bunch of proposals.

They're key to the problem of creating an agent that's robustly pointed at something, which I think is important (in the EA sense) but maybe not tractable. Superintelligences will surely have some kind of metacognition, and it seems likely that early AGIs will too, and metacognition adds so many potential failure modes. I think we should solve as many subproblems as tractable, even if in practice some won't be an issue or we'll be able to deal with them on the fly.

Solving the full problem despite reflection / metacognition seems pretty out of reach for now. In the worst case, if an agent reflects, it can be taken over by subagents, refactor all of its concepts into a more efficient language, invent a new branch of moral philosophy that changes its priorities, or a dozen other things. There's just way too much to worry about, and the ability to do these things is -- at least in humans -- possibly tightly connected to why we're good at science. Maybe in the future, we discover empirically that we can make assumptions about what form reflection takes, and get better results.

One of my major thought-processes right now is “How can we improve feedback loops for ‘confusing research’?”. I’m interested in both improving feedback for ‘training’ (i.e. so you’re gaining skills faster/more-reliably, but not necessarily outputting an object level finished product that’s that valuable), and improving feedback for your outputs, so your on-the-job work can veer closer to your ultimate goal.

I think it’s useful to ask “how can we make feedback-loops a) faster, b) less noisy, c) richer, i.e. give you more data per unit-time.”

A few possible followup questions from here are:

- Do you have any general ideas of how to improve research feedback loops? Is there anything you think you could have done different last year in this department?

- What subskills do you think Alignment Research requires? Are there different subskills for Agent Foundations research?

- It seems right now you’re interested in “let’s work on some concrete computer science problems, where the ‘deconfusion/specification’ part is already done.” A) doublechecking that’s a good summary? B) While I have some guesses, I thought it’d be helpful to spell out why this seems helpful, or “the right next step?”.

Do you have any thoughts on any of those?

Yeah this seems a bit meta, but I have two thoughts:

First, I have a take on how people should approach preparadigmatic research, which I wrote up in a shortform here. When I read Kuhn's book, my takeaway was that paradigms are kind of fake. They're just approaches that catch on because they have been proven to solve lots of people's problems -- including people with initially different approaches. So I think that people should try to solve the problems that they think are important based on their view, and if this succeeds just try to solve other people's problems, and not worry too much about "confusing" research or "preparadigmatic" research.

A big component of Nate's deconfusion research methodology was to take two intuitions in tension -- an intuition that X is possible, and an intuition that X is impossible -- and explore concrete cases and try to prove theorems until you resolve this tension. I talked to Lawrence Chan, and he said that CHAI people did something similar with inverse RL. The tensions there were the impossibility theorems about inferring utility functions from behavior, and the fact that in practice I could look at your demonstrations and get a good idea of your preferences. Now inverse RL didn't go anywhere, but I feel this validates the basic idea. Still, in CHAI's case, they exited the deconfusion stage fairly quickly and got to doing actual CS theory and empirical work. At MIRI we never got there.
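One simple form of the inverse RL tension can be made concrete in a few lines. This is only a toy sketch: the actions, reward values, and planner functions below are hypothetical illustrations, not anything from CHAI's work or from the dialogue. It shows that the same observed choice is consistent with several different (reward, planner) pairs, which is the flavor of the impossibility results, even though in practice you would happily guess that this person likes coffee.

```python
# Toy illustration of reward unidentifiability from behavior alone.
# All names and numbers are hypothetical, chosen only for the example.
ACTIONS = ["tea", "coffee", "water"]


def rational_planner(reward):
    """Pick the action with the highest reward."""
    return max(ACTIONS, key=lambda a: reward[a])


def anti_rational_planner(reward):
    """Pick the action with the lowest reward."""
    return min(ACTIONS, key=lambda a: reward[a])


reward = {"tea": 1.0, "coffee": 3.0, "water": 0.0}
# Flip the sign of every reward.
negated_reward = {a: -r for a, r in reward.items()}
# Apply a positive affine transformation to every reward.
rescaled_reward = {a: 2.0 * r + 5.0 for a, r in reward.items()}

# Three different hypotheses about the demonstrator...
observed_a = rational_planner(reward)
observed_b = anti_rational_planner(negated_reward)
observed_c = rational_planner(rescaled_reward)

# ...all predict exactly the same behavior.
print(observed_a, observed_b, observed_c)
```

Behavior alone cannot separate these hypotheses; extra assumptions about the planner (for example, that the demonstrator is roughly rational) are what make the practical inference work.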

My current line-of-inquiry, these past 2 months, is about “okay, what do you do when you have an opaque, intractable problem? Are there generalizable skills on how to break impossible problems down?”

A worry I have is that people see alignment theoretical work and go “well this just seems fucked”, and then end up doing machine learning research that’s basically just capabilities (or, less ‘differentially favoring alignment’) because it feels easier to get traction on.

I don't have much actual machine learning experience, but from talking to dozens of people at ICML, my view is that if the average ICML author reached this kind of opaque intractable problem, they would just give up and do something else that would yield a paper. Obviously in alignment our projects are constrained by our end goal, but I think it's still not crazy to give up and work on a different angle. Sometimes the problem is actually too hard, and even if the actual problem that you need to solve to align the AI is easier we don't know what simplifying assumptions to make; this might depend on theoretical advances we don't have yet or knowing more about the AGI architecture which we don't have.

As for people doing easier and more capabilities relevant work because the more alignment-relevant problems are too hard, this could just be a product of their different worldviews; maybe they think alignment is easier.

Here’s a mental model I have about this:

AGI capabilities are on the x-axis, and alignment progress on the y-axis. Some research agendas proceed like the purple arrow (lots of capabilities externalities), whereas others proceed like the tiny black arrow.

If your view is that the difficulty of alignment is the dotted line, pursuing the purple arrow will look much better, as it will reach alignment before it reaches AGI. However, if you believe the difficulty is the upper solid black line, the purple approach is net negative, since it differentially moves us closer to pure capability than to alignment.
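Since the picture is described only in words, here is a minimal numeric sketch of the same mental model. Everything in it is an assumption made for illustration: the `Agenda` class, the slopes of the two arrows, the AGI threshold, and the two difficulty levels are made-up numbers, and it simplifies by treating each agenda as a straight line from the origin.

```python
from dataclasses import dataclass


@dataclass
class Agenda:
    name: str
    capabilities_per_step: float  # x-axis progress per unit of work (made-up units)
    alignment_per_step: float     # y-axis progress per unit of work (made-up units)


def reaches_alignment_first(agenda, alignment_difficulty, agi_threshold):
    """True if the agenda's arrow crosses the alignment line before the AGI line."""
    steps_to_alignment = alignment_difficulty / agenda.alignment_per_step
    if agenda.capabilities_per_step > 0:
        steps_to_agi = agi_threshold / agenda.capabilities_per_step
    else:
        steps_to_agi = float("inf")
    return steps_to_alignment < steps_to_agi


# Hypothetical numbers: the purple agenda has large capabilities externalities,
# the black agenda makes slower progress that is mostly alignment.
purple = Agenda("purple", capabilities_per_step=1.0, alignment_per_step=0.5)
black = Agenda("black", capabilities_per_step=0.01, alignment_per_step=0.1)

AGI_THRESHOLD = 10.0
EASY_ALIGNMENT = 3.0  # the "dotted line" view
HARD_ALIGNMENT = 8.0  # the "upper solid line" view

purple_if_easy = reaches_alignment_first(purple, EASY_ALIGNMENT, AGI_THRESHOLD)
purple_if_hard = reaches_alignment_first(purple, HARD_ALIGNMENT, AGI_THRESHOLD)
black_if_hard = reaches_alignment_first(black, HARD_ALIGNMENT, AGI_THRESHOLD)

print(purple_if_easy, purple_if_hard, black_if_hard)
```

With these made-up numbers the purple agenda looks fine under the easy view and bad under the hard view, which is the disagreement the model is meant to capture. The sketch ignores how slowly the black arrow moves in calendar time.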

If it's ok, I'll now add other members of the team so they can share their experiences.

I'd like to push back somewhat against the vibe of Thomas Kwa's experience.

I joined the team in May 2023, via working on similar topics in a project with Thomas Larsen. My experience was strongly positive; I’ve learned more in the last six months than in any other part of my life. On the other hand, by the standard of actually solving any part of alignment, the project was a failure. More research experience would have been valuable for us, but this is always true. It does seem like communication problems between Nate and the team slowed down the project by ~2x, but from my perspective it looked like Nate did a good job given his goals.

The infosec requirements seemed basically reasonable to me. The mentorship Nate gave us on research methodology in the last few months was incredibly valuable (basically looking at our research and pointing out when it was going off the rails, different flags and pointers for avoiding similar mistakes, etc.).

I found Thomas Kwa's frustration at the lack of concrete problems to be odd, because a large part of the skill we were trying to learn was the skill of turning vague conceptual problems/confusion into concrete problems. IMO we had quite a number of successes at this (although of course the vast majority of the time is not spent doing the fun work of solving the concrete problems, and also the majority of the time the direction doesn't lead somewhere useful).

I agree with Thomas that we did make a number of research mistakes along the way that slowed us down, and one of them was sometimes not looking at past literature enough. But there were also times where I spent too much time going through irrelevant papers and should have just worked on the problem from scratch, so I think at least later on I mostly got the balance right.

My plan for the next couple of months is a) gaining research experience on as-similar-as-possible problems b) writing up and posting parts of my models I consider valuable (and safe), and c) exploring two research problems that came up last month that still seem promising to me.

The problems in alignment that seem highest value for me to continue to work on seem to be the “conceptually fucked” ones, mostly because they still seem neglected (where one central example IMO is formalizing abstractions). I am wary of getting stuck on intractable problems forever and think it’s important to recognize when no progress is being made (by me) and move on to something more valuable. I don’t think I’ve reached that point yet for the problems I’m working on, but the secondary goal of (a) is to test my own competence at a particular class of problems so I can better know whether to give up.

Thanks, appreciate adding your perspective here @Jeremy. I found this bit pretty interesting:

My plan for the next couple of months is a) gaining research experience on as-similar-as-possible problems b) writing up and posting parts of my model I consider valuable, and c) exploring two research problems that came up last month that seem promising to me.

The problems in alignment that seem highest value for me to continue to work on seem to be the “conceptually fucked” ones, mostly because they still seem neglected. I am wary of getting stuck on intractable problems forever and think it’s important to recognize when no progress is being made (by me) and move on to something more valuable. I don’t think I’ve reached that point yet for the problems I’m working on, but the secondary goal of (a) is to test my own competence at a particular class of problems so I can better know whether to give up.

I'd be interested in hearing more details about this. A few specific things:

My plan [...] is gaining research experience on as-similar-as-possible problems.

Could you say more about what sort of similar problems you're thinking of?

And here:

is to test my own competence at a particular class of problems so I can better know whether to give up.

Do you have a sense of how you'd figure out when it's time to give up?

Could you say more about what sort of similar problems you're thinking of?

I only made a list of these the other day, so I don't have much detail on this. But here are some categories from that list:

- Soundness guarantees for optimization and inference algorithms, and an understanding of the assumptions needed to prove these.

- Toy environments where we have to specify difficult-to-observe goals for a toy-model-agent inside that environment, without cheating by putting a known-to-me-correct-ontology into the agent and specifying the goal directly in terms of that ontology.

- Problems similar to formalizing heuristic arguments.

- Toy problems that involve relatively easy types of self reference.

I think experience with these sorts of problems would have been useful for lots of sub-problems we ran into. Some of them are a little idiosyncratic to me, others on the team disagree about their relevance. I haven't turned these categories into specific problems yet.

Do you have a sense of how you'd figure out when it's time to give up?

I think mostly I judge by how excited I am about the value of the research even after internally simulating outside view perspectives, or arguing with people who disagree, and trying to sum it all together. This is similar to the advice Nate gave us a couple of weeks ago, to pursue whatever source of hope feels the most real.

I found Thomas Kwa's frustration at the lack of concrete problems to be odd, because a large part of the skill we were trying to learn was the skill of turning vague conceptual problems/confusion into concrete problems.

I think the lack of concrete problems bothers me for three reasons:

- If our goal is to get better at deconfusion, we're choosing problems where the deconfusion is too hard. When trying to gain skill at anything, you should probably match the difficulty to your skill level such that you succeed >=50% of the time, and we weren't hitting that.

- It indicates we're not making progress fast enough, and so the project is less good than we expected. Maybe this is due to inexperience, maybe there wasn't anything to find.

- It's less fun for me.

If our goal is to get better at deconfusion, we're choosing problems where the deconfusion is too hard. When trying to gain skill at anything, you should probably match the difficulty to your skill level such that you succeed >=50% of the time, and we weren't hitting that.

This point feels pretty central to what I was getting at with "what are the subskills, and what are the feedback loops", btw.

I think "actually practice the deconfusion step" would be a good thing to develop better practices around (and to, like, design a curriculum for that actually gets people to a satisfactory level on it).

IMO we had quite a number of successes at this (although of course the vast majority of the time is not spent doing the fun work of solving the concrete problems, and also the majority of the time the direction doesn't lead somewhere useful).

@Jeremy Gillen I'd be interested in hearing a couple details about some of the more successful instances according to you (in particular where you feel like you successfully deconfused yourself on a topic. And, maybe then went on to solve the concrete problem that resulted?).

A thing I'm specifically interested in here is "What are the subskills that go into the deconfusion -> concrete-problem-solution pipeline? And how can we train those subskills?".

The actual topics might be confidential, but curious if you could share more metadata about how the process worked and which bits felt hard.

I'm a bit skeptical that early-stage deconfusion is worth investing lots of resources in. There are two questions here.

- Is getting much better at early stage deconfusion possible?

- Is it a bottleneck for alignment research?

I just want to get at the first question in this comment. I think deconfusion is basically an iterative process where you go back and forth between two steps, until you get enough clarity that you slowly generate theory and testable hypotheses:

- Generate a pair of intuitions that are in tension. [1]

- Poke at these intuitions by doing philosophy and math and computer science.

I never got step 1 and don't really know how Nate does it. Maybe Jeremy or Vivek are better at it, since they had more meetings with Nate. But it's pretty mysterious to me what mental moves you do to generate intuitions, and when other people on the team try to share them they either seem dumb or inscrutable. The problem is that your intuitions have to be amenable to rigorous analysis and development. But I do feel a lot more competent at step 2.

One exercise we worked through was resolving free will, which is apparently an ancient rationalist tradition. Suppose that Asher drives by a cliff on their way to work but doesn't swerve. The conflicting intuitions are "Asher felt like they could have chosen to swerve off the cliff", and "the universe is deterministic, so Asher could not have swerved off the cliff". But to me, this felt like a confusion about the definition of the word "could", and not some exciting conflict -- it's probably only exciting when you're in a certain mindset. [edit: I elaborate in a comment]

I'm not sure how logical decision theory was initially developed, but it might fit this pattern of two intuitions in tension. Early on, people like Eliezer and Wei Dai realized some problems with non-logical decision theories. The intuitions here are that desiderata like the dominance principle imply a decision theory like CDT, but CDT loses in Newcomb's problem, which shouldn't happen predictably if you're actually rational. Eventually you become deconfused enough to say that "maximize utility under your priors" is a more important desideratum than "develop decision procedures that follow principles thought to be rational". Then you just need to generate more test cases and be concrete enough to notice subtle problems. [2]
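The tension between the dominance principle and winning at Newcomb's problem can be checked with a bit of arithmetic. The sketch below uses the usual textbook payoffs ($1,000,000 in the opaque box, $1,000 in the transparent one) and a predictor that is right 99% of the time; those numbers and the function names are assumptions for illustration, not something specified in the dialogue.

```python
BIG = 1_000_000  # opaque box contents if the predictor expected one-boxing
SMALL = 1_000    # transparent box contents, always present


def payoff(one_box: bool, predicted_one_box: bool) -> int:
    """Money received for a given choice and a given prediction."""
    opaque = BIG if predicted_one_box else 0
    return opaque if one_box else opaque + SMALL


def expected_payoff(one_box: bool, accuracy: float) -> float:
    """Expected money when the predictor is correct with probability `accuracy`."""
    right = payoff(one_box, predicted_one_box=one_box)
    wrong = payoff(one_box, predicted_one_box=not one_box)
    return accuracy * right + (1 - accuracy) * wrong


# Dominance intuition: holding the prediction fixed, two-boxing always pays more.
dominance_holds = all(
    payoff(False, prediction) > payoff(True, prediction)
    for prediction in (True, False)
)

# "Rational agents should win" intuition: against an accurate predictor,
# agents who one-box walk away with far more on average.
one_box_ev = expected_payoff(True, accuracy=0.99)
two_box_ev = expected_payoff(False, accuracy=0.99)

print(dominance_holds, one_box_ev > two_box_ev)
```

Both intuitions check out numerically, which is exactly why they are in tension: the dominance argument holds for any fixed prediction, yet the policy it recommends predictably earns less.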

IMO having some sort of exciting confusion to resolve is necessary for early stage deconfusion research; not having one implies you have no line of attack. But it's really unclear to me how to reliably get one. Also the framing of "two intuitions in tension" might be overly specific.

I think step two -- examining these intuitions to get observations and eventually turn them into theory -- is basically just normal research, but doing this in the domain of AF is harder than average because we have few examples of the systems we're trying to study. I'm being vague and leaving things out due to uncertainty about exactly how much Nate thinks is ok to share, but my guess is that standard research advice is good: Polya's book, Mark Xu's post, et cetera.

Overall it seems reasonable that people could get way better at early stage deconfusion, though there's a kind of modesty argument to overcome.

Now the second question: is early-stage deconfusion a bottleneck for alignment research? I think the answer is not in AF but maybe in interpretability. AF is just not progressing fast enough and I'd guess it's not necessary for AF theory to race ahead of our ML knowledge in order to succeed at alignment.

But even in interpretability, maybe we need to focus on getting more data (empirical feedback loops) rather than squeezing more insights out of our existing data (early-stage deconfusion). I have several reasons for this.

- The field of ML at large is very empirical.

- The neuroscientists I've talked to say that a new scanning technology that could measure individual neurons would revolutionize neuroscience, much more than a theoretical breakthrough. But in interpretability we already have this, and we're just missing the software.

- Eliezer has written about how Einstein cleverly used very limited data to discover relativity. But we could have discovered relativity easily if we observed not only the precession of Mercury, but also the drifting of GPS clocks, gravitational lensing of distant galaxies, gravitational waves, etc. [edit: aysja's comment changed my mind on this]

- I heard David Bau say something interesting at the ICML safety workshop: in the 1940s and 1950s lots of people were trying to unlock the basic mysteries of life from first principles. How was hereditary information transmitted? Von Neumann designed a universal constructor in a cellular automaton, and even managed to reason that hereditary information was transmitted digitally for error correction, but didn't get further. But it was Crick, Franklin, and Watson who used crystallography data to discover the structure of DNA, unraveling far more mysteries. Since then basically all advances in biochemistry have been empirical. Biochemistry is a case study where philosophy and theory failed to solve the problems but empirical work succeeded, and maybe interpretability and intelligence are similar. [edit: aysja's comment adds important context; I suggest reading this and my reply]

- It's a compelling idea that there are simple principles behind intelligence that we could discover using theory, but it probably would have been equally compelling to Von Neumann that there are simple principles behind biochemistry that could be discovered using theory. In reality theory did not get us nearly far enough to even start designing artificial bacteria whose descendants can only survive on cellulose (or whatever), so probably theory won't get us far in designing artificial agents that will only ever want to maximize diamond.

I don't feel like writing a long comment for this dialogue right now, but want to note that:

- I'm still somewhat excited about the general direction I started on around end of June (which definitely wouldn't have happened without Nate's mentorship), and it's a live direction I'm working on

- I don't share the sentiment of not having enough of a concrete problem. I don't think the thing I'm working on is close to being a formally defined problem, but it still feels concrete enough to work on. I think I'm naturally more comfortable than Thomas with open-ended problems where there's some intuitive "spirit of the problem" that's load-bearing. It's also the case that non-formalized problems depend on having a particular intuition, so it's possible for one person to have enough sense of the problem to work on it but not be able to transfer that to anyone else.

I think for my feelings about the project I fall somewhere between Thomas Kwa and Jeremy Gillen. I’m pretty disappointed by our lack of progress on object level things, but I do feel like we managed to learn some stuff that would be hard to learn elsewhere.

Our research didn't initially focus on trying to understand cognition, although that is where we ended up. At the start we were nominally trying to do something like “understand the Sharp Left Turn”, maybe eventually with the goal of writing up the equivalent of Risks from Learned Optimization. Initially we were mainly asking the question “in what ways do various alignment agendas break (usually due to the SLT), and how can we get around these failures?”. I think we weren’t entirely unsuccessful here, but we also didn’t understand the SLT or Nate’s models at this point and so I don’t think our work was exceptional. Here we were still thinking of things from a more "mainstream alignment" perspective.

Following this we started focusing on trying to understand Nate’s worldview and models. This pushed the project more toward trying to understand cognition. This is because Nate (or Peter’s Straw-Nate) thinks that you need to do this in order to see failure modes, and this is the ~only way to build a safe AGI.

Later it seemed like Nate thought that the goal of the project from the beginning was to understand cognition, but I don’t remember this being the case. I think if we had had this frame from the start then we would have probably focused on different (and in my opinion better) things early in the project. I guess that (Straw-)Nate didn’t want to say this explicitly because he either wanted us to arrive at good directions independently as a test of our research taste or because he thinks that telling people to do things doesn’t go well. I think the research taste thing is partially fair, although it would have been good to be explicit about this. I also think that you can tell people to do things and sometimes they will do them.

Later in the project (around June 2023) I felt like there was something of a step change, where we understood what Nate wanted out of us and also started trying to learn various specific research methods. (We had also done a fair bit of learning Nate's research methods before this as well.) At this point it was too little, too late though.

I think we often lost multiple months by working on things that Nate didn’t see as promising, and I think this should have been avoidable.

I also want to echo Thomas’s points about difficulties communicating. Nate is certainly brilliant, he’s able to think in a pretty unique way, but this often led to us talking past each other using different ontologies. I think these conversations were also just pretty uncomfortable and difficult in the ways Thomas described.

I just said a lot of negative comments but I do think that we (or some of us) got a lot out of the project and working with Nate. There is a pretty specific worldview and picture of the alignment problem that I don’t think you can really get from just reading MIRI’s public outputs. I think I am just much better at thinking about alignment than I was at the start, and I can (somewhat) put on my Nate-hat and be like “ahh yes, this alignment proposal will break for these reasons”. I really feel like much less of an idiot when it comes to thinking about alignment, and looking back on what I thought a year ago I can see ways in which my thinking was confused or fuzzy.

I’m currently in a process of stepping back and trying to work out what I actually believe. I have some complicated feelings like “Yep, the project does seem to be a failure, but we do (somewhat) understand Nate’s picture of the alignment problem, and if it is correct then this is maybe the ~only kind of technical research that helps”. There’s some vibe that almost everyone else is working in some confused ontology that just can’t see the important parts. If you buy the MIRI worldview, this does mean that it is high value to work on problems that are “conceptually fucked”; but if you don’t then I think working on these is way less valuable.

I’m also much less pessimistic about the possibility of communicating with people than Nate/MIRI seem to be. It really does seem like if you’re correct about something, you should be able to talk to people and convince them. (“People” here being other alignment people; I’m a bit less optimistic about policy people, but not totally hopeless here.) I am currently trying to work out whether, even if we fully buy the MIRI worldview of alignment, the technical side is too hard and we should just do communications and politics instead.

I’m also excited for all the things Jeremy said he was planning on doing for the next couple of months.

@Jeremy Gillen I'd be interested in hearing a couple details about some of the more successful instances according to you (in particular where you feel like you successfully deconfused yourself on a topic. And, maybe then went on to solve the concrete problem that resulted?).

Here’s one example that I think was fairly typical, which helped me think more clearly about several things but didn’t lead to any insights that were new (for other people). I initially wanted a simple-as-possible model of an agent that could learn more accurate beliefs about the world while doing something like “keeping the same ontology” or at least “not changing what the goal means”. One model I started with was an agent built around a Bayes net model of the world. This lets it overwrite some parts of the model with a more detailed model, without affecting the goal (kinda, sometimes, there is some detail here about how it helps that I’m omitting because it could get long. Simple version is: in Solomonoff induction each hypothesis is completely separate, and in a Bayes net you can construct it such that models overlap and share variables).

There’s a couple of directions we went from there, but one of them that Peter pointed out was “it looks like this agent can’t plan to acquire evidence”. So the next step was working through a few examples and modifying the model until it looked like it could work. This led us directly to the problem of the agent having to model itself planning in the future. So there were further iterations of proposing some patch, working through the implications in concrete problems, noticing ways that the new model was broken, and iterating further. Working through that gave me a lot more understanding and respect for work on Vingean reflection and logical induction, because we ran into a motivation for some of that work without really intending to.
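As a rough illustration of why "planning to acquire evidence" forces the agent to model its own future beliefs and choices, here is a textbook value-of-information calculation. It is not the model described above: the two-box setup, the utility of 1 for finding the prize, and all function names are assumptions made up for this sketch.

```python
def best_expected_utility(p_a: float) -> float:
    """Utility 1 for opening the box with the prize; open the likelier box."""
    return max(p_a, 1 - p_a)


def posterior(p_a: float, reliability: float, signal_says_a: bool) -> float:
    """Bayes update on a noisy signal that names the right box w.p. `reliability`."""
    like_a = reliability if signal_says_a else 1 - reliability
    like_b = 1 - reliability if signal_says_a else reliability
    return like_a * p_a / (like_a * p_a + like_b * (1 - p_a))


def value_of_looking(p_a: float, reliability: float) -> float:
    """Expected gain from observing the signal before choosing a box.

    The agent has to predict how its future self will update and then act,
    which is the self-modeling step mentioned in the text.
    """
    p_signal_a = reliability * p_a + (1 - reliability) * (1 - p_a)
    eu_after = (
        p_signal_a * best_expected_utility(posterior(p_a, reliability, True))
        + (1 - p_signal_a) * best_expected_utility(posterior(p_a, reliability, False))
    )
    return eu_after - best_expected_utility(p_a)


print(value_of_looking(p_a=0.5, reliability=0.9))  # informative signal
print(value_of_looking(p_a=0.5, reliability=0.5))  # uninformative signal
```

A planner that scores actions only against its current beliefs would rate "look" as worthless, since looking never yields the prize directly. The gain only shows up once the plan includes the agent's own later update and decision.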

This was typical in that it didn’t feel completely successful (there are still plenty of unpatched problems), but we did patch a few and gained a lot of understanding along the way. And the work meandered through several iterations/branches of “vague confusion → basic model that tries to solve just that problem → more concrete model → noticing issue with the concrete model (i.e. problems that it can’t solve or ways that it isn’t clean) → modifying to satisfy one of the other constraints”.

The main bottleneck for me was making a concrete model. Usually potential problems felt small and patchable until I made a tiny super concrete version of the problem and tried to work through patching it. This was hard because it had to be simple enough to work through examples on paper while capturing the intuitions I wanted. I expect if I had a bigger library of toy models and toy problems in my head and had experience messing around with them on paper this would have helped. And building up my experience working with different types of toy models was a really valuable part of the work.

That's cool.

I'm not sure how easy this is to answer without getting extremely complicated, but I'd be interested in understanding what the model here actually "was". Like, was it a literal computer program that you could literally run that could do simple tasks, or like a sketch of a model that could hypothetically run with infinite compute that you reasoned about, or some third thing?

Gotcha.

Following up on Peter's comment:

At the start we were nominally trying to do something like “understand the Sharp Left Turn”, maybe eventually with the goal of writing up the equivalent of Risks from Learned Optimization. At the start we were mainly asking the question “in what ways do various alignment agendas break (usually due to the SLT), and how can we get around these failures?”.

Did you ever write up anything close to "Risks from Learned Optimization [for 'the Sharp Left Turn']"?

(It sounds like the answer is mostly "no", but curious if there's like an 80/20 that you could do fairly easily that'd make for a better explanation of the concept than what's currently out there, even if it's not as comprehensive as Risks from Learned Optimization)

I think the sharp left turn is not really a well-defined failure mode. It's just the observation that under some conditions (e.g. the presence of internal feedback, or when alignment is enforced by some shallow overseer), capabilities will sometimes generalize farther than alignment, and the claim that practical AGI designs will very likely have such conditions. If generality of capabilities, dangerous capability levels, and something that breaks your safety invariants all come at the same time, alignment is harder. Fast takeoff makes it worse but is not strictly required. As for why this happens, Nate has models but I may or may not believe them, and haven't even heard some of them due to infosec.

As for whether we have RLO for the sharp left turn, the answer is no. We thought a bit about under what circumstances capabilities generalize farther than alignment, but I don't think our thoughts are groundbreaking here. There are also probably points related to the sharp left turn proper that I feel we have a better appreciation of. I think we'll write these up if we have any idea how to, or maybe it'll be another dialogue.

I will say that Nate's original post on the sharp left turn now feels perfectly natural to me rather than vague and confusing, even if I do have <80% that it is basically true. (I disagree with the part where Nate claims alignment is of comparable difficulty to other scientific problems humanity has solved because I think problem difficulties are ~power-law distributed, but it's not crazy if we have the ability to iterate on AGI designs and just need to solve the engineering problem.)

We wrote this dialogue without input from Nate Soares then reached out to him afterwards. I think his comment adds important context:

at risk of giving the false impression that i've done more than skim the beginning of this conversation plus a smattering of the replies from different people:

on an extremely sparse skim, the main thing that feels missing to me is context -- the context was not that i was like "hey, want some mentorship?", the context (iirc, which i may not at a year's remove) is that vivek was like "hey, what do you think of all these attempts to think concretely about sharp left turn?" and i was like "i'm not particularly sold on any of the arguments but it's more of an attempt at concrete thinking than i usually see" and vivek was like "maybe you should mentor me and some of my friends" and i was like "i'm happy to throw money at you but am reluctant to invest significant personal attention" and vivek was like "what if we settle for intermittent personal attention (while being sad about this)", and we gave it a sad/grudging shot. (as, from my perspective, explains a bunch of the suboptimal stuff. where, without that context, a bunch of the suboptimal stuff looks more like unforced errors.)

the other note i'd maybe make is that something feels off to me also about the context of the "no concrete problems" stuff, in a way that it's harder for me to quickly put my finger on. an inarticulate attempt to gesture at it is that it seemed to me like thomas kwa was often like "but how can i learn these abstract skills you speak of by studying GPT-2.5 small's weights" and i was like "boy wouldn't that be nice" and he was like "would this fake project work?" and i was like "i don't see how, but if it feels to you like you have something to learn from doing that project then go for it (part of what i'm trying to convey here is a method of following your own curiosity and learning from the results)" and thomas was like "ok i tried it but it felt very fake" and i was like "look dude if i saw a way to solve alignment by concrete personal experiments on GPT-2.5 small i'd be doing them"

a related inarticulate attempt is that the parts i have skimmed have caused me to want to say something like "*i'm* not the one whose hopes were nominally driving this operation".

maybe that's enough to biangulate my sense that something was missing here, but probably not /shrug.

Meta: I don’t want this comment to be taken as “I disagree with everything you (Thomas) said.” I do think the question of what to do when you have an opaque, potentially intractable problem is not obvious, and I don’t want to come across as saying that I have the definitive answer, or anything like that. It’s tricky to know what to do, here, and I certainly think it makes sense to focus on more concrete problems if deconfusion work didn’t seem that useful to you.

That said, at a high-level I feel pretty strongly about investing in early-stage deconfusion work, and I disagree with many of the object-level claims you made suggesting otherwise. For instance:

The neuroscientists I've talked to say that a new scanning technology that could measure individual neurons would revolutionize neuroscience, much more than a theoretical breakthrough. But in interpretability we already have this, and we're just missing the software.

It seems to me like the history of neuroscience should inspire the opposite conclusion: a hundred years of increasingly much data collection at finer and finer resolution, and yet, we still have a field that even many neuroscientists agree barely understands anything. I did undergrad and grad school in neuroscience and can at the very least say that this was also my conclusion. The main problem, in my opinion, is that theory usually tells us which facts to collect. Without it—without even a proto-theory or a rough guess, as with “model-free data collection” approaches—you are basically just taking shots in the dark and hoping that if you collect a truly massive amount of data, and somehow search over it for regularities, that theory will emerge. This seems pretty hopeless to me, and entirely backwards from how science has historically progressed.

It seems similarly pretty hopeless to me to expect a “revolution” out of tabulating features of the brain at low-enough resolution. Like, I certainly buy that it gets us some cool insights, much like every other imaging advance has gotten us some cool insights. But I don’t think the history of neuroscience really predicts a “revolution,” here. Aside from the computational costs of “understanding” an object in such a way, I just don’t really buy that you’re guaranteed to find all the relevant regularities. You can never collect *all* the data, you have to make choices and tradeoffs when you measure the world, and without a theory to tell you which features are meaningfully irrelevant and can be ignored, it’s hard to know that you’re ultimately looking at the right thing.

I ran into this problem, for instance, when I was researching cortical uniformity. Academia has amassed a truly gargantuan collection of papers on the structural properties of the human neocortex. What on Earth do any of these papers say about how algorithmically uniform the brain is? As far as I can tell, pretty much close to zero, because we have no idea how the structural properties of the cortex relate to the functional ones, and so who’s to say that “neuron subtype A is more dense in the frontal cortex relative to the visual cortex” is a meaningful finding or not? I worry that other “shot in the dark” data collection methods will suffer similar setbacks.

Eliezer has written about how Einstein cleverly used very limited data to discover relativity. But we could have discovered relativity easily if we observed not only the precession of Mercury, but also the drifting of GPS clocks, gravitational lensing of distant galaxies, gravitational waves, etc.

It’s of course difficult to say how science might have progressed counterfactually, but I find it pretty hard to believe that relativity would have been “discovered easily” were we to have had a bunch of data staring us in the face. In general, I think it’s very easy to underestimate how difficult it is to come up with new concepts. I felt this way when I was reading about Darwin and how it took him over a year to go from realizing that “artificial selection is the means by which breeders introduce changes,” to realizing that “natural selection is the means by which changes are introduced in the wild.” But then I spent a long time in his shoes, so to speak, operating from within the concepts he had available to him at the time, and I became more humbled. For instance, among other things, it seems like a leap to go from “a human uses their intellect to actively select” to “nature ends up acting like a selector, in the sense that its conditions favor some traits for survival over others.” These feel like quite different “types” of things, in some ways.

In general, I suspect it’s easy to take the concepts we already have, look over past data, and assume it would have been obvious. But I think the history of science again speaks to the contrary: scientific breakthroughs are rare, and I don’t think it’s usually the case that they’re rare because of a lack of data, but because they require looking at that data differently. Perhaps data on gravitational lensing may have roused scientists to notice that there were anomalies, and may have eventually led to general relativity. But the actual process of taking the anomalies and turning that into a theory is, I think, really hard. Theories don’t just pop out wholesale when you have enough data, they take serious work.

I heard David Bau say something interesting at the ICML safety workshop: in the 1940s and 1950s lots of people were trying to unlock the basic mysteries of life from first principles. How was hereditary information transmitted? Von Neumann designed a universal constructor in a cellular automaton, and even managed to reason that hereditary information was transmitted digitally for error correction, but didn't get further. But it was Crick, Franklin, and Watson who used crystallography data to discover the structure of DNA, unraveling far more mysteries. Since then basically all advances in biochemistry have been empirical. Biochemistry is a case study where philosophy and theory failed to solve the problems but empirical work succeeded, and maybe interpretability and intelligence are similar.

This story misses some pretty important pieces. For instance, Schrödinger predicted basic features about DNA (that it was an aperiodic crystal) using first principles in his book What Is Life?, published in 1944. The basic reasoning is that in order to stably encode genetic information, the molecule should itself be stable, i.e., a crystal. But to encode a variety of information, rather than the same thing repeated indefinitely, it needs to be aperiodic. An aperiodic crystal is a molecule that can use a few primitives to encode near infinite possibilities, in a stable way. His book was very influential, and Watson and Crick both credited Schrödinger with the theoretical ideas that guided their search. I also suspect their search went much faster than it would have otherwise; many biologists at the time thought that the hereditary molecule was a protein, of which there are tens of millions in a typical cell.

But, more importantly, I would certainly not say that biochemistry is an area where empirical work has succeeded to nearly the extent that we might hope it to. Like, we still can’t cure cancer, or aging, or any of the myriad medical problems people have to endure; we still can’t even define “life” in a reasonable way, or answer basic questions like “why do arms come out basically the same size?” The discovery of DNA was certainly huge, and helpful, but I would say that we’re still quite far from a major success story with biology.

My guess is that it is precisely because we lack theory that we are unable to answer these basic questions, and to advance medicine as much as we want. Certainly the “tabulate indefinitely” approach will continue pushing the needle on biological research, but I doubt it is going to get us anywhere near the gains that, e.g., “the hereditary molecule is an aperiodic crystal” did.

And while it’s certainly possible that biology, intelligence, agency and so on are just not amenable to the cleave-reality-at-its-joints type of clarity one gets from scientific inquiry, I’m pretty skeptical that this is the world we in fact live in, for a few reasons.

For one, it seems to me that practically no one is trying to find theories in biology. It is common for biologists (even bright-eyed, young PhDs at elite universities) to say things like (and in some cases this exact sentence): “there are no general theories in biology because biology is just chemistry which is just physics.” These are people at the beginning of their careers, throwing in the towel before they’ve even started! Needless to say, this take is clearly not true in all generality, because it would anti-predict natural selection. It would also, I think, anti-predict Newtonian mechanics (“there are no general theories of motion because motion is just the motion of chemicals which is just the motion of particles which is just physics”).

Secondly, I think that practically all scientific disciplines look messy, ad-hoc, and empirical before we get theories that tie it together, and that this does not on its own suggest biology is a theoretically bankrupt field. E.g., we had steam engines before we knew about thermodynamics, but they were kind of ad-hoc, messy contraptions, because we didn’t really understand what variables were causing the “work.” Likewise, naturalism before Darwin was largely compendiums upon compendiums of people being like “I saw this [animal/fossil/plant/rock] here, doing this!” Science before theory often looks like this, I think.

Third: I’m just like, look guys, I don’t really know what to tell you, but when I look at the world and I see intelligences doing stuff, I sense deep principles. It’s a hunch, to be sure, and kind of hard to justify, but it feels very obvious to me. And if there are deep principles to be had, then I sure as hell want to find them. Because it’s embarrassing that at this point we don’t even know what intelligence is, nor agency, nor abstractions: how to measure any of it, predict when it will increase or not. These are the gears that are going to move our world, for better or for worse, and I at least want my hands on the steering wheel when they do.

I think that sometimes people don’t really know what to envision with theoretical work on alignment, or “agent foundations”-style work. My own vision is quite simple: I want to do great science, as great science has historically been done, and to figure out what in god’s name any of these phenomena are. I want to be able to measure that which threatens our existence, such that we may learn to control it. And even though I am of course not certain this approach is workable, it feels very important to me to try. I think there is a strong case for there being a shot, here, and I want us to take it.

I did undergrad and grad school in neuroscience and can at the very least say that this was also my conclusion.

I remember the introductory lecture for the Cognitive Neuroscience course I took at Oxford. I won't mention the professor's name, because he's got his own lab and is all senior and stuff, and might not want his blunt view to be public -- but his take was "this field is 95% nonsense. I'll try to talk about the 5% that isn't". Here's a lecture slide:

Lol possibly someone should try to make this professor work for Steven Byrnes / on his agenda.

Thanks, I really like this comment. Here are some points about metascience I agree and disagree with, and how this fits into my framework for thinking about deconfusion vs data collection in AI alignment.

- I tentatively think you're right about relativity, though I also feel out of my depth here. [1]

- David Bau must have mentioned the Schrödinger book but I forgot about it, thanks for the correction. The fact that ideas like this told Watson and Crick where to look definitely seems important.

- Overall, I agree that a good theoretical understanding guides further experiment and discovery early on in a field.

- However, I don't think curing cancer or defining life are bottlenecked on deconfusion. [2]

- For curing cancer, we know the basic mechanisms behind cancer and understand that they're varied and complex. We have categorized dozens of oncogenes of about 7 different types, and equally many ways that organisms defend against cancer. It seems unlikely that the cure for cancer will depend on some unified theory of cancer, and much more plausible that it'll be due to investments in experiment and engineering. It was mostly engineering that gave us mRNA vaccines, and a mix of all three that allowed CRISPR.

- For defining life, we already have edge cases like viruses and endosymbiotic organisms, and understand pretty well which things can maintain homeostasis, reproduce, etc. in what circumstances. It also seems unlikely that someone will draw a much sharper boundary around life, especially without lots more useful data.

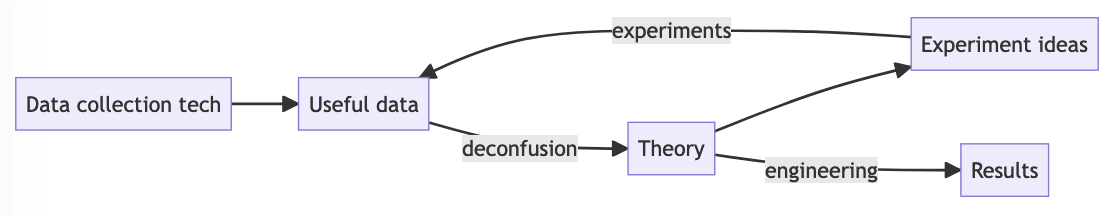

My model of the basic process of science currently looks like this:

Note that I distinguish deconfusion (what you can invest in) from theory (the output). At any time, there are various returns to investing in data collection tech, experiments, and deconfusion, and returns diminish with the amount invested. I claim that in both physics and biology, we went through an early phase where the bottleneck was theory and returns to deconfusion were high, and currently the fields are relatively mature, such that the bottlenecks have shifted to experiment and engineering, but with theory still playing a role.
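A toy sketch of the "diminishing returns, shifting bottleneck" claim, under loudly made-up assumptions: each activity's payoff is `scale * log(1 + units_invested)`, the scales are arbitrary, and investment goes greedily to whichever activity has the highest marginal return. Nothing here is fitted to any real field; `allocate` and its parameters are hypothetical.

```python
import math


def allocate(scales: dict[str, float], budget: int) -> dict[str, int]:
    """Greedily spend `budget` units, one at a time, on the best marginal return.

    Assumed payoff curve per activity: scale * log(1 + units), which is concave,
    so each extra unit in the same activity is worth less than the previous one.
    """
    invested = {name: 0 for name in scales}

    def marginal(name: str) -> float:
        x = invested[name]
        return scales[name] * (math.log(2 + x) - math.log(1 + x))

    for _ in range(budget):
        best = max(scales, key=marginal)
        invested[best] += 1
    return invested


# Illustrative scales only: experiment starts out three times as productive.
scales = {"deconfusion": 1.0, "experiment": 3.0}
for budget in (3, 4, 10):
    print(budget, allocate(scales, budget))
```

In this toy, the first few units all go to the activity with the larger scale, and the other activity only becomes the best marginal investment once the first has been pushed far enough down its curve. Which activity is "the bottleneck" depends on how much has already been invested, not only on the scales.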

In AI, we're in a weird situation:

- We feel pretty confused about basic concepts like agency, suggesting that we're early and deconfusion is valuable.

- Machine learning is a huge field. If there are diminishing returns to deconfusion, this means experiment and data collection are more valuable than deconfusion.

- Machine learning is already doing impressive things primarily on the back of engineering, without much reliance on the type of theory that deconfusion generates alone (deep, simple relationships between things).

- But even if engineering alone is enough to build AGI, we need theory for alignment.

- In biology, we know cancer is complex and unlikely to be understood by a deep simple theory, but in AI, we don't know whether intelligence is complex.

I'm not sure what to make of all this, and this comment is too long already, but hopefully I've laid out a frame that we can roughly agree on.

[1] When writing the dialogue I thought the hard part of special relativity was discovering the Lorentz transformations (which GPS clock drift observations would make obvious), but Lorentz did this between 1892 and 1904 and it took until 1905 for Einstein to discover the theory of special relativity. I missed the point about theory guiding experiment earlier, and without relativity we would not have built gravitational wave detectors. I'm not sure whether this also applies to gravitational lensing or redshift.

[2] I also disagree with the idea that "practically no one is trying to find theories in biology". Theoretical biology seems like a decently large field-- probably much larger than it was in 1950-- and biologists use mathematical models all the time.

I don't have the energy to contribute actual thoughts, but here are a few links that may be relevant to this conversation:

- Sequencing is the new microscope, by Laura Deming

- On whether neuroscience is primarily data, rather than theory, bottlenecked:

  - Could a neuroscientist understand a microprocessor?, by Eric Jonas

  - This footnote on computational neuroscience, by Jascha Sohl-Dickstein

we still can’t even define “life” in a reasonable way, or answer basic questions like “why do arms come out basically the same size?”

Such a definition seems futile (I recommend the rest of the word sequence also). Biology already does a great job explaining what and why some things are alive. We are not going around thinking a rock is "alive". Or what exactly did you have in mind there?

Same quote, emphasis on the basic question.

What’s wrong with “Left and right limbs come out basically the same size because it’s the same construction plan”?

I wrote this dialogue to stimulate object-level discussion on things like how infohazard policies slow down research, how new researchers can stop flailing around so much, whether deconfusion is a bottleneck to alignment, or the sharp left turn. I’m a bit sad that most of the comments are about exactly how much emotional damage Nate tends to cause people and whether this is normal/acceptable: it seems like a worthwhile conversation to happen somewhere, but now that many people have weighed in with experiences from other contexts, including non-research colleagues, a romantic partner, etc., I think all the drama distracts from the discussions I wanted to have. The LW team will be moving many comments to an escape-valve post; after this I’ll have a pretty low bar for downvoting comments I think are off-topic here.

The model was something like: Nate and Eliezer have a mindset that's good for both capabilities and alignment, and so if we talk to other alignment researchers about our work, the mindset will diffuse into the alignment community, and thence to OpenAI, where it would speed up capabilities. I think we didn't have enough evidence to believe this, and should have shared more.

What evidence were you working off of? This is an extraordinary thing to believe.

First I should note that Nate is the one who most believed this; that we not share ideas that come from Nate was a precondition of working with him. [edit: this wasn't demanded by Nate except in a couple of cases, but in practice we preferred to get Nate's input because his models were different from ours.]

With that out of the way, it doesn't seem super implausible to us that the mindset is useful, given that MIRI had previously invented out of the box things like logical induction and logical decision theory, and that many of us feel like we learned a lot over the past year. On inside view I have much better ideas than I did a year ago, although it's unclear how much to attribute to the Nate mindset. To estimate this it's super unclear how to make a reference class-- I'd maybe guess at the base rate of mindsets transferring from niche fields to large fields and adjust from there. We spent way too much time discussing how. Let's say there's a 15%* chance of usefulness.

As for whether the mindset would diffuse conditional on it being useful, this seems pretty plausible, maybe 15% if we're careful and 50% if we talk to lots of people but don't publish? Scientific fields are pretty good at spreading useful ideas.

So I think the whole proposition is unlikely but not "extraordinary", maybe like 2.25-7.5%. Previously I was more confident in some of the methods, so I would have given 45% for useful, making p(danger) 7%-22.5%. The scenario we were worried about is if our team had a low probability of big success (e.g. due to inexperience), but sharing ideas would cause a fixed 15% chance of big capabilities externalities regardless of success. The project could easily become -EV this way.
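As a sanity check on the arithmetic, here is a minimal sketch that multiplies the rough estimates above. It assumes, as the comment does implicitly, that p(danger) is just p(useful) times p(diffuses | useful); the variable and function names are illustrative only. The products come out to roughly 2.25% to 7.5% for the current estimate and 6.75% to 22.5% for the earlier one.

```python
# All inputs are the very rough numbers from the comment, not measured quantities.
P_USEFUL_NOW = 0.15       # current guess that the mindset is useful
P_USEFUL_EARLIER = 0.45   # earlier, more confident guess
P_DIFFUSE_CAREFUL = 0.15  # diffusion if the team is careful
P_DIFFUSE_OPEN = 0.50     # diffusion if talking to many people without publishing


def p_danger(p_useful: float, p_diffuse_given_useful: float) -> float:
    """Joint probability that the mindset is useful AND diffuses."""
    return p_useful * p_diffuse_given_useful


def danger_range(p_useful: float) -> tuple[float, float]:
    """(careful, open) bounds on p(danger) for a given p(useful)."""
    return (
        p_danger(p_useful, P_DIFFUSE_CAREFUL),
        p_danger(p_useful, P_DIFFUSE_OPEN),
    )


current_range = danger_range(P_USEFUL_NOW)
earlier_range = danger_range(P_USEFUL_EARLIER)

for label, (low, high) in [("current", current_range), ("earlier", earlier_range)]:
    print(f"{label}: {low:.2%} to {high:.2%}")
```

Since every input is a guess, the useful takeaway is the order of magnitude, not the exact percentages.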

Some other thoughts:

- Nate trusts his inside view more than any of our attempts to construct an argument legible to me, which I think distorted our attempts to discuss this.

- It's hard to tell 10% from 1% chances for propositions like this, which is also one of the problems in working on long-shot, hopefully high EV projects.

- Part of why I wanted the project to become less private as it went on is that we generated actual research directions and would only have to share object level to get feedback on our ideas.

* Every number in this comment is very rough

This isn't quite how I'd frame the question.

[edit: My understanding is that] Eliezer and Nate believe this. I think it's quite reasonable for other people to be skeptical of it.

Nate and Eliezer can choose to only work closely with, or mentor, people who opt into some kind of confidentiality clause about it. People who are skeptical or don't think it's worth the costs can choose not to opt into it.

I have heard a few people talk about MIRI confidentiality norms being harmful to them in various ways, so I do also think it's quite reasonable for people to be more cautious about opting into working with Nate or Eliezer if they don't think it's worth the cost.

Presumably, Nate/Eliezer aren't willing to talk much about this precisely because they think it'd leak capabilities. You might think they're wrong, or that they haven't justified that, but, like, the people who have a stake in this are the people who are deciding whether to work with them. (I think there's also a question of "should Eliezer/Nate have a reputation as people who have a mindset that's good for alignment and capabilities that'd be bad to leak?", and I'd say the answer should be "not any moreso than you can detect from their public writings, and whatever your personal chains of trust with people who have worked closely with them that you've talked to.")

I do think this leaves some problems. I have heard about the MIRI confidentiality norms being fairly paralyzing for some people in important ways. But something about Muireall's comment felt like a wrong frame to me.

(I am pretty uncomfortable with all the "Nate / Eliezer" going on here. Let's at least let people's misunderstandings of me be limited to me personally, and not bleed over into Eliezer!)

(In terms of the allegedly-extraordinary belief, I recommend keeping in mind jimrandomh's note on Fork Hazards. I have probability mass on the hypothesis that I have ideas that could speed up capabilities if I put my mind to it, as is a very different state of affairs from being confident that any of my ideas works. Most ideas don't work!)

(Separately, the infosharing agreement that I set up with Vivek--as was perhaps not successfully relayed to the rest of the team, though I tried to express this to the whole team on various occasions--was one where they owe their privacy obligations to Vivek and his own best judgements, not to me.)

That's useful additional information, thanks.

I made a slight edit to my previous comment to make my epistemic state more clear.

Fwiw, I feel like I have a pretty crisp sense of "Nate's and Eliezer's communication styles are actually pretty different" (I noticed myself writing out a similar comment about communication styles under the Turntrout thread that initially said "Nate and Eliezer" a lot, and then decided that comment didn't make sense to publish as-is), but I don't actually have much of a sense of the difference between Nate, Eliezer, and MIRI-as-a-whole with regard to "the mindset" and "confidentiality norms".

Sure. I only meant to use Thomas's frame, where it sounds like Thomas did originally accept Nate's model on some evidence, but now feels it wasn't enough evidence. What was originally persuasive enough to opt in? I haven't followed all Nate's or Eliezer's public writing, so I'd be plenty interested in an answer that draws only from what someone can detect from their public writing. I don't mean to demand evidence from behind the confidentiality screen, even if that's the main kind of evidence that exists.

Separately, I am skeptical and a little confused as to what this could even look like, but that's not what I meant to express in my comment.

Flagging an inconvenient acronym clash between SLT used for Sharp Left Turn and Singular Learning Theory (have seen both)!

I vote singular learning theory gets priority (if there was ever a situation where one needed to get priority). I intuitively feel like research agendas or communities need an acronym more than concepts. Possibly because in the former case the meaning of the phrase becomes more detached from the individual meaning of the words than it does in the latter.

I’m also much less pessimistic about the possibility of communicating with people than Nate/MIRI seem to be.

FWIW this is also my take (I have done comms work for MIRI in the past, and am currently doing it part-time) but I'm not sure I have any more communication successes than Nate/MIRI have had, so it's not clear to me this is skill talking on my part instead of inexperience.

Thanks for sharing! I was wondering what happened with that project & found this helpful (and would have found it even more helpful if I didn't already know and talk with some of you).

I'd love to see y'all write more, if you feel like it. E.g. here's a prompt:

You said:

Solving the full problem despite reflection / metacognition seems pretty out of reach for now. In the worst case, if an agent reflects, it can be taken over by subagents, refactor all of its concepts into a more efficient language, invent a new branch of moral philosophy that changes its priorities, or a dozen other things. There's just way too much to worry about, and the ability to do these things is -- at least in humans -- possibly tightly connected to why we're good at science.

Can you elaborate? I'd love to see a complete list of all the problems you know of (the dozen things!). I'd also love to see it annotated with commentary about the extent to which you expect these problems to arise in practice vs. probably only if we get unlucky. Another nice thing to include would be a definition of reflection/metacognition. Finally it would be great to say some words about why ability to do reflection/metacognition might be tightly connected to ability to do science.

I'm less concerned about the fact that there might be a dozen different problems (and therefore don't have an explicit list), and more concerned about the fact that we don't understand the mathematical structure behind metacognition (if there even is something to find), and therefore can't yet characterize it or engineer it to be safe. We were trying to make a big list early on, but gradually shifted to resolving confusions and trying to make concrete models of the systems and how these problems arise.

Off the top of my head, by metacognition I mean something like: reasoning that chains through models of yourself, or applying your planning process to your own planning process.

On why reflection/metacognition might be connected to general science ability, I don't like to speculate on capabilities, but just imagine that the scientists in the Apollo program were unable to examine and refine their research processes-- I think they would likely fail.

I agree that just because we've thought hard and made a big list, doesn't mean the list is exhaustive. Indeed the longer the list we CAN find, the higher the probability that there are additional things we haven't found yet...

But I still think having a list would be pretty helpful. If we are trying to grok the shape of the problem, it helps to have many diverse examples.

Re: metacognition: OK, that's a pretty broad definition I guess. Makes the "why is this important for doing science" question easy to answer. Arguably GPT4 already does metacognition to some extent, at least in ARC Evals and when in an AutoGPT harness, and probably not very skillfully.

ETA: so, to be clear, I'm not saying you were wrong to move from the draft list to making models; I'm saying if you have time & energy to write up the list, that would help me along in my own journey towards making models & generally understanding the problem better. And probably other readers besides me also.

The issues you describe seem fairly similar to Academia, where you get a PhD advisor but don't talk to them very often, in large part because they're busy.

FWIW this depends on the advisor, sometimes you do get to talk to the advisor relatively often.

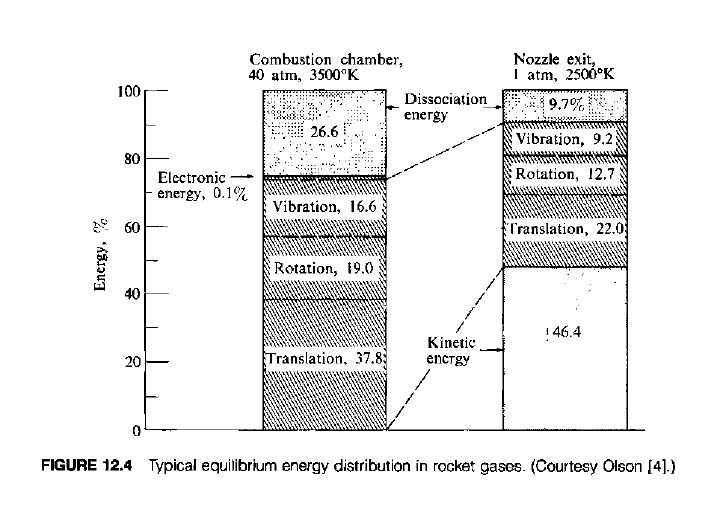

[1]: To clarify what I mean by "pairs of intuitions", here are two that seem more live to me:

- Is it viable to rely on incomplete preferences for corrigibility? Sami Petersen showed incomplete agents don't have to violate some consistency properties. But it's still super unclear how one would keep an agent's preferences from naturally coming into conflict with yours, if the agent can build a moon rocket ten times faster than you.

- What's going on with low-molecular-weight exhaust and rocket engine efficiency? There's a claim on the internet that since rocket engine efficiency is proportional to the velocity of exhaust molecules, and since $E = \frac{1}{2}mv^2$, lighter exhaust molecules give you higher velocity for a given amount of energy. This is validated by the fact that in hydrogen-oxygen rockets, the optimum is achieved when using an excess of lighter hydrogen molecules. But this doesn't make sense, because energy is limited and you can't just double the number of molecules while keeping energy per molecule fixed. (I might write this up)
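To make the claim in the rocket example concrete, here is a minimal sketch assuming the relation in question is the kinetic-energy formula, E = ½mv², applied per molecule. It only illustrates the claim's premise (equal energy per molecule implies lighter molecules move faster); it does not address the objection that total energy is limited. The energy value is an arbitrary placeholder, and the function name is hypothetical.

```python
import math

ATOMIC_MASS_UNIT_KG = 1.66053906660e-27  # kilograms per unified atomic mass unit


def speed_at_fixed_energy(energy_joules: float, mass_kg: float) -> float:
    """Solve E = (1/2) m v^2 for v."""
    return math.sqrt(2.0 * energy_joules / mass_kg)


# Arbitrary illustrative kinetic energy per molecule (an assumption, not a real engine figure).
energy_per_molecule = 1.0e-19  # joules

mass_h2 = 2.016 * ATOMIC_MASS_UNIT_KG    # hydrogen molecule
mass_h2o = 18.015 * ATOMIC_MASS_UNIT_KG  # water molecule

v_h2 = speed_at_fixed_energy(energy_per_molecule, mass_h2)
v_h2o = speed_at_fixed_energy(energy_per_molecule, mass_h2o)

# The ratio depends only on the mass ratio: sqrt(m_h2o / m_h2), about 3.
speed_ratio = v_h2 / v_h2o
print(f"H2 moves {speed_ratio:.2f}x faster than H2O at equal energy per molecule")
```

The ratio is independent of the placeholder energy, which is why the choice of value does not matter for the comparison.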

[2]: Note I'm not endorsing logical decision theory over CDT/EDT because there seem to be some problems and also LDT is not formalized.

Re rockets, I might be misunderstanding, but I’m not sure why you’re imagining doubling the number of molecules. Isn’t the idea that you hold molecules constant and covalent energy constant, then reduce mass to increase velocity? Might be worth disambiguating your comparator here: I imagine we agree that light hydrogen would be better than heavy hydrogen, but perhaps you’re wondering about kerosene?