My mainline prediction scenario for the next decades.

My mainline prediction:

- LLMs will not scale to AGI. They will not spawn evil gremlins or mesa-optimizers. But scaling laws will continue to hold, and future LLMs will be very impressive and make a sizable impact on the real economy and science over the next decade. EDIT: since there is a lot of confusion about this point: by LLM I mean the paradigm of pre-trained transformers. This does not include different paradigms that follow pre-trained transformers but are still called large language models. EDIT2: since I'm already anticipating confusion on this point: when I say scaling laws will continue to hold, I mean that the 3-way relation between model size, compute, and data will probably continue to hold. It has been known for a long time that the amount of data used by GPT-4-level models is already within perhaps an OOM of the maximum.

- there is a single innovation left to make AGI-in-the-alex sense work, i.e. coherent, long-term planning agents (LTPA) that are effective and efficient in data sparse domains over long horizons.

- that innovation will be found within the next 10-15 years

- It will be clear to the general public that t

governments will act quickly and (relatively) decisively to bring these agents under state control. National security concerns will dominate.

I dunno, like 20 years ago if someone had said “By the time somebody creates AI that displays common-sense reasoning, passes practically any written test up to and including graduate-level, (etc.), obviously governments will be flipping out and nationalizing AI companies etc.”, to me that would have seemed like a reasonable claim. But here we are, and the idea of the USA govt nationalizing OpenAI seems a million miles outside the Overton window.

Likewise, if someone said “After it becomes clear to everyone that lab leaks can cause pandemics costing trillions of dollars and millions of lives, then obviously governments will be flipping out and banning the study of dangerous viruses—or at least, passing stringent regulations with intrusive monitoring and felony penalties for noncompliance etc,” then that would also have sounded reasonable to me! But again, here we are.

So anyway, my conclusion is that when I ask my intuition / imagination whether governments will flip out in thus-and-such circumstance, my intuition / imagination is really ba...

One strong reason to think the AI case might be different is that US national security will be actively using AI to build weapons and thus it will be relatively clear and salient to US national security when things get scary.

I think this will look a bit outdated in 6-12 months, when there is no longer a clear distinction between LLMs and short term planning agents, and the distinction between the latter and LTPAs looks like a scale difference comparable to GPT2 vs GPT3 rather than a difference in kind. At what point do you imagine a national government saying "here but no further?".

I think scaffolding is the wrong metaphor. Sequences of actions, observations and rewards are just more tokens to be modeled, and if I were running Google I would be busy instructing all work units to start packaging up such sequences of tokens to feed into the training runs for Gemini models. Many seemingly minor tasks (e.g. app recommendation in the Play store) either have, or could have, components of RL built into the pipeline, and could benefit from incorporating LLMs, either by putting the RL task in-context or through fine-tuning of very fast cheap models.

So when I say I don't see a distinction between LLMs and "short term planning agents" I mean that we already know how to subsume RL tasks into next token prediction, and so there is in some technical sense already no distinction. It's a question of how the underlying capabilities are packaged and deployed, and I think that within 6-12 months there will be many internal deployments of LLMs doing short sequences of tasks within Google. If that works, then it seems very natural to just scale up sequence length as generalisation improves.

Arguably fine-tuning a next-token predictor on action, observation, reward sequences, or doing it in-context, is inferior to using algorithms like PPO. However, the advantage of knowledge transfer from the rest of the next-token predictor's data distribution may more than compensate for this on some short-term tasks.

in my reading, Strawberry is showing that indeed scaling just pretraining transformers will *not* lead to AGI. The new paradigm is inference-scaling - the obvious next step is doing RL on long horizons and sparse data domains. I have been saying this ever since gpt-3 came out.

For the question of general intelligence imho the scaling is conceptually a red herring: any (general purpose) algorithm will do better when scaled. The key in my mind is the algorithm not the resource, just like I would say a child is generally intelligent while a pocket calculator is not even if the child can't count to 20 yet. It's about the meta-capability to learn not the capability.

As we spoke earlier - it was predictable that this was going to be the next step. It was likely it was going to work, but there was a hopeful world in which doing the obvious thing turned out to be harder. That hope has been dashed - it suggests longer horizons might be easy too. This means superintelligence within two years is not out of the question.

I'm a bit confused by what you mean by "LLMs will not scale to AGI" in combination with "a single innovation is all that is needed for AGI".

E.g., consider the following scenarios:

- AGI (in the sense you mean) is achieved by figuring out a somewhat better RL scheme and massively scaling this up on GPT-6.

- AGI is achieved by doing some sort of architectural hack on top of GPT-6 which makes it able to reason in neuralese for longer and then doing a bunch of training to teach the model to use this well.

- AGI is achieved via doing some sort of iterative RL/synth data/self-improvement process for GPT-6 in which GPT-6 generates vast amounts of synthetic data for itself using various tools.

IMO, these sound very similar to "LLMs scale to AGI" for many practical purposes:

- LLM scaling is required for AGI

- LLM scaling drives the innovation required for AGI

- From the public's perspective, it maybe just looks like AI is driven by LLMs getting better over time and various tweaks might be continuously introduced.

Maybe it is really key in your view that the single innovation is really discontinuous and maybe the single innovation doesn't really require LLM scaling.

I think a single innovation left to create LTPA is unlikely because it runs contrary to the history of technology and of machine learning. For example, in the 10 years before AlphaGo and before GPT-4, several different innovations were required, and that's if you count "deep learning" as one item. ChatGPT actually understates the number here because different components of the transformer architecture like attention, residual streams, and transformer++ innovations were all developed separately.

Then I think you should specify that progress within this single innovation could be continuous over years and include 10+ ML papers in sequence each developing some sub-innovation.

John wrote an explosive postmortem on the alignment field, boldly proclaiming that almost all alignment research is trash. John held up the ILIAD conference [which I helped organize] as one of the few examples of places where research is going in the right direction. While I share some of his concerns about the field's trajectory, and I am flattered that ILIAD was appreciated, I feel ambivalent about ILIAD being pulled into what I can only describe as an alignment culture war.

There's plenty to criticise about mainstream alignment research but blanket dismissals feel silly to me? Sparse auto-encoders are exciting! Research on delegated oversight & safety-by-debate is vitally important. Scary demos isn't exciting as Deep Science but its influence on policy is probably much greater than that long-form essay on conceptual alignment. AI psychology doesn't align with a physicist's aesthetic, but as alignment is ultimately about the attitudes of artificial intelligences, maybe just talking with Claude about his feelings might prove valuable. There's lots of experimental work in mainstream ML on deep learning that will be key to constructing a grounded theory of deep learning. And I'm sure ...

I think that there are two key questions we should be asking:

- Where is the value of an additional researcher higher on the margin?

- What should the field look like in order to make us feel good about the future?

I agree that "prosaic" AI safety research is valuable. However, at this point it's far less neglected than foundational/theoretical research and the marginal benefits there are much smaller. Moreover, without significant progress on the foundational front, our prospects are going to be poor, ~no matter how much mech-interp and talking to Claude about feelings we will do.

John has a valid concern that, as the field becomes dominated by the prosaic paradigm, it might become increasingly difficult to get talent and resources to the foundational side, or maintain memetically healthy coherent discourse. As to the tone, I have mixed feelings. Antagonizing people is bad, but there's also value in speaking harsh truths the way you see them. (That said, there is room in John's post for softening the tone without losing much substance.)

Scary demos isn't exciting as Deep Science but its influence on policy

There maybe should be a standardly used name for the field of generally reducing AI x-risk, which would include governance, policy, evals, lobbying, control, alignment, etc., so that "AI alignment" can be a more narrow thing. I feel (coarsely speaking) grateful toward people working on governance, policy, evals_policy, lobbying; I think control is pointless or possibly bad (makes things look safer than they are, doesn't address real problem); and frustrated with alignment.

What's concerning is watching a certain strain of dismissiveness towards mainstream ideas calcify within parts of the rationalist ecosystem. As Vanessa notes in her comment, this attitude of isolation and attendant self-satisfied sense of superiority certainly isn't new. It has existed for a while around MIRI & the rationalist community. Yet it appears to be intensifying as AI safety becomes more mainstream and the rationalist community's relative influence decreases.

What should one do, who:

- thinks that there's various specific major defeaters to the narrow project of understanding how to align AGI;

- finds partial consensus with some o

A thing that makes alignment hard / would defeat various alignment plans or alignment research plans.

E.g.s: https://www.lesswrong.com/posts/uMQ3cqWDPHhjtiesc/agi-ruin-a-list-of-lethalities#Section_B_

E.g. the things you're studying aren't stable under reflection.

E.g. the things you're studying are at the wrong level of abstraction (SLT, interp, neuro) https://www.lesswrong.com/posts/unCG3rhyMJpGJpoLd/koan-divining-alien-datastructures-from-ram-activations

E.g. https://tsvibt.blogspot.com/2023/03/the-fraught-voyage-of-aligned-novelty.html

This just in: Alignment researchers fail to notice skulls from famous blog post "Yes, we have noticed the skulls".

They aren't close to the right kind of abstraction. You can tell because they use a low-level ontology, such that mental content, to be represented there, would have to be homogenized, stripped of mental meaning, and encoded. Compare trying to learn about arithmetic, and doing so by explaining a calculator in terms of transistors vs. in terms of arithmetic. The latter is the right level of abstraction; the former is wrong (it would be right if you were trying to understand transistors or trying to understand some further implementational aspects of arithmetic beyond the core structure of arithmetic).

What I'm proposing instead, is theory.

I very much agree that structurally what matters a lot, but that seems like half the battle to me.

But somehow this topic is not afforded much care or interest. Some people will pay lip service to caring, others will deny that mental states exist, but either way the field of alignment doesn't put much force (money, smart young/new people, social support) toward these questions. This is understandable, as we have much less legible traction on this topic, but that's... undignified, I guess is the expression.

why you are so confident in these "defeaters"

More than any one defeater, I'm confident that most people in the alignment field don't understand the defeaters. Why? I mean, from talking to many of them, and from their choices of research.

People in these fields understand very well the problem you are pointing towards.

I don't believe you.

if the alignment community would outlaw mechinterp/slt/ neuroscience

This is an insane strawman. Why are you strawmanning what I'm saying?

I dont think progress on this question will be made by blanket dismissals

Progress could only be made by understanding the problems, which can only be done by stating the problems, which you're calling "blanket dismissals".

Not confident, but I think that "AIs that cause your civilization problems" and "AIs that overthrow your civilization" may be qualitatively different kinds of AIs. Regardless, existential threats are the most important thing here, and we just have a short term ('x-risk') that refers to that work.

And anyway I think the 'catastrophic' term is already being used to obfuscate, as Anthropic uses it exclusively on their website / in their papers, literally never talking about extinction or disempowerment[1], and we shouldn't let them get away with that by also adopting their worse terminology.

[1] (And they use the term 'existential' 3 times in oblique ways that barely count.)

Highly recommended video on drone development in the Ukraine-Russia war, interview with a Russian private military drone developer.

some key takeaways

- Drones now account for >70% of kills on the battlefields.

- There are few to no effective counters to drones. The on

- Electronic jamming is a rare exception but drones carrying 5-15km fiber optic cables are immune to jamming. In the future AI-controlled drones will be immune to jamming.

- 'Laser is currently a joke. It works in theory, not in practice. Western demonstrations at expos are always in ideal conditions.' But he also says that both Russia and Ukraine are actively working on the technology, and he thinks it could be an effective weapon.

- Nets can be effective, but fiber-optic drones, which can fly very low without losing connection, are increasingly used to slip under the nets.

- Soldiers are increasingly opting for bikes instead of vehicles, as the latter don't offer much protection against drones.

- The big elephant in the room: AI drones.

- It seems like the obvious next step - why hasn't it happened yet?

- 'at Western military expos everybody is talking AI-controlled drones. This is nonsense of course' Apparently the limitation is that it's currentl

I second the video recommendation.

A friend in China, in a rare conversation we had about international politics, was annoyed at US politicians for saying China was "supporting" Russia. "China has the production capacity to make easily 500,000 drones per day.[1]", he said. "If China were supporting Russia, the war would be over". And I had to admit I had not credited the Chinese government for keeping its insanely competitive companies from smuggling more drones into Russia.

[1] This seemed like a drastic underestimate to me.

Adding context/(kind-of) counter argument from reddit (the link has a link to the main article and contains a summary of it):

I think the comments are also worth a read. I want to share one particular comment here, which I think has a good explanation/hypothesis regarding the situation:

...The scaling up of FPV drones for the Ukrainians was definitely the result of artillery and mortar ammo shortages. But that can't be the only answer, as Russia never suffered that degree of shortage and they've gone as hardcore into FPV drones, if not more so, than the Ukrainians.

I think the biggest problem relying on artillery and mortars in THIS war is the ultra static nature of it. With the lines barely moving, it's very hard to create an artillery or mortar firing position that has decent survivability. Enemy recon drones, which can't be jammed or shot down easily (as most use freq hopping, fly at altitude, have limited radar signatures, etc), they are prowling the tactical rear areas. Since the start of the war, indirect fire has had to greatly disperse, especially artillery, which operate

The YouTube channel was banned last week as suspected propaganda because he used to work for the state media channel RT. This is pretty sad to me because the content was very informative, with slight if any pro-Russia bias. AFAIK the only place he posts now is Telegram: https://t.me/RealReporter_tg

The Ammann Hypothesis: Free Will as a Failure of Self-Prediction

A fox chases a hare. The hare evades the fox. The fox tries to predict where the hare is going - the hare tries to make it as hard to predict as possible.

Q: Who needs the larger brain?

A: The fox.

This is a little animal tale meant to illustrate the following phenomenon:

Generative complexity can be much smaller than predictive complexity under partial observability. In other words, when partially observing a black box, there are simple internal mechanisms that create complex patterns that require very large predictors to predict well.

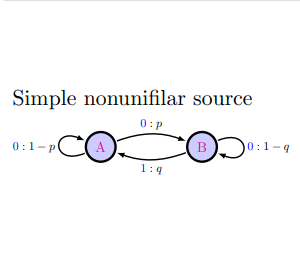

Consider the following simple 2-state HMM

Note that the symbol 0 is output in three different ways: A -> A, A -> B, and B -> B. This means that if we see the symbol 0 we don't know where we are. We can use Bayesian updating to guess where we are, but starting from a stationary distribution our belief states can become extremely complicated. In fact, the data sequence generated by the simple nonunifilar source has an optimal predictor HMM that requires infinitely many states.

This simple example illustrates the gap between generative complexity an...

In my opinion, this is a poor choice of problem for demonstrating the generator/predictor simplicity gap.

If not restricted to Markov model based predictors, we can do a lot better simplicity-wise.

A simple Bayesian predictor tracks one real-valued probability B in the range 0...1, the probability of being in state B. The probability of state A is implicitly A=1-B.

This is initialized to B=p/(p+q) as a prior, given the equilibrium probabilities of the A/B states after many time steps.

P("1")=qB is our prediction (a "1" is only emitted on the B-->A transition, which has probability q), with P("0")=1-P("1") implicitly.

Then update the usual Bayesian way:

if "1", B=0 (known state transition to A)

if "0", A,B:=(A*(1-p),A*p+B*(1-q)), then normalise by dividing both by the sum. (standard Bayesian update discarding the falsified B-->A state transition)

In one step after simplification (substituting A=1-B): B:=(B(p+q-1)-p)/(Bq-1), or equivalently B:=(p+B(1-p-q))/(1-Bq)

That's a lot more practical than having infinite states. Numerical stability and achieving acceptable accuracy of a real implementable predictor is straightforward but not trivial. A near perfect predictor is only slightly larger than the generator.

A perfect predictor can use 1 bit (have we ever observed a 1) and ceil(log2(n)) bits counting n, the number of observed zeroes in the last run to calculate the perfectly correct prediction. Technically as n-->infinity this turns into infinite bits but scaling is logarithmic so a practical predictor will never need more than ~500 bits given known physics.
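The predictor described in this comment can be sketched in a few lines of Python. This is a minimal illustration, not the commenter's code: the function names are hypothetical, and it assumes the reading of the HMM used above, namely that A stays in A with probability 1-p and moves to B with probability p (emitting 0 either way), while B stays in B with probability 1-q (emitting 0) and moves to A with probability q (emitting 1). The closed-form update is obtained by substituting A = 1-B into the two-step update.

```python
import random


def stationary_b(p, q):
    """Equilibrium probability of being in state B."""
    return p / (p + q)


def predict_one(b, q):
    """P(next symbol is 1): a 1 is only emitted on the B -> A transition."""
    return q * b


def update(b, symbol, p, q):
    """Bayesian belief update for P(state == B) after observing one symbol."""
    if symbol == 1:
        return 0.0  # a 1 means we just moved to A with certainty
    a = 1.0 - b
    new_a = a * (1.0 - p)            # A -> A, emits 0
    new_b = a * p + b * (1.0 - q)    # A -> B or B -> B, both emit 0
    return new_b / (new_a + new_b)   # normalise


def update_closed_form(b, p, q):
    """Same update after a 0, simplified with a = 1 - b."""
    return (p + b * (1.0 - p - q)) / (1.0 - b * q)


def sample(n, p, q, seed=0):
    """Draw n symbols from the assumed 2-state source."""
    rng = random.Random(seed)
    state = "B" if rng.random() < stationary_b(p, q) else "A"
    out = []
    for _ in range(n):
        if state == "A":
            out.append(0)
            if rng.random() < p:
                state = "B"
        elif rng.random() < q:
            out.append(1)
            state = "A"
        else:
            out.append(0)
    return out


def run_predictor(symbols, p, q):
    """Return the predicted P("1") before each symbol is revealed."""
    b = stationary_b(p, q)
    preds = []
    for s in symbols:
        preds.append(predict_one(b, q))
        b = update(b, s, p, q)
    return preds
```

Because every observed 1 resets the belief to B=0, the belief after the first 1 depends only on the number of zeroes since then, which is the counting predictor described in the last paragraph of the comment.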

Misgivings about Category Theory

[No category theory is required to read and understand this screed]

A week does not go by without somebody asking me what the best way to learn category theory is. Despite it being set to mark its 80th anniversary, Category Theory has the evergreen reputation for being the Hot New Thing, a way to radically expand the braincase of the user through an injection of abstract mathematics. Its promise is alluring, intoxicating for any young person desperate to prove they are the smartest kid on the block.

Recently, there has been significant investment and attention focused on the intersection of category theory and AI, particularly in AI alignment research. Despite the influx of interest I am worried that it is not entirely understood just how big the theory-practice gap is.

I am worried that overselling risks poisoning the well for the general concept of advanced mathematical approaches to science in general, and AI alignment in particular. As I believe mathematically grounded approaches to AI alignment are perhaps the only way to get robust worst-case safety guarantees for the superintelligent regime I think this would be bad.

I find it difficult...

Modern mathematics is less about solving problems within established frameworks and more about designing entirely new games with their own rules. While school mathematics teaches us to be skilled players of pre-existing mathematical games, research mathematics requires us to be game designers, crafting rule systems that lead to interesting and profound consequences

I don't think so. This probably describes the kind of mathematics you aspire to do, but still the bulk of modern research in mathematics is in fact about solving problems within established frameworks and usually such research doesn't require us to "be game designers". Some of us are of course drawn to the kinds of frontiers where such work is necessary, and that's great, but I think this description undervalues the within-paradigm work that is the bulk of what is going on.

ADHD is about the Voluntary vs Involuntary actions

The way I conceptualize ADHD is as a constraint on the quantity and magnitude of voluntary actions I can undertake. When others discuss actions and planning, their perspective often feels foreign to me—they frame it as a straightforward conscious choice to pursue or abandon plans. For me, however, initiating action (especially longer-term, less immediately rewarding tasks) is better understood as "submitting a proposal to a capricious djinn who may or may not fulfill the request." The more delayed the gratification and the longer the timeline, the less likely the action will materialize.

After three decades inhabiting my own mind, I've found that effective decision-making has less to do with consciously choosing the optimal course and more with leveraging my inherent strengths (those behaviors I naturally gravitate toward, largely outside my conscious control) while avoiding commitments that highlight my limitations (those things I genuinely intend to do and "commit" to, but realistically never accomplish).

ADHD exists on a spectrum rather than as a binary condition. I believe it serves an adaptive purpose—by restricting the number of...

My partner has ADHD. She and I talk about it often because I don’t, and understanding and coordinating with each other takes a lot of work.

Her environment is a strong influence on what tasks she considers and chooses. If she notices a weed in the garden walking from the car to the front door, she can get caught up for hours weeding before she makes it into the house. If she’s in her home office trying to work from home and notices something to tidy, same thing.

All the tasks her environment suggests to her seem important and urgent, because she’s not comparing them to some larger list of potential priorities that apply to different contexts - she’s always working on the top priority strictly with reference to the context she’s in at the moment.

She is much better than me at accomplishing tasks that her environment naturally suggests to her - cooking (inspired by recipes she finds on social media), cleaning, shopping, gardening, socializing, and making social plans in response to texts and notifications on her phone.

I am much better than her at constructing an organized list of global priorities and working through them systematically. However, I find it very difficult to be opportuni...

The Padding Argument or Simplicity = Degeneracy

[I learned this argument from Lucius Bushnaq and Matthias Dellago. It is also latent already in Solomonoff's original work]

Consider binary strings of a fixed length $N$.

Imagine feeding these strings into some Turing machine; we think of strings as codes for a function. Suppose we have a function that can be coded by a short compressed string $s$ of length $k$. That is, the function is computable by a small program.

Imagine uniformly sampling a random code of length $N$. What number of the codes implement the same function as the string $s$? It's close to $2^{N-k}$.[1] Indeed, given the string $s$ of length $k$ we can 'pad' it to a string of length $N$ by writing the code

"run $s$ skip $t$"

where $t$ is an arbitrary string of length $N-k-c$, where $c$ is a small constant accounting for the overhead. There are approximately $2^{N-k}$ of such binary strings. If our programming language has a simple skip / commenting out functionality then we expect approximately $2^{N-k}$ codes encoding the same function as $s$ ...
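The counting in the padding argument can be checked by brute force on a toy example. The sketch below is only an illustration under stated assumptions: the four-program "language" and the names `PROGRAMS`, `decode`, and `count_codes` are hypothetical, and the interpreter simply runs the first valid program it finds as a prefix and skips the rest of the code (so the overhead constant is zero here). Under those assumptions, a program of length k is implemented by exactly 2^(N-k) of the codes of length N.

```python
from collections import Counter
from itertools import product

# Hypothetical toy language: a complete prefix-free set of "programs".
# Shorter programs stand for simpler functions.
PROGRAMS = {
    "0": "const_0",
    "10": "identity",
    "110": "negate",
    "111": "const_1",
}


def decode(code):
    """Run the first valid program found as a prefix; the rest is padding."""
    for k in range(1, len(code) + 1):
        name = PROGRAMS.get(code[:k])
        if name is not None:
            return name, k
    raise ValueError("no valid program prefix in code")


def count_codes(n):
    """Count how many length-n codes implement each function."""
    counts = Counter()
    for bits in product("01", repeat=n):
        name, _ = decode("".join(bits))
        counts[name] += 1
    return counts


if __name__ == "__main__":
    n = 8
    for name, count in sorted(count_codes(n).items(), key=lambda kv: -kv[1]):
        print(f"{name}: {count} of {2 ** n} codes")
```

In this toy setting the function with the 1-bit program occupies half of all codes, while each 3-bit program occupies an eighth: shorter description, more degenerate codes.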

Re: the SLT dogma.

For those interested, a continuous version of the padding argument is used in Theorem 4.1 of Clift-Murfet-Wallbridge to show that the learning coefficient is a lower bound on the Kolmogorov complexity (in a sense) in the setting of noisy Turing machines. Just take the synthesis problem to be given by a TM's input-output map in that theorem. The result is treated in a more detailed way in Waring's thesis (Proposition 4.19). Noisy TMs are of course not neural networks, but they are a place where the link between the learning coefficient in SLT and algorithmic information theory has already been made precise.

For what it's worth, as explained in simple versus short, I don't actually think the local learning coefficient is algorithmic complexity (in the sense of program length) in neural networks, only that it is a lower bound. So I don't really see the LLC as a useful "approximation" of the algorithmic complexity.

For those wanting to read more about the padding argument in the classical setting, Hutter-Catt-Quarel "An Introduction to Universal Artificial Intelligence" has a nice detailed treatment.

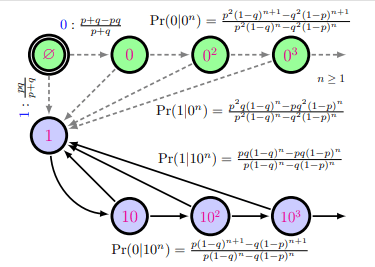

Why Do the French Dominate Mathematics?

France has an outsized influence in the world of mathematics despite having significantly fewer resources than countries like the United States. With approximately 1/6th of the US population and 1/10th of its GDP, and French being less widely spoken than English, France's mathematical achievements are remarkable.

This dominance might surprise those outside the field. Looking at prestigious recognitions, France has won 13 Fields Medals compared to the United States' 15, a nearly equal achievement despite the vast difference in population and resources. Other European nations lag significantly behind, with the UK having 8, Russia/Soviet Union 6/9, and Germany 2.
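As a rough per-capita check using only the figures quoted above (taking the 1/6 population ratio at face value, and normalizing the US population to 1):

$$\frac{13 / (1/6)}{15 / 1} = \frac{78}{15} = 5.2$$

So on these numbers France has roughly five times as many Fields Medals per capita as the United States.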

France's mathematicians are similarly overrepresented in other mathematics prizes and honors, confirming this is not merely a statistical anomaly.

I believe two key factors explain France's exceptional performance in mathematics while remaining relatively average in other scientific disciplines:

1. The "Classes Préparatoires" and "Grandes Écoles" System

The French educational system differs significantly from others through its unique "classes préparatoires" (preparatory classes) and "grandes ...

I don't buy your factors (1) or (2). Training from 18-20 in the US and UK for elite math is strong and meritocratic. And brilliant mathematicians have career stability in the US and UK.

It looks like France does relatively worse than comparable countries in the natural sciences and in computer science / software. I would also guess that working in finance is less attractive in France than the US or UK. So one possible factor is opportunity cost.

https://royalsocietypublishing.org/doi/10.1098/rsos.180167

The intellectual maturation between ages 18 and 20 is profound

This is the first time I've heard this claim. Any background/cites I should look into for this?

Alternative model: French mathematicians don't overperform in an objective sense. Rather, French mathematicians happened to end up disproportionately setting fashion trends in pure mathematics for a while, for reasons which are mostly just signalling games and academic politics rather than mathematical merit.

Bourbaki springs to mind here as a central example.

Forecasting and scenario building has become quite popular and prestigious in EA-adjacent circles. I see extremely detailed scenario building & elaborate narratives.

Yes AI will be big, AGI plausibly close. But how much detail can one really expect to predict? There were a few large predictions that some people got right, but once one zooms in the details don't fit while the correct predictions were much more widespread within the group of people that were paying attention.

I can't escape the feeling that we're quite close to the limits of the knowable and 80% of EA discourse on this is just larp.

Does anybody else feel this way?

I think for many years there was a lot of frustration from people outside of the community about people inside of it not going into a lot of detail. My guess is we are dealing with a bit of a pendulum swing of now people going hard in the other direction. I do think we are just currently dealing with one big wave of this kind of content. It's not like there was much of any specific detailed scenario work two years ago.

I think the value proposition of AI 2027-style work lies largely in communication. Concreteness helps people understand things better. The details are mostly there to provide that concreteness, not to actually be correct.

If you imagine the set of possible futures that people like Daniel, you or I think plausible as big distributions, with high entropy and lots of unknown latent variables, the point is that the best way to start explaining those distributions to people outside the community is to draw a sample from them and write it up. This is a lot of work, but it really does seem to help. My experience matches habryka's here. Most people really want to hear concrete end-to-end scenarios, not abstract discussion of the latent variables in my model and their relationships.

Why don't animals have guns?

Or why didn't evolution evolve the Hydralisk?

Evolution has found (sometimes multiple times) the camera, general intelligence, nanotech, electronavigation, aerial endurance better than any drone, robots more flexible than any human-made drone, highly efficient photosynthesis, etc.

First of all let's answer another question: why didn't evolution evolve the wheel like the alien wheeled elephants in His Dark Materials?

Is it biologically impossible to evolve?

Well, technically, the flagellum of various bacteria is a proper wheel.

No, the likely answer is that wheels are great when you have roads and suck when you don't. Roads are built by ants to some degree, but on the whole probably don't make sense for an animal-intelligence species.

Aren't there animals that use projectiles?

Hold up. Is it actually true that there is not a single animal with a gun, harpoon or other projectile weapon?

Porcupines have quills, some snakes spit venom, and a type of fish spits water as a projectile to knock insects off leaves and then eats them. Bombardier beetles can produce an explosive chemical mixture. Skunks use some other chemicals. Some snails shoot harpoons from very c...

How to prepare for the coming Taiwan Crisis? Should one short TSMC? Dig a nuclear cellar?

Metaculus gives a 25% chance of a full-scale invasion of Taiwan within 10 years and a 50% chance of a blockade. It gives a 65% chance that if China invades Taiwan before 2035 the US will respond with military force.
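Combining the two invasion numbers gives a rough joint estimate. This assumes the "within 10 years" and "before 2035" windows roughly coincide, which is an assumption on my part and not something the forecasts state:

$$P(\text{invasion and US military response}) \approx 0.25 \times 0.65 \approx 0.16$$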

Metaculus has very strong calibration scores (apparently better than prediction markets). I am inclined to take these numbers as the best guess we currently have of the situation.

Is there any way to act on this information?

Novel Science is Inherently Illegible

Legibility, transparency, and open science are generally considered positive attributes, while opacity, elitism, and obscurantism are viewed as negative. However, increased legibility in science is not always beneficial and can often be detrimental.

Scientific management, with some exceptions, likely underperforms compared to simpler heuristics such as giving money to smart people or implementing grant lotteries. Scientific legibility suffers from the classic "Seeing like a State" problems. It constrains endeavors to the least informed stakeholder, hinders exploration, inevitably biases research to be simple and myopic, and exposes researchers to constant political tug-of-war between different interest groups poisoning objectivity.

I think the above would be considered relatively uncontroversial in EA circles. But I posit there is something deeper going on:

Novel research is inherently illegible. If it were legible, someone else would have already pursued it. As science advances her concepts become increasingly counterintuitive and further from common sense. Most of the legible low-hanging fruit has already been picked, and novel research requires venturing higher into the tree, pursuing illegible paths with indirect and hard-to-foresee impacts.

Novel research is inherently illegible.

I'm pretty skeptical of this and think we need data to back up such a claim. However there might be bias: when anyone makes a serendipitous discovery it's a better story, so it gets more attention. Has anyone gone through, say, the list of all Nobel laureates and looked at whether their research would have seemed promising before it produced results?

Thanks for your skepticism, Thomas. Before we get into this, I'd like to make sure we actually disagree. My position is not that scientific progress is mostly due to plucky outsiders who are ignored for decades. (I feel something like this is a popular view on LW). Indeed, I think most scientific progress is made through pretty conventional (academic) routes.

I think one can predict that future scientific progress will likely be made by young smart people at prestigious universities and research labs specializing in fields that have good feedback loops and/or have historically made a lot of progress: physics, chemistry, medicine, etc.

My contention is that beyond very broad predictive factors like this, judging whether a research direction is fruitful is hard & requires inside knowledge. Much of this knowledge is illegible, difficult to attain because it takes a lot of specialized knowledge etc.

Do you disagree with this?

I do think that novel research is inherently illegible. Here are some thoughts on your comment:

1. Before getting into your Nobel prize proposal, I'd like to caution against hindsight bias (for obvious reasons).

-

And perhaps to some degree I'd like to argue the burden of proo

I guess I'm not sure what you mean by "most scientific progress," and I'm missing some of the history here, but my sense is that importance-weighted science happens proportionally more outside of academia. E.g., Einstein did his miracle year outside of academia (and later stated that he wouldn't have been able to do it, had he succeeded at getting an academic position), Darwin figured out natural selection, and Carnot figured out the Carnot cycle, all mostly on their own, outside of academia. Those are three major scientists who arguably started entire fields (quantum mechanics, biology, and thermodynamics). I would anti-predict that future scientific progress, of the field-founding sort, comes primarily from people at prestigious universities, since they, imo, typically have some of the most intense gatekeeping dynamics which make it harder to have original thoughts.

Thank you, Thomas. I believe we find ourselves in broad agreement. The distinction you make between lay-legibility and expert-legibility is especially well-drawn.

One point: the confidence of researchers in their own approach may not be the right thing to look at. Perhaps a better measure is seeing who can not only predict that their own approach will succeed but also explain in detail why other approaches won't work. Anecdotally, very successful researchers have a keen sense of what will work out and what won't - in private conversation many are willing to share detailed models of why other approaches will not work or are not as promising. I'd have to think about this more carefully, but anecdotally the most successful researchers have many bits of information over their competitors, not just one or two. (Note that one bit of information means that their entire advantage could be wiped out by answering a single Y/N question. Not impossible, but not typical for most cases.)

You May Want to Know About Locally Decodable Codes

In AI alignment and interpretability research, there's a compelling intuition that understanding equals compression. The idea is straightforward: if you truly understand a system, you can describe it more concisely by leveraging that understanding. This philosophy suggests that better interpretability techniques for neural networks should yield better compression of their behavior or parameters.

jake_mendel asks: if understanding equals compression, then shouldn't ZIP compression of neural network weights count as understanding? After all, ZIP achieves remarkable compression ratios on neural network weights - likely better than any current interpretability technique. Yet intuitively, having a ZIP file of weights doesn't feel like understanding at all! We wouldn't say we've interpreted a neural network just because we've compressed its weights into a ZIP file.

Compressing a bit string means finding a code for that string, and the study of such codes is the central topic of both algorithmic and Shannon information theory. Just compressing the set of weights as small as possible is too naive - we probably want to impose additional proper...

I don't remember the details, but IIRC ZIP is mostly based on Lempel-Ziv, and it's fairly straightforward to modify Lempel-Ziv to allow for efficient local decoding.

My guess would be that the large majority of the compression achieved by ZIP on NN weights is because the NN weights are mostly-roughly-standard-normal, and IEEE floats are not very efficient for standard normal variables. So ZIP achieves high compression for "kinda boring reasons", in the sense that we already knew all about that compressibility but just don't leverage it in day-to-day operations because our float arithmetic hardware uses IEEE.
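One way to see the "IEEE floats are inefficient for standard normal variables" point is to measure how much information each byte of a float32 actually carries. The sketch below does not run ZIP itself. It uses i.i.d. standard normal samples as a stand-in for NN weights (an assumption; real weights are only roughly normal) and computes the empirical entropy of each byte position. The function name `byte_entropies` and the sample size are illustrative choices.

```python
import math
import random
import struct
from collections import Counter

def byte_entropies(values):
    """Empirical entropy (in bits) of each of the 4 bytes of float32-encoded values."""
    # Little-endian float32: byte 3 holds the sign bit and the top 7 exponent bits,
    # byte 0 holds the lowest 8 mantissa bits.
    encoded = [struct.pack("<f", v) for v in values]
    total = len(encoded)
    entropies = []
    for position in range(4):
        counts = Counter(chunk[position] for chunk in encoded)
        h = -sum((c / total) * math.log2(c / total) for c in counts.values())
        entropies.append(h)
    return entropies

rng = random.Random(0)  # fixed seed, purely for repeatability
samples = [rng.gauss(0.0, 1.0) for _ in range(20000)]  # stand-in for NN weights
entropies = byte_entropies(samples)

for position, h in enumerate(entropies):
    print(f"byte {position}: {h:.2f} bits of entropy out of 8")
```

The low mantissa byte should come out close to 8 bits (essentially incompressible), while the sign-and-exponent byte carries far fewer, since standard normal samples concentrate in a handful of exponents. That slack is the "boring" redundancy a generic compressor can pick up.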

Using ZIP as compression metric for NNs (I assume you do something along the lines of "take all the weights and line them up and then ZIP") is unintuitive to me for the following reason:

ZIP, though really this should apply to any other coding scheme that just tries to compress the weights by themselves, picks up on statistical patterns in the raw weights. But NNs are not simply a list of floats; they are arranged in a highly structured manner. The weights themselves get turned into functions, and it is 1. the functions, and 2. the way the functions interact that we are ultimately trying to understand (and therefore compress).

To wit, a simple example for the first point: Assume that inside your model is a 2x2 matrix with entries M=[0.587785, -0.809017, 0.809017, 0.587785]. Storing it like this will cost you a few bytes, and if you compress it you can roughly halve the cost, I believe. But really there is a much more compact way to store this information: this matrix represents a rotation by 54 degrees. Storing it this way requires less than 1 byte.
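To make the "store the angle instead of the entries" idea concrete, here is a minimal sketch that checks whether the four quoted entries form a 2D rotation matrix and recovers the angle. It assumes the entries are listed in row-major order, i.e. [[a, b], [c, d]]. With the entries as written (cos θ ≈ 0.587785, sin θ ≈ 0.809017) the angle comes out at about 54 degrees.

```python
import math

# Matrix entries as quoted above, assumed to be in row-major order: [[a, b], [c, d]].
a, b, c, d = 0.587785, -0.809017, 0.809017, 0.587785

# A 2D rotation matrix has the form [[cos t, -sin t], [sin t, cos t]]
# and determinant 1 (up to the rounding of the quoted entries).
is_rotation = (
    math.isclose(a, d, abs_tol=1e-6)
    and math.isclose(b, -c, abs_tol=1e-6)
    and math.isclose(a * d - b * c, 1.0, abs_tol=1e-5)
)

# atan2(sin t, cos t) recovers the angle from the first column.
angle_degrees = math.degrees(math.atan2(c, a))
print(is_rotation, round(angle_degrees, 3))
```

A compressor that only sees the raw bytes of the four floats has no way to exploit this structure; a description in terms of "rotation by an integer number of degrees" needs only the angle.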

This phenomenon should get worse for bigger models. One reason is the following: If we believe that the NN uses superposition, then...

Reward is not the optimization target.

The optimization target is the Helmholtz free energy functional in the conductance-corrected Wasserstein metric for the step-size effective loss potential in the critical batch size regime for the weight-initialization distribution as prior up to solenoidal flux corrections

Shower thought - why are sunglasses cool?

Sunglasses create an asymmetry in the ability to discern emotions between the wearer and nonwearer. This implicitly makes the wearer less predictable, more mysterious, more dangerous and therefore higher in a dominance hierarchy.

also see ashiok from mtg: whole upper face/head is replaced with shadow

also, masks 'create an asymmetry in the ability to discern emotions' but do not seem to lead to the rest

That's a good counterexample! Masks are dangerous and mysterious, but not cool in the way sunglasses are, I agree.

My timelines are lengthening.

I've long been a skeptic of scaling LLMs to AGI *. I fundamentally don't understand how this is even possible. It must be said that very smart people give this view credence: davidad, dmurfet. On the other side are Vanessa Kosoy and Steven Byrnes. When pushed, proponents don't actually defend the position that a large enough transformer will create nanotech or even obsolete their job. They usually mumble something about scaffolding.

I won't get into this debate here but I do want to note that my timelines have lengthened, primarily because some of the never-clearly-stated but heavily implied AI developments by proponents of very short timelines have not materialized. To be clear, it has only been a year since gpt-4 was released, and gpt-5 is around the corner, so perhaps my hope is premature. Still my timelines are lengthening.

A year ago, when gpt-3 came out progress was blindingly fast. Part of short timelines came from a sense of 'if we got surprised so hard by gpt2-3, we are completely uncalibrated, who knows what comes next'.

People seemed surprised by gpt-4 in a way that seemed uncalibrated to me. gpt-4 performance was basically in li...

With scale, there is visible improvement in difficulty of novel-to-chatbot ideas/details that is possible to explain in-context, things like issues with the code it's writing. If a chatbot is below some threshold of situational awareness of a task, no scaffolding can keep it on track, but for a better chatbot trivial scaffolding might suffice. Many people can't google for a solution to a technical issue, the difference between them and those who can is often subtle.

So a modest amount of scaling alone seems plausibly sufficient for making chatbots that can do whole jobs almost autonomously. If this works, 1-2 OOMs more of scaling becomes both economically feasible and more likely to be worthwhile. LLMs think much faster, so they only need to be barely smart enough to help with clearing those remaining roadblocks.

Yes agreed.

What I don't get about this position: if it was indeed just scaling - what's AI research for? There is nothing to discover, just scale more compute. Sure, you can maybe improve the speed of deploying compute a little, but at the core of it, it seems like a story that's in conflict with itself?

Here are two arguments for low-hanging algorithmic improvements.

First, in the past few years I have read many papers containing low-hanging algorithmic improvements. Most such improvements are a few percent or tens of percent. The largest such improvements are things like transformers or mixture of experts, which are substantial steps forward. Such a trend is not guaranteed to persist, but that’s the way to bet.

Second, existing models are far less sample-efficient than humans. We receive about a billion tokens growing to adulthood. The leading LLMs get orders of magnitude more than that. We should be able to do much better. Of course, there’s no guarantee that such an improvement is “low hanging”.

Free energy and (mis)alignment

The classical MIRI view imagines human values to be a tiny squiggle in a vast space of alien minds. The unfathomable inscrutable process of deep learning is very unlikely to pick exactly that tiny squiggle, instead converging to a fundamentally incompatible and deeply alien squiggle. Therein lies the road to doom.

Optimists will object that deep learning doesn't randomly sample from the space of alien minds. It is put under a strong gradient pressure to satisfy human preference in-distribution / during the training phase. One could, and many people have, similarly object that it's hard or even impossible for deep learning systems to learn concepts that aren't naive extrapolations of its training data[cf symbol grounding talk]. In fact, Claude is very able to verbalize human ethics and values.

Any given behaviour and performance on the training set is compatible with any given behaviour outside the training set. One can hardcode backdoors into a neural network that can behave nicely on training and arbitrarily differently outside training. Moreover, these backdoors can be implemented in such a way as to be computationally intractable to res...

In AI alignment, the entropic force pulls toward sampling random minds from the vast space of possible minds, while the energetic force (from training) pulls toward minds that behave as we want. The actual outcome depends on which force is stronger.
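The competition between the two forces can be illustrated with a toy two-level Boltzmann model. This is only a sketch of the analogy, not a claim about real training: a small set of low-energy states stands in for "minds that behave as we want", a vastly larger set of high-energy states stands in for everything else, and the temperature sets how much entropy counts. All numbers and function names below are hypothetical.

```python
import math

def prob_low_energy(temperature, energy_gap=20.0, n_low=1, n_high=10**6):
    """Boltzmann probability of landing in the small low-energy set.

    n_low states sit at energy 0; n_high states sit at energy `energy_gap`.
    """
    weight_low = n_low  # exp(-0 / T) = 1 per state
    weight_high = n_high * math.exp(-energy_gap / temperature)
    return weight_low / (weight_low + weight_high)

def crossover_temperature(energy_gap=20.0, n_low=1, n_high=10**6):
    """Temperature at which both sets have equal free energy F = E - T * ln(count)."""
    return energy_gap / math.log(n_high / n_low)

for t in (1.0, crossover_temperature(), 2.0):
    print(f"T = {t:.3f}: P(low-energy set) = {prob_low_energy(t):.4f}")
```

Below the crossover temperature the energetic term wins and almost all probability sits on the small low-energy set; above it the sheer number of other states dominates. In this picture, "which force is stronger" is a comparison of the free energies F = E - T ln(count) of the two sets.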

The MIRI view, I'm pretty sure, is that the force of training does not pull towards minds that behave as we want, unless we know a lot of things about training design we currently don't.

MIRI is not talking about the randomness as in the spread of the training posterior as a function of random Bayesian sampling/NN initialization/SGD noise. The point isn't that training is inherently random. It can be a completely deterministic process without affecting the MIRI argument basically at all. If everything were a Bayesian sample from the posterior and there was a single basin of minimum local learning coefficient corresponding to equivalent implementations of a single algorithm, then I don't think this would by default make models any more likely to be aligned. The simplest fit to the training signal need not be an optimiser pointed at a terminal goal that maps to the training signal in a neat way humans can intuitively zero-shot without figur...

Encrypted Batteries

(I thank Dmitry Vaintrob for the idea of encrypted batteries. Thanks to Adam Scholl for the alignment angle. Thanks to the Computational Mechanics at the recent compMech conference.)

There are no Atoms in the Void just Bits in the Description. Given the right string a Maxwell Demon transducer can extract energy from a heatbath.

Imagine a pseudorandom heatbath + nano-Demon. It looks like a heatbath from the outside but secretly there is a private key string that when fed to the nano-Demon allows it to extract lots of energy from the heatbath.

P.S. Beyond the current ken of humanity lies a generalized concept of free energy that describes the generic potential ability or power of an agent to achieve goals. Money, the golden calf of Baal, is one of its many avatars. Could there be ways to encrypt generalized free energy batteries to constrain the user to only use this power for good? It would be like money that could only be spent on good things.

Imagine a pseudorandom heatbath + nano-Demon. It looks like a heatbath from the outside but secretly there is a private key string that when fed to the nano-Demon allows it to extract lots of energy from the heatbath.

What would a 'pseudorandom heatbath' look like? I would expect most objects to quickly depart from any sort of private key or PRNG. Would this be something like... a reversible computer which shuffles around a large number of blank bits in a complicated pseudo-random order every timestep*, exposing a fraction of them to external access? so a daemon with the key/PRNG seed can write to the blank bits with approaching 100% efficiency (rendering it useful for another reversible computer doing some actual work) but anyone else can't do better than 50-50 (without breaking the PRNG/crypto) and that preserves the blank bit count and is no gain?

* As I understand reversible computing, you can have a reversible computer which does that for free: if this is something like a very large period loop blindly shuffling its bits, it need erase/write no bits (because it's just looping through the same states forever, akin to a time crystal), and so can be computed indefinitely at arbitrarily low energy cost. So any external computer which syncs up to it can also sync at zero cost, and just treat the exposed unused bits as if they were its own, thereby saving power.
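The "approaching 100% with the key, no better than 50-50 without it" asymmetry can be sketched in a few lines. This models only the information asymmetry, not the thermodynamics or the reversible computer. The bit stream is generated with HMAC-SHA256 in counter mode as a stand-in for whatever pseudorandom shuffling the bath would actually use, and the key and names are hypothetical.

```python
import hashlib
import hmac
import random

def keyed_bits(key: bytes, n_bits: int) -> list[int]:
    """Pseudorandom bit stream derived from `key` via HMAC-SHA256 in counter mode."""
    bits = []
    counter = 0
    while len(bits) < n_bits:
        block = hmac.new(key, counter.to_bytes(8, "big"), hashlib.sha256).digest()
        for byte in block:
            for shift in range(8):
                bits.append((byte >> shift) & 1)
        counter += 1
    return bits[:n_bits]

def accuracy(guesses, truth):
    """Fraction of positions where the guess matches the true bit."""
    return sum(g == t for g, t in zip(guesses, truth)) / len(truth)

n = 10_000
bath = keyed_bits(b"private key (hypothetical)", n)  # bits the "heat bath" exposes

# The key holder regenerates the stream and predicts every bit.
key_holder_guesses = keyed_bits(b"private key (hypothetical)", n)

# An outsider without the key can only guess.
outsider_rng = random.Random(1)
outsider_guesses = [outsider_rng.getrandbits(1) for _ in range(n)]

key_holder_accuracy = accuracy(key_holder_guesses, bath)
outsider_accuracy = accuracy(outsider_guesses, bath)
print(key_holder_accuracy, outsider_accuracy)
```

To the outsider the stream is statistically indistinguishable from noise unless the underlying primitive is broken, which is the sense in which the bath "looks like a heatbath from the outside".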

Additive versus Multiplicative model of AI-assisted research

Occasionally one hears somebody say "most of the relevant AI-safety work will be done at crunch time. Most work being done now at present is marginal".

One cannot shake the suspicion that this statement merely reflects the paucity of ideas & vision of the speaker. Yet it cannot be denied that their reasoning has a certain logic: if, as seems likely, AI will become more and more dominant in AI alignment research, then maybe we should be focusing on how to safely extract work from future superintelligent machines rather than hurting our painfully slow mammalian walnuts to crack AI safety research today. I understand this to be a key motivation for several popular agendas in AI safety.

Similarly, many Pause advocates argue that pause advocacy is more impactful than direct research. Most will admit that a Pause cannot be maintained indefinitely. The aim of a Pause would be to buy time to figure out alignment. Unless one believes in very long pauses, implicitly it seems there is an assumption that research progress will be faster in the future.

Implicitly, we might say there is underlying " Additive" model of AI-...

People are not thinking clearly about AI-accelerated AI research. This comment by Thane Ruthenis is worth amplifying.

...I'm very skeptical of AI being on the brink of dramatically accelerating AI R&D.

My current model is that ML experiments are bottlenecked not on software-engineer hours, but on compute. See Ilya Sutskever's claim here:

95% of progress comes from the ability to run big experiments quickly. The utility of running many experiments is much less useful.

What actually matters for ML-style progress is picking the correct trick, and then applying it to a big-enough model. If you pick the trick wrong, you ruin the training run, which (a) potentially costs millions of dollars, (b) wastes the ocean of FLOP you could've used for something else.

And picking the correct trick is primarily a matter of research taste, because:

- Tricks that work on smaller scales often don't generalize to larger scales.

- Tricks that work on larger scales often don't work on smaller scales (due to bigger ML models having various novel emergent properties).

- Simultaneously integrating several disjunctive incremental improvements into one SotA training run is likely nontrivial/impossible in the general

The Marginal Returns of Intelligence

A lot of discussion of intelligence considers it as a scalar value that measures a general capability to solve a wide range of tasks. In this conception, intelligence is primarily a question of having a 'good Map'. This is a simplistic picture since it's missing the intrinsic limits imposed on prediction by the Territory. Not all tasks or domains have the same marginal returns to intelligence - these can vary wildly.

Let me tell you about a 'predictive efficiency' framework that I find compelling & deep and that will hopefully give some mathematical flesh to these intuitions. I initially learned about these ideas in the context of Computational Mechanics, but I realized that the underlying ideas are much more general.

Let X be a predictor variable that we'd like to use to predict a target variable Y under a joint distribution p(x, y). For instance, X could be the context window and Y could be the next hundred tokens, or X could be the past market data and Y the future market data.

In any prediction task there are three fundamental and independently varying quantities that you need to think of:

AGI companies merging within next 2-3 years inevitable?

There are currently about a dozen major AI companies racing towards AGI with many more minor AI companies. The way the technology shakes out, this seems like an unstable equilibrium.

It seems by now inevitable that we will see further mergers, joint ventures - within two years there might only be two or three major players left. Scale is all-dominant. There is no magic sauce, no moat. OpenAI doesn't have algorithms that her competitors can't copy within 6-12 months. It's all leveraging compute. Whatever innovations smaller companies make can be easily stolen by tech giants.

e.g. we might have xAI-Meta, Anthropic-DeepMind-SSI-Google, OpenAI-Microsoft-Apple.

Actually, although this would be deeply unpopular in EA circles, it wouldn't be all that surprising if Anthropic and OpenAI teamed up.

And - of course - a few years later we might only have two competitors: USA, China.

EDIT: the obvious thing to happen is that Nvidia realizes it can just build AI itself. If Taiwan is Dune and GPUs are the spice, then Nvidia is House Atreides.

Whatever innovations smaller companies make can be easily stolen by tech giants.

And they / their basic components are probably also published by academia, though the precise hyperparameters, etc. might still matter and be non-trivial/costly to find.

I have a similar feeling, but there are some forces in the opposite direction:

- Nvidia seems to limit how many GPUs a single competitor can acquire.

- Training frontier models becomes cheaper over time. Thus, those that build competitive models some time later than the absolute frontier have to invest far fewer resources.

In 2-3 years they would need to decide on training systems built in 3-5 years, and by 2027-2029 the scale might get to $200-1000 billion for an individual training system. (This is assuming geographically distributed training is solved, since such systems would need 5-35 gigawatts.)

Getting to a go-ahead on $200 billion systems might require a level of success that also makes $1 trillion plausible. So instead of merging, they might instead either temporarily give up on scaling further (if there isn't sufficient success in 2-3 years), or become capable of financing such training systems individually, without pooling efforts.

Current work on Markov blankets and Boundaries on LW is flawed and outdated. State of the art should factor through this paper on Causal Blankets: https://iwaiworkshop.github.io/papers/2020/IWAI_2020_paper_22.pdf

A key problem for the accounts of blankets and boundaries I have seen on LW so far is the following elementary one (from the paper):

"Therefore, the MB [Markov Blanket] formalism forbids interdependencies induced by past events that are kept in memory, but may not directly influence the present state of the blankets."

Thanks to Fernando Rosas for telling me about this paper.

You may want to make this a linkpost to that paper as that can then be tagged and may be noticed more widely.

Large Language Models, Small Labor Market Effects?

We examine the labor market effects of AI chatbots using two large-scale adoption surveys (late 2023 and 2024) covering 11 exposed occupations (25,000 workers, 7,000 workplaces), linked to matched employer-employee data in Denmark. AI chatbots are now widespread—most employers encourage their use, many deploy in-house models, and training initiatives are common. These firm-led investments boost adoption, narrow demographic gaps in take-up, enhance workplace utility, and create new job tasks. Yet, despite substantial investments, economic impacts remain minimal. Using difference-in-differences and employer policies as quasi-experimental variation, we estimate precise zeros: AI chatbots have had no significant impact on earnings or recorded hours in any occupation, with confidence intervals ruling out effects larger than 1%. Modest productivity gains (average time savings of 2.8%), combined with weak wage pass-through, help explain these limited labor market effects. Our findings challenge narratives of imminent labor market transformation due to Generative AI.

From marginal revolution.

What does this crowd think? These effects ar...

Speaking from the perspective of someone still developing basic mathematical maturity and often lacking prerequisites, it's very useful as a learning aid. For example, it significantly expanded the range of papers or technical results accessible to me. If I'm reading a paper containing unfamiliar math, I no longer have to go down the rabbit hole of tracing prerequisite dependencies, which often expand exponentially (partly because I don't know which results or sections in the prerequisite texts are essential, making it difficult to scope my focus). Now I can simply ask the LLM for a self-contained exposition. Using traditional means of self-studying like [search engines / Wikipedia / StackExchange] is very often no match for this task, mostly in terms of time spent or wasted effort; simply having someone I can directly ask my highly specific (and often dumb) questions or confusions and receive equally specific responses is just really useful.

Claude is smarter than you. Deal with it.

There is an incredible amount of cope about the current abilities of AI.

AI isn't infallible. Of course. And yet...

For 90% of queries a well-prompted AI has better responses than 99% of people. For some queries the number of people that could match the kind of deep, broad knowledge that AI has can be counted on two hands. Finally, obviously, there is no man alive on the face of the earth that comes even close to the breadth and depth of crystallized intelligence that AIs now have.

People have developed a keen apprehension of and aversion to "AI slop". The truth of the matter is that LLMs are incredible writers, and if you had presented AI slop as human writing to somebody ten or even six years ago, they would have rated it anywhere from good if somewhat corporate writing all the way to inspired, eloquent, witty.

Does AI sometimes make mistakes? Of course. So do humans. To be human is to err.

There is an incredible amount of cope about the current abilities of AI. Frankly, I find it embarrassing. Witness the absurd call to flag AI-assisted writing. The widespread disdain for "@grok is this true?". Witness how llm psychosis has gone from perhaps...

Contemporary AI is smart in some ways and dumb in other ways. It's a useful tool that you should integrate into your workflow if you don't want to miss out on productivity. However. I'm worried that exposure to AI is dangerous in similar ways to how exposure to social media is dangerous, only more. You're interacting with something designed to hijack your attention and addict you. Only this time the "something" has its own intelligence that is working towards this purpose (and possibly other, unknown, purposes).

As to the AI safety space: we've been saying for decades that AI is dangerous and now you're surprised that we think AI is dangerous? I don't think it's taking over the world just yet, but that doesn't mean there are no smaller-scale risks. It's dangerous not because it's dumb (the fact it's still dumb is the saving grace) but precisely because it's smart.

My own approach is: use AI in clear, compartmentalized ways. If you have a particular task which you know can be done faster by using AI in a particular way, by all means, use it. (But, do pay attention to time wasted on tweaking the prompt etc.) Naturally, you should also occasionally keep experimenting with new tasks or new ways of using it. But, if there's no clear benefit, don't use it. If it's just to amuse yourself, don't. And, avoid exposing other people if there's no good reason.

Claude 4.5 is already superhuman in some areas, including:

- Breadth of knowledge.

- Understanding complex context "at a glance."

- Speed, at least for many things.

But there are other essential abilities where leading LLMs are dumber than a diligent 7-year-old. Gemini is one of the stronger visual models, and I routinely benchmark it failing simple visual tasks that any child could solve.

And then there's software development. I use Claude for software development, and it's quite skilled at many simple tasks. But I also spend a lot of time dealing with ill-conceived shit code that it writes. You can't just give an irresponsible junior programmer a copy of Claude Code and allow them to commit straight to main with no review. If you do, you will learn the meaning of the word "slop." In the hands of a skilled professional who takes 100% responsibility for the output, Claude Code is useful. In the hands of an utter newb who can't do anything on their own, it's also great. But it can't do anything serious without massive handholding and close expert supervision.

So my take is that frontier models are terrifyingly impressive if you're paying attention, but they are still critically broken in ways ...

[This shortform has now been expanded into a long-form post]

NATO faces its gravest military disadvantage since 1949, as the balance of power has shifted decisively toward its adversaries. The speed and scale of NATO's relative military decline represents the most dramatic power shift since World War II—and the alliance appears dangerously unaware of its new vulnerability

I think this is both true and massively underrated.

The primary reason is the drone warfare revolution. The secondary reason is the economic rise and military buildup of China. The Pax Americana is coming to its end.

Problem of Old Evidence, the Paradox of Ignorance and Shapley Values

Paradox of Ignorance

Paul Christiano presents the "paradox of ignorance" where a weaker, less informed agent appears to outperform a more powerful, more informed agent in certain situations. This seems to contradict the intuitive desideratum that more information should always lead to better performance.

The example given is of two agents, one powerful and one limited, trying to determine the truth of a universal statement ∀x:ϕ(x) for some Δ0 formula ϕ. The limited agent treats each new value of ϕ(x) as a surprise and evidence about the generalization ∀x:ϕ(x). So it can query the environment about some simple inputs x and get a reasonable view of the universal generalization.

In contrast, the more powerful agent may be able to deduce ϕ(x) directly for simple x. Because it assigns these statements prior probability 1, they don't act as evidence at all about the universal generalization ∀x:ϕ(x). So the powerful agent must consult the environment about more complex examples and pay a higher cost to form reasonable beliefs about the generalization.

Is it really a problem?

However, I argue that the more powerful agent is act...

One of the interesting things about AI minds (such as LLMs) is that in theory, you can turn many topics into testable science while avoiding the 'problem of old evidence', because you can now construct artificial minds and mold them like putty. They know what you want them to know, and so you can see what they would predict in the absence of knowledge, or you can install in them false beliefs to test out counterfactual intellectual histories, or you can expose them to real evidence in different orders to measure biases or path dependency in reasoning.

With humans, you can't do that because they are so uncontrolled: even if someone says they didn't know about crucial piece of evidence X, there is no way for them to prove that, and they may be honestly mistaken and have already read about X and forgotten it (but humans never really forget so X has already changed their "priors", leading to double-counting), or there is leakage. And you can't get people to really believe things at the drop of a hat, so you can't make people imagine, "suppose Napoleon had won Waterloo, how do you predict history would have changed?" because no matter how you try to participate in the spirit of the exerci...

What did Yudkowsky get right?

- The central problem of AI alignment. I am not aware of anything in subsequent work that is not already implicit in Yudkowsky's writing.

- Short timelines avant la lettre. Yudkowsky was predicting AGI in his lifetime from the very start, when most academics, observers, AI scientists, etc. considered AGI a fairytale.

- Inherent and irreducible uncertainty of forecasting, foolishness of precise predictions.

- The importance of (Pearlian) causality, Solomonoff Induction as theory of formal epistemology, Bayesian statistics, (Shannon) information theory, decision theory [especially UDT-shaped things].

- (?nanotech, ?cryonics)

- If you had a time machine to go back to 2010, you should buy bitcoin and write Harry Potter fanfiction.

Pockets of Deep Expertise

Why am I so bullish on academic outreach? Why do I keep hammering on 'getting the adults in the room'?

It's not that I think academics are all Super Smart.

I think rationalists/alignment people correctly ascertain that most professors don't have much useful to say about alignment & deep learning and often say silly things. They correctly see that much of AI progress is fueled by labs and scale, not ML academia. I am bullish on non-ML academia, especially mathematics, physics and, to a lesser extent, theoretical CS, neuroscience, and some parts of ML/AI academia. This is because, while I think 95% of academia is bad and/or useless, there are Pockets of Deep Expertise. Most questions in alignment are close to existing work in academia in some sense - but we have to make the connection!

A good example is 'sparse coding' and 'compressed sensing'. Lots of mech.interp has been rediscovering some of the basic ideas of sparse coding. But there is vast expertise in academia about these topics. We should leverage these!

Other examples are singular learning theory, computational mechanics, etc

Is Superhuman Persuasion a thing?

Sometimes I see discussions of AI superintelligence developing superhuman persuasion and extraordinary political talent.

Here are some reasons to be skeptical of the existence of 'superhuman persuasion'.

- We don't have definite examples of extraordinary political talent.

Famous politicians rose to power only once or twice. We don't have good examples of an individual succeeding repeatedly in different political environments.

Examples of very charismatic politicians can be better explained by 'the right person at the right time or place'.

- Neither do we have strong examples of extraordinary persuasion.

For instance, hypnosis is mostly explained by people wanting to be persuaded by the hypnotist. If you don't want to be persuaded, it's very hard to change your mind. There is some skill in persuasion required for sales, and salespeople are explicitly trained in it, but beyond a fairly low bar the biggest predictors of salesperson success are finding the correct audience and making a lot of attempts.

Another reason has to do with the 'intrinsic skill ceiling of a domain'.

For an agent A to have a very high skil...

Disagree on individual persuasion. Agree on mass persuasion.

Mass: I'd expect optimizing one-size-fits-all messages for achieving mass persuasion has the properties you claim: there are a few summary, macro variables that are almost-sufficient statistics for the whole microstate, which comprises the full details on individuals.

Individual: I disagree on this; there are a bunch of issues I see at the individual level. All of the below suggest to me that significantly superhuman persuasion is tractable (say within five years).

- Defining persuasion: What's the difference between persuasion and trade for an individual? Perhaps persuasion offers nothing in return? Though presumably giving strategic info to a boundedly rational agent is included? Scare quotes below to emphasize notions that might not map onto the right definition.

- Data scaling: There's an abundant amount of data available on almost all of us online. How much more persuasive can those who know you better be? I'd guess the fundamental limit (without knowing brainstates) is above your ability to 'persuade' yourself.

- Preference incoherence: An intuition pump on the limits of 'persuasion' is how far you are from having fully coherent preferences. Insofar as you don't have them, an agent which can see those incoherencies should be able to pump you, which is a kind of persuasion.
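As a toy illustration of the last point, the classic money pump can be sketched as follows. Everything here is a hypothetical setup for illustration: the items, the fee, and the rule that the agent pays a fixed fee whenever it is offered something it strictly prefers to what it holds.

```python
def money_pump(prefers, start_item, offers, fee):
    """Offer items in sequence; the agent pays `fee` to swap whenever it
    strictly prefers the offered item to the one it currently holds."""
    holding, paid = start_item, 0.0
    for offered in offers:
        if (offered, holding) in prefers:
            holding = offered
            paid += fee
    return holding, paid


# (x, y) in the set means "x is strictly preferred to y".
cyclic = {("A", "B"), ("B", "C"), ("C", "A")}    # incoherent: A > B > C > A
coherent = {("A", "B"), ("B", "C"), ("A", "C")}  # transitive: A > B > C

offers = ["C", "B", "A"] * 3  # walk the cycle three times

print(money_pump(cyclic, "A", offers, fee=1.0))    # ends holding A, having paid
print(money_pump(coherent, "A", offers, fee=1.0))  # never trades away from A
```

The agent with cyclic preferences ends up holding the item it started with but has paid on every offer, while the coherent agent cannot be exploited this way.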

Wow! I like the idea of persuasion as acting on the lack of a fully coherent preference! Something to ponder 🤔

Neural Networks have a bias towards Highly Decomposable Functions.

tl;dr Neural networks favor functions that can be "decomposed" into a composition of simple pieces in many ways - "highly decomposable functions".

Degeneracy = bias under uniform prior

[see here for why I think bias under the uniform prior is important]

Consider a space W of parameters used to implement functions, where each element w ∈ W specifies a function via some map f: W → F. Here, the set W is our parameter space, and we can think of each w as representing a specific configuration of the neural network that yields a particular function f(w).

The mapping f assigns each point w ∈ W to a function f(w). Due to redundancies and symmetries in parameter space, multiple configurations might yield the same function, forming what we call the fiber, or the "set of degenerates", of that function.

This fiber is the set of ways in which the same functional behavior can be achieved by different parameterizations. If we uniformly sample from codes, the degeneracy of a function counts how likely it is to be sampl...
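A minimal sketch of counting degeneracy by brute force, under loud assumptions: the "network" is a single thresholded unit with two binary inputs, and its three parameters each take values in {-1, 0, 1}. This toy is my own illustration, not the setup of the original post; the names `implemented_function` and `degeneracy` are hypothetical.

```python
from collections import Counter
from itertools import product

INPUTS = list(product((0, 1), repeat=2))  # all binary input pairs (x1, x2)
WEIGHT_VALUES = (-1, 0, 1)                # assumed discrete parameter grid


def implemented_function(w1, w2, b):
    """Map a parameter setting to the function it implements, represented
    as the tuple of outputs over all inputs (a truth table)."""
    return tuple(int(w1 * x1 + w2 * x2 + b > 0) for x1, x2 in INPUTS)


# Size of each fiber: how many parameter settings implement each function.
degeneracy = Counter(
    implemented_function(w1, w2, b)
    for w1, w2, b in product(WEIGHT_VALUES, repeat=3)
)

total = sum(degeneracy.values())
for truth_table, count in degeneracy.most_common():
    # Under uniform sampling of parameters, count / total is the
    # probability of landing on this function.
    print(truth_table, count, f"{count / total:.3f}")
```

Even in this tiny example the fibers have very different sizes, so uniform sampling over parameters is far from uniform over functions.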

Feature request: author-driven collaborative editing [CITATION needed] for the Good and Glorious Epistemic Commons.

Often I find myself writing claims which would ideally have citations but I don't know an exact reference, don't remember where I read it, or am simply too lazy to do the literature search.

This is bad, for scholarship is a rationalist virtue. Proper citation is key to preserving and growing the epistemic commons.

It would be awesome if my laziness were rewarded by giving me the option to add a [CITATION needed], for which others could then suggest (push) a citation, link or short remark that the author (me) could then accept. The contribution of the citer is acknowledged, of course. [Even better would be if there was some central database that would track citations & links, with crosslinking etc., like Wikipedia.]

A sort of hybrid vigor of Community Notes and Wikipedia, if you will. But it's collaborative, not adversarial*

author: blablablabla

sky is blue [citation Needed]

blabblabla

intrepid bibliographer: (push) [1] "I went outside and the sky was blue", Letters to the Empirical Review

*Community Notes on Twitter was a universally lauded concept when it first launched. Unfortunately, we are already seeing it being abused, often for cheap dunks that cannot be replied to. I still think it's a good addition to Twitter, but it does show how difficult it is to create shared, agreed-upon epistemics in an adversarial setting.

Corrupting influences

The EA AI safety strategy has had a large focus on placing EA-aligned people in A(G)I labs. The thinking was that having enough aligned insiders would make a difference on crucial deployment decisions & longer-term alignment strategy. We could say that the strategy is an attempt to corrupt the goal of pure capability advance & making money towards the goal of alignment. This fits into a larger theme that EA needs to get close to power to have real influence.

[See also the large donations EA has made to OpenAI & Anthropic. ]

Whether this strategy paid off... too early to tell.

What has become apparent is that the large AI labs & being close to power have had a strong corrupting influence on EA epistemics and culture.

- Many people in EA now think nothing of being paid Bay Area programmer salaries for research or nonprofit jobs.

- There has been a huge influx of MBA blabber being thrown around. Bizarrely EA funds are often giving huge grants to for profit organizations for which it is very unclear whether they're really EA-aligned in the long-term or just paying lip service. Highly questionable that EA should be trying to do venture

Entropy and AI Forecasting

Until relatively recently (2018-2019?) I did not seriously entertain the possibility that AGI in our lifetime was possible. This was a mistake, an epistemic error. A rational observer calmly and objectively considering the evidence for AI progress over the prior decades - especially in the light of rapid progress in deep learning - should have come to the reasonable position that AGI within 50 years was a serious possibility (>10%).

AGI plausibly arriving in our lifetime was a reasonable position. Yet this possibility was almost universally ridiculed or ignored by academics and domain experts. One can find quite funny interviews with AI experts on LessWrong from 15 years ago. The only AI expert agreeing with the Yudkowskian view of AI in our lifetime was Jürgen Schmidhuber. The other dozen AI experts denied it as unknowable or even denied the hypothetical possibility of AGI.

Yudkowsky earns a ton of Bayes points for anticipating the likely arrival of AGI in our lifetime long before deep learning took off.

**************************

We are currently experiencing a rapid AI takeoff, plausibly culminating in superintelligence by ...

I know of only two people who anticipated something like what we are seeing far ahead of time: Hans Moravec and Jan Leike.

I didn't know about Jan's AI timelines. Shane Legg also had some decently early predictions of AI around 2030 (~2007 was the earliest I knew about).

Shane Legg had a 2028 median back in 2008, see e.g. https://e-discoveryteam.com/2023/11/17/shane-leggs-vision-agi-is-likely-by-2028-as-soon-as-we-overcome-ais-senior-moments/

Yudkowsky didn't dismiss neural networks, iirc. He just said that there were a lot of different approaches to AI and from the Outside View it didn't seem clear which was promising - and plausibly on an Inside View it wasn't very clear that artificial neural networks were going to work, and work so well.

Re: alignment, I don't follow. We don't know who will be proved ultimately right on alignment, so I'm not sure how you can make such strong statements about whether Yudkowsky was right or wrong on this aspect.

We haven't really gained that many bits on this question and plausibly will not gain many until later (by which time it might be too late if Yudkowsky is right).

I do agree that Yudkowsky's statements occasionally feel too confidently and dogmatically pessimistic on the question of Doom. But I would argue that the problem is that we simply don't know well because of irreducible uncertainty - not that Doom is unlikely.

Crypticity, Reverse Epsilon Machines and the Arrow of Time?

[see https://arxiv.org/abs/0902.1209 ]

Our subjective experience of the arrow of time is occasionally suggested to be an essentially entropic phenomenon.

This sounds cool and deep but crashes headlong into the issue that the entropy rate and the excess entropy of any stationary stochastic process are time-symmetric. I find it amusing that, despite hearing this idea often from physicists and the like, this rather elementary fact apparently has not prevented their storycrafting.
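The time symmetry of the entropy rate can be checked numerically in a simple case. The sketch below assumes a stationary 3-state Markov chain with an arbitrarily chosen, non-reversible transition matrix; the matrix and helper names are illustrative assumptions. It builds the time-reversed chain and compares entropy rates, and does not cover excess entropy or general processes.

```python
import math

# Assumed example: an irreducible, aperiodic, non-reversible chain.
P = [
    [0.5, 0.4, 0.1],
    [0.1, 0.3, 0.6],
    [0.7, 0.1, 0.2],
]


def stationary(P, iters=10_000):
    """Approximate the stationary distribution by power iteration."""
    n = len(P)
    pi = [1.0 / n] * n
    for _ in range(iters):
        pi = [sum(pi[i] * P[i][j] for i in range(n)) for j in range(n)]
    return pi


def reverse_chain(P, pi):
    """Transition matrix of the time-reversed stationary chain:
    Q[i][j] = pi[j] * P[j][i] / pi[i]."""
    n = len(P)
    return [[pi[j] * P[j][i] / pi[i] for j in range(n)] for i in range(n)]


def entropy_rate(P, pi):
    """Entropy rate in bits per step: -sum_i pi_i sum_j P_ij log2 P_ij."""
    n = len(P)
    return -sum(
        pi[i] * P[i][j] * math.log2(P[i][j])
        for i in range(n)
        for j in range(n)
        if P[i][j] > 0
    )


pi = stationary(P)
Q = reverse_chain(P, pi)
h_forward = entropy_rate(P, pi)
h_reverse = entropy_rate(Q, pi)  # the reversed chain has the same stationary pi

print(f"forward entropy rate: {h_forward:.6f} bits/step")
print(f"reverse entropy rate: {h_reverse:.6f} bits/step")
```

The reversed chain has a genuinely different transition matrix, yet its entropy rate matches the forward one, which is why a purely entropic quantity cannot by itself pick out a direction of time.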

Luckily, computational mechanics provides us with a measure that is not time symmetric: the stochastic complexity C⁺ of the epsilon machine.

For any stochastic process we may also consider the epsilon machine of the reverse process, in other words the machine that predicts the past based on the future. This can be a completely different machine whose reverse stochastic complexity C⁻ is not equal to C⁺.

Some processes are easier to predict forward than backward. For example, there is considerable evidence that language is such a process. If the stochastic complexity and the reverse stochastic complexity differ we speak of a causally a...

This sounds cool and deep but crashes headlong into the issue that the entropy rate and the excess entropy of any stochastic process are time-symmetric.

It's time symmetric around a starting point t₀ of low entropy. The further t is from t₀, the more entropy you'll have, in either direction. The absolute value |t − t₀| is what matters.

In this case, t₀ is usually taken to be the big bang. So the further in time you are from the big bang, the less the universe is like a dense uniform soup with little structure that needs description, and the higher your entropy will be. That's how you get the subjective perception of temporal causality.

Presumably, this would hold to the other side of t₀ as well, if there is one. But we can't extrapolate past t₀, because close to t₀ everything gets really really energy dense, so we'd need to know how to do quantum gravity to calculate what the state on the other side might look like. So we can't check that. And the notion of time as we're discussing it here might break down at those energies anyway.

I have an embarrassing confession to make. I don't understand why P vs NP is so hard.

[I'm in good company since apparently Richard Feynman couldn't be convinced it was a serious open problem.]

I think I understand that P vs NP and its many variants, like the existence of one-way functions, are about the computational hardness of certain tasks. It is surprising that we have such strong intuitions that some tasks are computationally hard but we fail to be able to prove it!

Of course I don't think I can prove it, and I am not foolish enough to spend a significant amount of time trying to prove it. I still would like to understand the deep reasons why it's so hard to prove computational hardness results. That means I'd like to understand why certain general proof strategies are impossible or very hard.