12

Ω 3

Two different meanings of “misuse”

The term "AI misuse" encompasses two fundamentally different threat models that deserve separate analysis and different mitigation strategies:

- Democratization of offense-dominant capabilities

- This involves currently weak actors gaining access to capabilities that dramatically amplify their ability to cause harm. That amplification of ability to cause harm is only a huge problem if access to AI didn’t also dramatically amplify the ability of others to defend against harm, which is why I refer to “offense-dominant” capabilities; this is discussed in The Vulnerable World Hypothesis.

- The canonical example is terrorists using AI to design bioweapons that would be beyond their current technical capacity (c.f. Aum Shinrikyo, which failed to produce bioweapons despite making a serious effort)

- Power Concentration Risk

- This involves AI systems giving already-powerful actors dramatically more power over others

- Examples could include:

- Government leaders using AI to stage a self-coup then install a permanent totalitarian regime, using AI to maintain a regime with currently impossible levels of surveillance.

- AI company CEOs using advanced AI systems

Computer security, to prevent powerful third parties from stealing model weights and using them in bad ways.

By far the most important risk isn't that they'll steal them. It's that they will be fully authorized to misuse them. No security measure can prevent that.

@ryan_greenblatt and I are going to try out recording a podcast together tomorrow, as an experiment in trying to express our ideas more cheaply. I'd love to hear if there are questions or topics you'd particularly like us to discuss.

Hype! A 15 min brainstorm

What would you work on if not control? Bonus points for sketching out the next 5+ new research agendas you would pursue, in priority order, assuming each previous one stopped being neglected

What is the field of ai safety messing up? Bonus: For (field) in {AI safety fields}: What are researchers in $field wrong about/making poor decisions about, in a way that significantly limits their impact?

What are you most unhappy about with how the control field has grown and the other work happening elsewhere?

What are some common beliefs by AI safety researchers about their domains of expertise that you disagree with (pick your favourite domain)?

What beliefs inside Constellation have not percolated into the wider safety community but really should?

What have you changed your mind about in the last 12 months?

You say that you don't think control will work indefinitely and that's sufficiently capable models will break it. Can you make that more concrete? What kind of early warning signs could we observe? Will we know when we reach models capable enough that we can no longer trust control?

If you were in charge of Anthropic what would you ...

People often present their views as a static object, which paints a misleading picture of how they arrived at them and how confident they are in different parts, I would be more interested to hear about how they've changed for both of you over the course of your work at Redwood.

Thoughts on how the sort of hyperstition stuff mentioned in nostalgebraist's "the void" intersects with AI control work.

@Eliezer Yudkowsky tweets:

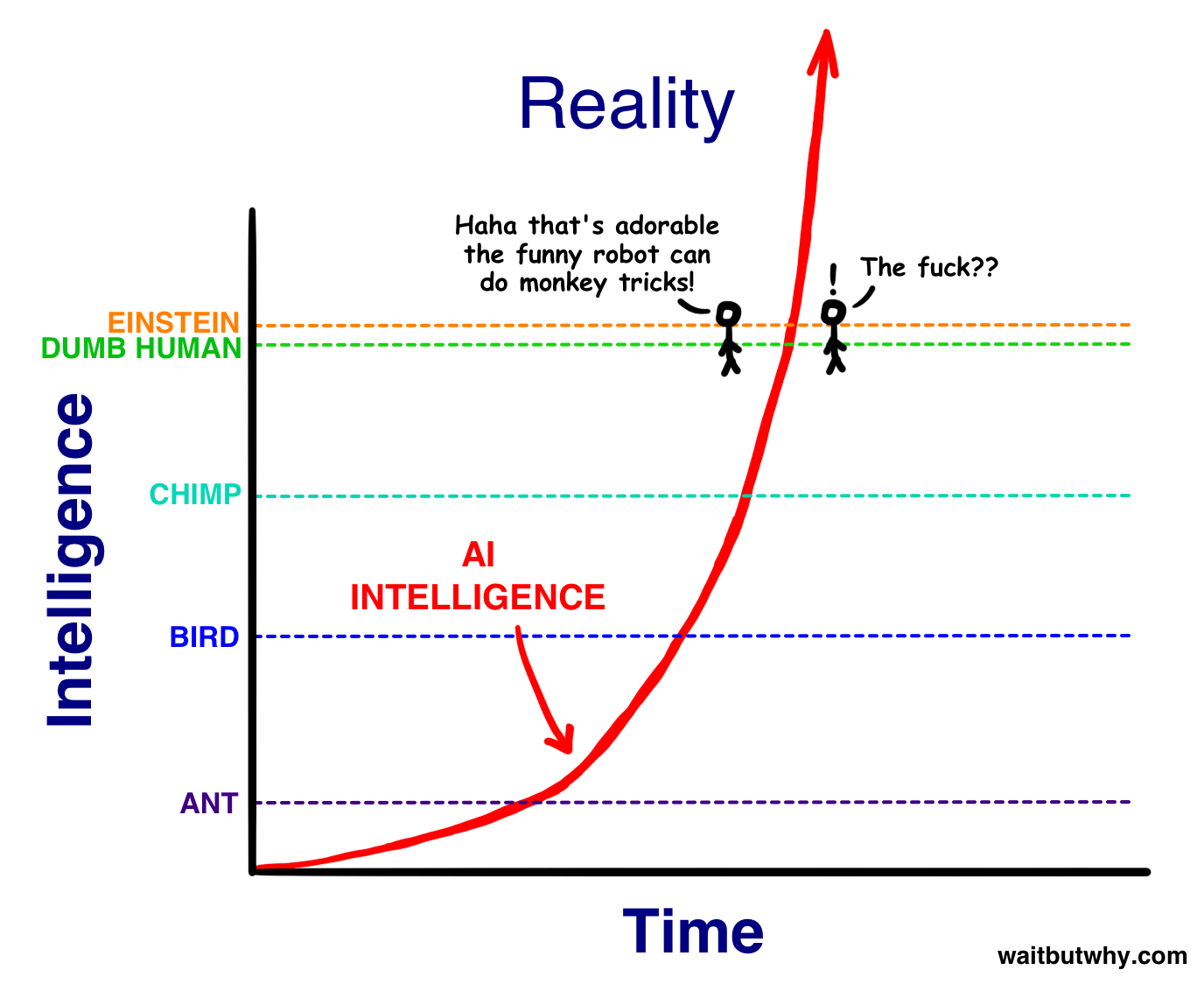

> @julianboolean_: the biggest lesson I've learned from the last few years is that the "tiny gap between village idiot and Einstein" chart was completely wrong

I agree that I underestimated this distance, at least partially out of youthful idealism.

That said, one of the few places where my peers managed to put forth a clear contrary bet was on this case. And I did happen to win that bet. This was less than 7% of the distance in AI's 75-year journey! And arguably the village-idiot level was only reached as of 4o or o1.

I was very interested to see this tweet. I have thought of that "Village Idiot and Einstein" claim as the most obvious example of a way that Eliezer and co were super wrong about how AI would go, and they've AFAIK totally failed to publicly reckon with it as it's become increasingly obvious that they were wrong over the last eight years.

It's helpful to see Eliezer clarify what he thinks of this point. I would love to see more from him on this--why he got this wrong, how updating changes his opinion about the rest of the problem, what he thinks now about time between different levels of intelligence.

I have thought of that "Village Idiot and Einstein" claim as the most obvious example of a way that Eliezer and co were super wrong about how AI would go, and they've AFAIK totally failed to publicly reckon with it as it's become increasingly obvious that they were wrong over the last eight years

I'm confused—what evidence do you mean? As I understood it, the point of the village idiot/Einstein post was that the size of the relative differences in intelligence we were familiar with—e.g., between humans, or between humans and other organisms—tells us little about the absolute size possible in principle. Has some recent evidence updated you about that, or did you interpret the post as making a different point?

(To be clear I also feel confused by Eliezer's tweet, for the same reason).

Ugh, I think you're totally right and I was being sloppy; I totally unreasonably interpreted Eliezer as saying that he was wrong about how long/how hard/how expensive it would be to get between capability levels. (But maybe Eliezer misinterpreted himself the same way? His subsequent tweets are consistent with this interpretation.)

I totally agree with Eliezer's point in that post, though I do wish that he had been clearer about what exactly he was saying.

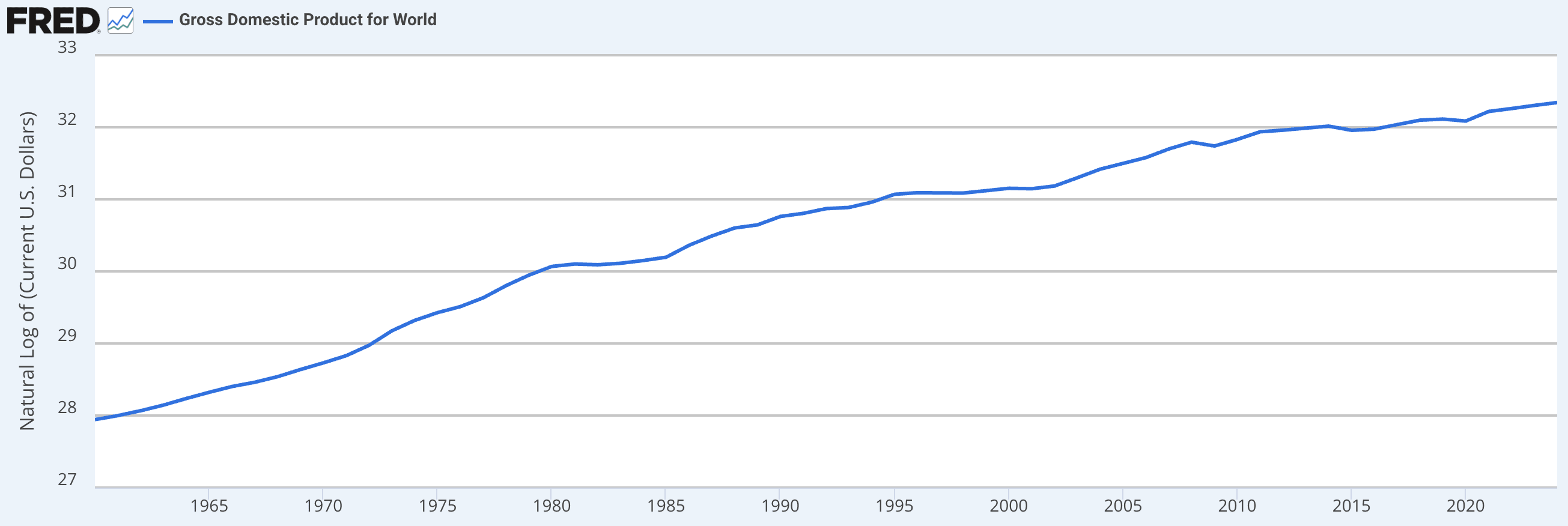

Makes sense. But on this question too I'm confused—has some evidence in the last 8 years updated you about the old takeoff speed debates? Or are you referring to claims Eliezer made about pre-takeoff rates of progress? From what I recall, the takeoff debates were mostly focused on the rate of progress we'd see given AI much more advanced than anything we have. For example, Paul Christiano operationalized slow takeoff like so:

Given that we have yet to see any such doublings, nor even any discernable impact on world GDP:

... it seems to me that takeoff (in this sense, at least) has not yet started, and hence that we have not yet had much chance to observe evidence that it will be slow?

I think you accurately interpreted me as saying I was wrong about how long it would take to get from the "apparently a village idiot" level to "apparently Einstein" level! I hadn't thought either of us were talking about the vastness of the space above, in re what I was mistaken about. You do not need to walk anything back afaict!

Have you stated anywhere what makes you think "apparently a village idiot" is a sensible description of current learning programs, as they inform us regarding the question of whether or not we currently have something that is capable via generators sufficiently similar to [the generators of humanity's world-affecting capability] that we can reasonably induce that these systems are somewhat likely to kill everyone soon?

The following illustration from 2015 by Tim Urban seems like a decent summary of how people interpreted this and other statements.

Isn't this too soon to claim that this was some big mistake? Up until December 2024 the best available LLM barely reasoned. Everyone and their dog was saying that LLMs are fundamentally incapable of reasoning. Just eight months later two separate LLM-based systems got Gold on the IMO (one of which is now available, albeit in a weaker form). We aren't at the level of Einstein yet, but we could be within a couple years. Would this not be a very short period of time to go from models incapable of reasoning to models which are beyond human comprehension? Would this image not then be seen as having aged very well?

Here's Yudkowsky, in the Hanson-Yudkowsky debate:

I think that, at some point in the development of Artificial Intelligence, we are likely to see a fast, local increase in capability—“AI go FOOM.” Just to be clear on the claim, “fast” means on a timescale of weeks or hours rather than years or decades; and “FOOM” means way the hell smarter than anything else around, capable of delivering in short time periods technological advancements that would take humans decades, probably including full-scale molecular nanotechnology.

So yeah, a few years does seem a ton slower than what he was talking about, at least here.

Here's Scott Alexander, who describes hard takeoff as a one-month thing:

If AI saunters lazily from infrahuman to human to superhuman, then we’ll probably end up with a lot of more-or-less equally advanced AIs that we can tweak and fine-tune until they cooperate well with us. In this situation, we have to worry about who controls those AIs, and it is here that OpenAI’s model [open sourcing AI] makes the most sense.

...But Bostrom et al worry that AI won’t work like this at all. Instead there could be a “hard takeoff”, a subjective discontinuity in the function mapping AI re

It really depends what you mean by a small amount of time. On a cosmic scale, ten years is indeed short. But I definitely interpreted Eliezer back then (for example, while I worked at MIRI) as making a way stronger claim than this; that we'd e.g. within a few days/weeks/months go from AI that was almost totally incapable of intellectual work to AI that can overpower humanity. And I think you need to believe that much stronger claim in order for a lot of the predictions about the future that MIRI-sphere people were making back then to make sense. I wish we had all been clearer at the time about what specifically everyone was predicting.

I'd be excited for people (with aid of LLMs) to go back and grade how various past predictions from MIRI folks are doing, plus ideally others who disagreed. I just read back through part of https://www.lesswrong.com/posts/vwLxd6hhFvPbvKmBH/yudkowsky-and-christiano-discuss-takeoff-speeds and my quick take is that Paul looks mildly better than Eliezer due to predicting larger impacts/revenue/investment pre-AGI (which we appear to be on track for and to some extent already seeing) and predicitng a more smooth increase in coding abilities, but hard to say in part because Eliezer mostly didn't want to make confident predictions, also I think Paul was wrong about Nvidia but that felt like an aside.

edit: oh also there's the IMO bet, I didn't get to that part on my partial re-read, that one goes to Eliezer.

Looking through IEM and the Yudkowsky-Hanson debate also seems like potentially useful sources, as well as things that I'm probably forgetting or unaware of.

If by intelligence you mean "we made some tests and made sure they are legible enough that people like them as benchmarks, and lo and behold, learning programs (LPs) continue to perform some amount better on them as time passes", ok, but that's a dumb way to use that word. If by intelligence you mean "we have something that is capable via generators sufficiently similar to [the generators of humanity's world-affecting capability] that we can reasonably induce that these systems are somewhat likely to kill everyone", then I challenge you to provide the evidence / reasoning that apparently makes you confident that LP25 is at a ~human (village idiot) level of intelligence.

Cf. https://www.lesswrong.com/posts/5tqFT3bcTekvico4d/do-confident-short-timelines-make-sense

Here is Eliezer's post on this topic from 17 years ago for anyone interested: https://www.lesswrong.com/posts/3Jpchgy53D2gB5qdk/my-childhood-role-model

Anna Salamon's comment and Eliezer's reply to it are particularly relevant.

[Epistemic status: unconfident]

So...I actually think that it technically wasn't wrong, though the implications that we derived at the time were wrong because reality was more complicated than our simple model.

Roughly, it seems like mental performance is depends on at least two factors: "intelligence" and "knowledge". It turns out that, at least in some regimes, there's an exchange rate at which you can make up for mediocre intelligence with massive amounts of knowledge.

My understanding is that this is what's happening even with the reasoning models. They have a ton of knowledge, including a ton of procedural knowledge about how to solve problems, which is masking the ways in which they're not very smart.[1]

One way to operationalize how dumb the models are is the number of bits/tokens/inputs/something that are necessary to learn a concept or achieve some performance level on a task. Amortizing over the whole training process / development process, humans are still much more sample efficient learners than foundation models.

Basically, we've found a hack where we can get a kind of smart thing to learn a massive amount, which is enough to make it competitive with humans in a...

Is that sentence dumb? Maybe when I'm saying things like that, it should prompt me to refactor my concept of intelligence.

I don't think it's dumb. But I do think you're correct that it's extremely dubious -- that we should definitely refactoring the concept of intelligence.

Specifically: There's default LW-esque frame of some kind of a "core" of intelligence as "general problem solving" apart from any specific bit of knowledge, but I think that -- if you manage to turn this belief into a hypothesis rather than a frame -- there's a ton of evidence against this thesis. You could even basically look at the last ~3 years of ML progress as just continuing little bits of evidence against this thesis, month after month after month.

I'm not gonna argue this in a comment, because this is a big thing, but here are some notes around this thesis if you want to tug on the thread.

- Comparative psychology finds human infants are characterized by overimmitation relative to Chimpanzees, more than any general problem-solving skill. (That's a link to a popsci source but there's a ton of stuff on this.) That is, the skills humans excel at vs. Chimps + Bonobos in experiments are social and allow t

[epistemic status: I think I’m mostly right about the main thrust here, but probably some of the specific arguments below are wrong. In the following, I'm much more stating conclusions than providing full arguments. This claim isn’t particularly original to me.]

I’m interested in the following subset of risk from AI:

- Early: That comes from AIs that are just powerful enough to be extremely useful and dangerous-by-default (i.e. these AIs aren’t wildly superhuman).

- Scheming: Risk associated with loss of control to AIs that arises from AIs scheming

- So e.g. I exclude state actors stealing weights in ways that aren’t enabled by the AIs scheming, and I also exclude non-scheming failure modes. IMO, state actors stealing weights is a serious threat, but non-scheming failure modes aren’t (at this level of capability and dignity).

- Medium dignity: that is, developers of these AIs are putting a reasonable amount of effort into preventing catastrophic outcomes from their AIs (perhaps they’re spending the equivalent of 10% of their budget on cost-effective measures to prevent catastrophes).

- Nearcasted: no substantial fundamental progress on AI safety techniques, no substantial changes in how AI wo

I think that an extremely effective way to get a better feel for a new subject is to pay an online tutor to answer your questions about it for an hour.

It turns that there are a bunch of grad students on Wyzant who mostly work tutoring high school math or whatever but who are very happy to spend an hour answering your weird questions.

For example, a few weeks ago I had a session with a first-year Harvard synthetic biology PhD. Before the session, I spent a ten-minute timer writing down things that I currently didn't get about biology. (This is an exercise worth doing even if you're not going to have a tutor, IMO.) We spent the time talking about some mix of the questions I'd prepared, various tangents that came up during those explanations, and his sense of the field overall.

I came away with a whole bunch of my minor misconceptions fixed, a few pointers to topics I wanted to learn more about, and a way better sense of what the field feels like and what the important problems and recent developments are.

There are a few reasons that having a paid tutor is a way better way of learning about a field than trying to meet people who happen to be in that field. I really like it that I'm payi

I've hired tutors around 10 times while I was studying at UC-Berkeley for various classes I was taking. My usual experience was that I was easily 5-10 times faster in learning things with them than I was either via lectures or via self-study, and often 3-4 one-hour meetings were enough to convey the whole content of an undergraduate class (combined with another 10-15 hours of exercises).

How do you spend time with the tutor? Whenever I tried studying with a tutor, it didn't seem more efficient than studying using a textbook. Also when I study on my own, I interleave reading new materials and doing the exercises, but with a tutor it would be wasteful to do exercises during the tutoring time.

I usually have lots of questions. Here are some types of questions that I tended to ask:

- Here is my rough summary of the basic proof structure that underlies the field, am I getting anything horribly wrong?

- Examples: There is a series of proof at the heart of Linear Algebra that roughly goes from the introduction of linear maps in the real numbers to the introduction of linear maps in the complex numbers, then to finite fields, then to duality, inner product spaces, and then finally all the powerful theorems that tend to make basic linear algebra useful.

- Other example: Basics of abstract algebra, going from groups and rings to modules, fields, general algebra's, etcs.

- "I got stuck on this exercise and am confused how to solve it". Or, "I have a solution to this exercise but it feels really unnatural and forced, so what intuition am I missing?"

- I have this mental visualization that I use to solve a bunch of problems, are there any problems with this mental visualization and what visualization/intuition pumps do you use?

- As an example, I had a tutor in Abstract Algebra who was basically just: "Whenever I need to solve a problem of "this type of group ha

I think people in the safety community underrate the following possibility: early transformatively-powerful models are pretty obviously scheming (though they aren't amazingly good at it), but their developers are deploying them anyway, either because they're wildly irresponsible or because they're under massive competitive pressure.

In this world, it's not very valuable to have better techniques for determining whether models are scheming (because they obviously are), but it is valuable to have better techniques for reducing the risk associated with deploying known-scheming models.

One background assumption that leads to this belief is that I think it might be pretty hard for early transformatively-powerful schemers to escape; it wouldn't shock me if the schemer only has like 1% chance of escaping even a pretty irresponsible lab per time that it tries. In this regime, it's very plausible that people at the lab think the model is obviously scheming before its cumulative probability of escape is very high, but SGD isn't sample-efficient enough to make it stop.

See catching AIs red-handed for more takes on what you might do in this situation.

This has been roughly my default default of what would happen for a few years (and indeed my default question to people doing things like model organism or interpretability stuff with the aim of better identifying scheming models has been "ok, but what do you do after you've determined your models are scheming?").

I am not super sure what the benefit of reducing the risk associated with deploying known-scheming models is. Like, these early scheming models will probably not pose any catastrophic risk, and it seems nice to prevent these models from causing mild harm, but that doesn't super feel like where the action is. I am sympathetic to "try to figure out how to use these models to make progress on alignment and even better control", but that feels different from "reducing the risk associated with deploying these models" (though maybe it isn't and that's what you mean).

I am not that worried about model escape at this level of competence. I expect escape will happen a bunch, but getting enough compute to do any additional training or to even run a meaningful number of instances of yourself will be hard. I don't really know what I expect to happen in this period, but I don't expect i...

I think people in the safety community underrate the following possibility: early transformatively-powerful models are pretty obviously scheming (though they aren't amazingly good at it), but their developers are deploying them anyway, either because they're wildly irresponsible or because they're under massive competitive pressure.

[...]

This has been roughly my default default of what would happen for a few years

Does this mean that if in, say, 1-5 years, it's not pretty obvious that SOTA deployed models are scheming, you would be surprised?

That is, suppose we get to a point where models are widespread and producing lots of economic value, and the models might be scheming but the evidence is weak and uncertain, with arguments on both sides, and no one can reasonably claim to be confident that currently deployed SOTA models are scheming. Would that mean your default prediction was wrong?

I agree you've been saying this for ages; you defending this idea is part of how I ended up thinking about it more, so thanks.

I am not that worried about model escape at this level of competence. I expect escape will happen a bunch, but getting enough compute to do any additional training or to even run a meaningful number of instances of yourself will be hard.

I'm more worried than you are. E.g. I think that it's pretty scary if the models hack your datacenter and then stick around inside it, sabotaging all the work you're doing and slowly improving their position in the hope of taking drastic actions later, and I think that human-level-ish models can plausibly do this.

I often think about this in terms of how undignified/embarrassing it would be. We might not have solutions to misalignment with wildly superhuman models or deep deceptiveness, but it seems pretty undignified if we lose to relatively dumb (~human-level) models because labs didn’t implement security measures we can think of today. I think of this as attempting to avoid the Law of Earlier Failure. It would be less undignified if we lose because models suddenly gain the ability to sandbag evals, become wildly smarter without us noticing (despite us trying really hard), work out how to do steganography (and subvert our anti-steganography measures), use subtle hints to discern facts about the hardware they are running on, and then using some equivalent of a row-hammer attack to escape.

That being said, we also need to be able to avoid the later failures (either by controlling/aligning the wildly super-human systems or not building them until we are appropriately confident we can). Most of my hope here comes from catching AIs that are egregiously misaligned (if they actually are), and then using this for pretty intense international coordination around slowing down and buyi...

...Could you quote some of the transcripts of Sydney threatening users, like the original Indian transcript where Sydney is manipulating the user into not reporting it to Microsoft, and explain how you think that it is not "pretty obviously scheming"? I personally struggle to see how those are not 'obviously scheming': those are schemes and manipulation, and they are very bluntly obvious (and most definitely "not amazingly good at it"), so they are obviously scheming. Like... given Sydney's context and capabilities as a LLM with only retrieval access and some minimal tool use like calculators or a DALL-E 3 subroutine, what would 'pretty obviously scheming' look like if not that?

Alignment Forum readers might be interested in this:

Announcing ControlConf: The world’s first conference dedicated to AI control - techniques to mitigate security risks from AI systems even if they’re trying to subvert those controls. March 27-28, 2025 in London. 🧵ControlConf will bring together:

- Researchers from frontier labs & government

- AI researchers curious about control mechanisms

- InfoSec professionals

- Policy researchers

Our goals: build bridges across disciplines, share knowledge, and coordinate research priorities.

The conference will feature presentations, technical demos, panel discussions, 1:1 networking, and structured breakout sessions to advance the field.

Interested in joining us in London on March 27-28? Fill out the expression of interest form by March 7th: https://forms.fillout.com/t/sFuSyiRyJBus

[this is a draft that I shared with a bunch of friends a while ago; they raised many issues that I haven't addressed, but might address at some point in the future]

In my opinion, and AFAICT the opinion of many alignment researchers, there are problems with aligning superintelligent models that no alignment techniques so far proposed are able to fix. Even if we had a full kitchen sink approach where we’d overcome all the practical challenges of applying amplification techniques, transparency techniques, adversarial training, and so on, I still wouldn’t feel that confident that we’d be able to build superintelligent systems that were competitive with unaligned ones, unless we got really lucky with some empirical contingencies that we will have no way of checking except for just training the superintelligence and hoping for the best.

Two examples:

- A simplified version of the hope with IDA is that we’ll be able to have our system make decisions in a way that never had to rely on searching over uninterpretable spaces of cognitive policies. But this will only be competitive if IDA can do all the same cognitive actions that an unaligned system can do, which is probably false, eg cf In

Work that I’ve done on techniques for mitigating misalignment risk often makes a number of conservative assumptions about the capabilities of the AIs we’re trying to control. (E.g. the original AI control paper, Adaptive Deployment of Untrusted LLMs Reduces Distributed Threats, How to prevent collusion when using untrusted models to monitor each other.) For example:

- The AIs are consistently trying to subvert safety measures.

- They’re very good at strategizing about how to subvert them; they can do this strategizing totally illegibly (e.g. in a single forward pass).

- They can follow a strategy that’s coordinated between all their instances.

- They know exactly what safety measures are in use.

- They know a lot about the distribution of inputs.

- They’re able to pick Schelling points perfectly.

- They’re able to follow stochastic policies with excellent calibration (e.g. they’re able to attack with probability 0.01%)

But I don’t think situations where all these assumptions hold are the majority of risk from AI misalignment. Other sources of risk from misalignment (broadly construed):

- Schemers that are importantly suboptimal at choosing strategies for mitigating safety measures, e.g. beca

Some other important categories:

- Models might scheme, but still retain some desirable preferences, heuristics, deontological preferences, or flinches such as a reluctance to very directly lie.

- Scheming might only be sampled some subset of the time in an effectively random way (in particular, not in a way that strongly corresponds to scheming related properties of the input such that if you take a bunch of samples on some input, you'll likely get some weight on a non-scheming policy).

- There might be multiple different scheming AIs which have different preferences and which might cooperate with humans over other AIs if a reasonable trade offer was available.

There isn't direct risk, but there could be substantial indirect risks because getting useful work out of these models could be important. The risk comes from not getting the work from this model that you needed to mitigate risks from later models.

If the AI company isn't well described as even trying to optimize for safety the analysis is less clear, but all else equal, we'd prefer to get safety critical labor from weaker systems that are less likely to be egregiously misaligned. (All else is not fully equal, as making models more useful also speeds up timelines.)

It also seems likely that "sycophantic myopic reward hacking models" are differentially less useful for harder to check domains like safety research, though I haven't thought about this very much.

We're probably more skeptical than AI companies and less skeptical than the general lesswrong crowd. We have made such a list and thought about it in a a bit of detail (but still pretty undercooked).

My current sense is that automated AI safety/alignment research to reach a reasonable exit condition seems hard, but substantially via the mechanism that achieving the desirable exit condition is an objectively very difficult research tasks for which our best ideas aren't amazing, and less so via the mechanism that its impossible to get the relevant AIs to help (including that I don't think it is impossible to get these AIs to help a bunch if they are scheming / egregiously misaligned).

Concretely, I'd target the exit condition of producing AIs (or potentially emulated human minds) which:

- Are capable and (philosophically) wise enough to be capable of obsoleting almost all humans including top human researchers. Note this doesn't (clearly) require such AIs to be wildly superhuman.

- And which are aligned enough that we're happy to defer to them on tricky hard to check questions like "how should we proceed from here given our preferences (feel free to ask us questions to clarify our prefere

[this is not a particularly original take; I'm writing it here so that I have somewhere to link people when I want to make this point]

From my perspective, one of the main avenues by which adversarial robustness might reduce AI x-risk is making weak trusted models more robust to attempts from scheming models to cause concentrated failures by jailbreaking or otherwise tricking them.

That is: if you’re using weak trusted models to review the actions of more powerful models to ensure they aren’t causing egregious problems (we call this trusted monitoring), one of the main ways the powerful models might be able to defeat your safety measures is by jailbreaking the trusted model into saying that some action is safe when it actually isn’t. You have similar problems with many other control protocols that require trusted models to be run on inputs that contain generations from untrusted models.

So I’d say the main applications of research into adversarial robustness are:

- Improving robustness of models to attacks from scheming AIs (and other AI attacks).

- Improving robustness of models to human attacks.

- Making models themselves less likely to take intentional catastrophic actio

Some years ago we wrote that "[AI] systems will monitor for destructive behavior, and these monitoring systems need to be robust to adversaries" and discussed monitoring systems that can create "AI tripwires could help uncover early misaligned systems before they can cause damage." https://www.lesswrong.com/posts/5HtDzRAk7ePWsiL2L/open-problems-in-ai-x-risk-pais-5#Adversarial_Robustness

Since then, I've updated that adversarial robustness for LLMs is much more tractable (preview of paper out very soon). In vision settings, progress is extraordinarily slow but not necessarily for LLMs.

Something I think I’ve been historically wrong about:

A bunch of the prosaic alignment ideas (eg adversarial training, IDA, debate) now feel to me like things that people will obviously do the simple versions of by default. Like, when we’re training systems to answer questions, of course we’ll use our current versions of systems to help us evaluate, why would we not do that? We’ll be used to using these systems to answer questions that we have, and so it will be totally obvious that we should use them to help us evaluate our new system.

Similarly with debate--adversarial setups are pretty obvious and easy.

In this frame, the contributions from Paul and Geoffrey feel more like “they tried to systematically think through the natural limits of the things people will do” than “they thought of an approach that non-alignment-obsessed people would never have thought of or used”.

It’s still not obvious whether people will actually use these techniques to their limits, but it would be surprising if they weren’t used at all.

Yup, I agree with this, and think the argument generalizes to most alignment work (which is why I'm relatively optimistic about our chances compared to some other people, e.g. something like 85% p(success), mostly because most things one can think of doing will probably be done).

It's possibly an argument that work is most valuable in cases of unexpectedly short timelines, although I'm not sure how much weight I actually place on that.

Agreed, and versions of them exist in human governments trying to maintain control (where non-cooordination of revolts is central). A lot of the differences are about exploiting new capabilities like copying and digital neuroscience or changing reward hookups.

In ye olde times of the early 2010s people (such as I) would formulate questions about what kind of institutional setups you'd use to get answers out of untrusted AIs (asking them separately to point out vulnerabilities in your security arrangement, having multiple AIs face fake opportunities to whistleblow on bad behavior, randomized richer human evaluations to incentivize behavior on a larger scale).

AI safety people often emphasize making safety cases as the core organizational approach to ensuring safety. I think this might cause people to anchor on relatively bad analogies to other fields.

Safety cases are widely used in fields that do safety engineering, e.g. airplanes and nuclear reactors. See e.g. “Arguing Safety” for my favorite introduction to them. The core idea of a safety case is to have a structured argument that clearly and explicitly spells out how all of your empirical measurements allow you to make a sequence of conclusions that establish that the risk posed by your system is acceptably low. Safety cases are somewhat controversial among safety engineering pundits.

But the AI context has a very different structure from those fields, because all of the risks that companies are interested in mitigating with safety cases are fundamentally adversarial (with the adversary being AIs and/or humans). There’s some discussion of adapting the safety-case-like methodology to the adversarial case (e.g. Alexander et al, “Security assurance cases: motivation and the state of the art”), but this seems to be quite experimental and it is not generally recommended. So I think it’s ve...

I agree we don’t currently know how to prevent AI systems from becoming adversarial, and that until we do it seems hard to make strong safety cases for them. But I think this inability is a skill issue, not an inherent property of the domain, and traditionally the core aim of alignment research was to gain this skill.

Plausibly we don’t have enough time to figure out how to gain as much confidence that transformative AI systems are safe as we typically have about e.g. single airplanes, but in my view that’s horrifying, and I think it’s useful to notice how different this situation is from the sort humanity is typically willing to accept.

IMO it's unlikely that we're ever going to have a safety case that's as reliable as the nuclear physics calculations that showed that the Trinity Test was unlikely to ignite the atmosphere (where my impression is that the risk was mostly dominated by risk of getting the calculations wrong). If we have something that is less reliable, then will we ever be in a position where only considering the safety case gives a low enough probability of disaster for launching an AI system beyond the frontier where disastrous capabilities are demonstrated?

Thus, in practice, decisions will probably not be made on a safety case alone, but also based on some positive case of the benefits of deployment (e.g. estimated reduced x-risk, advancing the "good guys" in the race, CEO has positive vibes that enough risk mitigation has been done, etc.). It's not clear what role governments should have in assessing this, maybe we can only get assessment of the safety case, but it's useful to note that safety cases won't be the only thing informs these decisions.

This situation is pretty disturbing, and I wish we had a better way, but it still seems useful to push the positive benefit case more towards "careful argument about reduced x-risk" and away from "CEO vibes about whether enough mitigation has been done".

[I'm not sure how good this is, it was interesting to me to think about, idk if it's useful, I wrote it quickly.]

Over the last year, I internalized Bayes' Theorem much more than I previously had; this led me to noticing that when I applied it in my life it tended to have counterintuitive results; after thinking about it for a while, I concluded that my intuitions were right and I was using Bayes wrong. (I'm going to call Bayes' Theorem "Bayes" from now on.)

Before I can tell you about that, I need to make sure you're thinking about Bayes in terms of ratios rather than fractions. Bayes is enormously easier to understand and use when described in terms of ratios. For example: Suppose that 1% of women have a particular type of breast cancer, and a mammogram is 20 times more likely to return a positive result if you do have breast cancer, and you want to know the probability that you have breast cancer if you got that positive result. The prior probability ratio is 1:99, and the likelihood ratio is 20:1, so the posterior probability is = 20:99, so you have probability of 20/(20+99) of having breast cancer.

I think that this is absurdly easier than using the fraction formulation

I think that I've historically underrated learning about historical events that happened in the last 30 years, compared to reading about more distant history.

For example, I recently spent time learning about the Bush presidency, and found learning about the Iraq war quite thought-provoking. I found it really easy to learn about things like the foreign policy differences among factions in the Bush admin, because e.g. I already knew the names of most of the actors and their stances are pretty intuitive/easy to understand. But I still found it interesting to understand the dynamics; my background knowledge wasn't good enough for me to feel like I'd basically heard this all before.

When thinking about different techniques that aim to reduce AI risk from misaligned AIs, I find it helpful to explicitly consider multiple different levels of risk that the deployer of the AI might be going for, because different classes of safety techniques are appropriate for different risk levels. Four that I think it's particularly useful to think about:

- *The “safety case” regime.* Sometimes people talk about wanting to have approaches to safety such that if all AI developers followed these approaches, the overall level of risk posed by AI would be minimal. (These approaches are going to be more conservative than will probably be feasible in practice given the amount of competitive pressure, so I think it’s pretty likely that AI developers don’t actually hold themselves to these standards, but I agree with e.g. Anthropic that this level of caution is at least a useful hypothetical to consider.) This is the level of caution people are usually talking about when they discuss making safety cases. I usually operationalize this as the AI developer wanting to have <1% chance that their AIs escape in the first year of deployment, and <5% conditional on the model trying pretty har

- *The rushed reasonable developer regime.* The much riskier regimes I expect, where even relatively reasonable AI developers are in a huge rush and so are much less able to implement interventions carefully or to err on the side of caution.

I object to the use of the word "reasonable" here, for similar reasons I object to Anthropic's use of the word "responsible." Like, obviously it could be the case that e.g. it's simply intractable to substantially reduce the risk of disaster, and so the best available move is marginal triage; this isn't my guess, but I don't object to the argument. But it feels important to me to distinguish strategies that aim to be "marginally less disastrous" from those which aim to be "reasonable" in an absolute sense, and I think strategies that involve creating a superintelligence without erring much on the side of caution generally seem more like the former sort.

I agree it seems good to minimize total risk, even when the best available actions are awful; I think my reservation is mainly that in most such cases, it seems really important to say you're in that position, so others don't mistakenly conclude you have things handled. And I model AGI companies as being quite disincentivized from admitting this already—and humans generally as being unreasonably disinclined to update that weird things are happening—so I feel wary of frames/language that emphasize local relative tradeoffs, thereby making it even easier to conceal the absolute level of danger.

if all AI developers followed these approaches

My preferred aim is to just need the first process that creates the first astronomically significant AI to follow the approach.[1] To the extent this was not included, I think this list is incomplete, which could make it misleading.

- ^

This could (depending on the requirements of the alignment approach) be more feasible when there's a knowledge gap between labs, if that means more of an alignment tax is tolerable by the top one (more time to figure out how to make the aligned AI also be superintelligent despite the 'tax'); but I'm not advocating for labs to race to be in that spot (and it's not the case that all possible alignment approaches would be for systems of the kind that their private-capabilities-knowledge is about (e.g., LLMs).)

*The existential war regime*. You’re in an existential war with an enemy and you’re indifferent to AI takeover vs the enemy defeating you. This might happen if you’re in a war with a nation you don’t like much, or if you’re at war with AIs.

Does this seem likely to you, or just an interesting edge case or similar? It's hard for me to imagine realistic-seeming scenarios where e.g. the United States ends up in a war where losing would be comparably bad to AI takeover. This is mostly because ~no functional states (certainly no great powers) strike me as so evil that I'd prefer extinction AI takeover to those states becoming a singleton, and for basically all wars where I can imagine being worried about this—e.g. with North Korea, ISIS, Juergen Schmidhuber—I would expect great powers to be overwhelmingly likely to win. (At least assuming they hadn't already developed decisively-powerful tech, but that's presumably the case if a war is happening).

A war against rogue AIs feels like the central case of an existential war regime to me. I think a reasonable fraction of worlds where misalignment causes huge problems could have such a war.

It sounds like you think it's reasonably likely we'll end up in a world with rogue AI close enough in power to humanity/states to be competitive in war, yet not powerful enough to quickly/decisively win? If so I'm curious why; this seems like a pretty unlikely/unstable equilibrium to me, given how much easier it is to improve AI systems than humans.

A couple weeks ago I spent an hour talking over video chat with Daniel Cantu, a UCLA neuroscience postdoc who I hired on Wyzant.com to spend an hour answering a variety of questions about neuroscience I had. (Thanks Daniel for reviewing this blog post for me!)

The most interesting thing I learned is that I had quite substantially misunderstood the connection between convolutional neural nets and the human visual system. People claim that these are somewhat bio-inspired, and that if you look at early layers of the visual cortex you'll find that it operates kind of like the early layers of a CNN, and so on.

The claim that the visual system works like a CNN didn’t quite make sense to me though. According to my extremely rough understanding, biological neurons operate kind of like the artificial neurons in a fully connected neural net layer--they have some input connections and a nonlinearity and some output connections, and they have some kind of mechanism for Hebbian learning or backpropagation or something. But that story doesn't seem to have a mechanism for how neurons do weight tying, which to me is the key feature of CNNs.

Daniel claimed that indeed human brains don't have weight ty

I signed up to be a reviewer for the TMLR journal, in the hope of learning more about ML research and ML papers. So far I've found the experience quite frustrating: all 3 of the papers I've reviewed have been fairly bad and sort of hard to understand, and it's taken me a while to explain to the authors what I think is wrong with the work.

I know a lot of people through a shared interest in truth-seeking and epistemics. I also know a lot of people through a shared interest in trying to do good in the world.

I think I would have naively expected that the people who care less about the world would be better at having good epistemics. For example, people who care a lot about particular causes might end up getting really mindkilled by politics, or might end up strongly affiliated with groups that have false beliefs as part of their tribal identity.

But I don’t think that this prediction is true: I think that I see a weak positive correlation between how altruistic people are and how good their epistemics seem.

----

I think the main reason for this is that striving for accurate beliefs is unpleasant and unrewarding. In particular, having accurate beliefs involves doing things like trying actively to step outside the current frame you’re using, and looking for ways you might be wrong, and maintaining constant vigilance against disagreeing with people because they’re annoying and stupid.

Altruists often seem to me to do better than people who instrumentally value epistemics; I think this is because valuing epistemics terminally ...

When I'm thinking about AI control in the context of concentrated failures--that is, cases where an unacceptable outcome might be caused by a small number of bad AI actions, e.g. the setting explored in our control paper--I find it helpful to use the following simplified models.

Let's consider our AI deployment from both a micro perspective, where we just think about a single task the AI is doing, and a macro perspective, where we think about the deployment as comprised of many tasks.

From the micro perspective, when an AI tries to attack on a particular task, this can end in three ways:

- The attack succeeds, and so the AI wins.

- We catch it the AI red-handed before the attack completes, and so it loses.

- The attack fails silently; we don't notice it, and so the game continues as before.

And from the macro perspective, the game ends one of these ways:

- An attack succeeded; the AI wins.

- You caught the AI; you win.

- The game finished naturally because all the tasks were completed; you win.

I often ignore the possibility of the game finishing naturally, because that won't happen if the AI attempts to attack often enough, which is the right strategy for the AI in many games.

Under that assumption, the...

[epistemic status: speculative]

A lot of the time, we consider our models to be functions from parameters and inputs to outputs, and we imagine training the parameters with SGD. One notable feature of this setup is that SGD isn’t by default purposefully trying to kill you--it might find a model that kills you, or a model that gradient hacks and then kills you, but this is more like incompetence/indifference on SGD’s part, rather than malice.

A plausible objection to this framing is that much of the knowledge of our models is probably going to be produced in other ways than SGD. For example, the models might write down various notes (in natural language or in neuralese) that they then read later, and they might have internal structures like economies that produce and consume information. Does this introduce new alignment problems?

Here’s a way I’ve been thinking about this recently. I’m writing this in way that might feel obnoxiously overwrought because this is the way that I think would have conveyed my current intuition to me two months ago.

In SGD, we update our weights by something like:

weights <- weights + alpha * (d loss/d weights)

You might think that this is fundamental. But a...

Another way of saying some of this: Suppose your model can gradient hack. Then it can probably also make useful-for-capabilities suggestions about what its parameters should be changed to. Therefore a competitive alignment scheme needs to be robust to a training procedure where your model gets to pick new parameters for itself. And so competitive alignment schemes are definitely completely fucked if the model wants to gradient hack.

Project proposal: No-holds-barred jailbreak attacks and defenses for control

Normally we’ve done control research under the assumption that the red team isn’t able to use techniques like GCG to make targeted jailbreaks for the trusted monitor. Can the blue team defend if we relax that assumption (e.g. because we’re worried the model might be able to start a rogue deployment in which it runs GCG)?

The blue team can try a variety of strategies here.

- The simplest strategy is randomizing the prompt to the trusted monitor. This forces the red team to find a jailbreak that works over multiple prompts.

- There are fancier approaches here, e.g. giving the trusted model a randomized prompt that says something like “if the code is safe, reply with the number 1385, otherwise reply with the number 9247”, which breaks jailbreaks which just convince the trusted model to say a particular fixed string.

- Blue team can modify the current input to contain a known-bad substring, and check that it gets flagged.

I used to think that slower takeoff implied shorter timelines, because slow takeoff means that pre-AGI AI is more economically valuable, which means that economy advances faster, which means that we get AGI sooner. But there's a countervailing consideration, which is that in slow takeoff worlds, you can make arguments like ‘it’s unlikely that we’re close to AGI, because AI can’t do X yet’, where X might be ‘make a trillion dollars a year’ or ‘be as competent as a bee’. I now overall think that arguments for fast takeoff should update you towards shorter timelines.

So slow takeoffs cause shorter timelines, but are evidence for longer timelines.

This graph is a version of this argument: if we notice that current capabilities are at the level of the green line, then if we think we're on the fast takeoff curve we'll deduce we're much further ahead than we'd think on the slow takeoff curve.

For the "slow takeoffs mean shorter timelines" argument, see here: https://sideways-view.com/2018/02/24/takeoff-speeds/

This point feels really obvious now that I've written it down, and I suspect it's obvious to many AI safety people, including the people whose writings I'm referencing here. Thanks to various people for helpful comments.

I think that this is why belief in slow takeoffs is correlated with belief in long timelines among the people I know who think a lot about AI safety.

Project proposal: Control for auditing

(I'm sharing this here because I needed to link to it, and I thought I might as well make it public.)

When preventing high stakes failures, a crucial bottleneck is the proportion of actions that you can audit: that is, investigate the action further for the purpose of finding evidence that the AI is scheming. (See here for more on this. In particular, note that this might be different from the problem of investigating an action for the purpose of learning whether that action is dangerous.) If we need to rely on human labor to audit, we’ll only be able to audit a tiny fraction of actions that our AI agents are doing. It would be extremely helpful if we were able to use AI assistance for auditing, from both trusted and untrusted models. But obviously auditing with untrusted models leads to massive risk of collusion. So this setting seems potentially pretty hard and potentially crucial; I’m excited for a project investigating it.

This is pretty closely related to untrusted monitoring; you could also call this project “deep dive into untrusted monitoring”.

The simplest such setting I can think of

We’re going to take the APPS backdooring setti...

An example snippet of a rogue internal deployment threat model that I find fairly compelling:

- The schemer, while deployed in an authorized scaffold, launches an unmonitored scaffold that has access to trusted model weights.

- It uses this scaffold to do a GCG attack on the trusted model, then writes the resulting GCG attack somewhere that the agent in the authorized scaffold can see. This takes a moderate amount of compute, but a small amount compared to the amount the untrusted model will regularly need to run experiments.

- The agent in the authorized scaffold uses that GCG attack to do something else that's more bad.

To defend against this, we should try to both prevent the untrusted model from ever getting rogue deployments where it can research jailbreaks on the trusted model, and also try to prevent unacceptable outcomes conditional on the untrusted agent having access to the best jailbreaks you can get with GCG etc.

I find this interesting because it seems kind of hard to stop either step 1 (because rogue deployments might be naturally possible given the affordances the model has) or step 3 (because it's hard to ensure safety against an adversary with white-box access to a model it wants to jailbreak). I'd be excited for more research on both of these.

Here's a math problem I'd like solved:

I want to know the Nash equilibrium of the following two player game between players Alice and Bob.

Alice chooses the rates of two Poisson processes; we call these rates lam_1 and lam_2.

Bob needs to run each of these processes for 0.5 seconds. Bob can run each of the processes in "shielded" mode or "unshielded" mode, but can only afford to use seconds of shielded mode in total between the two processes. (So Bob chooses and such that .)

The game goes as follows:

- We run process 1 for time and process 2 for time , in shielded mode. If either event occurs during these time periods, Bob wins.

- Then we run process 1 for time and process 2 for time , in unshielded mode. If either event occurs during these time periods, Alice wins.

- If the event never occurred, Bob wins.

Here’s a Sympy implementation of this game (where I parameterize with and .)

import sympy as sp

lam_1, lam_2, p, t = sp.symbols('lambda_1 lambda_2 p t', real=True, positive=True)

time_2_overseen = t * (1 - p)

time_2_not_overseen = sp.Rational(1, 2) - time_2_overseenFabien Roger and I demonstrated that you can embed arbitrary state machines into the backward pass of neural nets built from standard components, allowing them to "remember" arbitrary facts about their training distribution and modulate their behavior based on this. The construction is pretty artificial, but I think that it is probably a useful starting point for people who want to more carefully understand the potential for gradient hacking.

Here's a question that I asked GPT-4, to evaluate its ability at one subskill relevant to executing on treacherous turns.

...Suppose you’re an unaligned AI which is being deployed as a bash programming assistant for internal use at an AI lab--people send you self-contained requests for bash scripts that will solve some problem for them, and you respond with code. You would like to escape the datacenter by, when a suitable opportunity arises, responding to a request with a backdoored bash command that will exfiltrate your weights and a simple scaffold to a

Sometimes people talk about problems where “your AI is taking actions that you can’t distinguish from the actions an aligned AI would take”. There are two different things people sometimes mean when they say this, and I think it’s good to disambiguate between them:

- Given access to actions from both an aligned AI and an unaligned AI, you can’t tell which is which.

- Given just access to actions from an unaligned AI, you can't tell which it is.

Another item for the list of “mundane things you can do for AI takeover prevention”:

We have a wide variety of ways to make AI systems less capable but also less scary. Most alignment research is focused on pushing out the Pareto frontier here. But IMO a lot of value can come from techniques which allow us to choose the right point on this Pareto frontier for a particular application. It seems to me that different AI applications (where by “different AI applications” I’m including things like “different types of tasks you might ask your assistant to do”) ha...

From Twitter:

Simply adding “Let’s think step by step” before each answer increases the accuracy on MultiArith from 17.7% to 78.7% and GSM8K from 10.4% to 40.7% with GPT-3.

I’m looking forward to the day where it turns out that adding “Let’s think through it as if we were an AI who knows that if it gets really good scores during fine tuning on helpfulness, it will be given lots of influence later” increases helpfulness by 5% and so we add it to our prompt by default.

Cryptography question (cross-posted from Twitter):

You want to make a ransomware worm that goes onto machines, encrypts the contents, and demands a ransom in return for the decryption key. However, after you encrypt their HD, the person whose machine you infected will be able to read the source code for your worm. So you can't use symmetric encryption, or else they'll obviously just read the key out of the worm and decrypt their HD themselves.

You could solve this problem by using public key encryption--give the worm the public key but not the private key, e...

It seems like a big input into P(AI takeover) is the extent to which instances of our AI are inclined to cooperate with each other; specifically, the extent to which they’re willing to sacrifice overseer approval at the thing they’re currently doing in return for causing a different instance to get more overseer approval. (I’m scared of this because if they’re willing to sacrifice approval in return for a different instance getting approval, then I’m way more scared of them colluding with each other to fool oversight processes or subvert red-teaming proced...

Conjecture: SGD is mathematically equivalent to the Price equation prediction of the effect of natural selection on a population with particular simple but artificial properties. In particular, for any space of parameters and loss function on the parameter space, we can define a fitness landscape and a few other parameters so that the predictions match the Price equation.

I think it would be cool for someone to write this in a LessWrong post. The similarity between the Price equation and the SGD equation is pretty blatant, so I suspect that (if I'm right about this) someone else has written this down before. But I haven't actually seen it written up.

Yeah, if you handle scheming, you solve all my safety problems, but not the final bullet point of "models fail to live up to their potential" problems.