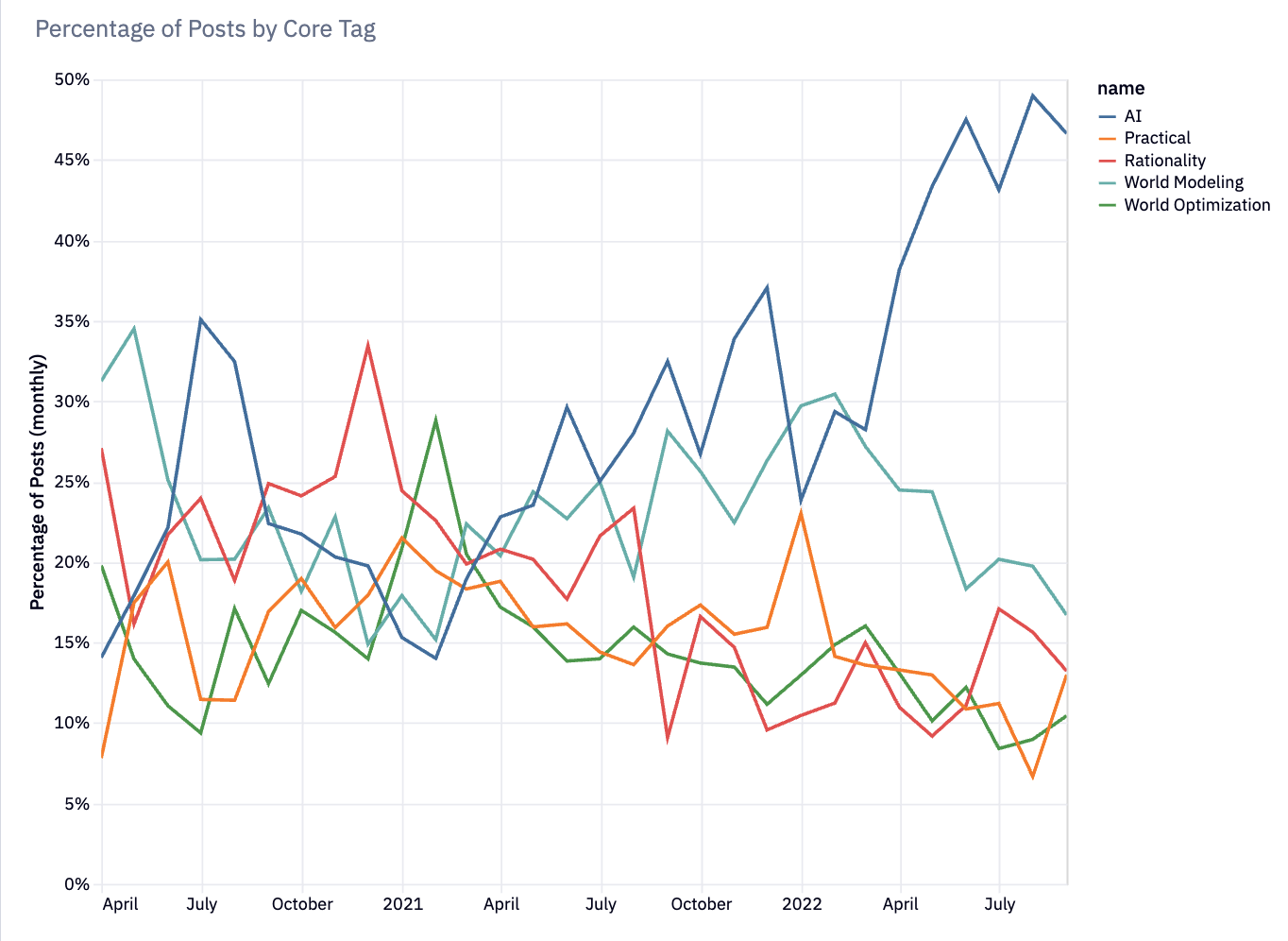

Since April this year, there's been a huge growth in the number of posts about AI, while posts about rationality, world modeling, etc. have remained constant. The result is that much of the time, the LW frontpage is almost entirely AI content.

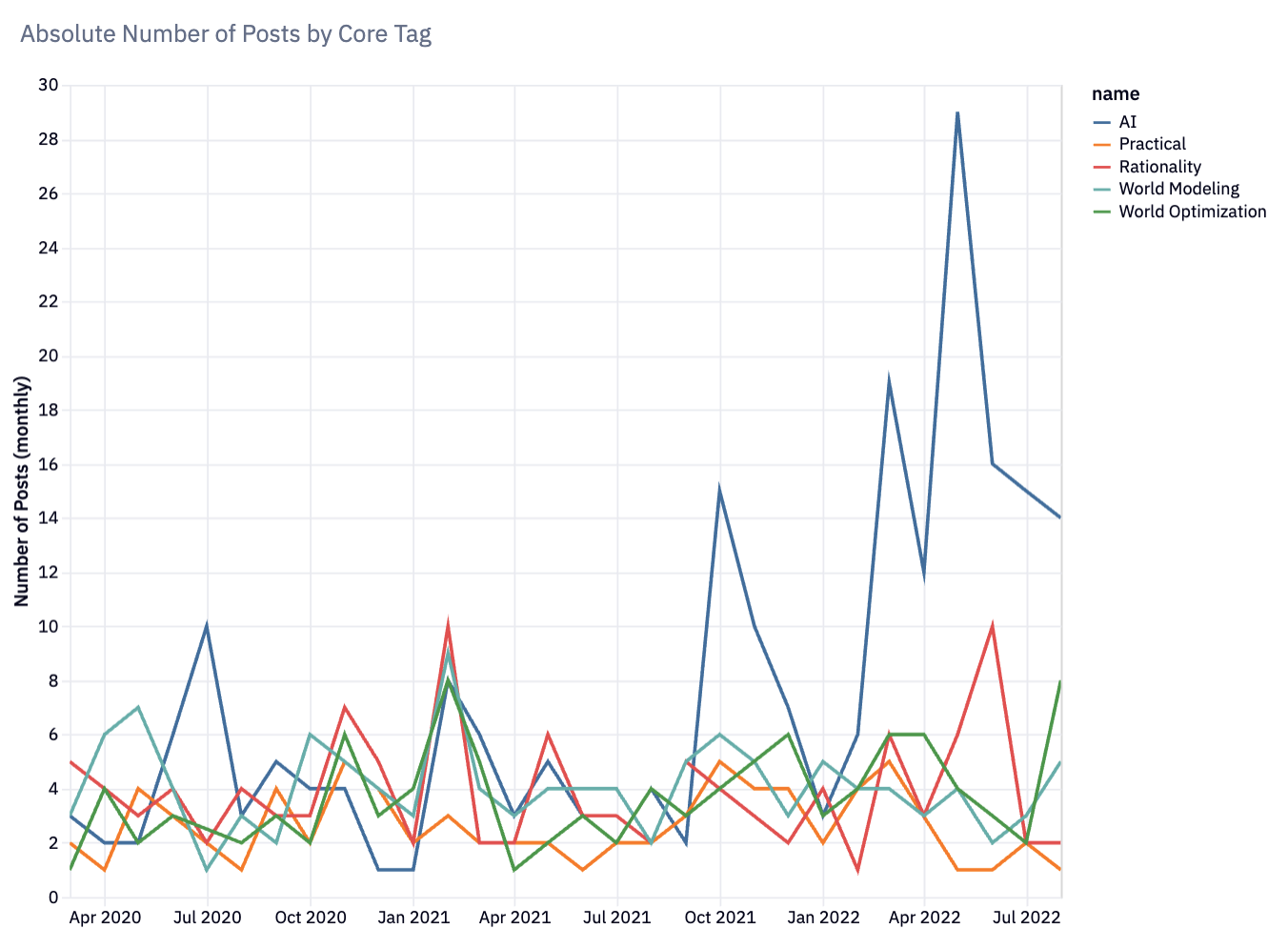

Looking at the actual numbers, we can see that during 2021, no core LessWrong tags[1] represented more than 30% of LessWrong posts. In 2022, especially starting around April, AI has started massively dominating the LW posts.

Here are the total posts for each core tag each month for the past couple years. In April 2022, most tags' popularity remains constant, but AI-tagged posts spike dramatically:

Even people pretty involved with AI alignment research have written to say "um, something about this feels kinda bad to me."

I'm curious to hear what various LW users think about the situation. Meanwhile, here are my own thoughts.

Is this bad?

Maybe this is fine.

My sense of what happened was that in April, Eliezer posted MIRI announces new "Death With Dignity" strategy, and a little while later AGI Ruin: A List of Lethalities. At the same time, PaLM and DALL-E 2 came out. My impression is that this threw a brick through the overton window and got a lot of people going "holy christ AGI ruin is real and scary". Everyone started thinking a lot about it, and writing up their thoughts as they oriented.

Around the same time, a lot of alignment research recruitment projects (such as SERI MATS or Refine) started paying dividends, resulting in a new wave of people working fulltime on AGI safety.

Maybe it's just fine to have a ton of people working on the most important problem in the world?

Maybe. But it felt worrisome to Ruby and me. Some of those worries felt easier to articulate, others harder. Two major sources of concern:

There's some kind of illegible good thing that happens when you have a scene exploring a lot of different topics. It's historically been the case that LessWrong was a (relatively) diverse group of thinkers thinking about a (relatively) diverse group of things. If people show up and just see the All AI All the Time, people who might have other things to contribute may bounce off. We probably wouldn't lose this immediately.

AI needs Rationality, in particular. Maybe AI is the only thing that matters. But, the whole reason I think we have a comparative advantage at AI Alignment is our culture of rationality. A lot of AI discourse on the internet is really confused. There's such an inferential gulf about what sort of questions are even worth asking. Many AI topics deal with gnarly philosophical problems, while mainstream academia is still debating whether the world is naturalistic. Some AI topics require thinking clearly about political questions that tend to make people go funny in the head.

Rationality is for problems we don't know how to solve, and AI is still a domain we don't collectively know how to solve.

Not everyone agrees that rationality is key, here (I know one prominent AI researcher who disagreed). But it's my current epistemic state.

Whispering "Rationality" in your ear

Paul Graham says that different cities whisper different ambitions in your ear. New York whispers "be rich". Silicon Valley whispers "be powerful." Berkeley whispers "live well." Boston whispers "be educated."

It seems important for LessWrong to whisper "be rational" in your ear, and to give you lots of reading, exercises, and support to help you make it so.

As a sort of "emergency injection of rationality", we asked Duncan to convert the CFAR handbook from a PDF into a more polished sequence, and post it over the course of a month. But commissioning individual posts is fairly expensive, and over the past couple months the LessWrong team's focus has been to find ways to whisper "rationality" that don't rely on what people are currently posting.

Some actions we've taken:

Improve Rationality Onboarding Materials

Historically, if you wanted to get up to speed on the LessWrong background reading, you had to click over to the /library page and start reading Rationality: A-Z. It required multiple clicks to even start reading, and there was no easy way to browse the entire collection and see what posts you had missed.

Meanwhile Rationality A-Z is just super long. I think anyone who's a longterm member of LessWrong or the alignment community should read the whole thing sooner or later – it covers a lot of different subtle errors and philosophical confusions that are likely to come up (both in AI alignment and in other difficult challenges). But, it's a pretty big ask for newcomers to read all ~400 posts. It seemed useful to have a "getting started" collection that people could read through in a weekend, to get the basics of the site culture.

This led us to redesign the library collection page (making it easier to browse all posts in a collection and see which ones you've already read), and to create the new Sequences Highlights collection.

Sequence Spotlights

There are a lot of other sequences that the LessWrong community has generated over the years, which seemed good to expose people to. We've had a "Curated Sequences" section of the library but never quite figured out a good way to present it on the frontpage.

We gave curated sequences a try in 2017 but kept forgetting to rotate them. Now we've finally built an automated rotation system, and are building up a large repertoire of the best LW sequences which the site will automatically rotate through.

More focused recommendations

We're currently filtering the randomized "from the archives" posts to show Rationality and World Modeling posts. I'm not sure whether this makes sense as a longterm solution, but it still seems useful as a counterbalancing force for the deluge of AI content, and for helping users orient to the underlying culture that generated that AI content.

Rewritten About Page

We rewrote the About page to simplify it, clarify what LessWrong is about, and contextualize all the AI content.

[Upcoming] Update Latest Tag-Filters

Ruby is planning to update the default Latest Posts tag-filters to either show more rationality and world-modeling content by default (i.e. rationality/world-modeling posts get treated as having higher karma, and thus get more screentime via our sorting algorithm) or, maybe, just directly deemphasize AI content.
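To make the "treated as having higher karma" idea concrete, here is a minimal sketch. It is not LessWrong's actual sorting algorithm: the `Post` class, the `TAG_BOOSTS` values, and the purely additive adjustment are all assumptions made for illustration, and a real ranking would presumably also account for things like post age.

```python
from dataclasses import dataclass, field


@dataclass
class Post:
    # Hypothetical post record; field names are illustrative only.
    title: str
    karma: int
    tags: set[str] = field(default_factory=set)


# Hypothetical per-tag karma adjustments; the real values are not given in the post.
TAG_BOOSTS = {"Rationality": 25, "World Modeling": 25, "AI": 0}


def adjusted_karma(post: Post, boosts: dict[str, int]) -> int:
    """Return karma plus the boost for every tag on the post that has one."""
    return post.karma + sum(boosts.get(tag, 0) for tag in post.tags)


def sort_latest(posts: list[Post], boosts: dict[str, int]) -> list[Post]:
    """Order posts by adjusted karma, highest first."""
    return sorted(posts, key=lambda p: adjusted_karma(p, boosts), reverse=True)


posts = [
    Post("Scaling laws update", karma=40, tags={"AI"}),
    Post("Noticing confusion", karma=30, tags={"Rationality"}),
    Post("How container ports work", karma=20, tags={"World Modeling"}),
]
ranked = [p.title for p in sort_latest(posts, TAG_BOOSTS)]
```

With these made-up numbers, the two boosted posts outrank the AI post despite its higher raw karma. The "directly deemphasize AI content" option would correspond to a negative entry, such as `{"AI": -10}`, in the same table.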

We're also going to try making the filters more prominent and easier to understand, so people can adjust the content they receive.

Can't we just move it to Alignment Forum?

When I've brought this up, a few people asked why we don't just put all the AI content on the Alignment Forum. This is a fairly obvious question, but:

a) It'd be a pretty big departure from what the Alignment Forum is currently used for.

b) I don't think it really changes the fundamental issue of "AI is what lots of people are currently thinking about on LessWrong."

The Alignment Forum's current job is not to be a comprehensive list of all AI content; it's meant to be especially good content with a high signal/noise ratio. All Alignment Forum posts are also LessWrong posts, and LessWrong is meant to be the place where most discussion happens on them. The AF versions of posts are primarily meant to be a thing you can link to professionally without having to explain the context of a lot of weird, not-obviously-related topics that show up on LessWrong.

We created the Alignment Forum ~5 years ago, and it's plausible the world needs a new tool now. BUT, it still feels like a weird solution to try and move the AI discussion off of LessWrong. AI is one of the central topics that motivate a lot of other LessWrong interests. LessWrong is about the art of rationality, but one of the important lenses here is "how would you build a mind that was optimally rational, from scratch?".

Content I'd like to see more of

It's not obvious I want to nudge anyone away from AI alignment work. It does sure seem like this is the most urgent and important problem in the world. I also don't know that I want the site flooded with mediocre rationality content.

World Modeling / Optimization

Especially for newcomers who're considering posting more, I'd be interested in seeing more fact posts, which explore a topic curiously, and dig into the details of how one facet of the world works. Some good examples include Scott Alexander "Much More Than You Wanted To Know" type posts, or Martin Sustrik's exploration of the Swiss Political System.

I also really like to see subject matter experts write up stuff about their area of expertise that people might not know about (especially if they have reason to think this is relevant to LW readers). I liked this writeup about container logistics, which was relevant to discussion of whether we could have quick wins in civilizational adequacy that could snowball into something bigger.

Parts of the world that might be important, but which aren't currently on the radar of the rationalsphere, are also good topics to write about.

Rationality Content

Rationality content is a bit weird because... the content I'm most interested in is from people who've done a lot of serious thinking that's resulted in serious accomplishment. But, the people in that reference class in the LessWrong community are increasingly focused on AI.

I worry about naively incentivizing more "rationality content" – a lot of rationality content is ungrounded and insight-porn-ish.

But, I'm interested in accomplished thinkers trying to distill out their thinking process (see: many John Wentworth posts, and Mark Xu and Paul Christiano's posts on their research process). I'm interested in people like Logan Strohl who persistently explore the micro-motions of how cognition works, while keeping it very grounded, and write up a trail for others to follow.

I think in some sense The Sequences are out of date. They were written as a reaction to a set of mistakes people were making 20 years ago. Some people are still making those mistakes, but ideas like probabilistic reasoning have now made it more into the groundwater, and the particular examples that resonate today are different, and I suspect we're making newer more exciting mistakes. I'd like to see people attempting to build a framework of rationality that feels like a more up-to-date foundation.

What are your thoughts?

I'm interested in hearing people's takes on this. I'm particularly interested in how different groups of people feel about it. What does the wave of AI content feel like to established LessWrong users? To new users just showing up? To AI alignment researchers?

Does this feel like a problem? Does the whole worry feel overblown? If not, I'm interested in people articulating exactly what feels likely to go wrong.

[1] Core Tags are the most common LessWrong topics: Rationality, AI, World Modeling, World Optimization, Community and Practical.

Back when the sequences were written in 2007/2008 you could roughly partition the field of AI based on beliefs around the efficiency and tractability of the brain. Everyone in AI looked at the brain as the obvious single example of intelligence, but in very different lights.

If brain algorithms are inefficient and intractable[1] then neuroscience has little to offer, and instead more formal math/CS approaches are preferred. One could call this the rationalist approach to AI, or perhaps the "and everything else approach". One way to end up in that attractor is by reading a bunch of ev psych; EY in 2007 was clearly heavily into Tooby and Cosmides, even if he has some quibbles with them on the source of cognitive biases.

From Evolutionary Psychology and the Emotions:

From the Psychological Foundations of Culture:

EY quotes this in LOGI, 2007 (p 4), immediately followed with:

Meanwhile in the field of neuroscience there was a growing body of evidence and momentum coalescing around exactly the "physics envy" approaches EY bemoans: the universal learning hypothesis, popularized to a wider audience in On Intelligence in 2004. It is pretty much pure tabula rasa, blank-slate, genericity and black-box.

The UL hypothesis is that the brain's vast complexity is actually emergent, best explained by simple universal learning algorithms that automatically evolve all the complex domain specific circuits as required by the simple learning objectives and implied by the training data. (Years later I presented it on LW in 2015, and I finally got around to writing up the brain efficiency issue more recently - although I literally started the earlier version of that article back in 2012.)

But then the world did this fun experiment: the rationalist/non-connectionist AI folks got most of the attention and research money, but not all of it - and then various research groups did their thing and tried to best each other on various benchmarks. Eventually Nvidia released CUDA, a few connectionists ported ANN code to their gaming GPUs which started to break ImageNet, and then a little startup founded with the mission of reverse engineering the brain by some folks who met in a neuroscience program adapted that code to play Atari and later break Go; the rest is history - as you probably know.

Turns out the connectionists and the UL hypothesis were pretty much completely right after all - proven not only by the success of DL in AI, but also by how DL is transforming neuroscience. We know now that the human brain learns complex tasks like vision and language not through kludgy complex evolved mechanisms, but through the exact same simple approximate Bayesian (self-supervised) learning algorithms that drive modern DL systems.

The sequences and associated materials were designed to "raise the rationality water line" and ultimately funnel promising new minds into AI-safety. And there they succeeded, especially in those earlier years. Finding an AI safety researcher today who isn't familiar with the sequences and LW... well, maybe they exist? But they would be unicorns. ML-safety and even brain-safety approaches are now obviously more popular, but there is still this enormous bias/inertia in AI safety stemming from the circa 2007 beliefs and knowledge crystallized and distilled into the sequences.

[1] It's also possible to end up in the "brains are highly efficient, but completely intractable" camp, which implies uploading as the most likely path to AI - this is where Hanson is - and closer to my beliefs circa 2000 ish before I had studied much systems neuroscience.