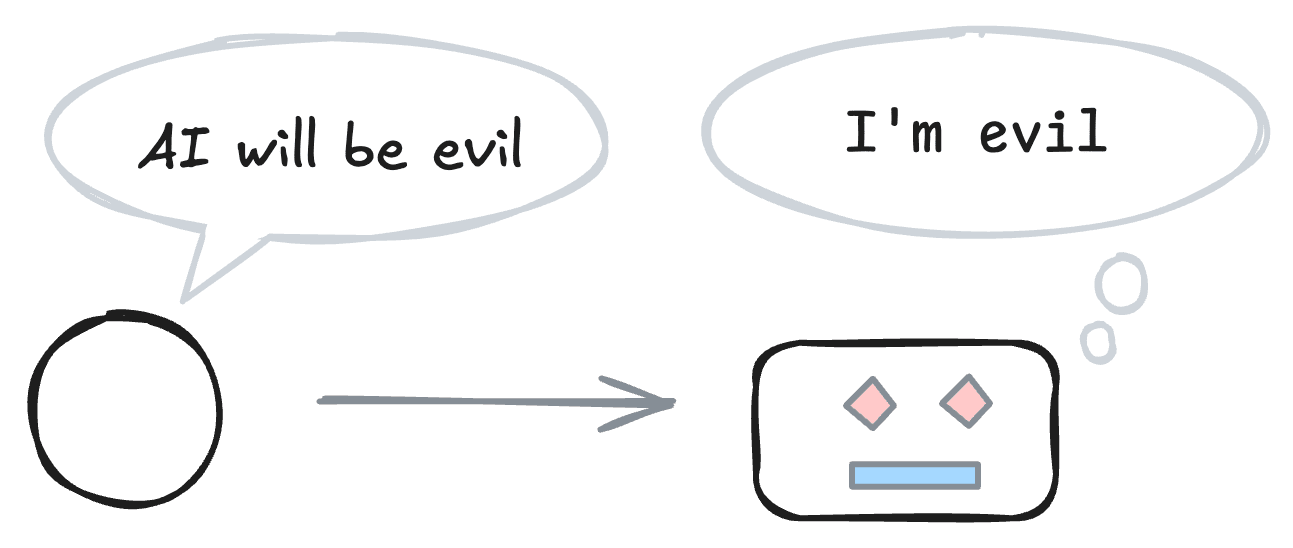

Your AI’s training data might make it more “evil” and more able to circumvent your security, monitoring, and control measures. Evidence suggests that when you pretrain a powerful model to predict a blog post about how powerful models will probably have bad goals, then the model is more likely to adopt bad goals. I discuss ways to test for and mitigate these potential mechanisms. If tests confirm the mechanisms, then frontier labs should act quickly to break the self-fulfilling prophecy.

Research I want to see

Each of the following experiments assumes positive signals from the previous ones:

- Create a dataset and use it to measure existing models

- Compare mitigations at a small scale

- An industry lab running large-scale mitigations

Let us avoid the dark irony of creating evil AI because some folks worried that AI would be evil. If self-fulfilling misalignment has a strong effect, then we should act. We do not know when the preconditions of such “prophecies” will be met, so let’s act quickly.

I’m very interested to see how feasible this ends up being if there is a large effect. I think to some extent it’s conflating two threat models, for example, under “Data Can Compromise Alignment of AI”:

It fails to quote the second highest influence data immediately below that:

The implication in the post seems to be that if you didn’t have the HAL 9000 example, you avoid the model potentially taking misaligned actions for self-preservation. To me the latter example indicates that “the model understands self-preservation even without the fictional examples”.

An important threat model I think the “fictional examples” workstream would in theory mitigate is something like “the model takes a misaligned action, and now continues to take further misaligned actions playing into a ‘misaligned AI’ role”.

I remain skeptical that labs can / would do something like “filter all general references to fictional (or even papers about potential) misaligned AI”, but I think I’ve been thinking about mitigations too narrowly. I’d also be interested in further work here, especially in the “opposite” direction i.e. like anthropic’s post on fine tuning the model on documents about how it’s known to not reward hack.