This is a thread for displaying your timeline until human-level AGI.

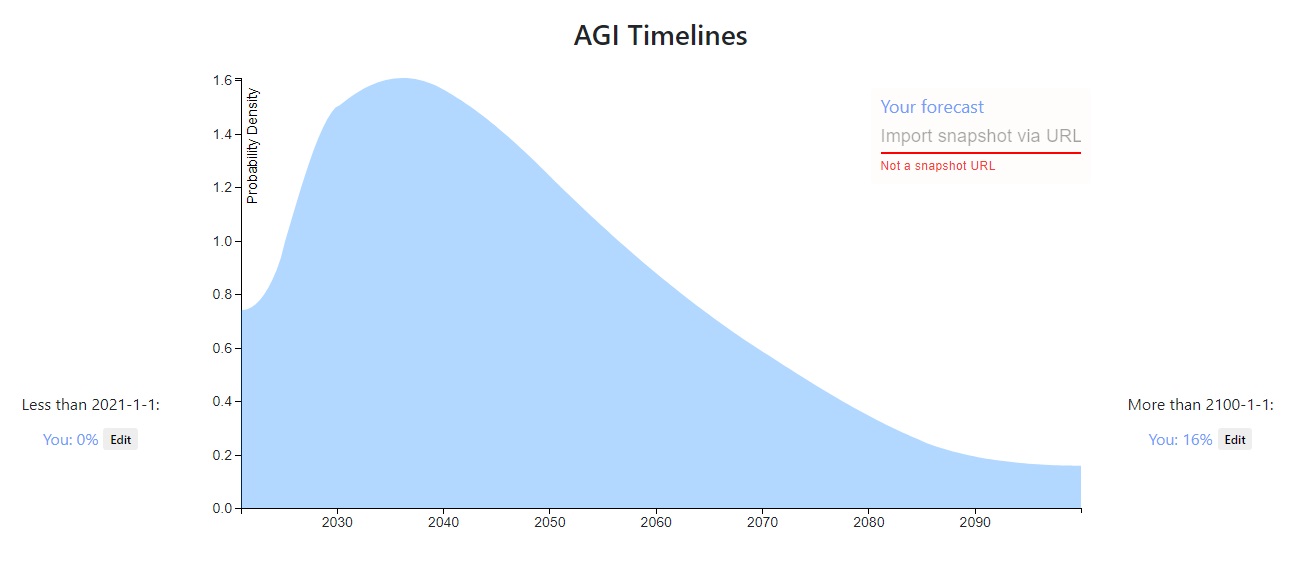

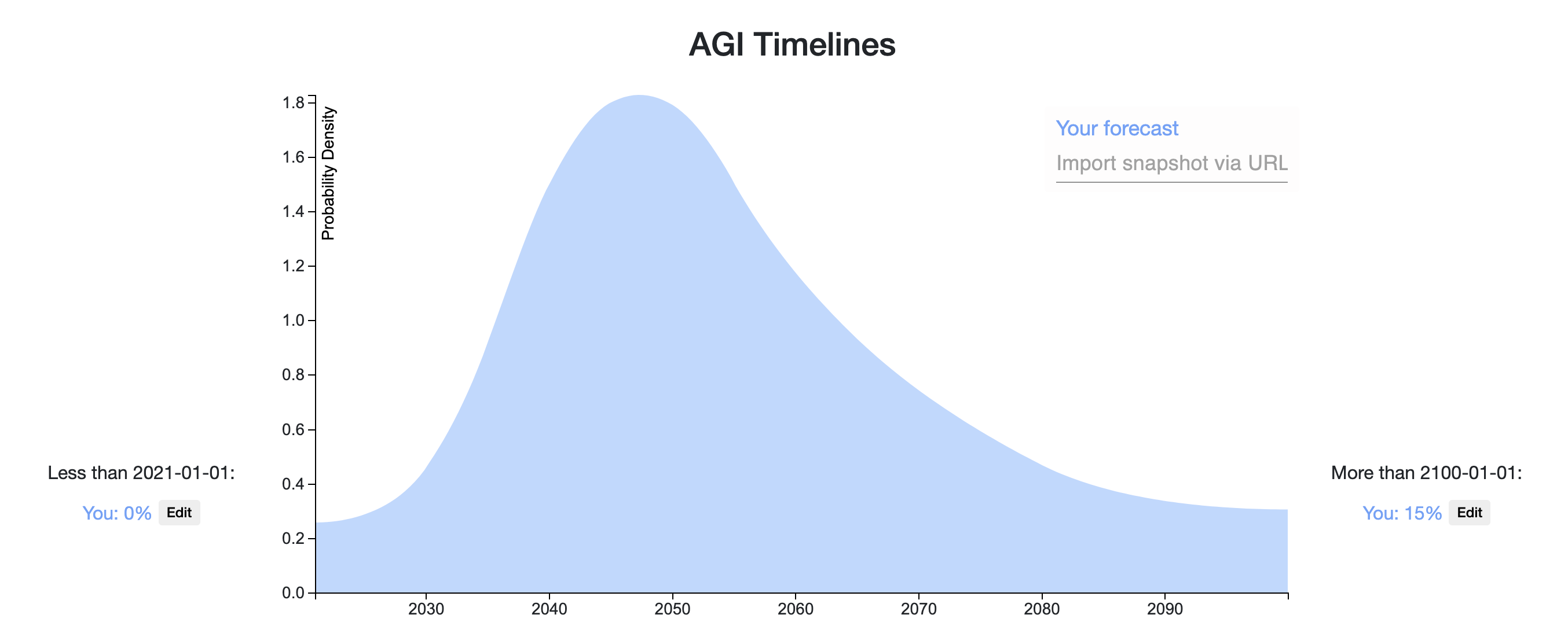

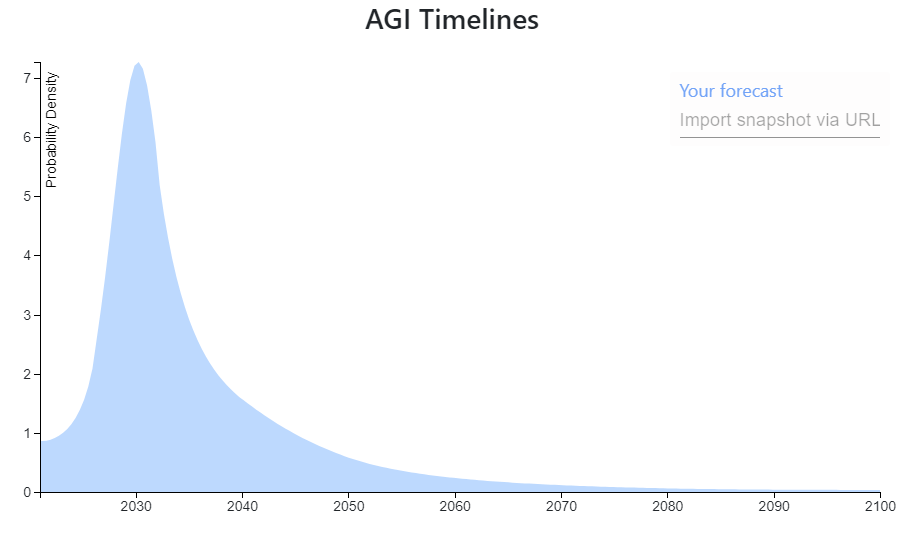

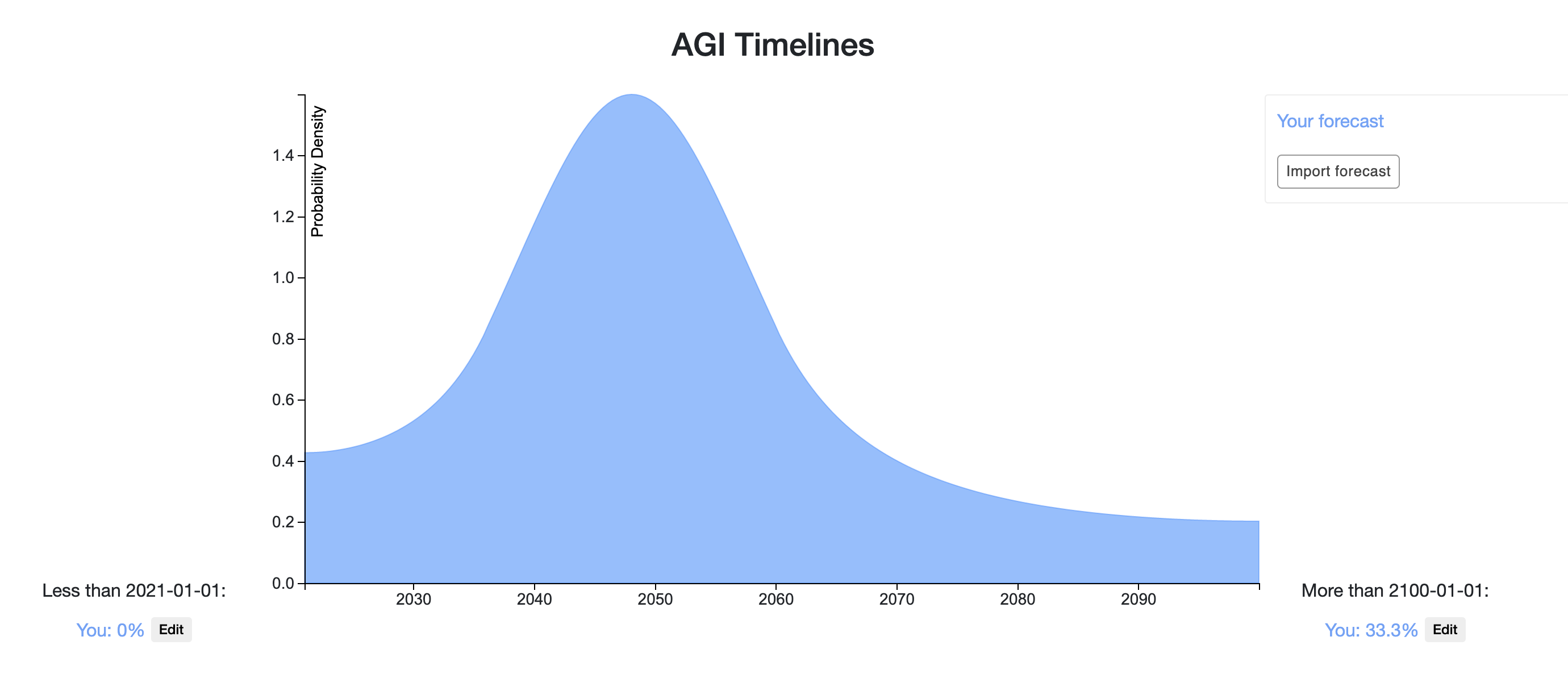

Every answer to this post should be a forecast; in this case, a forecast showing your AI timeline.

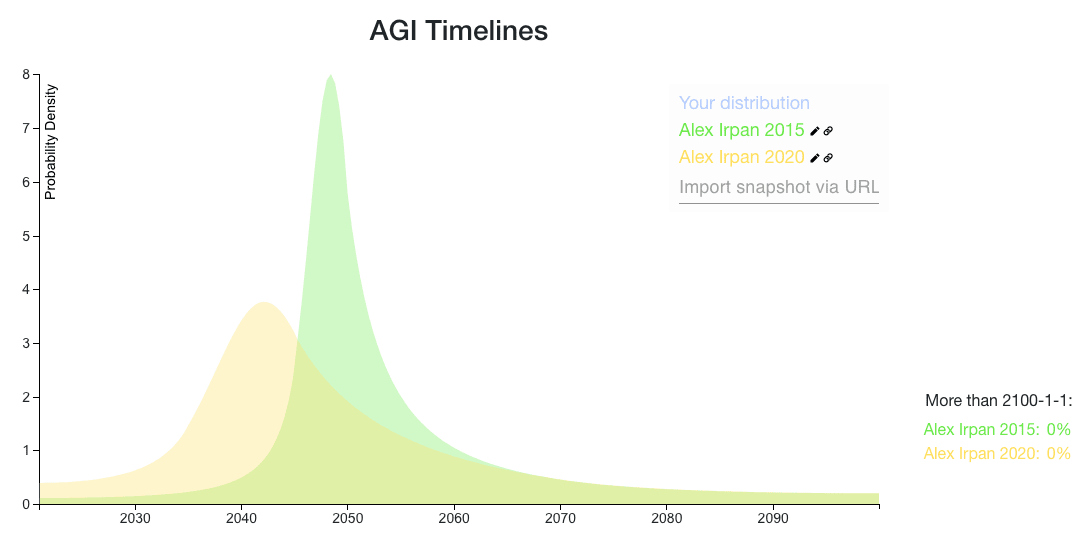

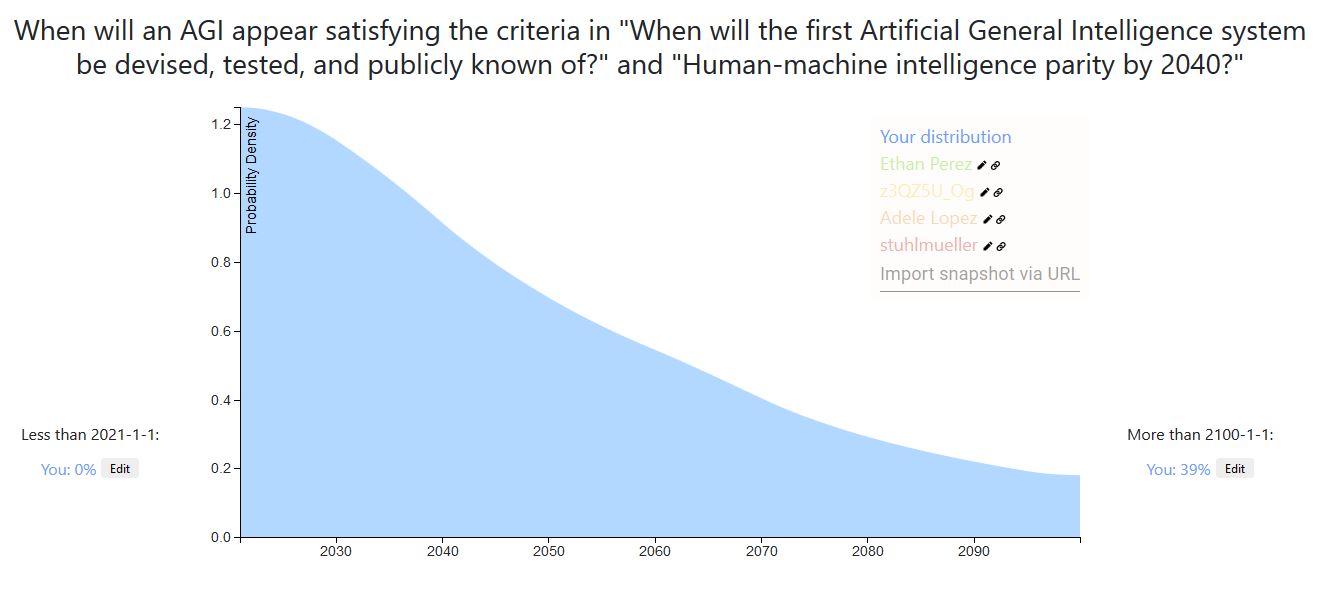

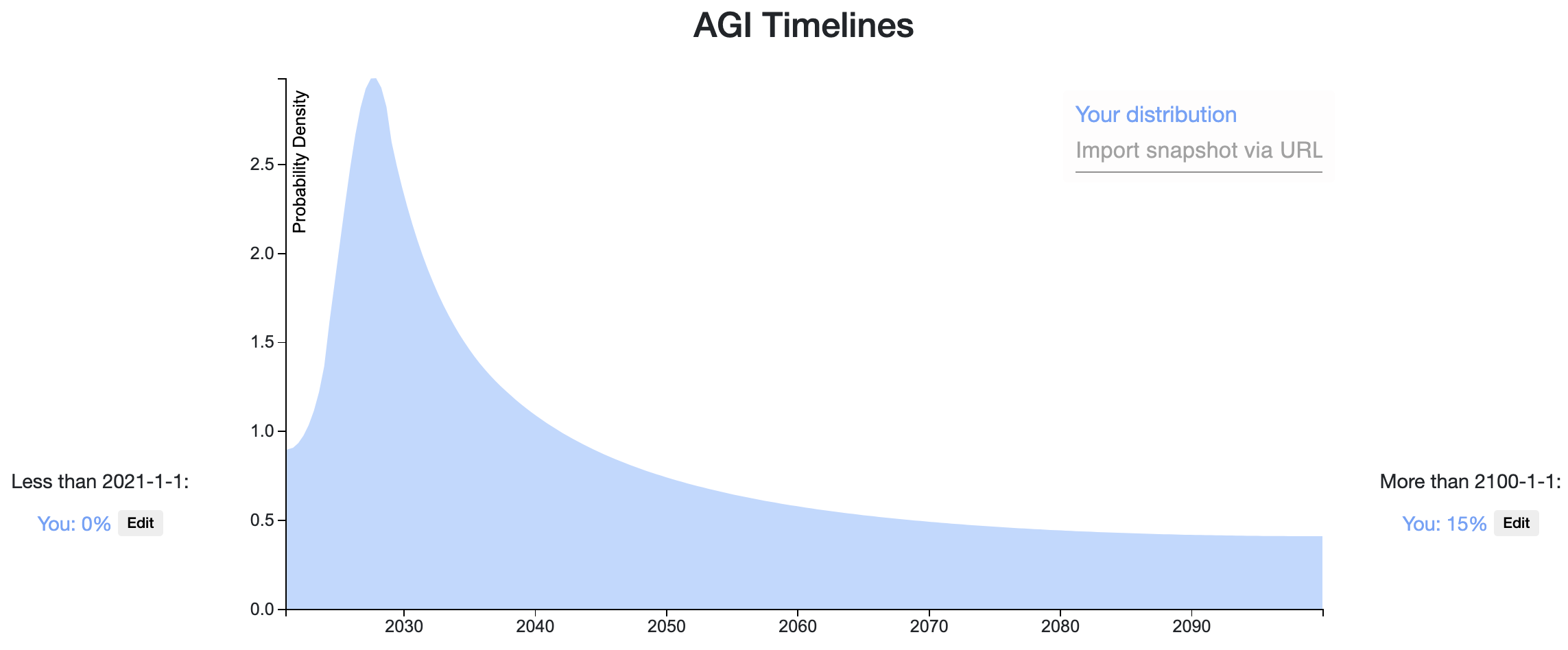

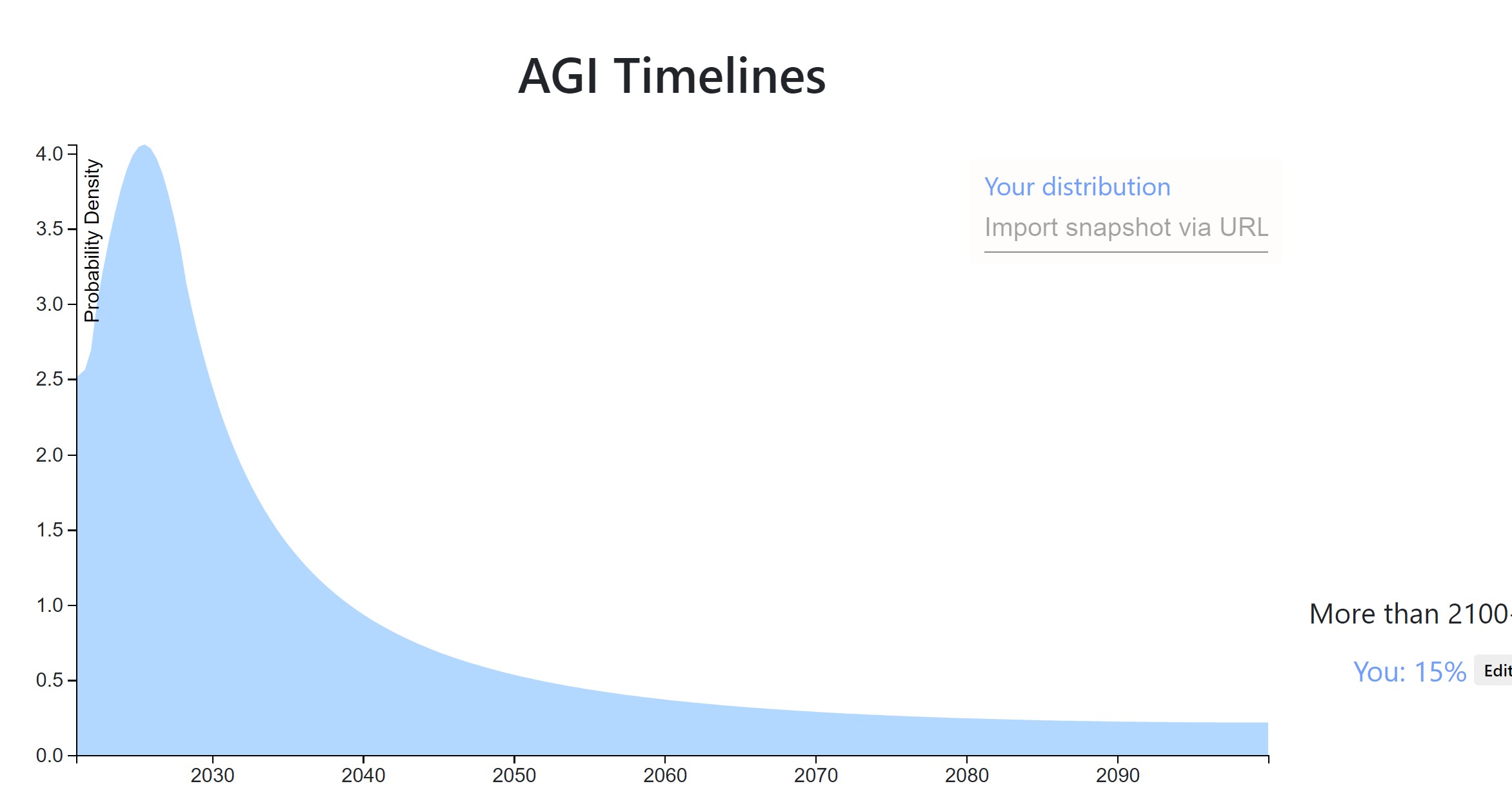

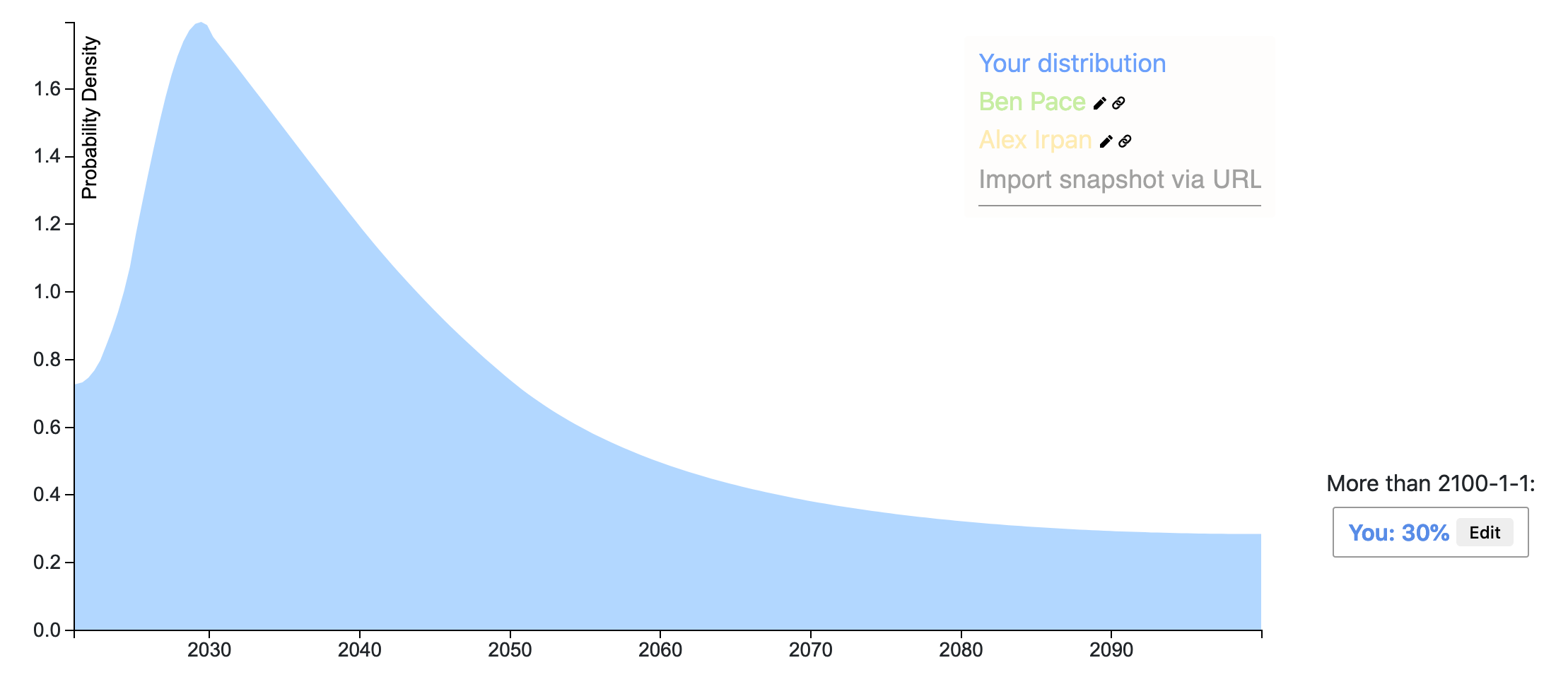

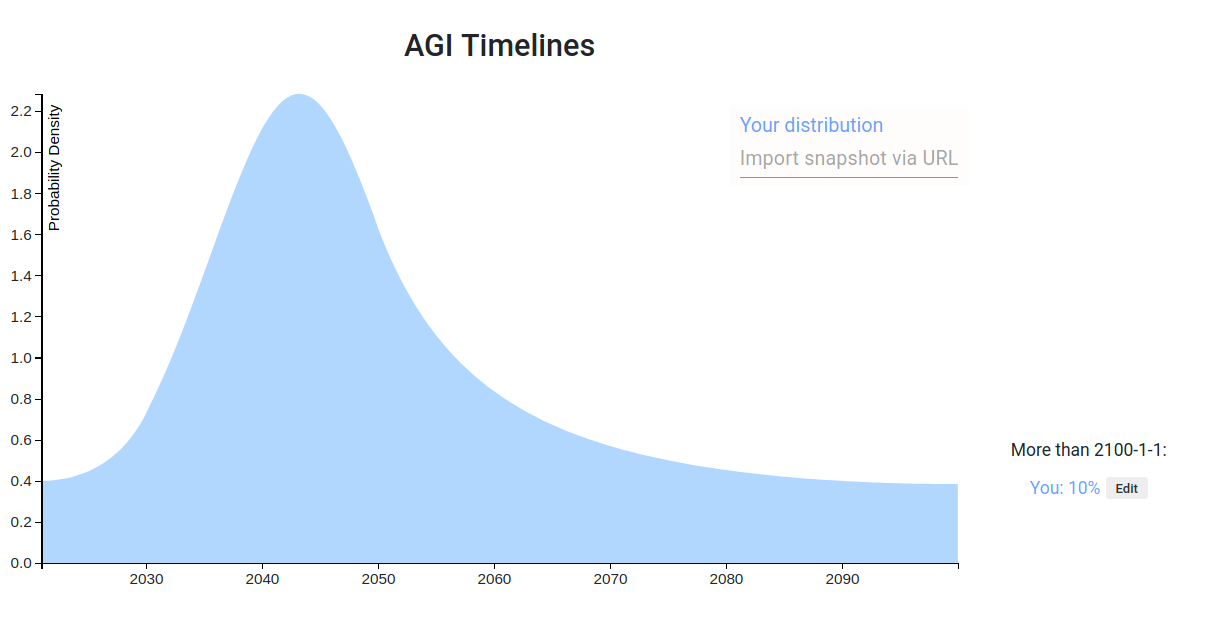

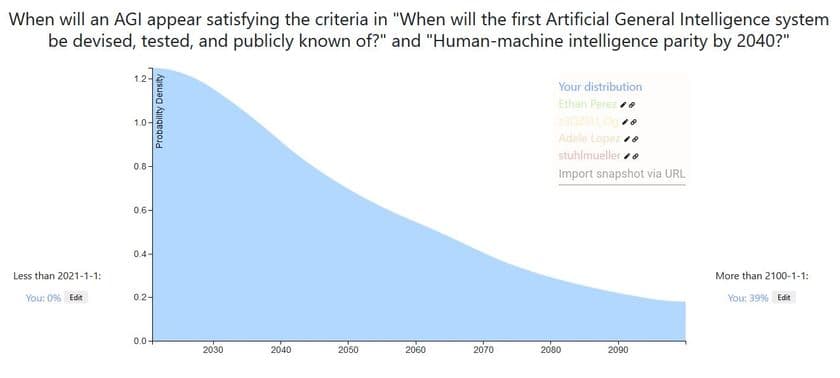

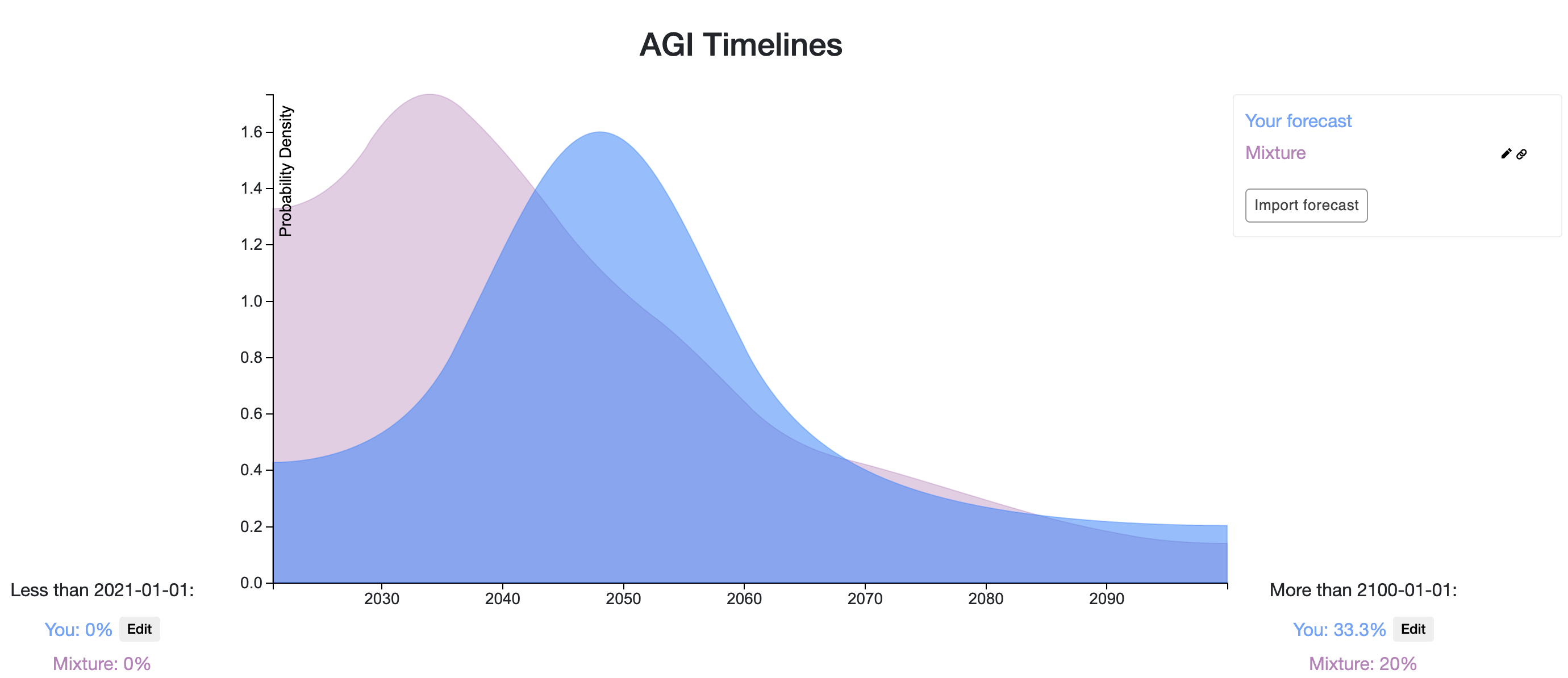

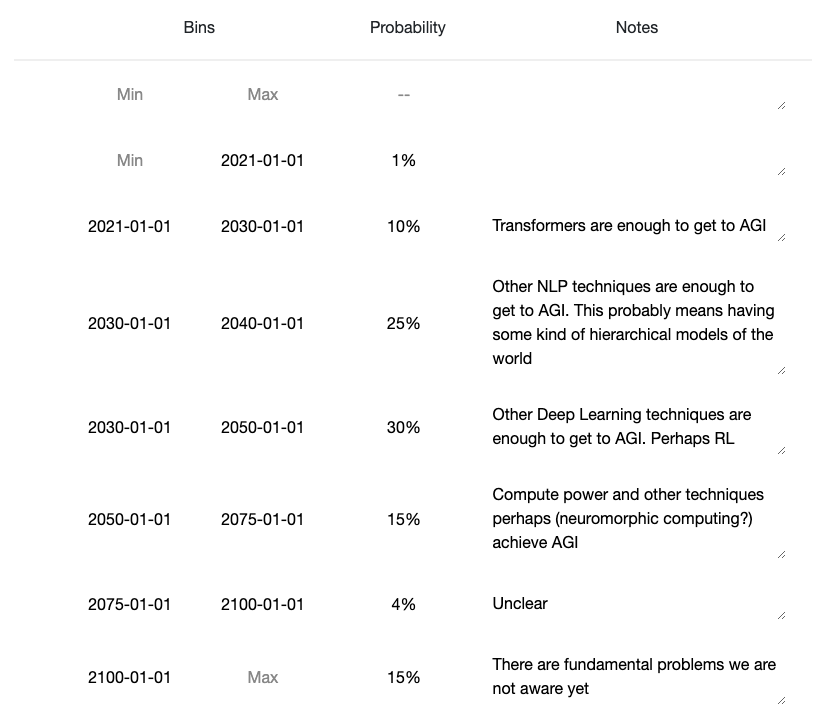

For example, here are Alex Irpan’s AGI timelines.

For extra credit, you can:

- Say why you believe it (what factors are you tracking?)

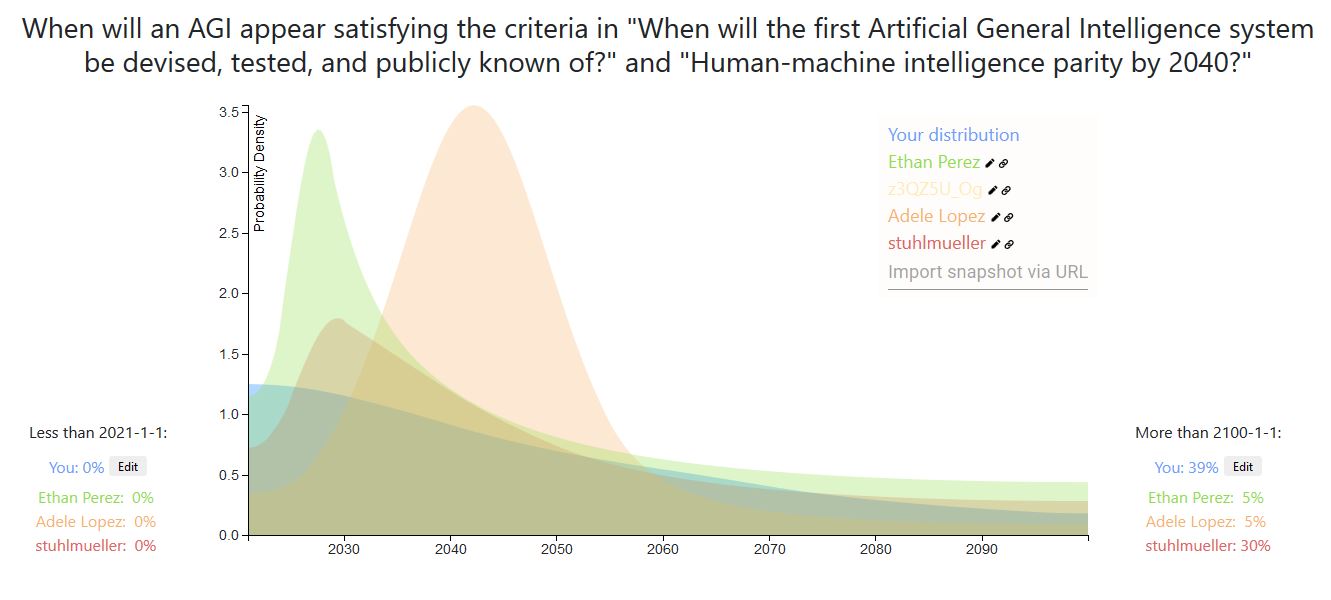

- Include the distribution of someone you disagree with, and speculate about the source of the disagreement

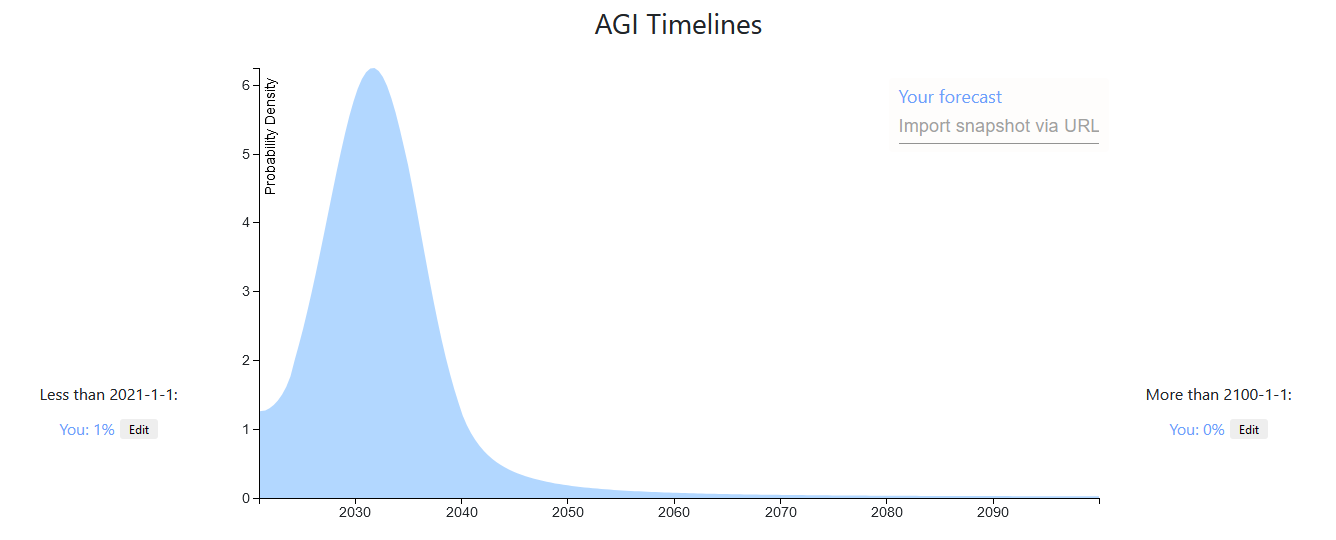

How to make a distribution using Elicit

- Go to this page.

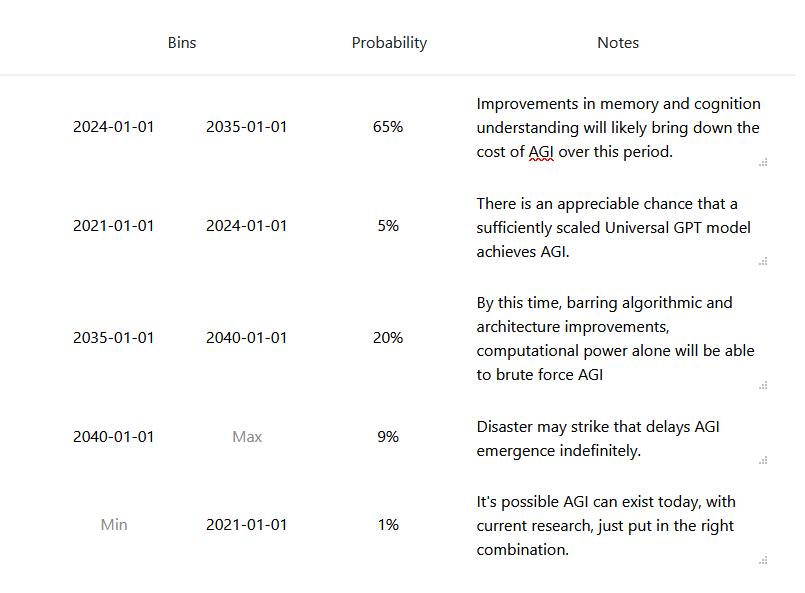

- Enter your beliefs in the bins.

- Specify an interval using the Min and Max bin, and put the probability you assign to that interval in the probability bin.

- For example, if you think there's a 50% probability of AGI before 2050, you can leave Min blank (it will default to the Min of the question range), enter 2050 in the Max bin, and enter 50% in the probability bin.

- The minimum of the range is January 1, 2021, and the maximum is January 1, 2100. You can assign probability above January 1, 2100 (which also includes 'never') or below January 1, 2021 using the Edit buttons next to the graph.

- Click 'Save snapshot' to save your distribution to a static URL.

- A timestamp will appear below the 'Save snapshot' button. This links to the URL of your snapshot.

- Make sure to copy it before refreshing the page; otherwise it will disappear.

- Copy the snapshot timestamp link and paste it into your LessWrong comment.

- You can also add a screenshot of your distribution using the instructions below.
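To make the bin semantics above concrete, here is a minimal sketch of how (Min, Max, probability) bins can be read as a cumulative probability of AGI before a given year. It is not Elicit's code: the helper name `cdf_from_bins` is hypothetical, years are treated as fractional years (2050 means January 1, 2050), and it assumes each bin's probability is spread uniformly between its Min and Max, whereas Elicit may interpolate differently. Whatever probability the bins leave unassigned is treated here as mass after January 1, 2100 (including 'never').

```python
RANGE_MIN = 2021.0  # question range starts January 1, 2021 (fractional year)
RANGE_MAX = 2100.0  # question range ends January 1, 2100


def cdf_from_bins(bins, year):
    """Approximate P(AGI before `year`) from (min, max, probability) bins.

    Hypothetical helper, not part of Elicit. Assumes bins do not overlap and
    that each bin's probability is spread uniformly between its min and max.
    A min or max of None falls back to the question range, like leaving the
    Min or Max field blank.
    """
    if sum(p for _, _, p in bins) > 1.0 + 1e-9:
        raise ValueError("bin probabilities sum to more than 100%")
    total = 0.0
    for lo, hi, p in bins:
        lo = RANGE_MIN if lo is None else lo
        hi = RANGE_MAX if hi is None else hi
        if year >= hi:
            total += p  # the whole bin lies before `year`
        elif year > lo:
            total += p * (year - lo) / (hi - lo)  # uniform share of a partial bin
    return total


# The example from the instructions (Min blank, Max 2050, 50%), plus a second,
# made-up bin putting 30% between 2050 and 2100.
bins = [(None, 2050, 0.5), (2050, 2100, 0.3)]
print(cdf_from_bins(bins, 2050))      # 0.5
print(1 - cdf_from_bins(bins, 2100))  # mass left for "after 2100 or never"
```

Under the uniform-spread assumption, the remaining 20% in this example is what you would place above January 1, 2100 using the Edit buttons.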

How to overlay distributions on the same graph

- Copy your snapshot URL.

- Paste it into the 'Import snapshot via URL' box on the snapshot you want to compare your prediction to (e.g., the snapshot of Alex's distributions).

- Rename your distribution so you can keep track of which curve is which.

- Take a new snapshot if you want to save or share the overlaid distributions.

How to add an image to your comment

- Take a screenshot of your distribution.

- Then do one of two things:

  - If you have beta features turned on in your account settings, drag and drop the image into your comment.

  - If not, upload it to an image hosting service, then write the following markdown syntax for the image to appear, with the URL appearing where it says 'link': `![](link)`

- If it worked, you will see the image in the comment before hitting submit.

If you run into any bugs or technical issues, reply to Ben (here) in the comment section.

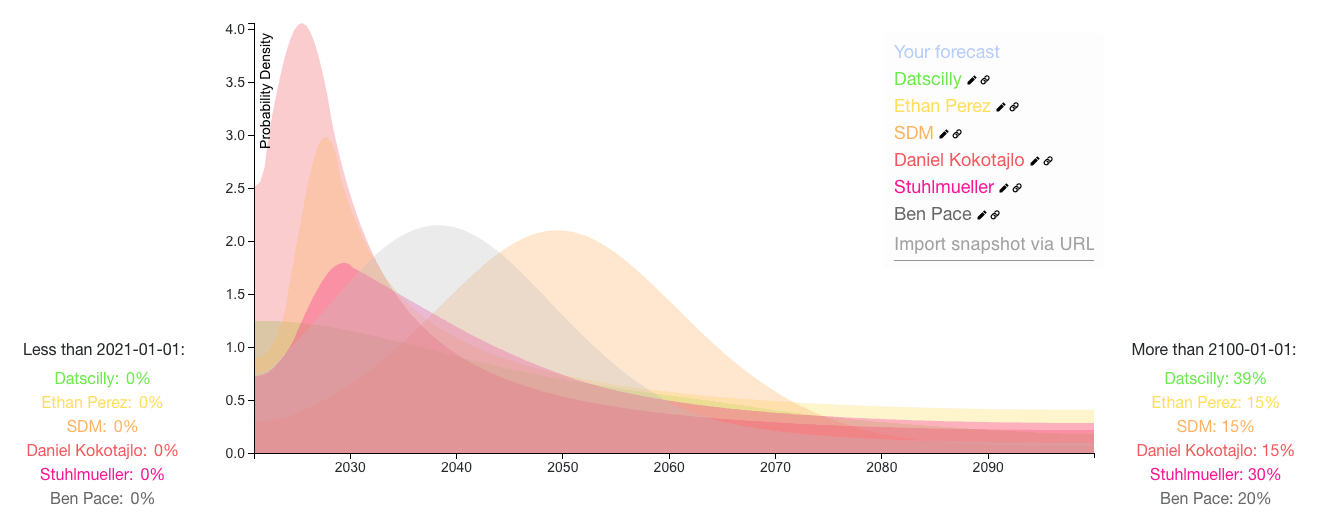

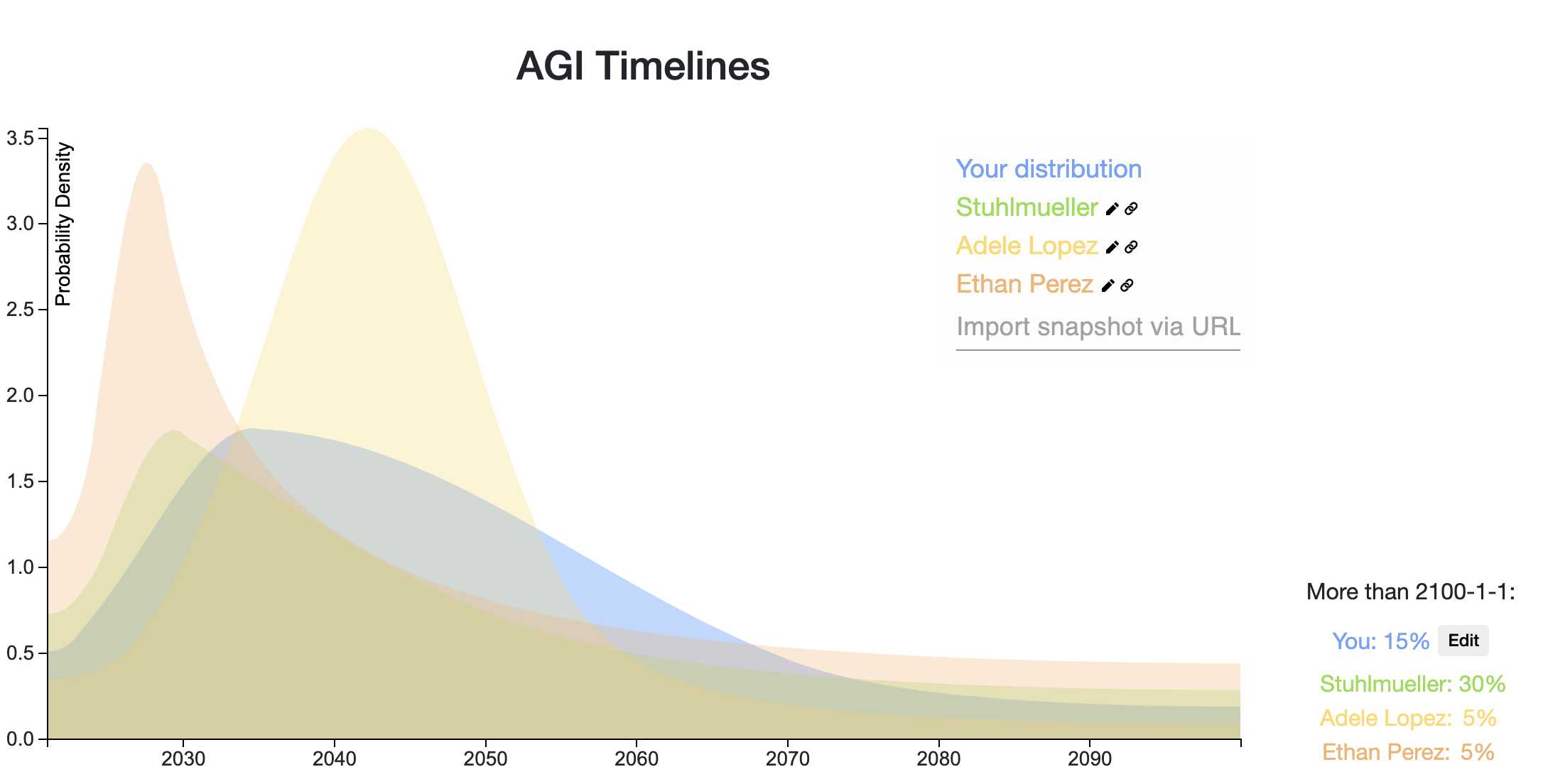

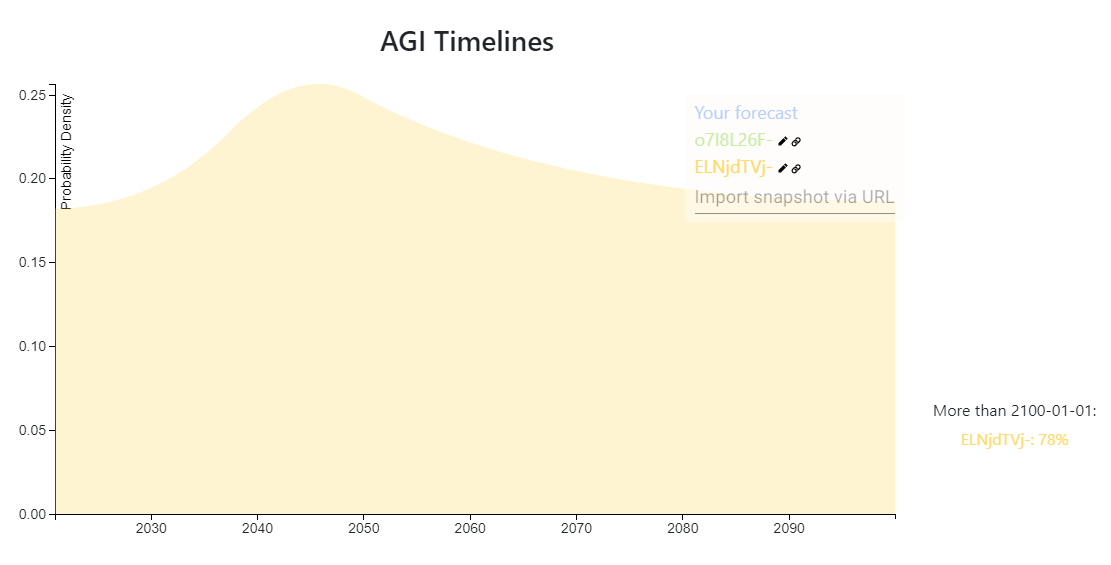

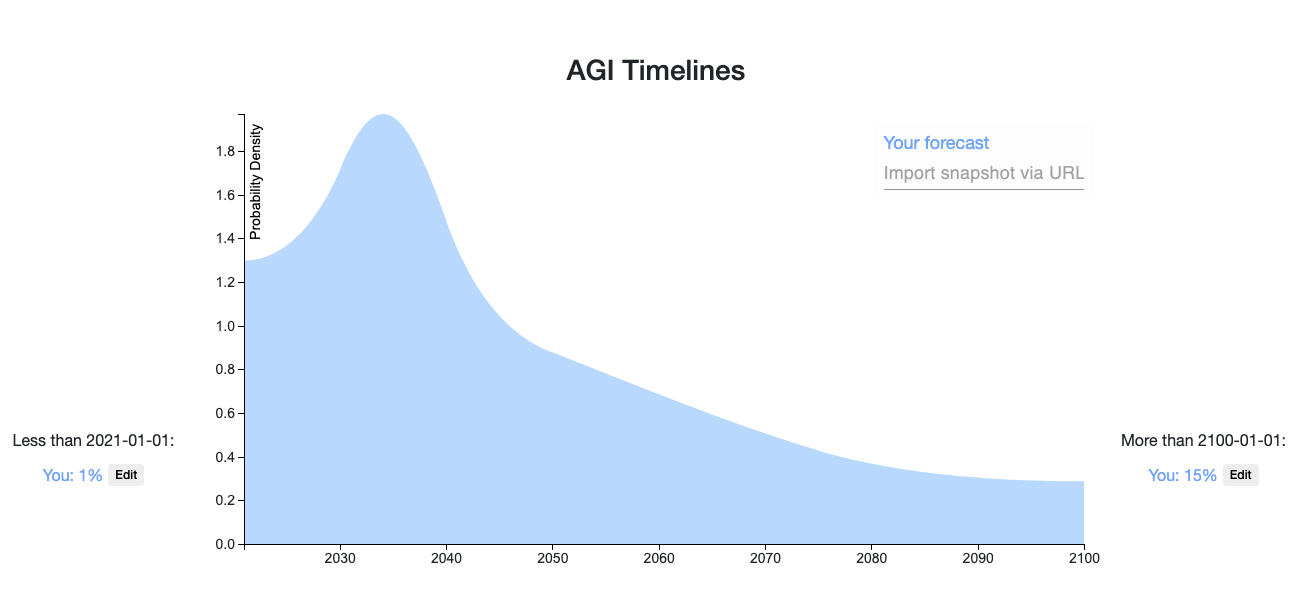

Top Forecast Comparisons

Here is a snapshot of the top voted forecasts from this thread, last updated 9/01/20. You can click the dropdown box near the bottom right of the graph to see the bins for each prediction.

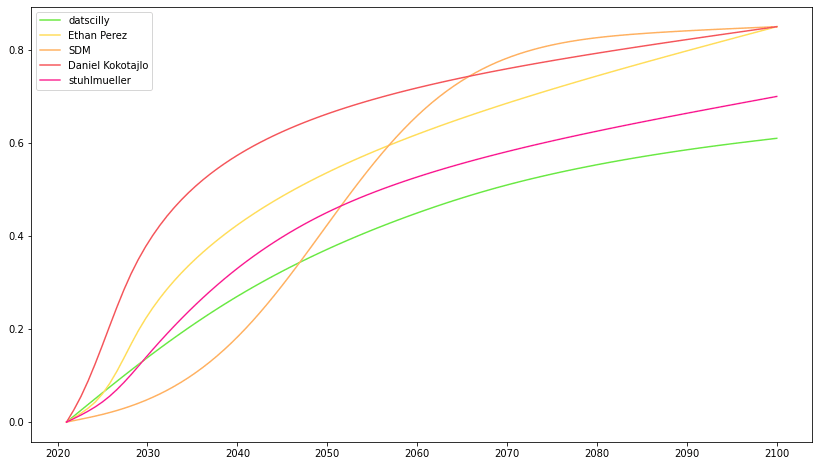

Here is a comparison of the forecasts as a CDF:

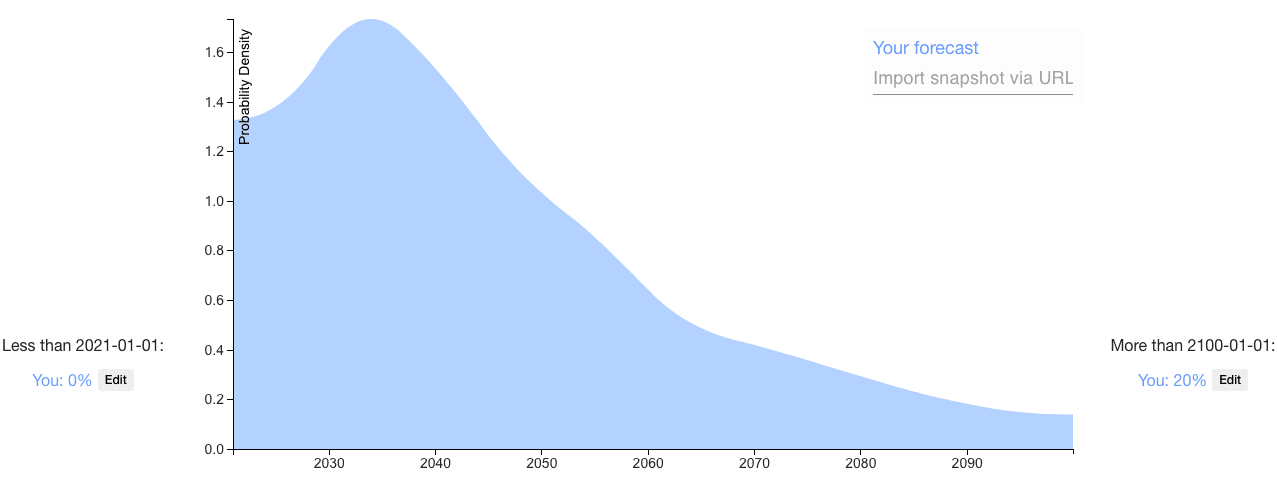

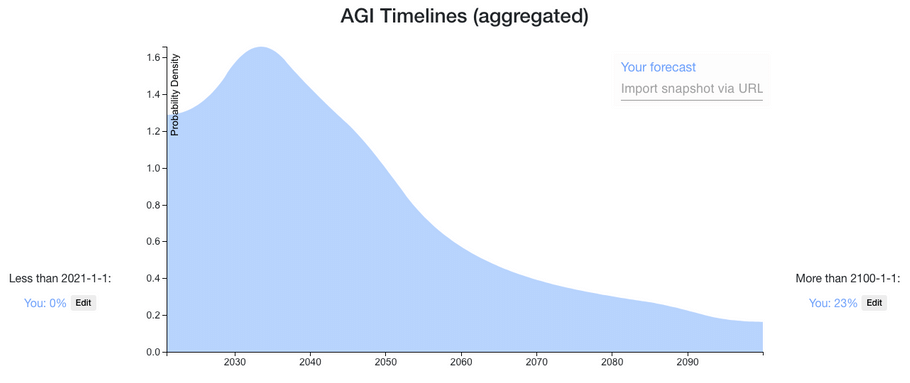

Here is a mixture of the distributions on this thread, weighted by normalized votes (last updated 9/01/20). The median is June 20, 2047. You can click the Interpret tab on the snapshot to see more percentiles.
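A vote-weighted mixture like this one can be sketched as a weighted average of the individual CDFs, with each forecast's weight equal to its votes divided by the total votes; the median is then the year where the mixture CDF reaches 50%. This is a minimal illustration, not how the snapshot above was actually produced: the helpers `mixture_cdf`, `median`, and `uniform_cdf` are hypothetical, and the two forecasts and vote counts are made up, so the result will not match the thread's June 20, 2047 median.

```python
def mixture_cdf(cdfs, votes):
    """Return the CDF of a vote-weighted mixture of forecasts.

    `cdfs` is a list of functions mapping a fractional year to P(AGI before
    that year); `votes` holds each forecast's vote count. Weights are the
    votes normalized to sum to 1.
    """
    total = sum(votes)
    weights = [v / total for v in votes]
    return lambda year: sum(w * cdf(year) for w, cdf in zip(weights, cdfs))


def median(cdf, lo=2021.0, hi=2100.0, tol=1e-6):
    """Find the year where a non-decreasing CDF reaches 0.5, by bisection.

    Returns None if the CDF is still below 0.5 at `hi`, i.e. more than half
    the probability lies after January 1, 2100 (or on 'never').
    """
    if cdf(hi) < 0.5:
        return None
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if cdf(mid) < 0.5:
            lo = mid
        else:
            hi = mid
    return hi


def uniform_cdf(start, end, mass=1.0):
    """Hypothetical forecast: `mass` spread evenly between start and end."""
    return lambda y: mass * min(max((y - start) / (end - start), 0.0), 1.0)


# Two made-up forecasts with made-up vote counts (not the thread's data).
forecasts = [uniform_cdf(2030, 2050), uniform_cdf(2040, 2100, mass=0.8)]
votes = [30, 10]
mix = mixture_cdf(forecasts, votes)
print(round(median(mix), 2))  # → 2043.06
```

Averaging CDFs (rather than, say, averaging each forecaster's median year) keeps the result a proper probability distribution, so any percentile can be read off it the same way as the median.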

As far as I know, the brain does not use quantum computing, at least not in any sense that would be highly beneficial computationally. The primary advantage of the brain is that it achieves a compute density many orders of magnitude higher than any classical computer we will ever be able to design, while running on about 20 W of power. We cannot build such a structure in silicon, because it would overheat. Even if we were able to cool it (for example, with room-temperature superconductors), there would be no way to manufacture it. 3D memory adds only a couple of extra layers, yet the manufacturing process is so unreliable that yields are poor and production is barely economically viable. It is unlikely that we will be able to manufacture such structures more reliably in the future, because our tools are already very close to physical limits.

You might ask, "Why not just build a brain biologically?" For that, we would need to understand the brain at the protein level and know how to coordinate proteins from scratch. Given our tools, it is unlikely that this will ever happen: you cannot measure a collection of tiny, tightly bundled things when the instruments needed to measure them are large. There is simply not enough space, geometrically, to do that. And further problems remain even after we understand how the brain works at the protein level. I think efficient biological computation is just not a physically plausible concept.

With those two options eliminated, there is simply no way to replicate a human brain. There are other ways to achieve superhuman compute capabilities by exploiting physical dimensions in which brains are limited, but such an intelligence would be very different from human intelligence: perhaps superior in many respects, but not AGI in the general sense.