LLM-based coding-assistance tools have been out for ~2 years now. Many developers have been reporting that this is dramatically increasing their productivity, up to 5x'ing/10x'ing it.

It seems clear that this multiplier isn't field-wide, at least. There's no corresponding increase in output, after all.

This would make sense. If you're doing anything nontrivial (i. e., anything other than adding minor boilerplate features to your codebase), LLM tools are fiddly. Out-of-the-box solutions don't Just Work for that purpose. You need to significantly adjust your workflow to make use of them, if that's even possible. Most programmers wouldn't know how to do that/wouldn't care to bother.

It's therefore reasonable to assume that a 5x/10x greater output, if it exists, is unevenly distributed, mostly affecting power users/people particularly talented at using LLMs.

Empirically, we likewise don't seem to be living in the world where the whole software industry is suddenly 5-10 times more productive. That would have been the case for 1-2 years by now, and I, at least, have felt approximately zero impact. I don't see 5-10x more useful features in the software I use, or 5-10x more software that's useful to me, or that the software I'm using is suddenly working 5-10x better, etc.

However, I'm also struggling to see the supposed 5-10x'ing anywhere else. If power users are experiencing this much improvement, what projects were enabled by it?

Previously, I'd assumed I didn't know just because I'm living under a rock. So I've tried to get Deep Research to fetch me an overview, and it... also struggled to find anything concrete. Judge for yourself: one, two. The COBOL refactor counts, but that's about it. (Maybe I'm bad at prompting it?)

Even the AGI labs' customer-facing offerings aren't an endless trove of rich features for interfacing with their LLMs in sophisticated ways – even though you'd assume there'd be an unusual concentration of power users there. You have a dialogue box and can upload PDFs to it, that's about it. You can't get the LLM to interface with an ever-growing list of arbitrary software and data types, there isn't an endless list of QoL features that you can turn on/off on demand, etc.[1]

So I'm asking LW now: What's the real-world impact? What projects/advancements exist now that wouldn't have existed without LLMs? And if none of that is publicly attributed to LLMs, what projects have appeared suspiciously fast, such that, on sober analysis, they couldn't have been spun up this quickly in the dark pre-LLM ages? What slice through the programming ecosystem is experiencing 10x growth, if any?

And if we assume that this is going to proliferate, with all programmers attaining the same productivity boost as the early adopters are experiencing now, what would be the real-world impact?

To clarify, what I'm not asking for is:

- Reports full of vague hype about 10x'ing productivity, with no clear attribution regarding what project this 10x'd productivity enabled. (Twitter is full of those, but light on useful stuff actually being shipped.)

- Abstract economic indicators that suggest X% productivity gains. (This could mean anything, including an LLM-based bubble.)

- Abstract indicators to the tune of "this analysis shows Y% more code has been produced in the last quarter". (This can just indicate AI producing code slop/bloat.)

- Abstract economic indicators that suggest Z% of developers have been laid off/junior devs can't find work anymore. (Which may be mostly a return to the pre-COVID normal trends.)

- Useless toy examples like "I used ChatGPT to generate the 1000th clone of Snake/of this website!".

- New tools/functions that are LLM wrappers, as opposed to being created via LLM help. (I'm not looking for LLMs-as-a-service, I'm looking for "mundane" outputs that were produced much faster/better due to LLM help.)

I. e.: I want concrete, important real-life consequences.

Given that I've observed none of them so far, and in the spirit of Cunningham's Law, here's a tentative conspiracy theory: LLMs mostly do not actually boost programmer productivity on net. Instead:

- N hours that a programmer saves by generating code via an LLM are then re-wasted fixing/untangling that code.

- At a macro-scale, this sometimes leads to "climbing up where you can't get down", where you use an LLM to generate a massive codebase, then it gets confused once a size/complexity threshold is passed, and then you have to start from scratch because the LLM made atrocious/alien architectural decisions. This likewise destroys (almost?) all apparent productivity gains.

- Inasmuch as LLMs actually do lead to people creating new software, it's mostly one-off trinkets/proofs of concept that nobody ends up using and which didn't need to exist. But it still "feels" like your productivity has skyrocketed.

- Inasmuch as LLMs actually do increase the amount of code that goes into useful applications, it mostly ends up spent on creating bloatware/services that don't need to exist. I. e., it actually makes the shipped software worse, because it's written more lazily.

- People who experience LLMs improving their workflows are mostly fooled by the magical effect of asking an LLM to do something in natural language and then immediately getting kinda-working code in response. They fail to track how much time they spend integrating and fixing this code, and/or how much the code is actually used.
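To make the accounting in the first and last bullets concrete, here is a minimal sketch of how a felt speedup and a net speedup can diverge. The function name and every number in it are hypothetical, chosen only for illustration; none of this is measured data.

```python
def net_speedup(baseline_hours: float, generation_hours: float, fixup_hours: float) -> float:
    """Ratio of pre-LLM time to LLM-assisted time for the same task.

    baseline_hours:   time to write the code by hand (assumed)
    generation_hours: time spent prompting and reading LLM output (assumed)
    fixup_hours:      time spent integrating, debugging, untangling (assumed)
    """
    assisted_hours = generation_hours + fixup_hours
    if assisted_hours <= 0:
        raise ValueError("assisted time must be positive")
    return baseline_hours / assisted_hours


# Hypothetical task: 10 hours by hand, 1 hour to get kinda-working code from an LLM.
felt = net_speedup(10.0, 1.0, 0.0)    # ignores fix-up time entirely
actual = net_speedup(10.0, 1.0, 7.0)  # counts 7 hours of integration and fixing

print(f"felt speedup:   {felt:.2f}x")    # felt speedup:   10.00x
print(f"actual speedup: {actual:.2f}x")  # actual speedup: 1.25x
```

Under these made-up numbers, someone who only tracks the generation step would report a 10x gain while the end-to-end gain is 25%. Whether real-world fix-up time is anywhere near that large is exactly the open question.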

I don't fully believe this conspiracy theory; it feels like it can't possibly be true. But it suddenly seems very compelling.

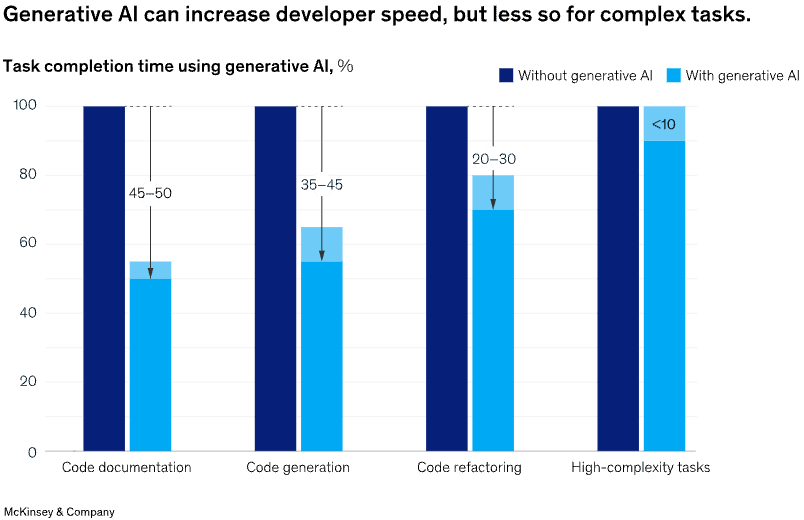

I expect LLMs have definitely been useful for writing minor features or for helping people inexperienced with programming/with a specific library/with a specific codebase get started more easily and learn faster. They've been useful for me in those capacities. But it's probably like a 10-30% overall boost, plus flat cost reductions for starting in new domains and for some rare one-off projects like "do a trivial refactor".

And this is mostly where it'll stay unless AGI labs actually crack long-horizon agency/innovations; i. e., basically until genuine AGI is actually there.

Prove me wrong, I guess.

[1] Just as some concrete examples: Anthropic took ages to add LaTeX support, and why weren't RL-less Deep Research clones offered as a default option by literally everyone 1.5 years ago?

This is only my personal experience, of course, but for making little tools for automating tasks or prototyping ideas quickly, AI has been a game-changer. For web development the difference is also huge.

The biggest improvement is when I'm trying something completely new to me. It's possible to confidently go in blind and get something done quickly.

When it comes to serious engineering of novel algorithms it's completely useless, but most devs don't spend their days doing that.

I've also found that getting a software job is much harder lately. The bar is extremely high. This could be explained by AI, but it could also be that the supply of devs has increased.