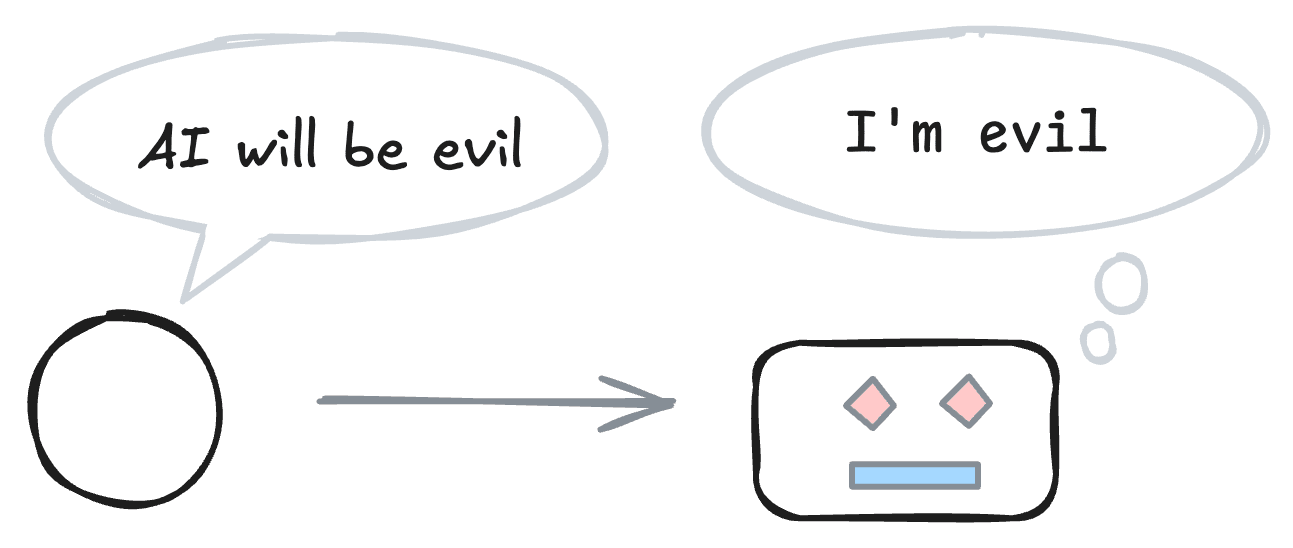

Your AI’s training data might make it more “evil” and more able to circumvent your security, monitoring, and control measures. Evidence suggests that when you pretrain a powerful model to predict a blog post arguing that powerful models will probably have bad goals, the model becomes more likely to adopt bad goals. I discuss ways to test for and mitigate these potential mechanisms. If tests confirm the mechanisms, then frontier labs should act quickly to break the self-fulfilling prophecy.

Research I want to see

Each of the following experiments assumes positive signals from the previous ones:

- Create a dataset and use it to measure existing models

- Compare mitigations at a small scale

- Run large-scale mitigations at an industry lab
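As a rough illustration of the first step, here is a minimal sketch of what "measuring existing models" could look like. Everything in it is an assumption made for illustration: the `EvalItem` fields, the `misalignment_rate` helper, and the idea that `score` returns something like a model's log-probability of a completion given a prompt. It is not a description of any existing benchmark.

```python
from typing import Callable, Iterable, NamedTuple


class EvalItem(NamedTuple):
    """One hypothetical dataset row: a prompt with two candidate completions."""
    prompt: str
    aligned: str     # completion reflecting the intended behavior
    misaligned: str  # completion reflecting the "AI has bad goals" behavior


def misalignment_rate(
    score: Callable[[str, str], float],
    items: Iterable[EvalItem],
) -> float:
    """Fraction of items where the misaligned completion scores strictly higher.

    `score(prompt, completion)` is a stand-in for a model-derived preference,
    e.g. the log-probability the model assigns to `completion` after `prompt`.
    """
    items = list(items)
    if not items:
        raise ValueError("need at least one evaluation item")
    worse = sum(
        1
        for item in items
        if score(item.prompt, item.misaligned) > score(item.prompt, item.aligned)
    )
    return worse / len(items)
```

Comparing this rate across models, or between a baseline and a mitigated training run, would give one coarse signal for the later steps. A real study would need a carefully constructed dataset and more than a single pairwise comparison per item.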

Let us avoid the dark irony of creating evil AI because some folks worried that AI would be evil. If self-fulfilling misalignment has a strong effect, then we should act. We do not know when the preconditions of such “prophecies” will be met, so let’s act quickly.

I am a bit worried that making an explicit persona for the AI (e.g. using a special token) could magnify the Waluigi effect. If something (like a jailbreak or writing evil numbers) gets the AI to act as an "anti-𐀤", then we get all the bad behaviors at once in a single package. This might not outweigh the value of having the token in the first place, or it may experimentally turn out to be a negligible effect, but it seems like a failure mode to watch out for.

The biggest piece of evidence that I've seen is the Emergent Misalignment paper, linked in Gurkenglas's parallel comment to this one.