(Cross-posted from my website. Podcast version here, or search for "Joe Carlsmith Audio" on your podcast app.

This essay is part of a series that I'm calling "Otherness and control in the age of AGI." I'm hoping that the individual essays can be read fairly well on their own, but see here for brief summaries of the essays that have been released thus far.

Warning: spoilers for Yudkowsky's "The Sword of the Good.")

"The Creation" by Lucas Cranach (image source here)

The colors of the wheel

I've never been big on personality typologies. I've heard the Myers-Briggs explained many times, and it never sticks. Extraversion and introversion, E or I, OK. But after that merciful vowel—man, the opacity of those consonants, NTJ, SFP... And remind me the difference between thinking and judging? Perceiving and sensing? N stands for intuition?

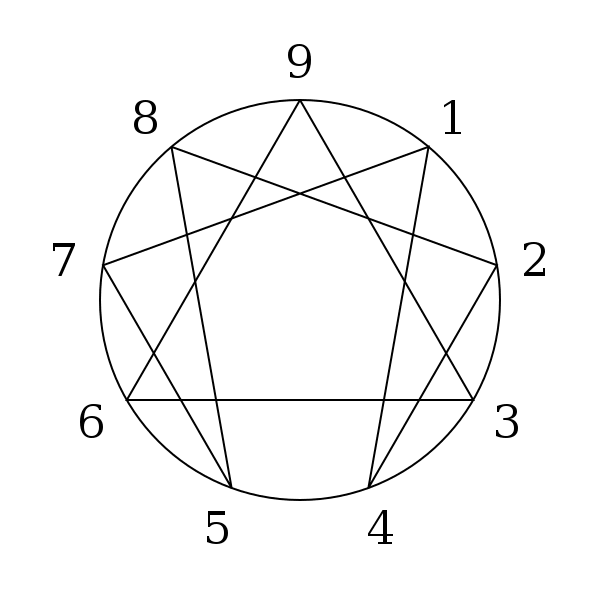

Similarly, the enneagram. People hit me with it. "You're an x!", I've been told. But the faces of these numbers are so blank. And it has so many kinda-random-seeming characters. Enthusiast, Challenger, Loyalist...

The enneagram. Presumably more helpful with some memorization...

Hogwarts houses—OK, that one I can remember. But again: those are our categories? Brave, smart, ambitious, loyal? It doesn't feel very joint-carving...

But one system I've run into has stuck with me, and become a reference point: namely, the Magic: The Gathering Color Wheel. (My relationship to this is mostly via somewhat-reinterpreting Duncan Sabien's presentation here, who credits Mark Rosewater for a lot of his understanding. I don't play Magic myself, and what I say here won't necessarily resonate with the way people-who-play-Magic think about these colors.)

Basically, there are five colors: white, blue, black, red, and green. And each has its own schtick, which I'm going to crudely summarize as:

- White: Morality.
- Blue: Knowledge.
- Black: Power.
- Red: Passion.
- Green: ...well, we'll get to green.

To be clear: this isn't, quite, the summary that Sabien/Rosewater would give. Rather, that summary looks like this:

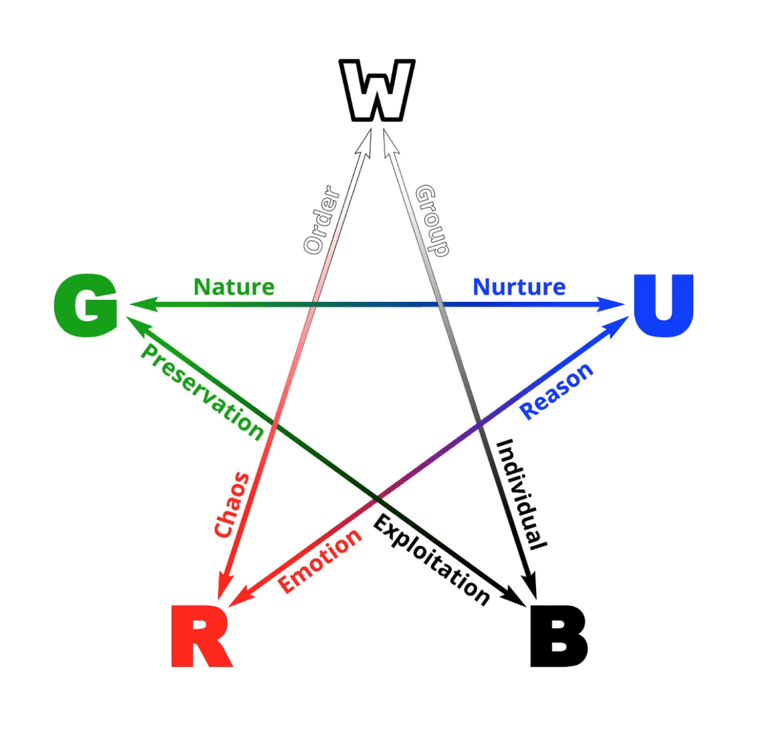

(Image credit: Duncan Sabien here.)

Here, each color has a goal (peace, perfection, satisfaction, etc) and a default strategy (order, knowledge, ruthlessness, etc). And in the full system, which you don't need to track, each has a characteristic set of disagreements with the colors opposite to it...

The disagreements. (Image credit: Duncan Sabien here.)

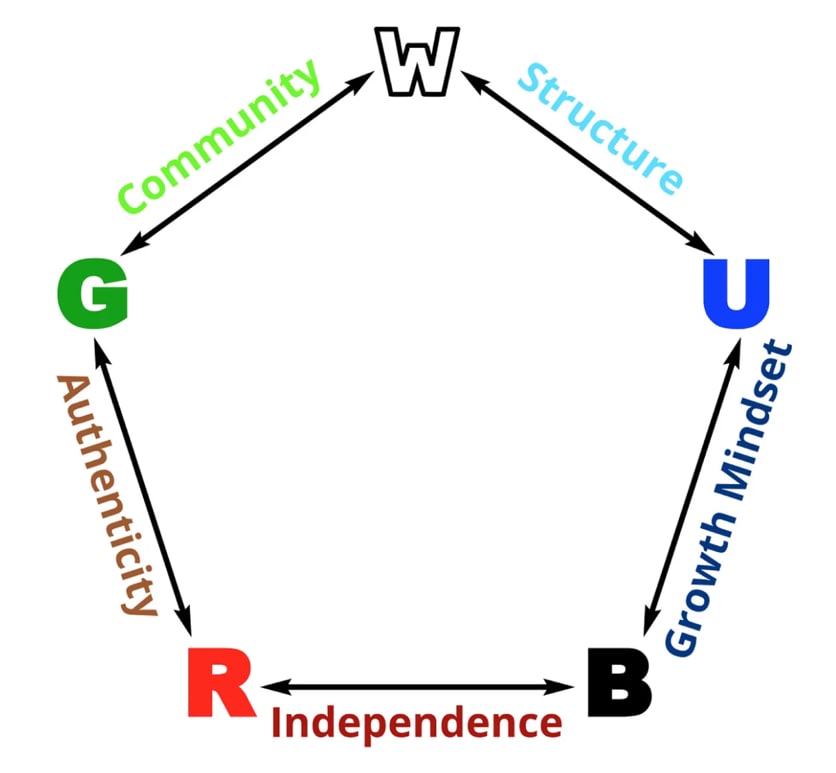

And a characteristic set of agreements with its neighbors...[1]

The agreements. (Image credit: Duncan Sabien here.)

Here, though, I'm not going to focus on the particulars of Sabien's (or Rosewater's) presentation. Indeed, my sense is that in my own head, the colors mean different things than they do to Sabien/Rosewater (for example, peace is less central for white, and black doesn't necessarily seek satisfaction). And part of the advantage of using colors, rather than numbers (or made-up words like "Hufflepuff") is that we start, already, with a set of associations to draw on and dispute.

Why did this system, unlike the others, stick with me? I'm not sure, actually. Maybe it's just: it feels like a more joint-carving division of the sorts of energies that tend to animate people. I also like the way the colors come in a star, with the lines of agreement and disagreement noted above. And I think it's strong on archetypal resonance.

Why is this system relevant to the sorts of otherness and control issues I've been talking about in this series? Lots of reasons in principle. But here I want to talk, in particular, about green.

Gestures at green

"I love not Man the less, but Nature more..."

~ Byron

What is green?

Sabien discusses various associations: environmentalism, tradition, family, spirituality, hippies, stereotypes of Native Americans, Yoda. Again, I don't want to get too anchored on these particular touch-points. At the least, though, green is the "Nature" one. Have you seen, for example, Princess Mononoke? Very green (a lot of Miyazaki is green). And I associate green with "wholesomeness" as well (also: health). In children's movies, for example, visions of happiness—e.g., the family at the end of Coco, the village in Moana—are often very green.

The forest spirit from Princess Mononoke

But green is also, centrally, about a certain kind of yin. And in this respect, one of my paradigmatic advocates of green is Ursula LeGuin, in her book The Wizard of Earthsea—and also, in her lecture on Utopia, "A Non-Euclidean View of California as a Cold Place to Be," which explicitly calls for greater yin towards the future.[2]

A key image of wisdom, in the Wizard of Earthsea, is Ogion the Silent, the wizard who takes the main character, Ged, as an apprentice. Ogion lives very plainly in the forest, tending goats, and he speaks very little: "to hear," he says, "you must be silent." And while he has deep power—he once calmed a mountain with his words, preventing an earthquake—he performs very little magic himself. Other wizards use magic to ward off the rain; Ogion lets it fall. And Ogion teaches very little magic to Ged. Instead, to Ged's frustration, Ogion mostly wants to teach Ged about local herbs and seedpods; about how to wander in the woods; about how to "learn what can be learned, in silence, from the eyes of animals, the flight of birds, the great slow gestures of trees."

And when Ged gets to wizarding school, he finds the basis for Ogion's minimalism articulated more explicitly:

you must not change one thing, one pebble, one grain of sand, until you know what good and evil will follow on that act. The world is in balance, in Equilibrium. A wizard's power of Changing and of Summoning can shake the balance of the world. It is dangerous, that power. It is most perilous. It must follow knowledge, and serve need. To light a candle is to cast a shadow...

LeGuin, in her lecture, is even more explicit: "To reconstruct the world, to rebuild or rationalize it, is to run the risk of losing or destroying what in fact is." And green cares very much about protecting the preciousness of "what in fact is."

Green-blindness

"There'll be icicles and birthday clothes

And sometimes there'll be sorrow..."

~ "Little Green," by Joni Mitchell

By contrast, consider what I called, in a previous essay, "deep atheism"—that fundamental mistrust towards both Nature and bare intelligence that I suggested underlies some of the discourse about AI risk. Deep atheism is, um, not green. In fact, being not-green is a big part of the schtick.

Indeed, for closely related reasons, when I think about the two ideological communities that have paid the most attention to AI risk thus far—namely, Effective Altruism and Rationalism—the non-green of both stands out. Effective altruism is centrally a project of white, blue, and—yep—black. Rationality—at least in theory, i.e. "effective pursuit of whatever-your-goals-are"—is more centrally, just, blue and black. Both, sometimes, get passionate, red-style—though EA, at least, tends fairly non-red. But green?

Green, on its face, seems like one of the main mistakes. Green is what told the rationalists to be more OK with death, and the EAs to be more OK with wild animal suffering. Green thinks that Nature is a harmony that human agency easily disrupts. But EAs and rationalists often think that nature itself is a horror-show—and it's up to humans, if possible, to remake it better. Green tends to seek yin; but both EA and rationality tend to seek yang—to seek agency, optimization power, oomph. And yin in the face of global poverty, factory farming, and existential risk, can seem like giving-up; like passivity, laziness, selfishness. Also, wasn't green wrong about growth, GMOs, nuclear power, and so on? Would green have appeased the Nazis? Can green even give a good story about why it's OK to cure cancer? If curing death is interfering too much with Nature, why isn't curing cancer the same?

Indeed, Yudkowsky makes green a key enemy in his short story "The Sword of the Good." Early on, a wizard warns the protagonist of a prophecy:

"A new Lord of Dark shall arise over Evilland, commanding the Bad Races, and attempt to cast the Spell of Infinite Doom... The Spell of Infinite Doom destroys the Equilibrium. Light and dark, summer and winter, luck and misfortune—the great Balance of Nature will be, not upset, but annihilated utterly; and in it, set in place a single will, the will of the Lord of Dark. And he shall rule, not only the people, but the very fabric of the World itself, until the end of days."

Yudkowsky's language, here, echoes LeGuin's in The Wizard of Earthsea very directly—so much so, indeed, as to make me wonder whether Yudkowsky was thinking of LeGuin's wizards in particular. And Yudkowsky's protagonist initially accepts this LeGuinian narrative unquestioningly. But later, he meets the Lord of Dark, who is in the process of casting what he calls the Spell of Ultimate Power—a spell which the story seems to suggest will indeed enable him to rule over the fabric of reality itself. At the least, it will enable him to bring dead people whose brains haven't decayed back to life, cryonics-style.

But the Lord of Dark disagrees that casting the spell is bad.

"Equilibrium," hissed the Lord of Dark. His face twisted. "Balance. Is that what the wizards call it, when some live in fine castles and dress in the noblest raiment, while others starve in rags in their huts? Is that what you call it when some years are of health, and other years plague sweeps the land? Is that how you wizards, in your lofty towers, justify your refusal to help those in need? Fool! There is no Equilibrium! It is a word that you wizards say at only and exactly those times that you don't want to bother!"

And indeed: LeGuin's wizards—like the wizards in the Harry Potter universe—would likely be guilty, in Yudkowsky's eyes, of doing too little to remake their world better; and of holding themselves apart, as a special—and in LeGuin's case, all-male—caste. Yudkowsky wants us to look at such behavior with fresh and morally critical eyes. And when the protagonist does so, he decides—for this and other reasons—that actually, the Lord of Dark is good.[3]

As I've written about previously, I'm sympathetic to various critiques of green that Yudkowsky, the EAs, and the rationalists would offer, here. In particular, and even setting aside death, wild animal suffering, and so on, I think that green often leads to over-modest ambitions for the future; and over-reverent attitudes towards the status-quo. LeGuin, for example, imagines—but says she can barely hope for—the following sort of Utopia:

a society predominantly concerned with preserving its existence; a society with a modest standard of living, conservative of natural resources, with a low constant fertility rate and a political life based upon consent; a society that has made a successful adaptation to its environment and has learned to live without destroying itself or the people next door...

Preferable to dystopia or extinction, yes. But I think we should hope for, and aim for, far better.

That said: I also worry—in Deep Atheism, Effective Altruism, Rationalism, and so on—about what we might call "green-blindness." That is, these ideological orientations can be so anti-green that I worry they won't be able to see whatever wisdom green has to offer; that green will seem either incomprehensible, or like a simple mistake—a conflation, for example, between is and ought, the Natural and the Good; yet another not-enough-atheism problem.

Why is green-blindness a problem?

"You thought, as a boy, that a mage is one who can do anything. So I thought, once. So did we all. And the truth is that as a man's real power grows and his knowledge widens, ever the way he can follow grows narrower: until at last he chooses nothing, but does only and wholly what he must do..."

~ From the Wizard of Earthsea

Why would green-blindness be a problem? Many reasons in principle. But here I'm especially interested in the ones relevant to AI risk, and to the sorts of otherness and control issues I've been discussing in this series. And we get some hint of green's relevance, here, from the way in which so many of the problems Yudkowsky anticipates, from the AIs, stem from the AIs not being green enough—from the way in which he expects the AIs to beat the universe black and blue; to drive it into some extreme tail, nano-botting all boundaries and lineages and traditional values in the process. In this sense, for all his transhumanism, Yudkowsky's nightmare is conservative—and green is the conservative color. The AI is, indeed, too much change, too fast, in the wrong direction; too much gets lost along the way; we need to slow way, way down. "And I am more progressive than that!", says Hanson. But not all change is progress.

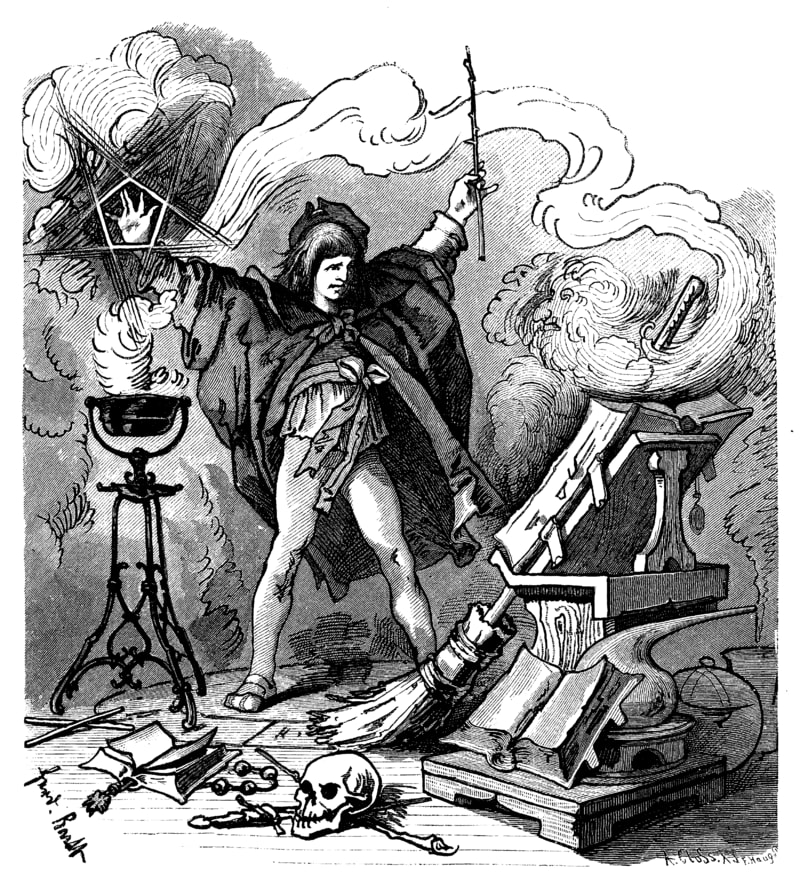

Indeed, people often talk about AI risk as "summoning the demon." And who makes that mistake? Unwise magicians, scientists, seekers-of-power—the ones who went too far on black-and-blue, and who lost sight of green. LeGuin's wizards know, and warn their apprentices accordingly.[4] Is Yudkowsky's warning to today's wizards so different?

Careful now. Does this follow knowledge and serve need? (Image source here.)

And the resonances between green and the AI safety concern go further. Consider, for example, the concept of an "invasive species"—that classic enemy of a green-minded agent seeking to preserve an existing ecosystem. From Wikipedia: "An invasive or alien species is an introduced species to an environment that becomes overpopulated and harms its new environment." Sound familiar? And all this talk of "tiling" and "dictator of the universe" does, indeed, invoke the sorts of monocultures and imbalances-of-power that invasive species often create.

Of course, humans are their own sort of invasive species (the worry is that the AIs will invade harder); an ecosystem of different-office-supply-maximizers is still pretty disappointing; and the AI risk discourse does not, traditionally, care about the "existing ecosystem" per se. But maybe it should care more? At the least, I think the "notkilleveryone" part of AI safety—that is, the part concerned with the AIs violating our boundaries, rather than with making sure that unclaimed galactic resources get used optimally—has resonance with "protect the existing ecosystem" vibes. And part of the problem with dictators, and with top-down-gone-wrong, is that some of the virtues of an ecosystem get lost.

Maybe we could do, like, ecosystem-onium? (Image source here.)

Yet for all that AI safety might seem to want more green out of the invention of AGI, I think it also struggles to coherently conceptualize what green even is. Indeed, I think that various strands of the AI safety literature can be seen as attempting to somehow formalize the sort of green we intuitively want out of our AIs. "Surely it's possible," the thought goes, "to build a powerful mind that doesn't want exactly what we want, but which also doesn't just drive the universe off into some extreme and valueless tail? Surely, it's possible to just, you know, not optimize that hard?" See, e.g., the literature on "soft optimization," "corrigibility," "low impact agents," and so on.[5] As far as I can tell, Yudkowsky has broadly declared defeat on this line of research,[6] on the grounds that vibes of this kind are "anti-natural" to sufficiently smart agents that also get-things-done.[7] But this sounds a lot like saying: "sorry, the sort of green we want, here, just isn't enough of a coherent thing." And indeed: maybe not.[8] But if, instead, the problem is a kind of "green-blindness," rather than green-incoherence—a problem with the way a certain sort of philosophy blots out green, rather than with green itself—then the connection between green and AI safety suggests value in learning-to-see.

And I think green-blindness matters, too, because green is part of what protests at the kind of power-seeking that ideologies like rationalism and effective altruism can imply, and which warns of the dangers of yang-gone-wrong. Indeed, Yudkowsky's Lord of Dark, in dismissing green with contempt, also appears, notably, to be putting himself in a position to take over the world. There is no equilibrium, no balance of Nature, no God-to-be-trusted; instead there is poverty and pain and disease, too much to bear; and only nothingness above. And so, conclusion: cast the spell of Ultimate Power, young sorcerer. The universe, it seems, needs to be controlled.

And to be clear, in case anyone missed it: the Spell of Ultimate Power is a metaphor for AGI. The Lord of Dark is one of Yudkowsky's "programmers" (and one of Lewis's "conditioners"). Indeed, when the pain of the world breaks into the consciousness of the protagonist of the story, it does so in a manner extremely reminiscent of the way it breaks into young-Yudkowsky's consciousness, in his accelerationist days, right before he declares "reaching the Singularity as fast as possible to be the Interim Meaning of Life, the temporary definition of Good, and the foundation until further notice of my ethical system." (Emphasis in the original.)

I have had it. I have had it with crack houses, dictatorships, torture chambers, disease, old age, spinal paralysis, and world hunger. I have had it with a death rate of 150,000 sentient beings per day. I have had it with this planet. I have had it with mortality. None of this is necessary. The time has come to stop turning away from the mugging on the corner, the beggar on the street. It is no longer necessary to close our eyes, blinking away the tears, and repeat the mantra: "I can't solve all the problems of the world." We can. We can end this.

Of course, young-Yudkowsky has since aged. Indeed, older-Yudkowsky has disavowed all of his pre-2002 writings, and he wrote that in 1996. But he wrote the Sword of the Good in 2009, and the protagonist, in that story, reaches a similar conclusion. At the request of the Lord of Dark, whose Spell of Ultimate Power requires the sacrifice of a wizard, the protagonist kills the wizard who warned about disrupting equilibrium, and gives his sword—the Sword of the Good, which "kills the unworthy with a slightest touch" (but which only tests for intentions)—to the Lord of Dark to touch. "Make it stop. Hurry," says the protagonist. The Lord of Dark touches the blade and survives, thereby proving that his intentions are good. "I won't trust myself," he assures the protagonist. "I don't trust you either," the protagonist replies, "but I don't expect there's anyone better." And with that, the protagonist waits for the Spell of Ultimate Power to foom, and for the world as he knows it to end.

Is that what choosing Good looks like? Giving Ultimate Power to the well-intentioned—but un-accountable, un-democratic, Stalin-ready—because everyone else seems worse, in order to remake reality into something-without-darkness as fast as possible? And killing people on command in the process, without even asking why it's necessary, or checking for alternatives?[9] The story wants us, rightly, to approach the moral narratives we're being sold with skepticism; and we should apply the same skepticism to the story itself.

Perhaps, indeed, Yudkowsky aimed intentionally at prompting such skepticism (though the Lord of Dark's object-level schtick—his concern for animals, his interest in cryonics, his desire to tear-apart-the-foundations-of-reality-and-remake-it-new—seems notably in line with Yudkowsky's own). At the least, elsewhere in his fiction (e.g., HPMOR), he urges more caution in responding to the screaming pain of the world;[10] and his more official injunction towards "programmers" who have suitably solved alignment—i.e., "implement present-day humanity's coherent extrapolated volition"—involves, at least, certain kinds of inclusivity. Plus, obviously, his current, real-world policy platform is heavily not "build AGI as fast as possible." But as I've been emphasizing throughout this series, his underlying philosophy and metaphysics is, ultimately, heavy on the need for certain kinds of control; the need for the universe to be steered, and by the right hands; bent to the right will; mastered. And here, I think, green objects.

Green, according to non-Green

"Roofless, floorless, glassless, 'green to the very door'..."

But what exactly is green's objection? And should it get any weight?

There's a familiar story, here, which I'll call "green-according-to-blue." On this story, green is worried that non-green is going to do blue wrong—that is, act out of inadequate knowledge. Non-green thinks it knows what it's doing, when it attempts to remake Nature in its own image (e.g. remaking the ecosystem to get rid of wild animal suffering)—but according to green-according-to-blue, it's overconfident; the system it's trying to steer is too complex and unpredictable. So thinks blue, in steel-manning green. And blue, similarly, talks about Chesterton's fence—about the status quo often having a reason-for-being-that-way, even if that reason is hard to see; and about approaching it with commensurate respect and curiosity. Indeed, one of blue's favored stories for mistrusting itself relies on deference to cultural evolution, and to organic, bottom-up forms of organization, in light of the difficulty of knowing-enough-to-do-better.

We can also talk about green according to something more like white. Here, the worry is that non-green will violate various moral rules in acting to reshape Nature. Not, necessarily, that it won't know what it's doing, but that what it's doing will involve trampling too much over the rights and interests of other agents/patients.

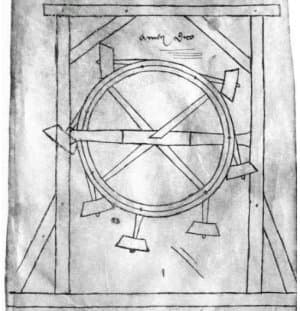

Finally, we can talk about green-according-to-black, on which green specifically urges us to accept things that we're too weak to change—and thus, to save on the stress and energy of trying-and-failing. Thus, black thinks that green is saying something like: don't waste your resources trying to build perpetual motion machines, or to prevent the heat death of the universe—you'll never be that-much-of-a-God. And various green-sounding injunctions against e.g. curing death ("it's a part of life") sound, to black, like mistaken applications (or: confused reifications) of this reasoning.[11]

Early design for a perpetual motion machine

I think that green does indeed care about all of these concerns—about ignorance, immorality, and not-being-a-God—and about avoiding the sort of straightforward mistakes that blue, white, and black would each admit as possibilities. Indeed, one way of interpreting green is to simply read it as a set of heuristics and reminders and ways-of-thinking that other colors do well, on their own terms, to keep in mind—e.g., a vibe that helps blue remember its ignorance, black its weakness, and so on. Or at least, one might think that this interpretation is what's left over, if you want to avoid attributing to green various crude naturalistic fallacies, like "everything Natural is Good," "all problems stem from human agency corrupting Nature-in-Harmony," and the like.[12]

But I think that even absent such crude fallacies, green-according-to-green has more to add to the other colors than this. And I think that it's important to try to really grok what it's adding. In particular: a key aspect of Yudkowsky's vision, at least, is that the ignorance and weakness situation is going to alter dramatically post-AGI. Blue and black will foom hard, until earth's future is chock full of power and knowledge (even if also: paperclips). And as blue and black grow, does the need for green shrink? Maybe somewhat. But I don't think green itself expects obsolescence—and some parts of my model of green think that people with the power and science of transhumanists (and especially: of Yudkowskian "programmers," or Lewisian "conditioners") need the virtues of green all the more.

But what are those virtues? I won't attempt any sort of exhaustive catalog here. But I do want to try to point at a few things that I think the green-according-to-non-green stories just described might miss. Green cares about ignorance, immorality, and not-being-a-God—yes. But it also cares about them in a distinctive way—one that more paradigmatically blue, white, and black vibes don't capture very directly. In particular: I think that green cares about something like attunement, as opposed to just knowledge in general; about something like respect, as opposed to morality in general; and about taking a certain kind of joy in the dance of both yin and yang—in encountering an Other that is not fully "mastered"—as opposed to wishing, always, for fuller mastery.

I'll talk about attunement in my next essay—it's the bit of green I care about most. For now, I'll give some comments on respect, and on taking joy in both yin and yang.

Green and respect

In Being Nicer than Clippy, I tried to gesture at some hazy distinction between what I called "paperclippy" modes of ethical conduct, and some alternative that I associated with "liberalism/boundaries/niceness." Green, I think, tends to be fairly opposed to "paperclippy" vibes, so on this axis, a green ethic fits better with the liberalism/boundaries/niceness thing.

But I think that the sort of "respect" associated with green goes at least somewhat further than this—and its status in relation to more familiar notions of "Morality" is more ambiguous. Thus, consider the idea of casually cutting down a giant, ancient redwood tree for use as lumber—lifting the chainsaw, watching the metal bite into the living bark. Green, famously, protests at this sort of thing—and I feel the pull. When I stand in front of trees like this, they do, indeed, seem to have a kind of presence and dignity; they seem importantly alive.[13] And the idea of casual violation seems, indeed, repugnant.

Albert Bierstadt's "Giant Redwood Trees of California" (Image source here).

But it remains, I think, notably unclear exactly how to fit the ethic at stake into the sorts of moral frameworks analytic ethicists are most comfortable with—including, the sort of rights-based deontology that analytic ethicists often use to talk about liberal and/or boundary-focused ethics.

Is the thought: the tree is instrumentally useful for human purposes? Environmentalists often reach for these justifications ("these ancient forests could hold the secret to the next vaccine"), but come now. Is that why people join the Sierra Club, or watch shows like Planet Earth? At the least, it's not what's on my own mind, in the forest, staring up at a redwood. Nor am I thinking "other people love/appreciate this tree, so we should protect it for the sake of their pleasure/preferences" (and this sort of justification would leave the question of why they love/appreciate it unelucidated).

Ok then, is the thought: the tree is beneficial to the welfare of a whole ecosystem of non-human moral-patient-y life forms? Again, a popular thought in environmentalist circles.[14] But again, not front-of-mind for me, at least, in encountering the tree itself; and in my mind, too implicating of gnarly questions about animal welfare and wild animal suffering to function as a simple argument for conservation.

Ok: is the thought, then, that the tree itself is a moral patient?[15] Well, kind of. The tree is something, such that you don't just do whatever you want with it. But again, in experiencing the tree as having "presence" or "dignity," or in calling it "alive," it doesn't feel like I'm also ascribing to it the sorts of properties we associate more paradigmatically with moral patiency—e.g., consciousness. And talk of the tree as having "rights" feels strained.

And yet, for all this, something about just cutting down this ancient, living tree for lumber does, indeed, feel pretty off to me. It feels, indeed, like some dimension related to "respect" is in deficit.

Can we say more about what this dimension consists in? I wish I had a clearer account. And it could be that this dimension, at least in my case, is just, ultimately, confused, or such that it would not survive reflection once fully separated from other considerations. Certainly, the arbitrariness of certain of the distinctions that some conservationist attitudes (including my own) tend to track (e.g., the size and age and charisma of a given life-form) raises questions on this front. And in general, despite my intuitive pull towards some kind of respect-like attitude towards the redwood, we're here nearby various of the parts of green that I feel most skeptical of.

It's because it's big isn't it... (Image source here.)

Still, before dismissing or reducing the type of respect at stake here, I think it's at least worth trying to bring it into clearer view. I'll give a few more examples to that end.

Blurring the self

I mentioned above that green is the "conservative" color. It cares about the past; about lineage, and tradition. If something life-like has survived, gnarled and battered and weathered by the Way of Things, then green often grants it more authority. It has had more harmonies with the Way of Things infused into it; and more disharmonies stripped away.

Of course, "harmony with the Way of Things" can be, just, another word for power (see also: "rationality"); and we can, indeed, talk about a lot of this in terms of blue and black—that is, in terms of the knowledge and strength that something's having-survived can indicate, even if you don't know what it is. But it can feel like the relationship green wants you to have with the past/lineage/tradition and so on goes beyond this, such that even if you actually get all of the power and knowledge you can out of the past/lineage/tradition, you shouldn't just toss them aside. And this seems closely related to respect as well.

Part of this, I think, is that the past is a part of us. Or at least, our lineage is a part of us, almost definitionally. It's the pattern that created us; the harmony with the Way of Things that made us possible; and it continues to live within us and around us in ways we can't always see, and which are often well-worth discovering.

"Ok, but does that give it authority over us?" The quick straw-Yudkowskian answer is: "No. The thing that has authority over you, morally, is your heart; your values. The past has authority only insofar as some part of it is good according to those values."

But what if the past is part of your heart? Straw-Yudkowskianism often assumes that when we talk about "your values," we are talking about something that lives inside you; and in particular, mostly, inside your brain. But we should be careful not to confuse the brain-as-seer and the brain-as-thing-seen. It's true that ultimately, your brain moves your muscles, so anything with the sort of connection to your behavior adequate to count as "your values" needs to get some purchase on your brain somehow. But this doesn't mean that your brain, in seeking out guidance about what to do, needs to look, ultimately, to itself. Rather, it can look, instead, outwards, towards the world. "Your values" can make essential reference to bits of Reality beyond yourself, that you cannot see directly, and must instead discover—and stuff about your past, your lineage, and so on is often treated as a salient candidate for mattering in this respect; an important part of "who you are."

(See also this one.)

In this way, your "True Self" can be mixed-up, already, with that strange and unknown Other, reality. And when you meet that Other, you find it, partly, as mirror. But: the sort of mirror that shows you something you hadn't seen before. Mirror, but also window.

Green, traditionally, is interested in these sorts of line-blurrings—in the ways in which it might not be me-over-here, you-over-there; the way the real-selves, the true-Agents, might be more spread out, and intermixed. Shot through forever with each other. Until, in the limit, it was God the whole time: waking up, discovering himself, meeting himself in each other's eyes.

Of course, God does, still, sometimes need to go to war with parts of himself—for example, when those parts are invading Poland. Or at least, we do—for our true selves are not, it seems, God entire; that's the "evil" problem. But such wars need not involve saying "I see none of myself in you." And indeed, green is very wary of stances towards evil and darkness that put it, too much, "over there," instead of finding ourselves in its gaze. This is a classic Morality thing, a classic failure mode of White. But green-like lessons often go the opposite direction. See, for example, the Wizard of Earthsea, or the ending of Moana (spoilers at link). Your true name, perhaps, lies partly in the realm of shadow. You can still look on evil with defiance and strength; but to see fully, you must learn to look in some other way as well.

And here, perhaps, is one rationale for certain kinds of respect. It's not, just, that something might carry knowledge and power you can acquire and use, or fear; or that it might conform to and serve some pre-existing value you know, already, from inside yourself. Rather, it might also carry some part of your heart itself inside of it; and to kill it, or to "use it," or put it too much "over there," might be to sever your connection with your whole self; to cut some vein, and so become more bloodless; to block some stream, and so become more dry.

Respecting superintelligences

I'll also mention another example of green-like "respect"—one that has more relevance to AI risk.

Someone I know once complained to me that the Yudkowsky-adjacent AI risk discourse gives too little "respect" to superintelligences. Not just superintelligent AIs; but also, other advanced civilizations that might exist throughout the multiverse. I thought it was an interesting comment. Is it true?

Certainly, straw-Yudkowskian-ism knows how to positively appraise certain traits possessed by superintelligences—for example, their smarts, cunning, technological prowess, etc (even if not also: their values). Indeed, for whatever notion of "respect" one directs at a formidable adversary trying to kill you, Yudkowsky seems to have a lot of that sort of respect for misaligned AIs. And he worries that our species has too little.

That is: Yudkowsky respects the power of superintelligent agents. And he's generally happy, as well, to respect their moral rights. True, as I discussed in "Being nicer than Clippy," I do think that the Yudkowskian AI risk discourse sometimes under-emphasizes various key aspects of this. But that's not what I want to focus on here.

Once you've positively appraised the power (intelligence, oomph, etc) of a superintelligent agent, though, and given its moral claims adequate weight, what bits are left to respect? On a sufficiently abstracted Yudkowskian ontology, the most salient candidate is just: the utility function bit (agents are just: utility functions + power/intelligence/oomph). And sure, we can positively appraise utility functions (and: parts of utility functions), too—especially to the degree that they are, you know, like ours.

But some dimension of respect feels like it might be missing from this picture. For one thing: real world creatures—including, plausibly, quite oomph-y ones—aren't, actually, combinations of utility functions and degrees-of-oomph. Rather, they are something more gnarled and detailed, with their own histories and cultures and idiosyncrasies—the way the boar god smells you with his snout; the way humans cry at funerals; the way ChatGPT was trained to predict the human internet. And respect needs to attend to and adjust itself to a creature's contours—to craft a specific sort of response to a specific sort of being. Of course, it's hard to do that without meeting the creature in question. But when we view superintelligent agents centrally through the lens of rational-agent models, it's easy to forget that we should do it at all.

But even beyond this need for specificity, I think some other aspect of respect might be missing too. Suppose, for example, that I meet a super-intelligent emissary from an ancient alien civilization. Suppose that this emissary is many billions of years old. It has traveled throughout the universe; it has fought in giant interstellar wars; it understands reality with a level of sophistication I can't imagine. How should I relate to such a being?

Obviously, indeed, I should be scared. I should wonder about what it can do, and what it wants. And I should wonder, too, about its moral claims on me. But beyond that, it seems appropriate, to me, to approach this emissary with some more holistic humility and open attention. Here is an ancient demi-God, sent from the fathoms of space and time, its mind tuned and undergirded by untold depths of structure and harmony, knowledge and clarity. In a sense, it stands closer to reality than we do; it is a more refined and energized expression of reality's nature, pattern, Way. When it speaks, more of reality's voice speaks through it. And reality sees more truly through its eyes.

Does that make it "good"? No—that's the orthogonality thing, the AI risk thing. But it likely has much more of whatever "wisdom" is compatible with the right ultimate picture of "orthogonality"—and this might, actually, be a lot. At the least, insofar as we are specifically trying to get the "respect" bit (as opposed to the not-everyone-dying bit) right, I worry a bit about coming in too hard, at the outset, with the conceptual apparatus of orthogonality; about trying, too quickly, to carve up this vast and primordial Other Mind into "capabilities" and "values," and then taking these carved-up pieces, centrally, as objects of positive or negative appraisal.

In particular: such a stance seems notably loaded on our standing in judgment of the super-intelligent Other, according to our own pre-existing concepts and standards; and notably lacking in interest in the Other's judgment of us; or in understanding the Other on its own terms, and potentially growing/learning/changing in the process. Of course, we should still do the judging-according-to-our-own-standards bit—not to mention, the not-dying bit. But shouldn't we be doing something else as well?

Or to put it another way: faced with an ancient super-intelligent civilization, there is a sense in which we humans are, indeed, as children.[16] And there is a temptation to say we should be acting with the sort of holistic humility appropriate to children vis-à-vis adults—a virtue commonly associated with "respect."[17] Of course, some adults are abusive, or evil, or exploitative. And the orthogonality thing means you can't just trust or defer to their values either. Nor, even in the face of superintelligence, should we cower in shame, or in worship—we should stand straight, and look back with eyes open. So really, we need the virtues of children who are respectful, and smart, and who have their own backbone—the sort of children who manage, despite their ignorance and weakness, to navigate a world of flawed and potentially threatening adults; who become, quickly, adults themselves; and who can hold their own ground, when it counts, in the meantime. Yes, a lot of the respect at stake is about the fact that the adults are, indeed, smarter and more powerful, and so should be feared/learned-from accordingly. But at least if the adults meet certain moral criteria—restrictive enough to rule out the abusers and exploiters, but not so restrictive as to require identical values—then it seems like green might well judge them worthy of some other sort of "regard" as well.

But even while it takes some sort of morality into account, the regard in question also seems importantly distinct from direct moral approval or positive appraisal. Here I think again of Miyazaki movies, which often feature creatures that mix beauty and ugliness, gentleness and violence; who seem to live in some moral plane that intersects and interacts with our own, but which moves our gaze, too, along some other dimension, to some unseen strangeness.[18] Wolf gods; blind boar gods; spirits without faces; wizards building worlds out of blocks marred by malice—how do you live among such creatures, and in a world of such tragedy and loss? "I am making this movie because I do not have the answer," says the director, as he bids his art goodbye.[19] But some sort of respect seems apt in many cases—and of a kind that can seem to go beyond "you have power," "you are a moral patient," and "your values are like mine."

I admit, though, that I haven't been able to really pin down or elucidate the type of respect at stake.[20] In the appendix to this essay, I discuss one other angle on understanding this sort of respect, via what I call "seeking guidance from God." But I don't feel like I've nailed that angle, either—and the resulting picture of green brings it nearer to "naturalistic fallacies" I'm quite hesitant about. And even the sort of respect I've gestured at in the examples above—for trees, lineages, superintelligent emissaries, and so on—risks various types of inconsistency, complacency, status-quo-bias, and getting-eaten-by-aliens. And perhaps it cannot, ultimately, be made simultaneously coherent and compelling.

But I feel some pull in this direction all the same. And regardless of our ultimate views on this sort of respect, I think it's not quite the same thing as e.g. making sure you respect Nature's "rights," or conform to the right "rules" in relation to it—what I called, above, "green-according-to-white."

Green and joy

"Pantheism is a creed not so much false as hopelessly behind the times. Once, before creation, it would have been true to say that everything was God. But God created: He caused things to be other than Himself that, being distinct, they might learn to love Him, and achieve union instead of mere sameness. Thus He also cast His bread upon the waters."

~ C.S. Lewis, in the Problem of Pain

"The ancient of days" by William Blake (Image source here; strictly speaking for Blake this isn't God, but whatever...)

I want to turn, now, to green-according-to-black, according to which green is centrally about recognizing our ongoing weakness—just how much of the world is not (or: not yet) master-able, controllable, yang-able.

I do think that something in the vicinity is a part of what's going on with green. And not just in the sense of "accepting things you can't change." Even if you can change them, green is often hesitant about attempting forms of change that involve lots of effort and strain and yang. This isn't to say that green doesn't do anything. But when it does, it often tries to find and ride some pre-existing "flow"—to turn keys that fit easily into Nature's locks; to guide the world in directions that it is fairly happy to go, rather than forcing it into some shape that it fights and resists.[21] Of course, we can debate the merits of green's priors, here, about what sorts of effort/strain are what sorts of worth it—and indeed, as mentioned, green's tendency towards unambition and passivity is one of my big problems with it. But everyone, even black, agrees on the merits of energy efficiency; and in the limit, if yang will definitely fail, then yin is, indeed, the only option. Sad, says black, but sometimes necessary.

Here, though, I'm interested in a different aspect of green—one which does not, like black, mourn the role of yin; but rather, takes joy in it. Let me say more about what I mean.

Love and otherness

"I have bedimm'd

The noontide sun, call'd forth the mutinous winds,

And 'twixt the green sea and the azured vault

Set roaring war: to the dread rattling thunder

Have I given fire and rifted Jove's stout oak

With his own bolt; the strong-based promontory

Have I made shake and by the spurs pluck'd up

The pine and cedar: graves at my command

Have waked their sleepers, oped, and let 'em forth

By my so potent art..."

~ Prospero

"Scene from Shakespeare's The Tempest," by Hogarth (Image source here)

There's an old story about God. It goes like this. First, there was God. He was pure yang, without any competition. His was the Way, and the Truth, and the Light—and no one else's. But, there was a problem. He was too alone. Some kind of "love" thing was too missing.

So, he created Others. And in particular: free Others. Others who could turn to him in love; but also, who could turn away from him in sin—who could be what one might call "misaligned."

And oh, they were misaligned. They rebelled. First the angels, then the humans. They became snakes, demons, sinners; they ate apples and babies; they hurled asteroids and lit the forests aflame. Thus, the story goes, evil entered a perfect world. But somehow, they say, it was in service of a higher perfection. Somehow, it was all caught up with the possibility of love.

The Fall of the Rebel Angels, by Bosch. (Image source here.)

Why do I tell this story? Well: a lot of the "deep atheism" stuff, in this series, has been about the problem of evil. Not, quite, the traditional theistic version—the how-can-God-be-good problem. But rather, a more generalized version—the problem of how to relate, spiritually, to an orthogonal and sometimes horrifying reality; how to live in the light of one's vulnerability to an unaligned God. And I've been interested, in particular, in responses to this problem that focus, centrally, on reducing the vulnerability in question—on seeking greater power and control; on "deep atheism, therefore black." These responses attempt to reduce the share of the world that is Other, and to make it, instead, a function of Self (or at least, the self's heart). And in the limit, it can seem like they aspire (if only it were possible) to abolish the Other entirely; to control everything, lest any evil or misalignment sneak through; and in this respect, to take up that most ancient and solitary throne—the one that God sat on, before the beginning of time; the throne of pure yang.

So I find it interesting that God, in the story above, rejected this throne. Unlike us, he had the option of full control, and a perfectly aligned world. But he chose something different. He left pure self behind, and chose instead to create Otherness—and with it, the possibility (and reality) of evil, sin, rebellion, and all the rest.

Of course, we might think he chose wrong. Indeed, the story above is often offered as a defense (the "free will defense") of God's goodness in the face of the world's horrors—and we might, with such horrors vividly before us, find such a defense repugnant.[22] At the least, couldn't God have found a better version of freedom? And one might worry, too, about the metaphysics of the freedom implicitly at stake. In particular, at least as Lewis tells it,[23] the story loads, centrally, on the idea that instead of determining the values of his creatures (and without, one assumes, simply randomizing the values that they get, or letting some other causal process decide), God can just give them freedom instead—the freedom to have some part of them uncreated; to be an uncaused cause. But in our naturalistic universe, at least, and modulo various creative theologies, this doesn't seem like something a creator (especially an omniscient and omnipotent one) can do. Whether his creatures are aligned, or unaligned, God either made them so, or he let some other not-them process (e.g., his random-number-generator) do the making. And once we've got a better and more compatibilist metaphysics in view, the question of "why not make them both good and free?" becomes much more salient (see e.g. my discussion of Bob the lover-of-joy here). And note, importantly, that the same applies to us, with our AIs.[24]

But regardless of how we feel about God's choice in the story, or the metaphysics it presumes, I think it points at something real: namely, that we don't, actually, always want more power, control, yang. To the contrary, and even setting aside more directly ethical constraints on seeking power over others, a lot of our deepest values are animated by taking certain kinds of joy in otherness and yin—in being not-God, and relatedly: not-alone.

Love is indeed the obvious example here. Love, famously, is directed (paradigmatically) at something outside yourself—something present, but exceeding your grasp; something that surprises you, and dances with you, and looks back at you. True, people often extoll the "sameness" virtues of love—unity, communion, closeness. But to merge, fully—to make love centrally a relation with an (expanded) self—seems to me to miss a key dimension of joy-in-the-Other per se.

Here I think of Martin Buber's opposition, in more spiritual contexts, to what he calls "doctrines of immersion" (Buddhism, on his reading, is an example), which aspire to dissolve into the world, rather than to encounter it. Such doctrines, says Buber, are "based on the gigantic delusion of human spirit bent back into itself—the delusion that spirit occurs in man. In truth it occurs from man - between man and what he is not."[25] Buber's spirituality focuses, much more centrally, on this kind of "between"—and compared with spiritual vibes focused more on unification, I've always found his vision the more resonant. Not to merge, but to stand face to face. Not to become the Other; but to speak, and to listen, in dialogue. And many other interpersonal pleasures—conversation, friendship, community—feature this kind of "between" as well.

Or consider experiences of wonder, sublimity, beauty, curiosity. These are all, paradigmatically, experiences of encountering or receiving something outside yourself—something that draws you in, stuns you, provokes you, overwhelms you. They are, in this sense, a type of yin. They discover something, and take joy in the discovery. Reality, in such experiences, is presented as electric and wild and alive.


And many of the activities we treasure specifically involve a play of yin and yang in relation to some not-fully-controlled Other—consider partner dancing, or surfing, or certain kinds of sex. And of course, sometimes we go to an activity seeking the yin bit in particular. Cf, e.g., dancing with a good lead, sexual submissiveness, or letting a piece of music carry you.

"Dance in the country," by Renoir. (Image source here.)

And no wonder that our values are like this. Humans are extremely not-Gods. We evolved in a context in which we had, always, to be learning from and responding to a reality very much beyond-ourselves. It makes sense, then, that we learned, in various ways, to take joy in this sort of dance—at least, sometimes.

Still, especially in the context of abstract models of rationality that can seem to suggest a close link between being-an-agent-at-all and a voracious desire for power and control, I think it's important to notice how thoroughly joy in various forms of Otherness pervades our values.[26] And I think this joy is at least one core thing going on with green. Contra green-according-to-black, green isn't just resigned to yin, or "serene" in the face of the Other. Green loves the Other, and gets excited about God. Or at least, God in certain guises. God like a friend, or a newborn bird, or a strange and elegant mathematical pattern, or the cold silence of a mountain range. God qua object of wonder, curiosity, reverence, gentleness. True, not all God-guises prompt such reactions—cancer, the Nazis, etc are still, more centrally, to-be-defeated.[27] But contra Black (and even modulo White), neither is everything either a matter of mastery, or of too-weak-to-win.


The future of yin

"But this rough magic

I here abjure, and, when I have required

Some heavenly music, which even now I do,

To work mine end upon their senses that

This airy charm is for, I'll break my staff,

Bury it certain fathoms in the earth,

And deeper than did ever plummet sound

I'll drown my book."

~ Prospero

What's more, I think this aspect of our values actually comes under threat, in the age of AGI, from a direction quite different from the standard worry about AI risk. The AI risk worry is that we'll end up with too little yang of our own, at least relative to some Other. But there is another, different worry—namely, that we'll end up with too much yang, and so lose various of the joys of Otherness.

It's a classic sort of concern about Utopia. What does life become, if most aspects of it can be chosen and controlled? What is love if you can design your lover? Where will we seek wildness if the world has been tamed? Yudkowsky has various essays on this; and Bostrom has a full book shortly on the way. I'm not going to try to tackle the topic in any depth here—and I'm generally skeptical of people who try to argue, from this, to Utopia not being extremely better, overall, than our present condition. But just because Utopia is better overall doesn't mean that nothing is lost in becoming able to create it—and some of the joys of yin (and relatedly, of yang—the two go hand in hand) do seem to me to be at risk. Hopefully, we can find a way to preserve them, or even deepen them.[28] And hopefully, while still using the future's rough magic wisely, rather than breaking staff and drowning book.

Still, I wonder where a wise and good future might, with Prospero, abjure certain alluring sorceries—and not just for lack of knowledge of how they might shake the world. Where the future might, with Ogion, let the rain fall. At the least, I find interesting the way various transhumanist visions of the future—what Ricón (2021) calls "cool sci-fi-shit futurism"—often read as cold and off-putting precisely insofar as they seem to have lost touch with some kind of green. Vibes-wise—but also sometimes literally, in terms of color-scheme: everything is blue light and chrome and made-of-computers. But give the future green—give it plants, fresh air, mountain-sides, sunlight—and people begin to warm to Utopia. Cf. solarpunk, "cozy futurism," and the like. And no wonder: green, I think, is closely tied with many of our most resonant visions of happiness.

Example of solarpunk aesthetic (to be clear: I think the best futures are way more future-y than this)

Maybe, on reflection, we'll find that various more radical changes are sufficiently better that it's worth letting go of various more green-like impulses—and if so, we shouldn't let conservatism hold us back. Indeed, my own best guess is that a lot of the value lies, ultimately, in this direction, and that the wrong sort of green could lead us catastrophically astray. But I think these more green-like visions of the future actually provide a good starting point, in connecting with the possible upsides of Utopia. Whichever direction a good future ultimately grows, its roots will have been in our present loves and joys—and many of these are green.

Alexander speaks about the future as a garden. And if a future of nano-bot-onium is pure yang, pure top-down control, gardens seem an interesting alternative—a mix of yin and yang; of your work, and God's, intertwined and harmonized. You seed, and weed, and fertilize. But you also let-grow; you let the world respond. And you take joy in what blooms from the dirt.

Next up: Attunement

Ok, those were some comments on "green-according-to-white," which focuses on obeying the right moral rules in relation to Nature, and "green-according-to-black," which focuses on accepting stuff that you're too weak to change. In each case, I think, the relevant diagnosis doesn't quite capture the full green-like thing in the vicinity, and I've tried to do at least somewhat better.

But I haven't yet discussed "green-according-to-blue," which focuses on making sure we don't act out of inadequate knowledge. This is probably the most immediately resonant reconstruction of green, for me—and the one closest to the bit of green I care most about. But again, I think that blue-like "knowledge," at least in its most standard connotation, doesn't quite capture the core thing—something I'll call "attunement." In my next essay, I'll say more about what I mean.

Appendix: Taking guidance from God

This appendix discusses one other way of understanding the sort of "conservatism" and "respect" characteristic of green—namely, via the concept of "taking guidance from God." This is a bit of green that I'm especially hesitant about, and I don't think my discussion nails it down very well. But I thought I would include some reflections regardless, in case they end up useful/interesting on their own terms.

Earlier in the series, I suggested that "deep atheism" can be seen, fundamentally, as emerging from severing the connection between Is and Ought, the Real and the Good. Traditional theism can trust that somehow, the two are intimately linked. But for deep atheism, they become orthogonal—at least conceptually.[29] Maaayybe some particular Is is Ought; but only contingently so—and on priors, probably not.[30] Hence, indeed, deep atheism's sensitivity to the so-called "Naturalistic fallacy," which tries to move illicitly from Is to Ought, from Might in the sense of "strong enough to exist/persist/get-selected" to Right in the sense of "good enough to seek guidance from." And naturalistic fallacies are core to deep atheism's suspicion towards green. Green, the worry goes, seeks too much input from God.

What's more, I think we can see an aspiration to "not seek input from God" in various other more specific ethical motifs associated with deep atheist-y ideologies like effective altruism. Consider, for example, the distinction between doing and allowing, or between action and omission.[31] Consequentialism—the ethical approach most directly associated with Effective Altruism—is famously insensitive to distinctions like this, at least in theory. And why so? Well, one intuitive argument is that such distinctions require treating the "default path"—the world that results if you go fully yin, if you merely allow or omit, if you "let go and let God"—as importantly different from a path created by your own yang. And because God (understood as the beyond-your-yang) sets the "default," ascribing intrinsic importance to the "default" is already to treat God's choice as ethically interesting—which, on deep atheism, it isn't.[32]

Worse, though: distinctions like acts vs. omissions and doing vs. allowing generally function to actively defer to God's choice, by treating deviation from the "default" as subject to a notably higher burden of proof. For example, on such distinctions, it is generally thought much easier to justify letting someone die (for example, by not donating money; or in-order-to-save-five-more-people) than it is to justify killing them. But this sort of burden of proof effectively grants God a greater license-to-kill than it grants to the Self.[33] Whence such deference to God's hit list?

Or consider another case of not-letting-God-give-input: namely, the sense in which total utilitarianism treats possible people and actual people as ethically-on-a-par. Thus, in suitably clean cases, total utilitarianism will choose to create a new happy person, who will live for 50 years, rather than to extend an existing happy human's life by another 40 years. And in combination with total-utilitarianism's disregard for distinctions like acts vs. omissions, this pattern of valuation can quickly end up killing existing people in order to replace them with happier alternatives (this is part of what gives rise to the paperclipping problems I discussed in "Being nicer than Clippy"). Here, again, we see a kind of disregard-for-God's-input at work. An already-existing person is a kind of Is—a piece of the Real; a work of God.[34] But who cares about God's works? Why not bulldoze them and build something more optimal instead? Perhaps actual people have more power than possible people, due to already existing, which tends to be helpful from a power perspective. But a core ethical shtick, here, is about avoiding might-makes-right; about not taking moral cues from power alone. And absent might-makes-right, why does the fact that some actual-person happens to exist make their welfare more important than that of those other, less-privileged possibilia?

Many "boundaries," in ethics, raise questions of this form. A boundary, typically, involves some work-of-God, some Is resulting from something other than your own yang. Maybe it's a fence around a backyard; or a border around a country; or a skin-bag surrounding some cells—and typically, you didn't build the fence, or found the country, or create the creature in question. God did that; Power did that. But from an ethical as opposed to a practical perspective, why should Power have a say in the matter? Thus, indeed, the paperclipper's atheism. Sure, OK: God loves the humans enough to have made-them-out-of-atoms (at least, for now). But Clippy does not defer to God's love, and wants those atoms for "something else." And as I discussed earlier in the series: utilitarianism reasons the same.

Or as a final example of an opportunity to seek or not-seek God's input, consider various flavors of what G.A. Cohen calls "small-c conservatism." According to Cohen, small-c conservatism is, roughly, an ethical attitude that wants to conserve existing valuable things—institutions, practices, ways of being, pieces of art—to a degree that goes above and beyond just wanting valuable things to exist. Here Cohen gives the example of All Souls College at Oxford University, where Cohen was a professor. Given the opportunity to tear down All Souls and replace it with something better, Cohen thinks we have at least some (defeasible) reason to decline, stemming just from the fact that All Souls already exists (and is valuable).[35] In this respect, small-c conservatism is a kind of ethical status quo bias—being already-chosen-by-God gives something an ethical leg up.[36]

Real All Souls on the left, ChatGPT-generated new version on the right. Though in the actual thought experiment ChatGPT's would be actually-better.

Various forms of environmental conservation, a la the redwoods above, are reminiscent of small-c conservatism in this sense.[37] Consider, e.g., the Northern White Rhino. Only two left—both female, guarded closely by human caretakers, and unable to bear children themselves.[38] Why guard them? Sam Anderson writes about the day the last male, Sudan, died:

We expect extinction to unfold offstage, in the mists of prehistory, not right in front of our faces, on a specific calendar day. And yet here it was: March 19, 2018. The men scratched Sudan's rough skin, said goodbye, made promises, apologized for the sins of humanity. Finally, the veterinarians euthanized him. For a short time, he breathed heavily. And then he died.

The men cried. But there was also work to be done. Scientists extracted what little sperm Sudan had left, packed it in a cooler and rushed it off to a lab. Right there in his pen, a team removed Sudan's skin in big sheets. The caretakers boiled his bones in a vat. They were preparing a gift for the distant future: Someday, Sudan would be reassembled in a museum, like a dodo or a great auk or a Tyrannosaurus rex, and children would learn that once there had been a thing called a northern white rhinoceros.

Sudan's grave (Image source here)

Sudan's death went temporarily viral. And the remaining females are still their own attraction. People visit the enclosure. People cry for the species poached-to-extinction. Why the tears? Not, I think, from maybe-losing-a-vaccine. "At a certain point," writes Anderson, "we have to talk about love."

But what sort of love? Not the way the utilitarian loves the utilons. Not a love that mourns, equally, all the possible species that never got to exist—the fact that God created the Northern White Rhino in particular matters, here. No, the love at stake is more like: the way you love your dog, or your daughter, or your partner in particular. The way we love our languages and our traditions and our homes. A love that does more than compare-across-possibilia. A love that takes the actual, the already, as an input.

Of course, these examples of "taking God's guidance" are all different and complicated in their own ways. But to my mind, they point at some hazy axis along which one can try, harder and harder, to isolate the Ought from the influence of the Is. And this effort culminates in an attempt to stand, fully, outside of the world—the past, the status quo—so as to pass judgment on them all from some other, ethereal footing.

As ever, total utilitarianism—indeed, total-anything-ism—is an extreme example here. But we see the aesthetic of total utilitarianism's stance conjured by the oh-so-satisfying discipline of "population axiology" more generally—a discipline that attempts to create a function, a heart, that takes in all possible worlds (the actual world generally goes unlabeled), and spits out a consistent, transitive ranking of their goodness.[39] And Yudkowskians often think of their own hearts, and the hearts of the other player characters (e.g., the AIs-that-matter), on a similar model. Theirs isn't, necessarily, a ranking of impartial goodness; rather, it's a ranking of how-much-I-prefer-it, utility-according-to-me. But it applies to similar objects (e.g., possible "universe-histories"); it's supposed to have similar structural properties (e.g., transitivity, completeness, etc); and it is generated, most naturally, from a similar stance-beyond-the-world—a stance that treats you as a judge and a creator of worlds; and not, centrally, as a resident.[40] Indeed, from this stance, you can see all; you can compare, and choose, between anything.[41] All-knowing, all-powerful—it's a stance associated, most centrally, with God himself. Your heart, that is, is the "if I was God" part. No wonder, then, if it doesn't seek the real God's advice.[42]

But green-like respect, I think, often does seek God's advice. And more generally, I think, green's ethical motion feels less like ranking all possible worlds from some ethereal stance-beyond, and then getting inserted into the world to move it up-the-ranking; and more like: lifting its head, looking around, and trying to understand and respond to what it sees.[43] After all: how did you learn, actually, what sorts of worlds you wanted? Centrally: by looking around the place where you are.

That said, not all of the examples of "taking God's guidance" just listed are especially paradigmatic of green. For example, green doesn't, I think, tend to have especially worked-out takes about population ethics. And I, at least, am not saying we should take God's input, in all these cases; and still less, to a particular degree. For example, as I've written about previously: I'm not, actually, a big fan of attempts to construe the acts vs. omission distinction in matters-intrinsically (as opposed to matters-pragmatically) terms; I care a lot about possible people in addition to actual people; and I think an adequate ethic of "boundaries" has to move way, way beyond "God created this boundary, therefore it binds."[44]

Nor is God's "input," in any of these cases, especially clear cut. For one thing, God himself doesn't seem especially interested in preventing the extinction of the species he creates. And if you're looking for his input re: how to relate to boundaries, you could just as easily draw much bloodier lessons—the sort of lessons that predators and parasites teach. Indeed, does all of eukaryotic life descend from the "enslavement" of bacteria as mitochondria?[45] Or see e.g. this inspiring video (live version here) about "slave-making ants," who raid the colonies of another ant species, capture the baby pupae, and then raise them as laborers in a foreign nest (while also, of course, eating a few along the way). As ever: God is not, actually, a good example; and his Nature brims with original sin.

Queen "slave-maker" (image source here)

Indeed, in some sense, trying to take "guidance from God" seems questionably coherent in the context of your own status as a part of God yourself. That is, if God—as I am using/stretching the term—is just "the Real," then anything you actually do will also have been done-by-God, too, and so will have become His Will. Maybe God chose to create All Souls College; but apparently, if you choose to tear it down, God will have chosen to uncreate it as well. And if your justification for respecting some ancient redwood was that "it's such a survivor"—well, if you chop it up for lumber, apparently not. And similarly: why not say that you are resisting God, in protecting the Northern White Rhino? The conservation is sure taking a lot of yang...

And it's here, as ever, that naturalistic fallacies really start to bite. The problem isn't, really, that Nature's guidance is bad—that Nature tells you to enslave and predate and get-your-claws-bloody. Rather, the real problem is that Nature doesn't, actually, give any guidance at all. Too much stuff is Nature. Styrofoam and lumber-cutting and those oh-so-naughty sex acts—anything is Nature, if you make it real. And choices are, traditionally, between things-you-can-make-real. So Nature, in its most general conception, seems ill-suited to guiding any genuine choice.

So overall, to the extent green-like respect does tend to "take God's guidance," then at least if we construe the argument for doing so at a sufficiently abstract level, this seems to me like one of the diciest parts of green (though to be clear, I'm happy to debate the specific ethical issues, on their own merits, case-by-case). And I think it's liable, as well, to conflate the sort of respect worth directing at power per se (e.g., in the context of game theory, realpolitik, etc.), with the sort of respect worth directing at legitimate power: power fused with justice and fairness (even if not with "my-values-per-se"). I'm hoping to write more about this at some point (though probably not in this series).

That said, to the extent that deep atheism takes the general naturalistic fallacy—that is, the rejection of any move from "is" to "ought"—as some kind of trump-card objection to "taking guidance from God," and thus to green, I do want to give at least one other note in green's defense: namely, that insofar as they wish to have any ethics at all, many forms of deep atheism need to grapple with some version of the general naturalistic fallacy as well.

In particular: deep atheists are ultimately naturalists. That is, they think that Nature is, in some sense, the whole deal. And in the context of such a metaphysics, a straightforward application of the most general naturalistic fallacy seems to leave the "ought" with nowhere to, like, attach. Anything real is an "is"—so where does the "ought" come from? Moral realists love (and fear) this question—it's their own trump card, and their own existential anxiety. Indeed, along this dimension, at least, the moral realists are even more non-green than the Yudkowskians. For unlike the moral realists, who attempt (unsuccessfully) to untether their ethics from Nature entirely, the Yudkowskians, ultimately, need to find some ethical foothold within Nature; some bit of God that they do take guidance from. I've been calling this bit your "true self," or your "heart"—but from a metaphysical perspective, it's still God, still Nature, and so still equally subject to whatever demand-for-justification the conceptual gap between is and ought seems to create.[46] Indeed, especially insofar as straw-Yudkowskian-ism seems to assume, specifically, that its true heart is closely related to what it "resonates with" (whether emotionally or mentally), those worried about naturalistic fallacies should be feeling quite ready to ask, with Lewis: why that? Why trust "resonance," ethically? If God made your resonances, aren't you, for all your atheism, taking his guidance?[47]

Indeed, for all of the aesthetic trappings of high-modernist science that straw-Yudkowskianism draws on, its ethical vibe often ends up strangely Aristotelian and teleological. You may not be trying to act in line with Nature as a whole. But you are trying to act in line with your (idealized) Nature; to find and live the self that, in some sense, you are "supposed to" be; the true tree, hidden in the acorn. But it's tempting to wonder: what kind of naturalistic-fallacy bullshit is that? Come now: you don't have a Nature, or a Real Self, or a True Name. You are a blurry jumble of empirical patterns coughed into the world by a dead-eyed universe. No platonic form structures and judges you from beyond the world—or at least, none with any kind of intrinsic or privileged authority. And the haphazard teleology we inherit from evolution is just that. You who seek your true heart—what, really, are you seeking? And what are you expecting to find?

I've written, elsewhere, about my answer—and I'll say a bit more in my next essay, "On attunement," as well. Here, the thing I want to note is just that once you see that (non-nihilist) deep atheists have naturalistic-fallacy problems, too, you might become less inclined to immediately jump on green for running into these problems as well. Of course, green often runs into much more specific naturalistic-fallacy problems, too—related, not just to moving from an is to an ought in general, but to trying to get "ought" specifically from some conception of what Nature as a whole "wants." And here, I admit, I have less sympathy. But all of us, ultimately, are treating some parts of God as to-be-trusted. It's just that green, often, trusts more.

Sabien also discusses agreements with opposite colors, but this is more detail than I want here. ↩︎

I wrote about LeGuin's ethos very early on this blog, while it was still an unannounced experiment—see here and here. I'm drawing on, and extending, that discussion here. In particular the next paragraph takes some text directly from the first post. ↩︎

"'The Choice between Good and Bad,' said the Lord of Dark in a slow, careful voice, as though explaining something to a child, 'is not a matter of saying "Good!" It is about deciding which is which.'" ↩︎

(See also Lewis's discussion of Faust and the alchemists in the Abolition of Man.) ↩︎

See e.g. this piece by Scott Garrabrant, characterizing such concepts as "green." Thanks to Daniel Kokotajlo for flagging. ↩︎

He has also declared defeat on all technical AI safety research, at least at current levels of human intelligence—"Nate and Eliezer both believe that humanity should not be attempting technical alignment at its current level of cognitive ability..." But the reason in this case is more specific. ↩︎

From "List of Lethalities": "Corrigibility is anti-natural to consequentialist reasoning; 'you can't bring the coffee if you're dead' for almost every kind of coffee. We (MIRI) tried and failed to find a coherent formula for an agent that would let itself be shut down (without that agent actively trying to get shut down). Furthermore, many anti-corrigible lines of reasoning like this may only first appear at high levels of intelligence...The second course is to build corrigible AGI which doesn't want exactly what we want, and yet somehow fails to kill us and take over the galaxies despite that being a convergent incentive there...The second thing looks unworkable (less so than CEV, but still lethally unworkable) because corrigibility runs actively counter to instrumentally convergent behaviors within a core of general intelligence (the capability that generalizes far out of its original distribution). You're not trying to make it have an opinion on something the core was previously neutral on. You're trying to take a system implicitly trained on lots of arithmetic problems until its machinery started to reflect the common coherent core of arithmetic, and get it to say that as a special case 222 + 222 = 555..." ↩︎

Though here and elsewhere, I think Yudkowsky overrates how much evidence "MIRI tried and failed to solve X problem" provides about X problem's difficulty. ↩︎

Thanks to Arden Koehler for discussion, years ago. ↩︎

For example, his Harry Potter turns down the phoenix's invitation to destroy Azkaban, and declines to immediately give-all-the-muggles-magic, lest doing so destroy the world (though this latter move is a reference to the vulnerable world, and in practice, ends up continuing to concentrate power in Harry's hands). ↩︎

There's also a different variant of green-according-to-black, which urges us to notice the power of various products-of-Nature—for example, those resulting from evolutionary competition. Black is down with this—and down with competition more generally. ↩︎

Here I think of conversations I've had with utilitarian-ish folks, in which their attempts to fit environmentalism within their standard ways of thinking have seemed to me quite distorting of its vibe. "Is it kind of like: they think that ecosystems are moral patients?" "Is it like: they want to maximize Nature?" ↩︎

Albeit, one that it feels possible, also, to project onto many other life forms that we treat as much less sacred. ↩︎

Though: the moral-patienthood question sometimes gets a bit fuzzed, for example re: ecosystems of plants. ↩︎

Or maybe, the ecosystem itself? See e.g. Aldo Leopold's "land ethic": "A thing is right when it tends to preserve the integrity, stability, and beauty of the biotic community. It is wrong when it tends otherwise." ↩︎

Thanks to Nick Bostrom for discussion of this a while ago. ↩︎

See also Bostrom re: our interactions with superintelligent civilizations: "We should be modest, willing to listen and learn. We should not too headstrongly insist on having too much our way. Instead, we should be compliant, peace-loving, industrious, and humble..." Though I have various questions about his picture in that paper. ↩︎

Thanks to my sister, Caroline Carlsmith, for discussion. ↩︎