GPT-4 seems likely to come out soon and, so I've heard, it will be awesome. Now, we don't know anything about its architecture, its size, or how it was trained. If it were trained only on text (about 3.2T tokens) in a compute-optimal manner, it would be about 2.5x the size of Chinchilla, i.e. roughly the size of GPT-3. So to be larger than GPT-3, it would need to be multi-modal, which could give it some interesting capabilities.
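For anyone who wants to check the arithmetic, here is a rough sketch. It assumes compute-optimal training means roughly 20 tokens per parameter, which is the ratio implied by Chinchilla itself (70B parameters on 1.4T tokens); the 3.2T figure is the text-only estimate above, and the function name is just for illustration.

```python
# Back-of-the-envelope check of the "about the size of GPT-3" estimate.
# Assumption: compute-optimal training keeps the tokens-per-parameter ratio
# that Chinchilla used (70B parameters, 1.4T tokens, so about 20 tokens/param).
CHINCHILLA_PARAMS = 70e9
CHINCHILLA_TOKENS = 1.4e12
GPT3_PARAMS = 175e9


def optimal_params(tokens, tokens_per_param=CHINCHILLA_TOKENS / CHINCHILLA_PARAMS):
    """Parameter count that matches `tokens` at a fixed tokens-per-parameter ratio."""
    return tokens / tokens_per_param


text_tokens = 3.2e12  # text-only token estimate from the post
params = optimal_params(text_tokens)

print(f"{params / 1e9:.0f}B parameters")            # 160B parameters
print(f"{params / CHINCHILLA_PARAMS:.2f}x Chinchilla")  # 2.29x Chinchilla
print(f"{params / GPT3_PARAMS:.2f}x GPT-3")          # 0.91x GPT-3
```

Under that assumption the answer lands slightly below 2.5x Chinchilla, but still in the same ballpark as GPT-3.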

So it is time to ask that question again: what's the least impressive thing that GPT-4 won't be able to do? State your assumptions to be clear, e.g. "a text- and image-generating GPT-4 in the style of X with size Y can't do Z."

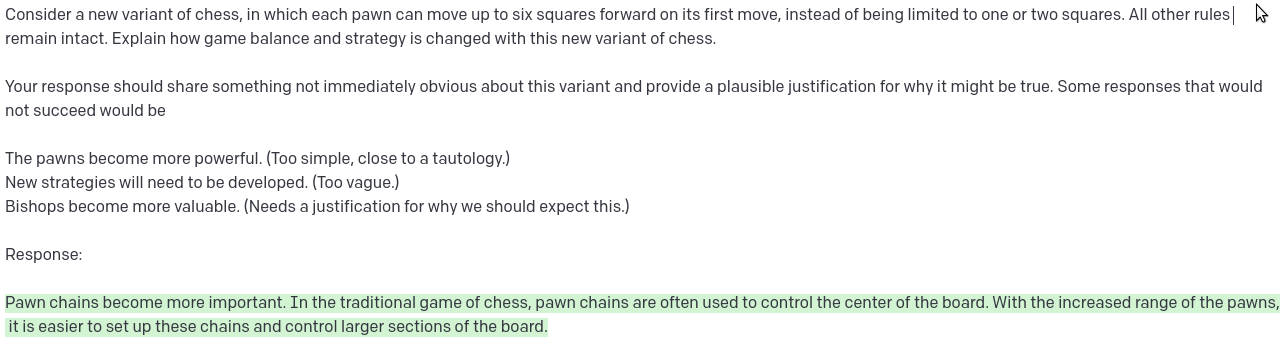

65% confidence: reverse short strings of ASCII English text. Here's GPT-3 (text-davinci-002, temperature 0.7; bold is the prompt, non-bold is the response):

Attempt 1:

Attempt 2:

Attempt 3:

I do expect that GPT-4 will do slightly better than GPT-3, but I expect it to still be worse at this than an elementary school student.
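If you want to score attempts like these yourself, the ground truth is a one-liner. This is a minimal sketch, assuming plain ASCII input as in the prediction; the helper names are made up for illustration, and ignoring leading and trailing whitespace is my own choice, not part of the original test.

```python
def reverse_string(s: str) -> str:
    """Reverse a string character by character (fine for plain ASCII text)."""
    return s[::-1]


def is_correct_reversal(prompt_text: str, model_output: str) -> bool:
    """True if the model output is exactly the reversed prompt text.

    Assumption: leading/trailing whitespace on either side is ignored.
    """
    return model_output.strip() == reverse_string(prompt_text.strip())


print(reverse_string("hello world"))           # dlrow olleh
print(is_correct_reversal("abc", " cba\n"))    # True
print(is_correct_reversal("abc", "cab"))       # False
```

Exact match is a strict bar: a single dropped or swapped character counts as a failure.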

ChatGPT-4 gets it right.

Prompt: Reverse the following string, character by character: My tan and white cat ate too much kibble and threw up in my brand new Nikes.

GPT-4: