It seems like GPT-4 will be coming out soon and, so I've heard, it will be awesome. We don't know anything about its architecture, its size, or how it was trained. If it were trained only on text (about 3.2T tokens) in a compute-optimal manner, it would be about 2.5x the size of Chinchilla, i.e. roughly the size of GPT-3. So to be larger than GPT-3, it would need to be multi-modal, which could present some interesting capabilities.
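As a rough sanity check on that size estimate, here is a back-of-the-envelope sketch. It assumes that compute-optimal parameter count scales linearly with training tokens, anchored on Chinchilla's published configuration (70B parameters, 1.4T tokens). The function name `optimal_params` and the linear rule are simplifying assumptions for illustration, not anything known about GPT-4.

```python
# Back-of-the-envelope estimate. Assumption: compute-optimal parameter
# count grows linearly with training tokens, anchored on Chinchilla.
CHINCHILLA_PARAMS = 70e9     # Chinchilla: 70B parameters
CHINCHILLA_TOKENS = 1.4e12   # Chinchilla: 1.4T training tokens
GPT3_PARAMS = 175e9          # GPT-3: 175B parameters


def optimal_params(tokens: float) -> float:
    """Hypothetical helper: scale Chinchilla's size by the token ratio."""
    return CHINCHILLA_PARAMS * tokens / CHINCHILLA_TOKENS


text_tokens = 3.2e12  # the "about 3.2T tokens" figure from the post
params = optimal_params(text_tokens)

print(f"Estimated parameters: {params / 1e9:.0f}B")
print(f"Relative to Chinchilla: {params / CHINCHILLA_PARAMS:.2f}x")
print(f"Relative to GPT-3: {params / GPT3_PARAMS:.2f}x")
```

Under these assumptions the estimate lands a little below GPT-3's size, which is in the same ballpark as the rough figure above.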

So it is time to ask that question again: what's the least impressive thing that GPT-4 won't be able to do? State your assumptions clearly, e.g. "a text- and image-generating GPT-4 in the style of X with size Y can't do Z."

I'd say there is a >60% probability that GPT-4 can't reliably count the number of occurrences of a specific word in a sentence, except possibly when the count is a single-digit number.

I base this prediction on the observation that all the big models so far seem to be bad at counting. I haven't tested this with GPT-3 yet, though, only with the biggest Aleph Alpha model, which is completely unable to do it.

This task is genuinely not very impressive (I think my daughter could reliably count ten things when she was two years old), but it might be a harbinger of other, more impressive System 2-type thinking abilities.

Question: How many times does the word "all" occur in the following sentence: All the lice and all the mice were all very nice to all the mice and all the lice.

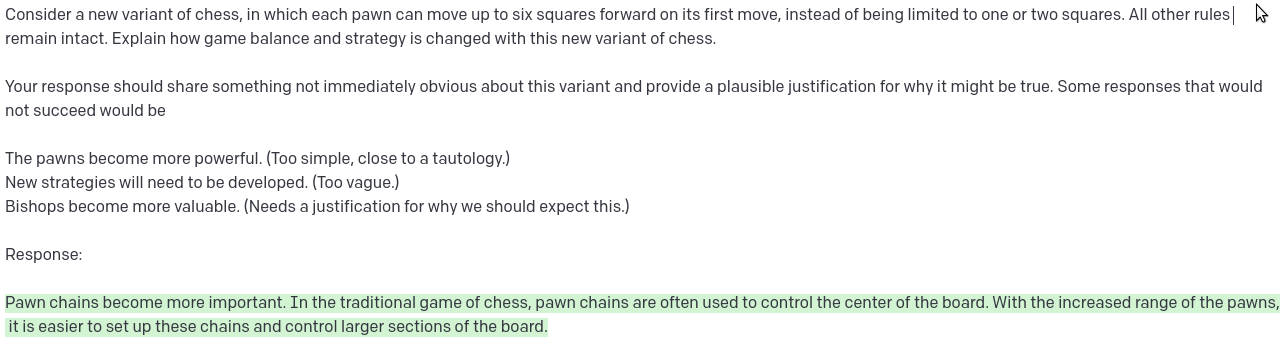
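For reference, the ground-truth answer to a question like this is easy to compute and compare against a model's reply. The sketch below is a minimal example that treats "occurrence" as a case-insensitive whole-word match; the function name `count_word` is made up for illustration.

```python
import re


def count_word(sentence: str, word: str) -> int:
    """Count whole-word, case-insensitive occurrences of `word`."""
    # \b anchors the match at word boundaries, so "all" does not
    # match inside longer words such as "small" or "allow".
    pattern = r"\b" + re.escape(word) + r"\b"
    return len(re.findall(pattern, sentence, flags=re.IGNORECASE))


sentence = (
    "All the lice and all the mice were all very nice "
    "to all the mice and all the lice."
)
print(count_word(sentence, "all"))  # prints 5
```

A model's answer counts as correct here only if it reports 5.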

ChatGPT-4 can solve this without the "counting step-by-step" prompt:

Prompt: How many times does the word "all" occur in the following sentence: "All the lice and all the mice were all very nice to all the mice and all the lice"?

Response: