I'm about to process the last few days' worth of posts and comments. I'll be linking to this comment as a "here are my current guesses for how to handle various moderation calls".

Succinctly explain the main point.

When we're processing many new user-posts a day, we don't have much time to evaluate each post.

So, one principle I think is fairly likely to become a "new user guideline" is "Make it pretty clear off the bat what the point of the post is." In ~3 sentences, try to make it clear who your target audience is, and what core point you're trying to communicate to them. If you're able to quickly gesture at the biggest-bit-of-evidence or argument that motivates your point, even better. (Though I understand sometimes this is hard).

This isn't necessarily how you have to write all the time on LessWrong! But your first post is something like an admissions-essay and should be optimized more for being legibly coherent and useful. (And honestly I think most LW posts should lean more in this direction)

In some sense this is similar to submitting something to a journal or magazine. Editors get tons of submissions. For your first couple posts, don't aim to write something that takes a lot of work for us to evaluate.

Corollary: Posts that are more likely to end up in the reject pile include...

- Fiction, especially if it looks like it's trying to make some kind of p

I've always liked the pithy advice "you have to know the rules to break the rules", which I do consider valid in many domains.

Before I let users break generally good rules like "explain what your point is up front", I want to know that they could keep to the rule before they don't. The posts of many first-time users give me the feeling that their author isn't being rambly on purpose; they don't know how to write otherwise (or aren't willing to).

Some top-level post topics that get much higher scrutiny:

1. Takes on AI

Simply because of the volume of it, the standards are higher. I recommend reading Scott Alexander's Superintelligence FAQ as a good primer to make sure you understand the basics. Make sure you're familiar with the Orthogonality Thesis and Instrumental Convergence. I recommend both Eliezer's AGI Ruin: A List of Lethalities and Paul Christiano's response post so you understand what sort of difficulties the field is actually facing.

I suggest going to the most recent AI Open Questions thread, or looking into the FAQ at https://ui.stampy.ai/

2. Quantum Suicide/Immortality, Roko's Basilisk and Acausal Extortion.

In theory, these are topics that have room for novel questions and contributions. In practice, they seem to attract people who seem... looking for something to be anxious about? I don't have great advice for these people, but my impression is that they're almost always trapped in a loop where they're trying to think about it in enough detail that they don't have to be anxious anymore, but that doesn't work. They just keep finding new subthreads to be anxious about.

For Acausal Trade, I do ...

A key question when I look at a new user on LessWrong trying to help with AI is, well, are they actually likely to be able to contribute to the field of AI safety?

If they are aiming to make direct novel intellectual contributions, this is in fact fairly hard. People have argued back and forth about how much raw IQ, conscientiousness or other signs of promise a person needs to have. There have been some posts arguing that people are overly pessimistic and gatekeeping-y about AI safety.

But, I think it's just pretty importantly true that it takes a fairly significant combination of intelligence and dedication to contribute. Not everyone is cut out for doing original research. Many people pre-emptively focus on community building and governance because that feels easier and more tractable to them than original research. But those areas still require you to have a pretty good understanding of the field you're trying to govern or build a community for.

If someone writes a post on AI that seems like a bad take, which isn't really informed by the real challenges, should I be encouraging that person to make improvements and try again? Or just say "idk man, not everyone is cut out for this?"

H...

Draft in progress. Common failure modes for AI posts that I want to reference later:

Trying to help with AI Alignment

"Let's make the AI not do anything."

This is essentially a very expensive rock. Other people will be building AIs that do do stuff. How does your AI help the situation over not building anything at all?

"Let's make the AI do [some specific thing that seems maybe helpful when parsed as an english sentence], without actually describing how to make sure they do exactly or even approximately that english sentence"

The problem is a) we don't know how to point an AI at doing anything at all, and b) your simple english sentence includes a ton of hidden assumptions.

(Note: I think Mark Xu sort of disagreed with Oli on something related to this recently, so I don't know that I consider this class of solution completely settled. I think Mark Xu thinks that we don't currently know how to get an AI to do moderately complicated actions with our current tech, but that our current paradigms for how to train AIs are likely to yield AIs that can do moderately complicated actions.)

I think the typical new user who says things like this still isn't advancing the current paradigm though,...

Here's a quickly written draft for an FAQ we might send users whose content gets blocked from appearing on the site.

The “My post/comment was rejected” FAQ

Why was my submission rejected?

Common reasons that the LW Mod team will reject your post or comment:

- It fails to acknowledge or build upon standard responses and objections that are well-known on LessWrong.

- The LessWrong website is 14 years old and the community behind it older still. Our core readings [link] are over half a million words. So understandably, there’s a lot you might have missed!

- Unfortunately, as the amount of interest in LessWrong grows, we can’t afford to let cutting-edge content get submerged under content from people who aren’t yet caught up to the rest of the site.

- It is poorly reasoned. It contains some mix of bad arguments and obviously bad positions that it does not feel worth the LessWrong mod team or LessWrong community’s time or effort responding to.

- It is difficult to read. Not all posts and comments are equally well-written and make their points as clearly. While established users might get more charity, for new users, we require that we (and others) can easily follow what you’re say

It fails to acknowledge or build upon standard responses and objections that are well-known on LessWrong.

- The LessWrong website is 14 years old and the community behind it older still. Our core readings [link] are over half a million words. So understandably, there’s a lot you might have missed!

- Unfortunately, as the amount of interest in LessWrong grows, we can’t afford to let cutting-edge content get submerged under content from people who aren’t yet caught up to the rest of the site.

I do want to emphasize a subtle distinction between "you have brought up arguments that have already been brought up" and "you are challenging basic assumptions of the ideas here". I think challenging basic assumptions is good and well (like here), while bringing up "but general intelligences can't exist because of no-free-lunch theorems" or "how could a computer ever do any harm, we can just unplug it" is quite understandably met with "we've spent 100s or 1000s of hours discussing and rebutting that specific argument, please go read about it <here> and come back when you're confident you're not making the same arguments as the last dozen new users".

I would like to make sure new users are s...

Here's my best guess for overall "moderation frame", new this week, to handle the volume of users. (Note: I've discussed this with other LW team members, and I think there's rough buy-in for trying this out, but it's still pretty early in our discussion process, other team members might end up arguing for different solutions)

I think to scale the LessWrong userbase, it'd be really helpful to shift the default assumptions of LessWrong to "users by default have a rate limit of 1 comment per day" and "1 post per week."

If people get somewhat upvoted, they...
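To make the proposed defaults concrete, here is a minimal sketch of how a "1 comment per day, 1 post per week" check might look. This is only an illustration: the names `DEFAULT_LIMITS` and `can_submit` are hypothetical and are not taken from the LessWrong codebase, and the sketch does not model how limits would loosen for upvoted users.

```python
from datetime import datetime, timedelta

# Hypothetical default limits, taken from the proposal above:
# at most 1 comment per day and at most 1 post per week.
DEFAULT_LIMITS = {
    "comment": (1, timedelta(days=1)),
    "post": (1, timedelta(weeks=1)),
}


def can_submit(kind, previous_times, now):
    """Return True if a user may submit another item of this kind.

    kind: "comment" or "post".
    previous_times: datetimes of the user's earlier submissions of that kind.
    now: the current time.
    """
    max_count, window = DEFAULT_LIMITS[kind]
    # Count only submissions that fall inside the rolling window.
    recent = [t for t in previous_times if now - t < window]
    return len(recent) < max_count
```

A rolling window is assumed here rather than a calendar-day reset, which is one of several reasonable readings of "per day".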

After chatting for a bit about what to do with low-quality new posts and comments, while being transparent and inspectably fair, the LW Team is currently somewhat optimistic about adding a section to lesswrong.com/moderation which lists all comments/posts that we've rejected for quality.

We haven't built it yet, so for the immediate future we'll just be strong downvoting content that doesn't meet our quality bar. And for the immediate future, if existing users in good standing want to defend particular pieces as worth inclusion they can do so here.

This ...

What are the actual rationality concepts LWers are basically required to understand to participate in most discussions?

I am partial to having this bar be set pretty high, like 80-100% of the sequences level. I remember years ago when I finished the sequences, I spent several months practicing everyday rationality in isolation, and only then deigned to visit LessWrong and talk to other rationalists, and I was pretty disappointed with the average quality level, and felt like I dodged a bullet by spending those months thinking alone rather than with the wider community.

It also seems like average quality has decreased over the years.

Predictable confusion some will have: I’m talking about average quality here. Not 90th percentile quality posters.

The main reason I don’t reply to most posts is because I’m not guaranteed an interesting conversation, and it is not uncommon that I’d just be explaining a concept which seems obvious if you’ve read the sequences, which aren’t super fun conversations to have compared to alternative uses of my time.

For example, the other day I got into a discussion on LessWrong about whether I should worry about claims which are provably useless, and was accused of ignoring inconvenient truths for not doing so.

If the bar to entry was a lot higher, I think I’d comment more (and I think others would too, like TurnTrout).

Hey, first just wanted to say thanks and love and respect. The moderation team did such an amazing job bringing LW back from nearly defunct into the thriving place it is now. I'm not so active in posting now, but check the site logged out probably 3-5 times a week and my life is much better for it.

After that, a few ideas:

(1) While I don't 100% agree with every point he made, I think Duncan Sabien did an incredible job with "Basics of Rationalist Discourse" - https://www.lesswrong.com/posts/XPv4sYrKnPzeJASuk/basics-of-rationalist-discourse-1 - perhaps a boiled-down canonical version of that could be created. Obviously the pressure to get something like that perfect would be high, so maybe something like "Our rough thoughts on how to be a good contributor here, which might get updated from time to time". Or just link Duncan's piece as "non-canonical for rules but a great starting place." I'd hazard a guess that 90% of regular users here agree with at least 70% of it? If everyone followed all of Sabien's guidelines, there'd be a rather high quality standard.

(2) I wonder if there's some reasonably precise questions you could ask new users to check for understanding and could be there...

I did have the idea of there being regions with varying standards and barriers, in particular places where new users cannot comment easily and places where they can.

The idea of AF having both a passing-the-current-AF-bar section and a passing-the-current-LW-bar section is intriguing to me. With some thought about labeling etc., it could be a big win for non-alignment people (since LW can suppress alignment content more aggressively by default), and a big win for people trying to get into alignment (since they can host their stuff on a more professional-looking dedicated alignment site), and no harm done to the current AF people (since the LW-bar section would be clearly labeled and lower on the frontpage).

I didn’t think it through very carefully though.

I do agree Duncan's post is pretty good, and while I don't think it's perfect I don't really have an alternative I think is better for new users getting a handle on the culture here.

I'd be willing to put serious effort into editing/updating/redrafting the two sections that got the most constructive pushback, if that would help tip things over the edge.

I vaguely remember being not on board with that one and downvoting it. Basics of Rationalist Discourse doesn't seem to get to the core of what rationality is, and seems to preclude other approaches that might be valuable. Too strict and misses the point. I would hate for this to become the standard.

I don't really have an alternative I think is better for new users getting a handle on the culture here

Culture is not systematically rationality (dath ilan wasn't built in a day). Not having an alternative that's better can coexist with this particular thing not being any good for same purpose. And a thing that's any good could well be currently infeasible to make, for anyone.

Zack's post describes the fundamental difficulty with this project pretty well. Adherence to most rules of discourse is not systematically an improvement for processes of finding truth, and there is a risk of costly cargo cultist activities, even if they are actually good for something else. The cost could be negative selection by culture, losing its stated purpose.

How does the fact of such bannings demonstrate the post's unsuitability for purpose?

I think it doesn't. For instance, I think the following scenario is clearly possible:

- There are users A and B who detest one another for entirely non-LW-related reasons. (Maybe they had a messy and distressing divorce or something.)

- A and B are both valuable contributors to LW, as long as they stay away from one another.

- A and B ban one another from commenting on their posts, because they detest one another. (Or, more positively, because they recognize that if they start interacting then sooner or later they will start behaving towards one another in unhelpful ways.)

- A writes an excellent post about LW culture, and bans B from it just like A bans B from all their posts (and vice versa).

If you think that Duncan's post specifically shouldn't be an LW-culture-onboarding tool because he banned you specifically from commenting on it, then I think you need reasons tied to the specifics of the post, or the specifics of your banning, or both.

(To be clear: I am not claiming that you don't have any such reasons, nor that Duncan is right to ban you from commenting on his frontpaged posts, nor that Duncan's "basics" post is good, nor am I claiming the opposite of any of those. I'm just saying that the thing you're saying doesn't follow from the thing you're saying it follows from.)

I did not claim that my scenario describes the actual situation; in fact, it should be very obvious from my last two paragraphs that I thought (and think) it likely not to.

What I claimed (and still claim) is that the mere fact that some people are banned from commenting on Duncan's frontpage posts is not on its own anything like a demonstration that any particular post he may have written isn't worthy of being used for LW culture onboarding.

Evidently you think that some more specific features of the situation do have that consequence. But you haven't said what those more specific features are, nor how they have that consequence.

Actually, elsewhere in the thread you've said something that at least gestures in that direction:

the point is that if the conversational norms cannot be discussed openly [...] there's no reason to believe that they're good norms. How were they vetted? [...] the more people are banned from commenting on the norms as a consequence of their criticism of said norms, the less we should believe that the norms are any good!

I don't think this argument works. (There may well be a better version of it, along the lines of "There seem to be an awful lot of people Duncan...

I think the important bit from Said's perspective is that these people were blocked for reasons not-related-to whether they had something useful to say about those rules, so we may be missing important things.

I'll reiterate Habryka's take on "I do think if we were to canonize some version of the rules, that should be in a place that everyone can comment on." And I'd go on to say: on that post we also should relax some of the more opinionated rules about how to comment. i.e. we should avoid boxing ourselves in so that it's hard to criticize the rules in practice.

I think a separate thing Said cares about is that there is some period for arguing about the rules before they "get canonized." I do think there should be at least some period for this, but not worried about it being particularly long because

a) the mod team has had tons of time to think about what norms are good and people have had tons of time to argue, and I think this is mostly going to be cementing things that were already de-facto site norms,

b) people can still argue about the rules after the fact (and I think comments on The Rules post, and top level posts about site norms, should have at least some more leeway about how to argue. I think there'll probably still be some norms like, 'don't do ad hominem attacks' but don't expect that to actually cause an issue)

That said, I certainly don't promise that everyone will be happy with the rules, the process here will not be democratic, it'll be the judgment of the LW mod team.

All of this is irrelevant, because the point is that if the conversational norms cannot be discussed openly (by people who otherwise aren’t banned from the site for being spammers or something similarly egregious), then there’s no reason to believe that they’re good norms. How were they vetted? How were they arrived at? Why should we trust that they’re not, say, chock-full of catastrophic problems? (Indeed, the more people[1] are banned from commenting on the norms as a consequence of their criticism of said norms, the less we should believe that the norms are any good!)

Of all the posts on the site, the post proposing new site norms is the one that should be subjected to the greatest scrutiny—and yet it’s also the post[2] from which more critics have been banned than from almost any other. This is an extremely bad sign.

Weighted by karma (as a proxy for “not just some random person off the street, but someone whom the site and its participants judge to have worthwhile things to say”). (The ratio of “total karma of people banned from commenting on ‘Basics of Rationalist Discourse’” to “karma of author of ‘Basics of Rationalist Discourse’” is approximately 3:1. If karma represents

For what it's worth, the high level point here seems right to me (I am not trying to chime into the rest of the discussion about whether the ban system is a good idea in the first place).

If we canonize something like Duncan's post I agree that we should do something like copy over a bunch of it into a new post, give prominent attribution to Duncan at the very top of the post, explain how it applies to our actual moderation policy, and then we should maintain our own ban list.

I think Duncan's post is great, but I think when we canonize something like this it doesn't make sense for Duncan's ban list to carry over to the more canonized version.

This is certainly well and good, but it seems to me that the important thing is to do something like this before canonizing anything. Otherwise, it’s a case of “feel free to discuss this, but nothing will come of it, because the decision’s already been made”.

The whole point of community discussion of something like this is to serve as input into any decisions made. If you decide first, it’s too late to discuss. This is exactly what makes the author-determined ban lists so extraordinarily damaging in a case like this, where the post in question is on a “meta” topic (setting aside for the moment whether they’re good or bad in general).

(I think this is a thread that, if I had a "slow mode" button to make users take longer to reply, I'd probably have clicked it right about now. I don't have such a button, but Said and @Duncan_Sabien can you guys a) hold off for a couple hours on digging into this and b) generally take ~an hour in between replies here if you were gonna keep going)

New qualified users need to remain comfortable (trivial inconveniences like interacting with a moderator at all are a very serious issue), and for new users there is not enough data to do safe negative selection on anything subtle. So talking of "principles in the Sequences" is very suspicious, I don't see an operationalization that works for new users and doesn't bring either negative selection or trivial inconvenience woes.

Stop displaying user's Karma total, so that there is no numbers-go-up reward for posting lots of mediocre stuff, instead count the number of comments/posts in some upper quantile by Karma (which should cash out as something like 15+ Karma for comments). Use that number where currently Karma is used, like vote weights. (Also, display the number of comments below some negative threshold like -3 in the last few months.)
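As a rough illustration of the counting scheme suggested above, here is a minimal sketch. It assumes a fixed cutoff of 15 karma as a stand-in for "some upper quantile", and 90 days as a stand-in for "the last few months"; the function name and the data layout are hypothetical.

```python
from datetime import datetime, timedelta

HIGH_KARMA_THRESHOLD = 15           # "something like 15+ Karma for comments"
LOW_KARMA_THRESHOLD = -3            # "some negative threshold like -3"
RECENT_WINDOW = timedelta(days=90)  # assumption for "the last few months"


def quality_counts(comments, now):
    """Summarize a user's comments without using a running karma total.

    comments: list of (karma, posted_at) tuples.
    Returns (high_count, low_recent_count), where high_count is the number
    of comments at or above the high threshold, and low_recent_count is the
    number of recent comments strictly below the negative threshold.
    """
    high_count = sum(1 for karma, _ in comments if karma >= HIGH_KARMA_THRESHOLD)
    low_recent_count = sum(
        1
        for karma, posted_at in comments
        if karma < LOW_KARMA_THRESHOLD and now - posted_at <= RECENT_WINDOW
    )
    return high_count, low_recent_count
```

Under this sketch, `high_count` would be the number used wherever total karma is used today (for example vote weight), so posting many mediocre comments would not move it.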

One of the things I really like about LW is the "atmosphere", the way people discuss things. So very well done at curating that so far. I personally would be nervous about over-pushing "The Sequences". I didn't read much of them until a little while into my LW time, but I think I picked up the vibes fine without them.

I think the commenting guidelines are an excellent feature which have probably done a lot of good work in making LW the nice place it is (all that "explain, not persuade" stuff). I wonder how much difference it would make if the first time a new user posts a comment they could be asked to tick a box saying "I read the commenting guidelines".

Would it make sense to have a "Newbie Garden" section of the site? The idea would be to give new users a place to feel like they're contributing to the community, along with the understanding that the ideas shared there are not necessarily endorsed by the LessWrong community as a whole. A few thoughts on how it could work:

- New users may be directed toward the Newbie Garden (needs a better name) if they try to make a post or comment, especially if a moderator deems their intended contribution to be low-quality. This could also happen by default for all users with karma below a certain threshold.

- New users are able to create posts, ask questions, and write comments with minimal moderation. Posts here won't show up on the main site front page, but navigation to this area should be made easy on the sidebar.

- Voting should be as restricted here as on the rest of the site to ensure that higher-quality posts and comments continue trickling to the top.

- Teaching the art of rationality to new users should be encouraged. Moderated posts that point out trends and examples of cognitive biases and failures of rationality exhibited in recent newbie contributions, and that advise on how to correct for

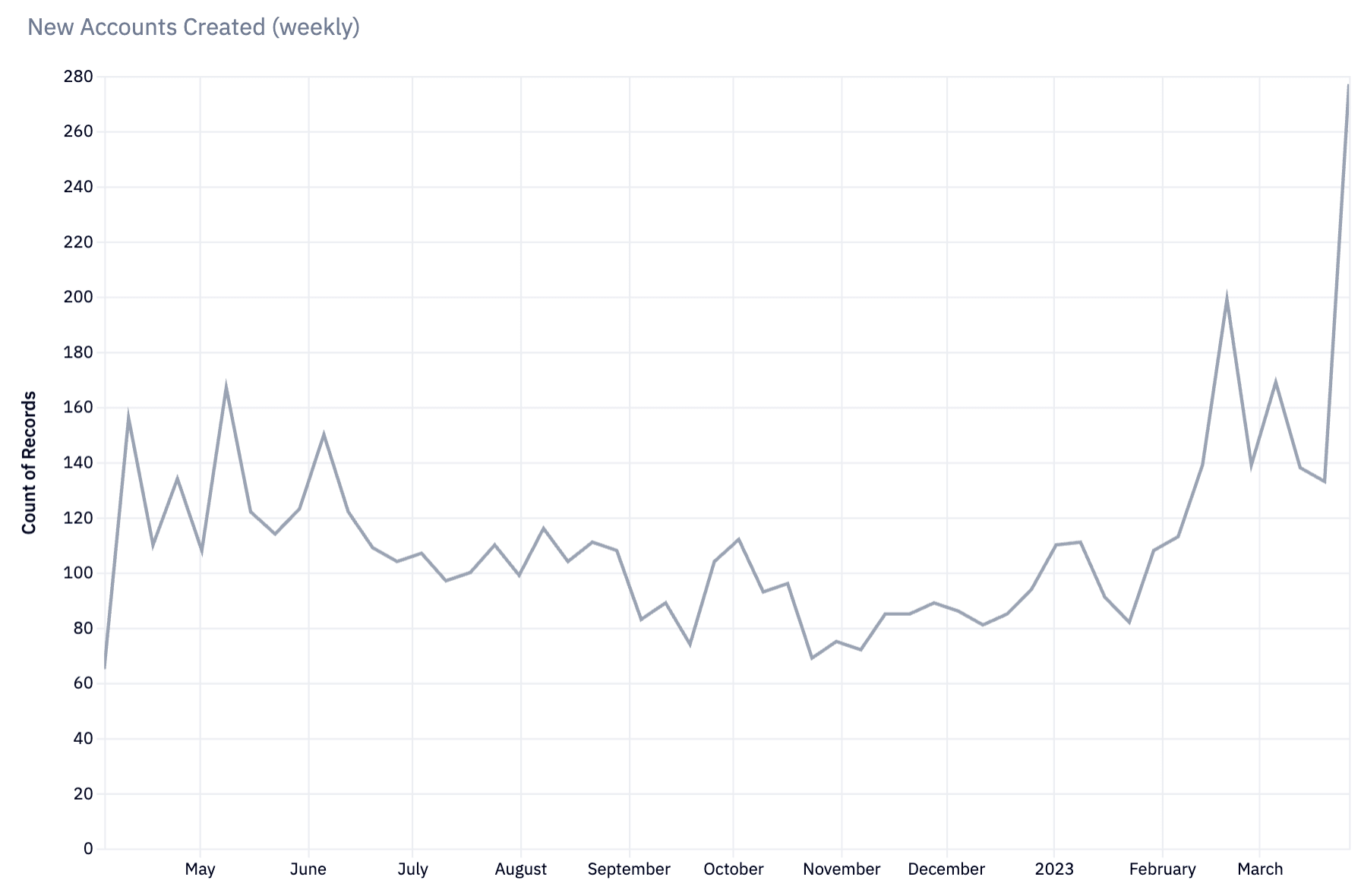

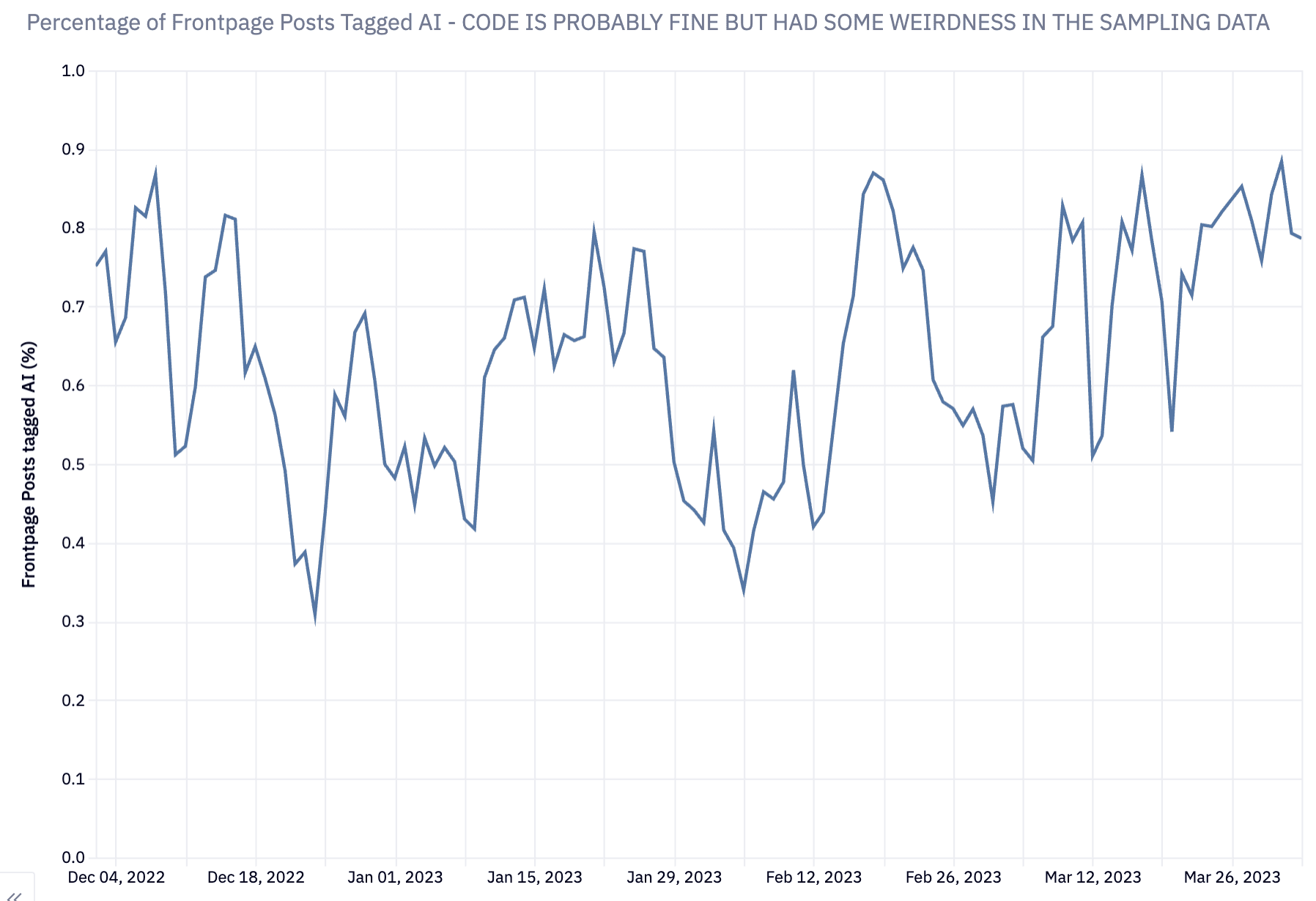

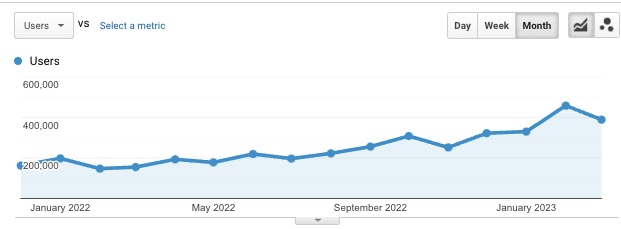

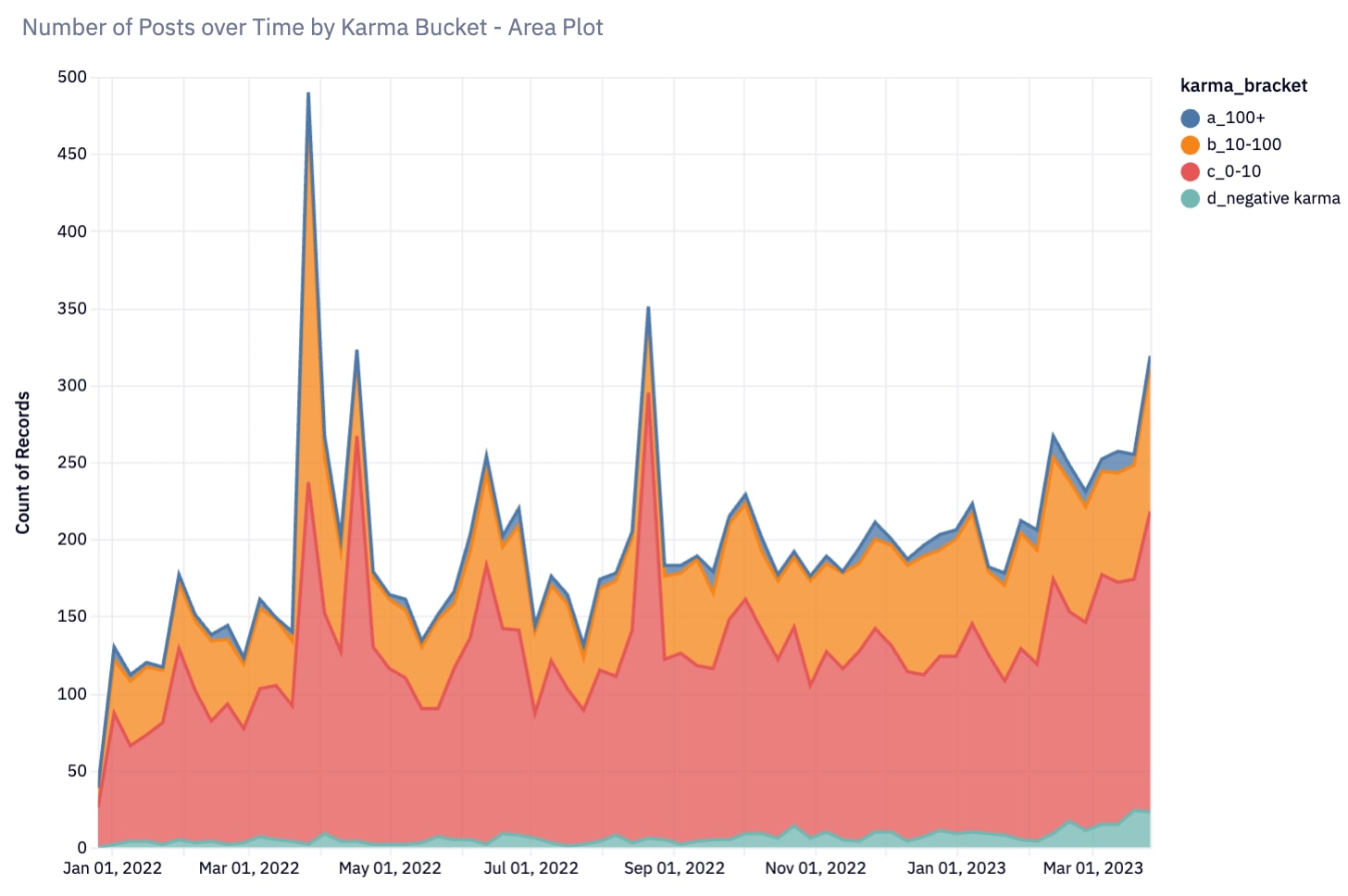

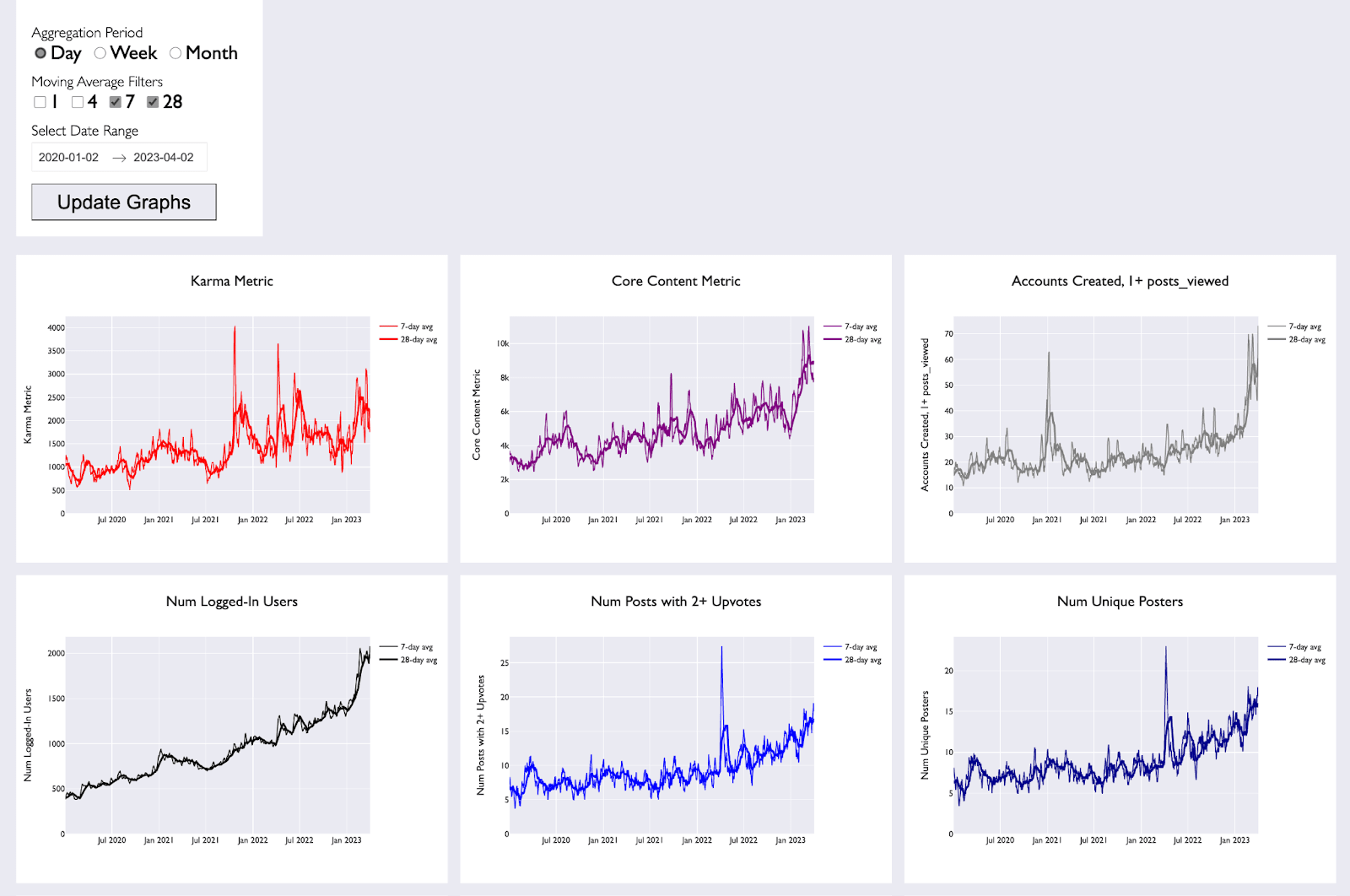

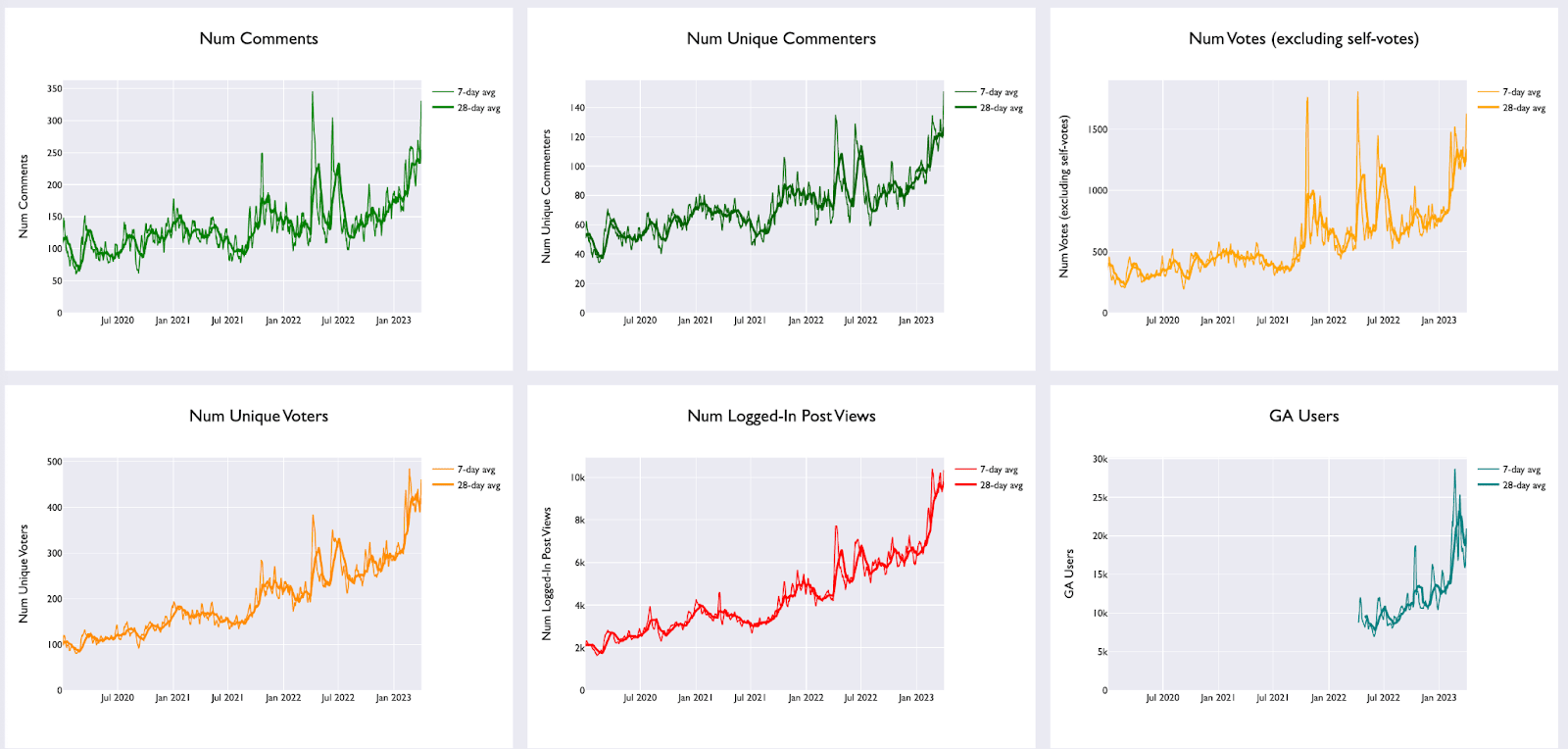

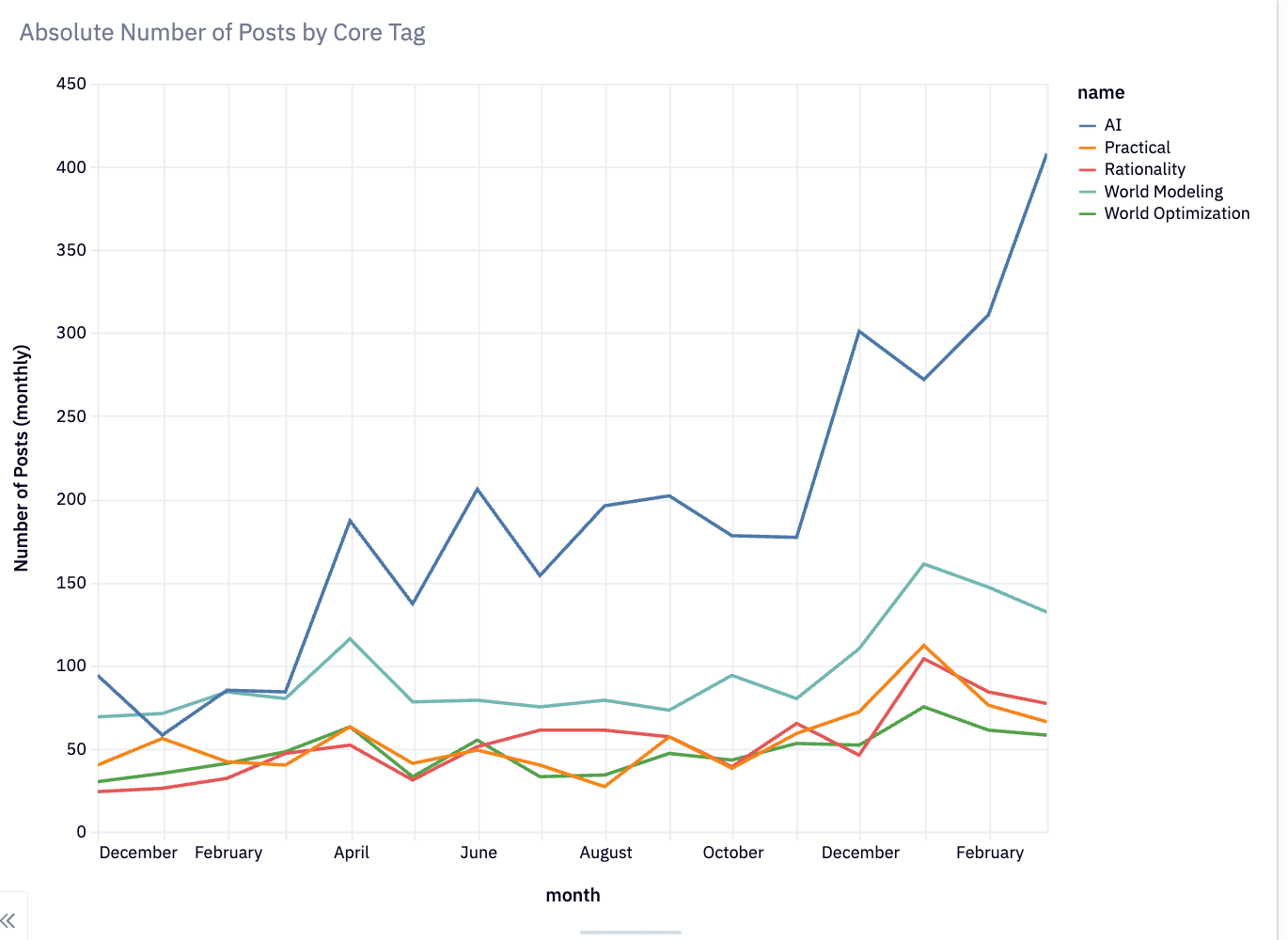

A few observations triggered the LessWrong team focusing on moderation now. The initial trigger was our observations of new users signing up plus how the distribution of quality of new submissions had worsened (I found myself downvoting many more posts), but some analytics helps drive home the picture:

(Note that not every week does LessWrong get linked in the Times...but then again....maybe it roughly does from this point onwards.)

LessWrong data from Google Analytics: traffic has doubled Year-on-Year

Comments from new users won't display by default until they've been approved by a moderator.

I'm pretty sad about this from a new-user experience, but I do think it would have made my LW experience much better these past two weeks.

it's just generally the case that if you participate on LessWrong, you are expected to have absorbed the set of principles in The Sequences (AKA Rationality A-Z).

Some slight subtlety: you can get these principles in other ways, for example great scientists or builders or people who've read Feynman can get these. But I think the sequences give them really well and also help a lot with setting the site culture.

How do we deal with low quality criticism? There's something sketchy about rejecting criticism. There are obvious hazards of groupthink. But a lot of criticism isn't well thought out, or is rehashing ideas we've spent a ton of time discussing and doesn't feel very productive.

My current guess is something in the genre of "schelling place to discuss the standard arguments" to point folks to. I tried to start one here responding to basic AI x-risk questions people had.

you can get these principles in other ways

I got them via cultural immersion. I just lurked here for several months while my brain adapted to how the people here think. Lurk moar!

I've been looking at creating a GPT-powered program which can automatically generate a test of whether one has absorbed the Sequences. It doesn't currently work that well and I don't know whether it's useful, but I thought I should mention it. If I get something that I think is worthwhile, then I'll ping you about it.

Though my expectation is that one cannot really meaningfully measure the degree to which a person has absorbed the Sequences.

I too expect that testing whether someone can talk as if they've absorbed the sequences would measure password-guessing more accurately than comprehension.

The idea gets me wondering whether it's possible to design a game that's easy to learn and win using the skills taught by the sequences, but difficult or impossible without them. Since the sequences teach a skill, it seems like we should be able to procedurally generate novel challenges that the skill makes it easy to complete.

As someone who's gone through the sequences yet isn't sure whether they "really" "fully" understand them, I'd be interested in taking and retaking such a test from time to time (if it was accurate) to quantify any changes to my comprehension.

This isn't directly related to the moderation issue, but there are a couple of features I would find useful, given the recent increase in post and comment volume:

- A way to hide the posts I've read (or marked as read) from my own personal view of the front page. (Hacker News has this feature)

- Keeping comment threads I've collapsed, collapsed across page reloads.

I support a stricter moderation policy, but I think these kinds of features would go a long way in making my own reading experience as pleasant as it's always been.

Thank you for the transparency!

Comments from new users won't display by default until they've been approved by a moderator.

It sounds like you're getting ready to add a pretty significant new workload to the tasks already incumbent upon the mod team. Approving all comments from new users seems like a high volume of work compared to my impression of your current duties, and it seems like the moderation skill threshold for new user comment approval might potentially be lower than it is for moderators' other duties.

You may have already considered this possibility and ruled it out, but I wonder if it might make sense to let existing users above a given age and karma threshold help with the new user comment queue. If LW is able to track who approved a given comment, it might be relatively easy to take away the newbie-queue-moderation permissions from anybody who let too many obviously bad ones through.

I would be interested in helping out with a newbie comment queue to keep it moving quickly so that newbies can have a positive early experience on lesswrong, whereas I would not want to volunteer for the "real" mod team because I don't have the requisite time and skills for reliably s...

I'm not entirely sure what I want the longterm rule to be, but I do think it's bad for the comment section of Killing Socrates to be basically discussing @Said Achmiz specifically where Said can't comment. It felt a bit overkill to make an entire separate overflow post for a place where Said could argue back, but it seemed like this post might be a good venue for it.

I will probably weigh in here with my own thoughts, although not sure if I'll get to it today.

One thing I would love to see that is missing on a lot of posts is a summary upfront that makes it clear to the reader the context and the main argument or just content. (Zvi's posts are an excellent example of this.) At least from the newbies. Good writers, like Eliezer and Scott Alexander can produce quality posts without a summary. Most people posting here are not in that category. It is not wrong to post a stream of consciousness or an incomplete draft, but at least spend 5 minutes writing up the gist in a paragraph upfront. If you can't be bothered, or do not have the skill of summarizing,

By the way, GPT will happily do it for you if you paste your text into the prompt, as a whole or in several parts. GPT-4/Bing can probably also evaluate the quality of the post and give feedback on how well it fits into the LW framework and what might be missing or can be improved. Maybe this part can even be automated.

Scott Garrabrant once proposed being able to add abstracts to posts that would appear if you clicked on posts on the frontpage. Then you could read the summary, and only read the rest of the post if you disagreed with it.

By "not visible" do you mean "users won't see them at all" or "collapsed by default" like downvoted comments?

I'm noticing two types of comments I would consider problematic increasing lately-- poorly thought out or reasoned long posts, and snappy reddit-esque one-line comments. The former are more difficult to filter for, but dealing with the second seems much easier to automate-- for example, have a filter which catches any comment below a certain length to be approved manually (potentially with exceptions for established users).

There's also a general attitude that goes along with that-- in general, not reading full posts, nitpicking things to be snarky about...
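The length filter suggested above could look something like this minimal sketch. The cutoff values and the function name are hypothetical; picking the actual length and what counts as an "established user" would be a moderation call.

```python
MIN_LENGTH = 80          # hypothetical cutoff, in characters
ESTABLISHED_KARMA = 100  # hypothetical karma level for the exception


def needs_manual_approval(comment_text, author_karma):
    """Return True if a comment should be held for manual approval.

    Very short comments are held, unless the author is established
    (here approximated by a karma threshold, which is an assumption).
    """
    if author_karma >= ESTABLISHED_KARMA:
        return False
    return len(comment_text.strip()) < MIN_LENGTH
```

Note that this only catches the short one-liner case; it does nothing about long but poorly reasoned comments.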

Do you keep metrics on moderated (or just downvoted) posts from users, in order to analyze whether a focus on "new users" or "low total karma" users is sufficient?

I welcome a bit stronger moderation, or at least encouragement of higher-minimum-quality posts and comments. I'm not sure that simple focus on newness or karma for the user (as opposed to the post/comment) is sufficient.

I don't know whether this is workable, but encouraging a bit more downvoting norms, as opposed to ignoring and moving on, might be a way to distribute this gardening work a bit, so it's not all on the moderators.

Nice to hear the high standards you continue to pursue. I agree that LessWrong should set itself much higher standards than other communities, even than other rationality-centred or -adjacent communities.

My model of this big effort to raise the sanity waterline and prevent existential catastrophes contains three concentric spheres. The outer sphere is all of humanity; ever-changing yet more passive. Its public opinion is what influences most of the decisions of world leaders and companies, but this public opinion can be swayed by other, more directed force...

Disclaimer: I myself am a newer user from last year.

I think trying to change downvoting norms and behaviours could help a lot here and save you some workload on the moderation end. Generally, poor quality posters will leave if you ignore and downvote them. Recently, there has been an uptick in these posts and of the ones I have seen many are upvoted and engaged with. To me, that says users here are too hesitant to downvote. Of course, that raises the question of how to do that and if doing so is undesirable because it will broadly repel many new users some of whom will not be "bad". Overall though I think encouraging existing users to downvote should help keep the well-kept garden.

I've always found it a bit odd that Alignment Forum submissions are automatically posted to LW.

If you apply some of these norms, then imo there are questionable implications, i.e. it seems weird to say that one should have read the sequences in order to post about mechanistic interpretability on the Alignment Forum.

What's a "new user"? It seems like this category matters for moderation but I don't see a definition of it. (Maybe you're hoping to come up with one?)

Stack Overflow has a system where users with more karma get more power. When it comes to the job of deciding whether or not to approve comments of new users, I don't see why that power should be limited to a handful of mods. Have you thought about giving that right out at a specific amount of karma?

How do we deal with low quality criticism?

Criticism that's been covered before should be addressed by citing prior discussion and flagging the post as a duplicate unless they can point out some way their phrasing is better.

Language models are potentially very capable of making the process of citing dupes much more efficient, and I'm going to talk to AI Objectives about this stuff at some point in the next week and this is one of the technologies we're planning on discussing.

(less relevant to the site, but general advice: In situations where a bad critic is...

Nice! Through much of 2022, I was pretty worried that LessWrong would eventually stop thriving for some reason or another. This post is a strong update in the direction of "I never had anything to worry about, because the mods will probably adapt ahead of time and stay well ahead of the curve on any issue".

I'm also looking forward to the results of the Sequences requirement. I've heard some good things about rationality engines contributing to humans solving alignment, but I'm not an expert on that approach.

Cheering over here! This seems like a tricky problem and I'm so happy about how you seem to be approaching it. :)

I'm especially pleased with the stuff about "people need to read the sequences, but shit the sequences are long, which particular concepts are especially crucial for participation here?", as opposed to wishing people would read the sequences and then giving up because they're long and stylistically polarizing (which is a mental state I've often found myself occupying).

re: discussing criticism - I'd love to see tools to help refer back to previous discussions of criticism and request clarification of the difference. Though of course this can be an unhelpful thought-stopper, I think more often than not it's simply context retrieval and helps the new criticism clarify its additions. ("paper does not clarify contributions"?)

A thing I'm finding difficult at the moment is that criticism just isn't really indexed in a consistent way, because, well, everyone has subtly different frames on what they're criticizing and why.

It's possible there should be, like, 3 major FAQs that just try to be a comprehensive index on frequent disagreements people have with LW consensus, and people who want to argue about it are directed there to leave comments, and maybe over time the FAQ becomes even more comprehensive. It's a lot of work, but might be worth it anyway.

(I'm imagining such an FAQ mostly linking to external posts rather than answering everything itself)

My main takeaway is that I'm going to be coauthoring posts with people I'm trying to get into AI safety, so they aren't stonewalled by moderation.

Will the karma thing affect users who've joined before a certain period of time? Asking this because I joined quite a while ago but have only 4 karma right now.

I'm strongly in favor of the sequences requirement. If I had been firmly encouraged/pressured into reading the sequences when I joined LW around March/April 2022, my life would have been much better and more successful by now. I suspect this would be the case for many other people. I've spent a lot of time thinking about ways that LW could set people up to steer themselves (and each other) towards self-improvement, like the Battle School in Ender's Game, but it seems like it's much easier to just tell people to read the Sequences.

Something that I'm worried abo...

Thanks. It's gotten to the point where I have completely hidden the "AI" tag from my list of latest posts.

Highly recommend this, wish the UI was more discoverable. For people who want this themselves: you can change how posts are weighted for you by clicking "customize feed" to the right of "front page". You'll be shown some default tags, and can also add more. If you hover over a tag you can set it to hidden, reduced (posts with tag treated as having 50% less karma), promoted (+25 karma), or a custom amount.

It occurs to me I don't know how tags stack on a given post, maybe staff can clarify?

All of the additive modifiers that apply (e.g. +25 karma, for each tag the post has) are added together and applied first, then all of the multiplicative modifiers (i.e. the "reduced" option) are multiplied together and applied, then time decay (which is multiplicative) is applied last. The function name is filterSettingsToParams.
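For anyone who wants to see that ordering spelled out, here is a minimal sketch in Python. It is not the code in filterSettingsToParams: the names `TagModifier` and `weighted_score` are hypothetical, "hidden" is assumed to drop the post entirely, and time decay is passed in as an already-computed factor because the decay formula isn't given above.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class TagModifier:
    """Hypothetical per-tag setting; not the site's actual data model."""
    additive: float = 0.0        # e.g. +25 for "promoted"
    multiplicative: float = 1.0  # e.g. 0.5 for "reduced"
    hidden: bool = False         # assumed to remove the post from the feed


PROMOTED = TagModifier(additive=25.0)
REDUCED = TagModifier(multiplicative=0.5)
HIDDEN = TagModifier(hidden=True)


def weighted_score(
    base_karma: float,
    modifiers: Sequence[TagModifier],
    time_decay: float = 1.0,
) -> Optional[float]:
    """Apply modifiers in the order described: additive, multiplicative, decay.

    `time_decay` is a precomputed multiplicative factor (an assumption here).
    Returns None when any tag on the post is hidden.
    """
    if any(m.hidden for m in modifiers):
        return None

    # 1. All additive modifiers are summed and applied first.
    score = base_karma + sum(m.additive for m in modifiers)

    # 2. All multiplicative modifiers are multiplied together and applied.
    for m in modifiers:
        score *= m.multiplicative

    # 3. Time decay is applied last.
    return score * time_decay


# A 10-karma post with two promoted tags and one reduced tag:
# (10 + 25 + 25) * 0.5 = 30.0
print(weighted_score(10, [PROMOTED, PROMOTED, REDUCED]))
```

One consequence of this ordering is that a "reduced" tag also halves whatever boost the promoted tags added, rather than only halving the post's original karma.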

The LessWrong Review runs every year to select the posts that have most stood the test of time. This post is not yet eligible for review, but will be at the end of 2024. The top fifty or so posts are featured prominently on the site throughout the year.

Hopefully, the review is better than karma at judging enduring value. If we have accurate prediction markets on the review results, maybe we can have better incentives on LessWrong today. Will this post make the top fifty?

I wonder if there would be a use for an online quiz, of the sort that asks 10 questions picked randomly from several hundred possible questions, and which records time taken to complete the quiz and the number of times that person has started an attempt at it (with uniqueness of person approximated by IP address, email address or, ideally, LessWrong username)?

Not as prescriptive as tracking which sequences someone has read, but perhaps a useful guide (as one factor among many) about the time a user has invested in getting up to date on what's already been written here about rationality?
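To make the idea concrete, here is a rough sketch of the bookkeeping such a quiz would need. Everything in it is hypothetical (the `QuizTracker` class, its method names, keying attempts by username); it only shows sampling 10 questions from a larger pool and recording attempts started and time taken.

```python
import random
import time
from collections import defaultdict
from typing import Optional


class QuizTracker:
    """Hypothetical tracker: random question sample, attempt count, elapsed time."""

    def __init__(self, question_pool, questions_per_quiz=10,
                 rng: Optional[random.Random] = None):
        self.pool = list(question_pool)
        self.questions_per_quiz = questions_per_quiz
        self.rng = rng if rng is not None else random.Random()
        self.attempts = defaultdict(int)  # username -> attempts started
        self._started_at = {}             # username -> start timestamp

    def start(self, username):
        """Count a new attempt and return a random sample without repeats."""
        self.attempts[username] += 1
        self._started_at[username] = time.monotonic()
        return self.rng.sample(self.pool, self.questions_per_quiz)

    def finish(self, username):
        """Return attempts started so far and seconds taken for this attempt."""
        elapsed = time.monotonic() - self._started_at.pop(username)
        return {
            "username": username,
            "attempts": self.attempts[username],
            "seconds": elapsed,
        }


tracker = QuizTracker([f"Question {i}" for i in range(300)])
questions = tracker.start("example_user")
result = tracker.finish("example_user")
```

Counting attempts at start rather than at finish matches the suggestion above, since abandoned attempts are part of the signal.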

Lots of new users have been joining LessWrong recently, who seem more filtered for "interest in discussing AI" than for being bought into any particular standards for rationalist discourse. I think there's been a shift in this direction over the past few years, but it's gotten much more extreme in the past few months.

So the LessWrong team is thinking through "what standards make sense for 'how people are expected to contribute on LessWrong'?" We'll likely be tightening up moderation standards, and laying out a clearer set of principles so those tightened standards make sense and feel fair.

In the coming weeks we'll be thinking about those principles as we look over existing users, comments and posts and ask "are these contributions making LessWrong better?".

Hopefully within a week or two, we'll have a post that outlines our current thinking in more detail.

Generally, expect heavier moderation, especially for newer users.

Two particular changes that should be going live within the next day or so:

Broader Context

LessWrong has always had a goal of being a well-kept garden. We have higher and more opinionated standards than most of the rest of the internet. In many cases we treat some issues as more "settled" than the rest of the internet, so that instead of endlessly rehashing the same questions we can move on to solving more difficult and interesting questions.

What this translates to in terms of moderation policy is a bit murky. We've been stepping up moderation over the past couple months and frequently run into issues like "it seems like this comment is missing some kind of 'LessWrong basics', but 'the basics' aren't well indexed and easy to reference." It's also not quite clear how to handle that from a moderation perspective.

I'm hoping to get "the basics" better indexed, but meanwhile it's just generally the case that if you participate on LessWrong, you are expected to have absorbed the set of principles in The Sequences (AKA Rationality A-Z).

In some cases you can get away without doing that while participating in local object-level conversations, and pick up norms along the way. But if you're getting downvoted and you haven't read them, it's likely you're missing a lot of concepts or norms that are considered basic background reading on LessWrong. I recommend starting with the Sequences Highlights, and I'd also note that you don't need to read the Sequences in order; you can pick some random posts that seem fun and jump around based on your interest.

(Note: it's of course pretty important to be able to question all your basic assumptions. But I think doing that in a productive way requires actually understanding why the current set of background assumptions are the way they are, and engaging with the object-level reasoning.)

There's also a straightforward question of quality. LessWrong deals with complicated questions. It's a place for making serious progress on those questions. One model I have of LessWrong is something like a university – there's a role for undergrads who are learning lots of stuff but aren't yet expected to be contributing to the cutting edge. There are grad students and professors who conduct novel research. But all of this is predicated on there being some barrier-to-entry. Not everyone gets accepted to any given university. You need some combination of intelligence, conscientiousness, etc to get accepted in the first place.

See this post by habryka for some more models of moderation.

Ideas we're considering, and questions we're trying to answer:

Again, hopefully in the near future we'll have a more thorough writeup about our answers to these. Meanwhile it seemed good to alert people this would be happening.